Author: Denis Avetisyan

A new framework leverages the power of ensemble forecasting and uncertainty quantification to identify subtle precursors to critical failures, offering a path towards proactive system maintenance.

This paper introduces FATE, an unsupervised method for detecting precursor-of-anomaly signals in time-series data, evaluated using a novel metric, PTaPR.

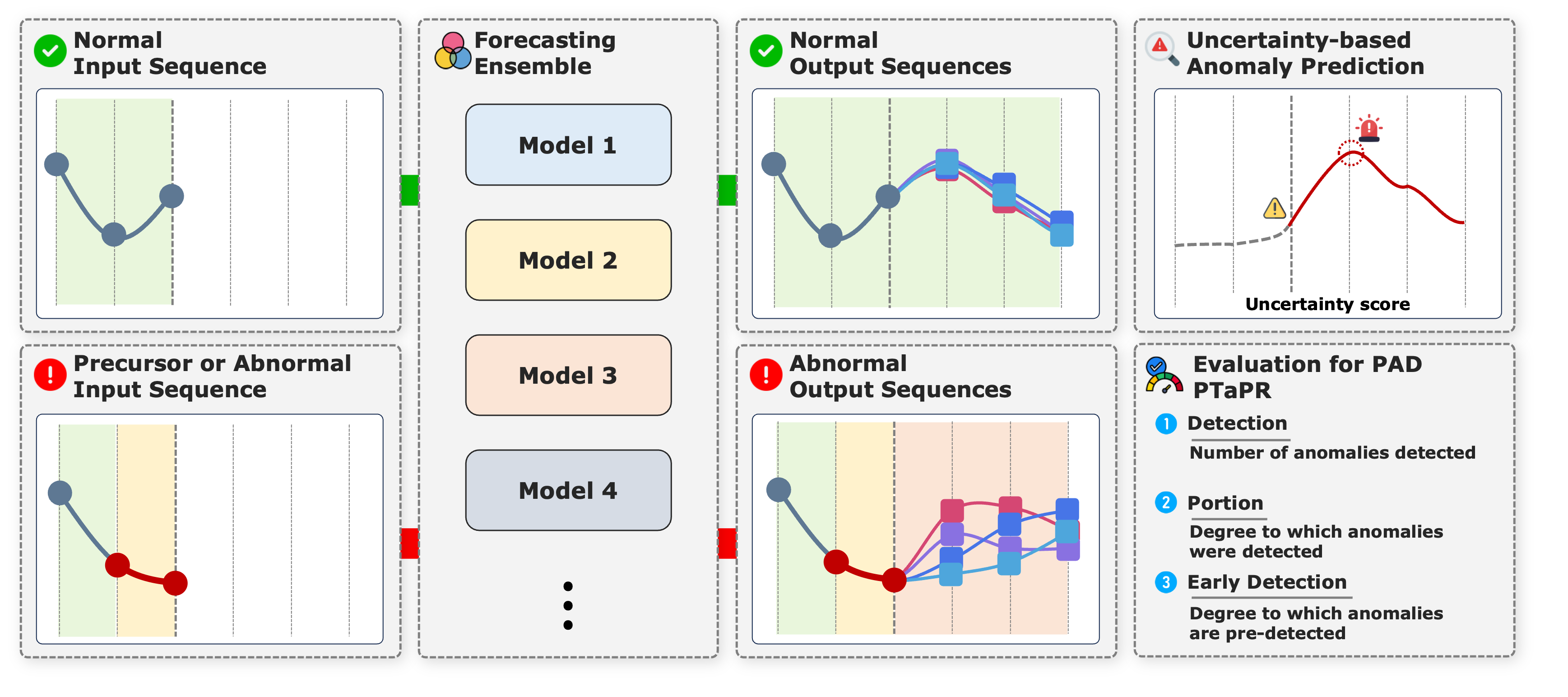

Existing time-series anomaly detection methods typically react to events after they occur, lacking the ability to provide proactive warnings. This paper introduces a novel approach, ‘Forecasting Anomaly Precursors via Uncertainty-Aware Time-Series Ensembles’, which leverages ensemble forecasting and uncertainty quantification to identify potential anomalies before their manifestation. By quantifying predictive uncertainty across a diverse set of models, the framework, FATE, signals early warnings without requiring labeled anomaly data or relying on reconstruction errors. Could this proactive approach unlock more robust and reliable early warning systems for critical applications across industries like finance and cybersecurity?

The Futility of Precision: Why Early Warnings Matter More

Conventional anomaly detection systems are usually tuned to identify anomalies accurately, often at the expense of identifying them promptly. This matters because a detector’s value lies not only in flagging the right events but also in leaving enough time to mitigate the harm they cause. A highly accurate system that raises an alert only after substantial damage has occurred offers limited practical benefit. Many real-world applications, such as predictive maintenance in manufacturing or intrusion detection in cybersecurity, therefore call for a shift toward timeliness: raising alerts early enough to allow effective intervention, even at the cost of a somewhat higher false-positive rate. The trade-off between precision and lead time is central, and a system’s practical utility depends not only on what it detects but also on when it detects it.

Reconstruction-based anomaly detection methods, such as Long Short-Term Memory Autoencoders (LSTM-AE) and USAD (UnSupervised Anomaly Detection on multivariate time series), tend to identify anomalies precisely once they have fully manifested rather than providing early warnings. These techniques learn to reproduce the normal state of a system and flag deviations whose reconstruction error exceeds a threshold. Subtle initial anomalies, however, often produce only small reconstruction errors that fall below that threshold, so a significant delay can separate the onset of a problem from its detection. The limitation is structural: because the models are trained to reproduce typical behavior accurately, they signal an anomaly only after a substantial deviation, a critical drawback in time-sensitive applications where preemptive action is needed to limit damage or disruption.
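To make the thresholding mechanism concrete, here is a minimal sketch that uses PCA as a linear stand-in for an autoencoder. It is not LSTM-AE or USAD, whose encoders and decoders are trained neural networks; the `LinearAutoencoder` class, the sine-wave data, the window width of 20, and the max-training-error threshold are all illustrative assumptions. It shows how a small deviation at the start of a problem can stay below the threshold while a fully developed one crosses it.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


class LinearAutoencoder:
    """PCA used as a linear autoencoder (a stand-in, not LSTM-AE or USAD)."""

    def __init__(self, n_components: int):
        self.k = n_components

    def fit(self, windows: np.ndarray) -> "LinearAutoencoder":
        self.mean = windows.mean(axis=0)
        # Rows of vt are the principal directions of the centred windows.
        _, _, vt = np.linalg.svd(windows - self.mean, full_matrices=False)
        self.components = vt[: self.k]
        return self

    def reconstruction_error(self, windows: np.ndarray) -> np.ndarray:
        codes = (windows - self.mean) @ self.components.T   # encode
        recon = codes @ self.components + self.mean         # decode
        return np.mean((windows - recon) ** 2, axis=1)      # per-window MSE


# Train on "normal" behaviour: a noisy sine wave cut into windows of 20 steps.
rng = np.random.default_rng(0)
t = np.arange(2000)
normal = np.sin(2 * np.pi * t / 50) + rng.normal(0.0, 0.1, t.size)
train = sliding_window_view(normal, 20)
ae = LinearAutoencoder(n_components=2).fit(train)

# Illustrative threshold: the largest error observed on normal data.
threshold = ae.reconstruction_error(train).max()

clean = np.sin(2 * np.pi * np.arange(20) / 50)
subtle = clean.copy()
subtle[-1] += 0.05   # early, small deviation
abrupt = clean.copy()
abrupt[-1] += 3.0    # fully developed anomaly

flags = ae.reconstruction_error(np.stack([subtle, abrupt])) > threshold
print(flags)  # the subtle deviation stays below the threshold
```

The subtle window is flagged `False` and the abrupt one `True`: the detector only reacts once the deviation is large relative to the noise it was calibrated on.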

The consequences of delayed anomaly detection reach into critical infrastructure and environmental protection. In industrial control systems, a late alert about a malfunctioning sensor or failing equipment can escalate into cascading errors, leading to costly downtime, spoiled product, or even safety hazards. Similarly, in environmental monitoring, whether of pollution levels, deforestation, or wildlife poaching, late identification of unusual patterns can hinder intervention and allow irreversible damage to ecosystems. Detecting anomalies before they become full-blown crises is therefore essential, which calls for prioritizing the speed and timeliness of alerts alongside accuracy, a balance that conventional approaches often overlook.

From Reaction to Anticipation: A Framework for Precursor Detection

The FATE framework departs from traditional anomaly detection by shifting the focus from reactive identification of established anomalies to proactive detection of precursor events. It analyzes time-series data for subtle deviations from expected behavior, often too small to count as anomalies on their own, that point to a potential future anomaly. Rather than raising alerts only when an observation crosses a predefined threshold, FATE aims to identify early warning signals: indicators that are not anomalies themselves but suggest an increased probability that anomalous behavior will develop. This requires models that can discern nuanced patterns and forecast future states from incomplete or noisy data, so that intervention is possible before significant disruption occurs.

FATE’s implementation of Ensemble Forecasting utilizes multiple forecasting models – each trained on the same input data but potentially employing differing algorithms or parameterizations – to generate a range of predictions. These individual forecasts are then aggregated, typically through methods such as averaging or weighted averaging, to produce a consolidated prediction. This approach enhances robustness by mitigating the impact of errors inherent in any single model; if one model produces an inaccurate forecast, the others can compensate. Accuracy is similarly improved, as the ensemble can capture a broader range of potential outcomes and reduce overall prediction variance, leading to more reliable precursor detection compared to reliance on a single forecasting model.
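A minimal sketch of the aggregation step follows. It assumes three deliberately simple, hypothetical members (last value, moving average, linear trend); the text does not say which forecasters FATE combines, and in practice they would be learned models. `ensemble_forecast` and its optional `weights` argument are illustrative names.

```python
import numpy as np


# Hypothetical ensemble members: each maps a history to a one-step-ahead forecast.
def last_value(history: np.ndarray) -> float:
    return float(history[-1])


def moving_average(history: np.ndarray, k: int = 5) -> float:
    return float(history[-k:].mean())


def linear_trend(history: np.ndarray, k: int = 10) -> float:
    # Fit a least-squares line to the last k points and extrapolate one step.
    slope, intercept = np.polyfit(np.arange(k), history[-k:], deg=1)
    return float(slope * k + intercept)


MEMBERS = (last_value, moving_average, linear_trend)


def ensemble_forecast(history, weights=None):
    """Return the combined forecast and the individual member forecasts."""
    preds = np.array([member(history) for member in MEMBERS])
    # np.average gives a plain mean when weights is None, else a weighted mean.
    return float(np.average(preds, weights=weights)), preds


history = np.arange(20, dtype=float)   # a steady upward ramp: 0, 1, ..., 19
combined, preds = ensemble_forecast(history)
weighted, _ = ensemble_forecast(history, weights=[0.2, 0.2, 0.6])
print(preds, combined, weighted)
```

On this ramp the members forecast 19, 17, and 20; the plain mean is about 18.67 and the weighted mean, which leans on the trend model, is 19.2. The disagreement between members is itself informative, since it indicates how much the ensemble trusts any single forecast.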

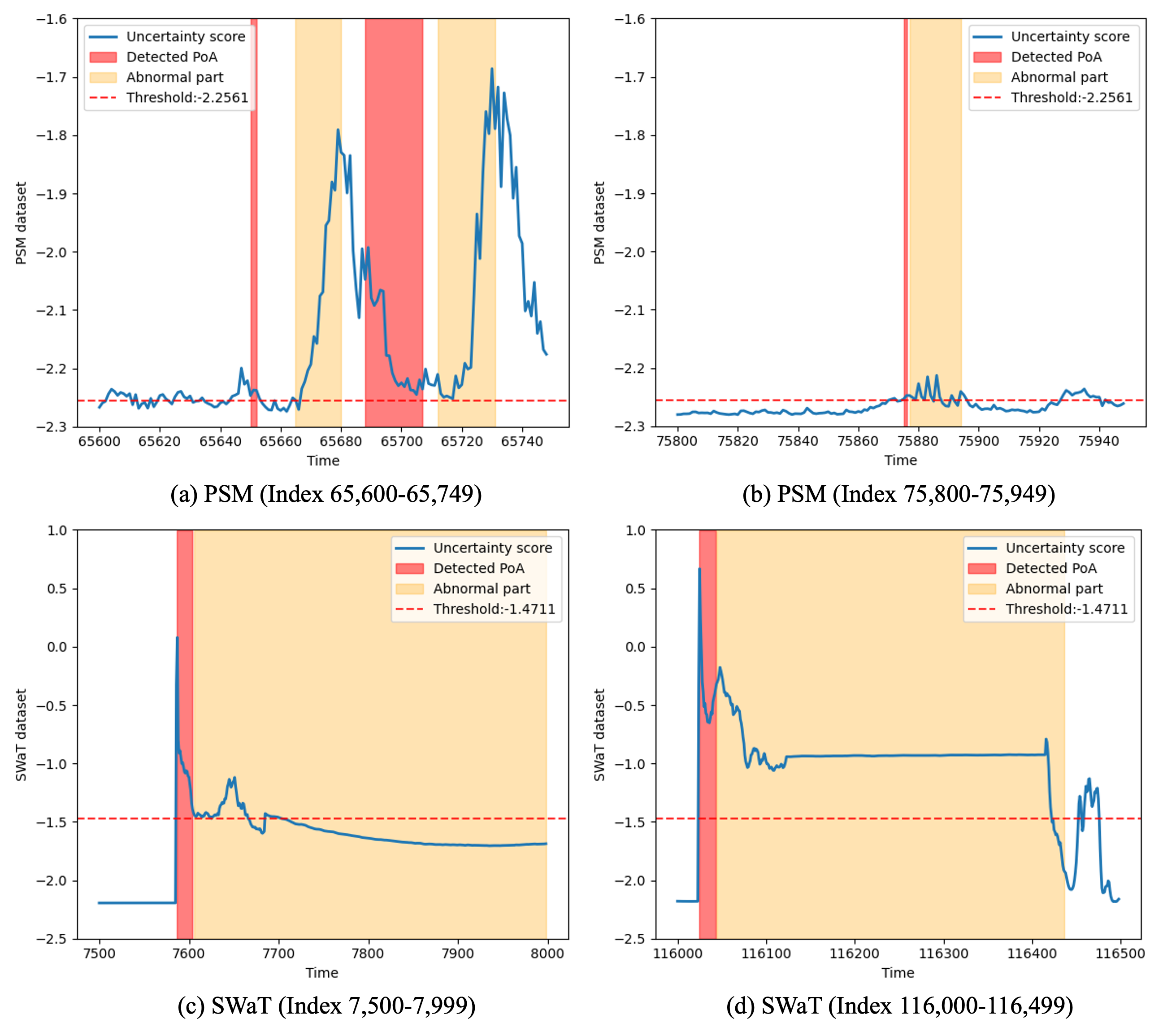

FATE incorporates Uncertainty Quantification (UQ) to evaluate the confidence level associated with its anomaly predictions. Rather than solely relying on point forecasts, FATE models generate probabilistic forecasts, providing a distribution of possible outcomes. Increasing uncertainty, as measured by metrics like prediction intervals or variance, is then treated as a potential indicator of anomalous behavior. This approach allows FATE to flag deviations from expected patterns even before a definitive anomaly is observed, effectively functioning as an early warning system based on the reliability of the predictive models themselves. The system doesn’t simply identify what might happen, but also how confident it is in that prediction, with reduced confidence signaling a potential precursor event.
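One simple way to turn forecast uncertainty into an early-warning signal is sketched below. It assumes that uncertainty is measured as the spread (standard deviation) of the ensemble members’ forecasts at each step and that the alarm threshold is a high quantile of that spread on known-normal data. This is an illustrative scoring rule, not necessarily the one FATE uses; the synthetic forecasts, the 0.99 quantile, and the function names are assumptions.

```python
import numpy as np


def uncertainty_scores(member_forecasts: np.ndarray) -> np.ndarray:
    """Per-step disagreement of an ensemble; input shape (n_members, n_steps)."""
    return member_forecasts.std(axis=0)


def calibrate_threshold(normal_scores: np.ndarray, quantile: float = 0.99) -> float:
    """Alarm level taken as a high quantile of scores on known-normal data."""
    return float(np.quantile(normal_scores, quantile))


# Synthetic forecasts from 5 members over 120 steps (illustrative only).
rng = np.random.default_rng(42)
n_members, n_steps = 5, 120
truth = np.sin(np.linspace(0, 6 * np.pi, n_steps))
forecasts = truth + rng.normal(0.0, 0.05, (n_members, n_steps))
# From step 100 the members increasingly disagree: a stand-in for a precursor.
drift = np.linspace(0.0, 1.0, n_steps - 100)
forecasts[:, 100:] += np.outer(np.linspace(-1.0, 1.0, n_members), drift)

scores = uncertainty_scores(forecasts)
threshold = calibrate_threshold(scores[:80])   # steps 0-79 assumed normal
alarms = scores > threshold
print(np.flatnonzero(alarms))                  # indices of steps that raise an alarm
```

Because the threshold is a 0.99 quantile, roughly one percent of normal steps will still exceed it; choosing that quantile is exactly the false-positive versus lead-time trade-off discussed earlier.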

Beyond Accuracy: Measuring Precursor Detection with PTaPR

The PTaPR metric was developed to evaluate precursor detection more faithfully than traditional metrics. It builds on TaPR (Time-Series Aware Precision and Recall), which scores detections over anomaly ranges rather than individual time points, and extends it to precursors by quantifying both the timeliness and the precision of early alerts. Where point-wise accuracy metrics only ask whether an anomaly was eventually flagged, PTaPR assigns higher scores to systems that raise warnings earlier, with greater confidence, and with fewer false positives. This distinguishes systems that merely identify anomalies from those that deliver actionable warnings with enough lead time to respond before a full-scale attack or system failure occurs, giving a more representative measure of practical performance in applications such as intrusion and fault detection.
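The range-based idea behind TaPR can be illustrated with a simplified recall score. The sketch below rests on several assumptions: it omits TaPR’s ambiguous section and its precision side, combines an α-weighted detection score (was enough of each anomaly range flagged, per threshold θ?) with a portion score (how much of it was flagged?), and adds a hypothetical `precursor_window` that extends each anomaly range backwards so that alarms raised shortly before onset also count. That window only shows how a metric can reward early warnings; it is not claimed to match PTaPR’s actual definition.

```python
import numpy as np


def anomaly_ranges(labels):
    """Convert a 0/1 label array into half-open [start, end) ranges."""
    padded = np.concatenate([[0], np.asarray(labels, dtype=int), [0]])
    edges = np.flatnonzero(np.diff(padded))
    return list(zip(edges[0::2], edges[1::2]))


def range_recall(true_labels, pred_labels, alpha=0.5, theta=0.5,
                 precursor_window=0):
    """Simplified TaPR-style recall (no ambiguous section, recall side only)."""
    pred = np.asarray(pred_labels, dtype=bool)
    detection, portion = [], []
    for start, end in anomaly_ranges(true_labels):
        # Hypothetical precursor window: also credit alarms before the onset.
        start = max(0, start - precursor_window)
        covered = float(pred[start:end].mean())   # fraction of range flagged
        detection.append(1.0 if covered >= theta else 0.0)
        portion.append(covered)
    if not detection:
        return 0.0
    return alpha * float(np.mean(detection)) + (1 - alpha) * float(np.mean(portion))


truth = np.zeros(30, dtype=int)
truth[10:20] = 1          # anomaly occupies steps 10-19
pred = np.zeros(30, dtype=int)
pred[6:12] = 1            # alarm raised 4 steps before onset
print(range_recall(truth, pred, theta=0.3))                       # early alarm barely counts
print(range_recall(truth, pred, theta=0.3, precursor_window=5))   # early alarm is credited
```

Without the window the early alarm covers only 2 of the 10 anomalous steps and scores 0.1; with a 5-step window it covers 6 of 15 steps, passes θ = 0.3, and scores 0.7.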

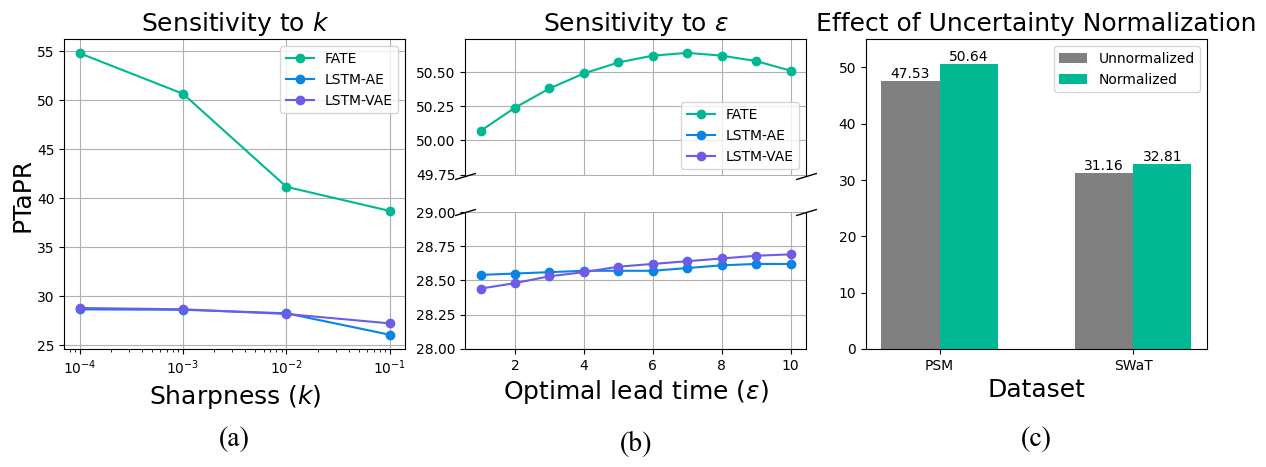

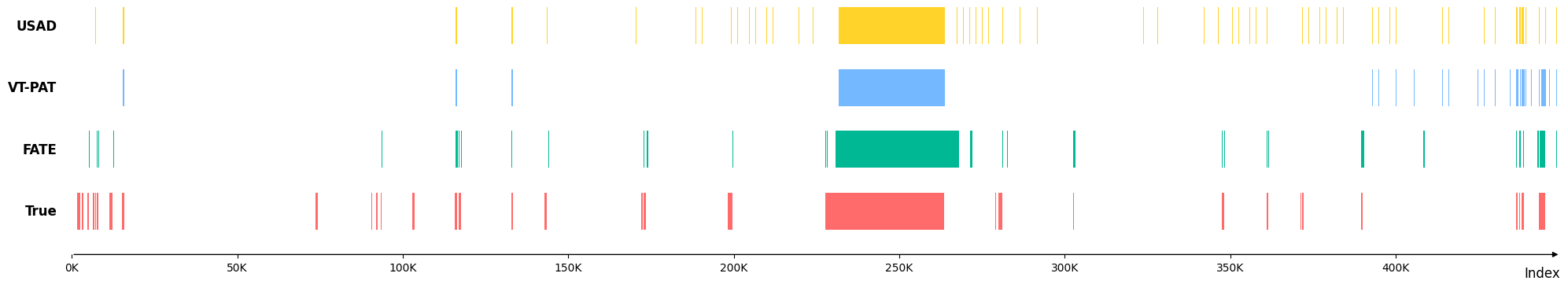

FATE’s precursor detection was evaluated on the benchmark datasets SWaT, PSM, MSL, SMAP, and SMD. FATE outperforms the alternative approaches at identifying precursors, reaching a PTaPR area under the curve (AUC) of 53.54% on PSM. The PTaPR AUC summarizes how accurately and promptly a method detects precursor events and provides the basis for comparison with existing methods.

Measured by PTaPR AUC, FATE improves on the baseline models across all five datasets: +22 percentage points on PSM, +5.35 on SWaT, +32.15 on MSL, +34.29 on SMAP, and +5.70 on SMD. Each gain is the difference in PTaPR AUC between FATE and the baselines, and together they indicate a substantial improvement in the timely and precise identification of anomaly precursors compared to existing methods.

The largest improvement among the datasets tested (SWaT, PSM, MSL, SMAP, and SMD) occurs on SMAP, where FATE gains 34.29 percentage points of PTaPR AUC over the baseline approaches. Because PTaPR AUC reflects both the timeliness and the precision of precursor detection, this gain indicates that FATE identifies early-stage anomalies in SMAP considerably better than the alternative methods.

Under the Hood: Bayesian Foundations and Transformer Integration

The foundation of FATE’s predictive capability lies in Bayesian Neural Networks, which go beyond point predictions. Whereas a conventional neural network produces a single deterministic output, a Bayesian Neural Network treats its weights as probability distributions rather than fixed values and therefore yields a distribution over possible outcomes, quantifying the uncertainty of each prediction. By propagating these distributions through the network (in practice usually approximated by sampling weights), FATE predicts not only what will happen but also how confident it is in that prediction. This uncertainty quantification is central to reliable anomaly detection: a low-confidence prediction can flag potentially problematic data, while a high-confidence prediction offers reassurance, enabling a more nuanced response to complex time-series data.
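A minimal sketch of the "weights as distributions" idea, assuming a single linear layer with an independent Gaussian distribution over each weight and Monte Carlo sampling to approximate the predictive distribution. The means and standard deviations below are made-up stand-ins for a trained posterior; how FATE actually trains and samples its Bayesian networks (for example, variational inference or Monte Carlo dropout) is not covered by this sketch.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical posterior for y = x @ W + b: each weight has a mean and a std.
W_mu = np.array([[0.8], [-0.3]])
W_sigma = np.array([[0.05], [0.20]])   # the second weight is far less certain
b_mu, b_sigma = 0.1, 0.02


def predictive(x: np.ndarray, n_samples: int = 2000):
    """Monte Carlo predictive mean and std for inputs x of shape (n, 2)."""
    outputs = []
    for _ in range(n_samples):
        W = rng.normal(W_mu, W_sigma)    # draw one network from the posterior
        b = rng.normal(b_mu, b_sigma)
        outputs.append(x @ W + b)
    outputs = np.stack(outputs)          # shape (n_samples, n, 1)
    return outputs.mean(axis=0).ravel(), outputs.std(axis=0).ravel()


x = np.array([[1.0, 0.0],    # relies only on the well-determined weight
              [0.0, 5.0]])   # relies heavily on the uncertain weight
mean, std = predictive(x)
print(mean, std)
```

Analytically, the first input has predictive mean 0.9 and standard deviation sqrt(0.05² + 0.02²) ≈ 0.054, while the second has mean -1.4 and standard deviation about 1.0; the sampled estimates land close to these values. The same network is confident about one input and unsure about the other, which is the signal a detector can act on.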

FATE uses the Transformer architecture, originally developed for natural language processing, to model intricate temporal dependencies in time-series data. Unlike recurrent neural networks, which pass information along one step at a time, Transformers use a self-attention mechanism that weighs every time step in the input window against every other, regardless of the distance between them. This is crucial for identifying subtle, long-range precursor patterns that methods focused on immediate, local changes would likely miss. Because attention processes all time steps of a window in parallel rather than sequentially, the architecture is also efficient to train and run, which supports timely detection and lets FATE respond proactively to potential issues.
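The core of that mechanism, scaled dot-product self-attention, fits in a few lines. This single-head sketch omits the positional encodings, multiple heads, and feed-forward layers of a full Transformer, and its projection matrices are random placeholders for learned weights; it is not FATE’s architecture. It shows that every time step receives a nonzero weight on every other step, however far apart.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over x of shape (T, d)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])   # (T, T): each step vs. each step
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
T, d = 50, 4                                   # window length, feature count
x = rng.normal(size=(T, d))                    # one multivariate window
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))   # placeholders
out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape, weights[-1, 0])   # last step attends directly to the first
```

The (T, T) weight matrix is computed in one matrix product, which is where the parallelism comes from; the same matrix also means cost grows with the square of the window length.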

Combining Bayesian Neural Networks with the Transformer architecture gives FATE two complementary strengths: the Transformer captures long-range temporal structure, and the Bayesian treatment quantifies how confident each forecast is. The system therefore does not merely flag deviations from expected behavior; it also attaches a confidence to each detection, which helps suppress false alarms and prioritize genuine threats. This enables proactive rather than reactive responses: by anticipating potential issues earlier, the system allows timely adjustments before damage escalates. The capability is particularly valuable in critical infrastructure monitoring and complex industrial processes, where even minor anomalies can have significant consequences and preventive measures taken before a failure can avoid costly downtime.

The pursuit of precursor-of-anomaly detection, as detailed in this framework, feels predictably optimistic. It proposes FATE, an ensemble forecasting system meant to anticipate failures, and a new metric, PTaPR, to judge its success. One imagines production systems will quickly discover edge cases the model missed, turning elegant uncertainty quantification into a new category of alert fatigue. To borrow from the Rolling Stones, you can’t always get what you want, but sometimes you get what you need. This feels apt: the need for early warning is clear, but the promise of perfect prediction is a familiar illusion. Everything new is just the old thing with worse docs.

What’s Next?

The pursuit of ‘precursors’ feels suspiciously like seeking order in chaos. This framework, FATE, offers a statistically sound method for identifying potential anomalies before they manifest – a neat trick, if it survives contact with actual data. The PTaPR metric, while logically constructed, will inevitably become another tuning parameter, optimized to flatter whichever ensemble happens to be in favor this quarter. Anything claiming ‘self-healing’ properties hasn’t broken spectacularly enough yet.

The real challenge isn’t improving forecast accuracy; it’s acknowledging the inherent limitations of predictability. A system that flags every potential issue will drown users in false positives, rendering it useless. Conversely, a truly selective system will miss things. The sweet spot, if it exists, will be determined not by elegant algorithms but by the tolerance for risk of those ultimately responsible when the inevitable happens.

Future work will undoubtedly focus on more sophisticated ensemble methods and perhaps the incorporation of domain knowledge. However, a more fruitful avenue might be accepting that complete anomaly prevention is a fantasy. Instead, the focus should shift towards graceful degradation and minimizing the blast radius when – not if – things go wrong. Documentation, as always, remains a collective self-delusion; if a bug is reproducible, the system is, by definition, stable.

Original article: https://arxiv.org/pdf/2602.17028.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-20 14:06