Author: Denis Avetisyan

A new framework combines predictive modeling and reasoning to create web agents capable of anticipating errors and improving task completion in complex online environments.

This paper introduces WAC, a system that leverages world models and action correction to enhance the robustness and success rate of web agents powered by large language models and reinforcement learning.

Despite recent advances in LLM-driven web automation, current agents often struggle with complex tasks because of limited environmental reasoning and a susceptibility to risky actions. This paper introduces WAC (‘World-Model-Augmented Web Agents with Action Correction’), a framework that addresses these limitations through collaborative reasoning and pre-execution action refinement. WAC integrates a “world model” that simulates the consequences of actions with feedback-driven correction. This improves robustness and success rates in challenging web environments, with absolute gains of up to 1.8 percentage points on standard benchmarks. Could this approach unlock a new generation of truly reliable and adaptable web agents capable of navigating the complexities of the modern internet?

The Fragility of Modern Web Automation

Traditional web automation relies on pre-programmed scripts and pattern matching that map actions to specific page elements. Such scripts are brittle. When elements shift position, content updates, or layouts evolve, the scripts fail unexpectedly and need constant manual maintenance. They also cannot cope with unpredictable situations such as CAPTCHAs, dynamically loaded content, or restructured sites, which limits their scalability and reliability.

The harder problem is not perceiving what is on a page but deciding what to do with it. A robust agent must interpret the purpose of page elements (text, images, interactive controls) and the relationships between them. It must also understand natural-language instructions and break a goal into a sequence of actions. It should anticipate obstacles and revise its plan based on real-time observations. In short, web automation has to move from reactive, scripted behavior toward proactive agents that reason about the page and the user's intent. Such agents could navigate the web's unpredictability much as a human user does.

ReAct: Introducing Reasoning into the Automation Loop

Traditional reactive agents operate solely on immediate inputs, lacking the capacity for planning or adaptation. The ReAct framework overcomes this limitation by explicitly interleaving reasoning traces with action execution. This process involves the agent first generating a thought – a textual explanation of its current state, planned action, and rationale – followed by an action it will take in the environment. The observation resulting from that action is then incorporated into subsequent reasoning, creating a cycle of thought-action-observation. This iterative process allows ReAct agents to dynamically adjust their behavior, explore different strategies, and improve performance beyond the constraints of a fixed, stimulus-response model.
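The thought-action-observation cycle can be sketched in a few lines of Python. This is a minimal illustration of the generic ReAct pattern, not code from the WAC paper. `react_loop`, `Step`, the two-page toy site, and the scripted policy are all hypothetical. The scripted policy stands in for the LLM that would normally generate each thought and action.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    thought: str
    action: str
    observation: str


def react_loop(
    policy: Callable[[str, list[Step]], tuple[str, str]],
    env_step: Callable[[str], str],
    goal: str,
    max_steps: int = 10,
) -> list[Step]:
    """Interleave thought -> action -> observation until the policy says 'stop'."""
    trace: list[Step] = []
    for _ in range(max_steps):
        # Reason: the policy sees the goal plus every earlier thought/action/observation.
        thought, action = policy(goal, trace)
        if action == "stop":
            trace.append(Step(thought, action, ""))
            break
        # Act, then observe; the observation is fed back into the next thought.
        observation = env_step(action)
        trace.append(Step(thought, action, observation))
    return trace


# Hypothetical toy environment: a two-page "site" whose state is the current page.
PAGES = {"home": "links: [search]", "search": "results: [item 42]"}
state = {"page": "home"}


def env_step(action: str) -> str:
    target = action.removeprefix("click ")
    if target in PAGES:
        state["page"] = target
    return PAGES[state["page"]]


def scripted_policy(goal: str, trace: list[Step]) -> tuple[str, str]:
    # Stand-in for an LLM: decides from the latest observation.
    if trace and "item 42" in trace[-1].observation:
        return ("The target item is on this page.", "stop")
    return ("The search page should list the item.", "click search")


trace = react_loop(scripted_policy, env_step, goal="find item 42")
for step in trace:
    print(f"{step.thought} | {step.action} | {step.observation}")
```

The policy receives the whole trace, so its next thought can depend on any earlier observation. That is what separates this loop from a fixed stimulus-response script.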

Each observation feeds the next round of reasoning, so a ReAct agent can detect errors, work around obstacles, and revise its strategy without being reprogrammed for each scenario. A fixed action sequence cannot do this. Externalizing the reasoning as explicit thought steps brings a second benefit. The agent can justify each action and then check it against what it observes, and the resulting chain of thoughts makes failures easier to analyze. Together these properties make ReAct agents more robust than systems without an explicit reasoning component, and better at generalizing to unseen scenarios. They achieve this without extensive retraining or manual intervention.

ReAct's results make the case for integrating reasoning into web automation. Conventional tools execute predefined action sequences, and they fail when they meet unfamiliar site structures or dynamic content. By interleaving planning, observation analysis, and reflection with action execution, an agent can adapt instead. Empirical results show ReAct agents outperforming standard automation techniques on complex web tasks that require multi-step reasoning and iterative refinement. This indicates that explicit reasoning is essential for robust, generalizable web automation.

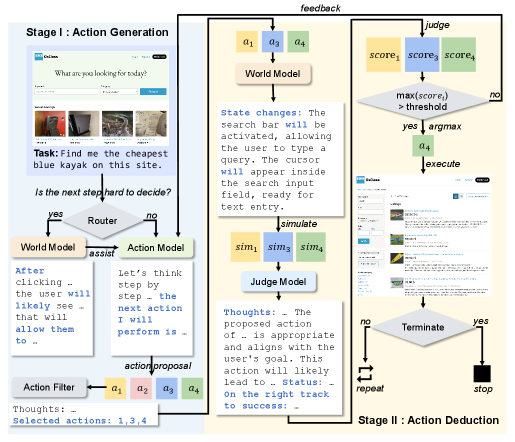

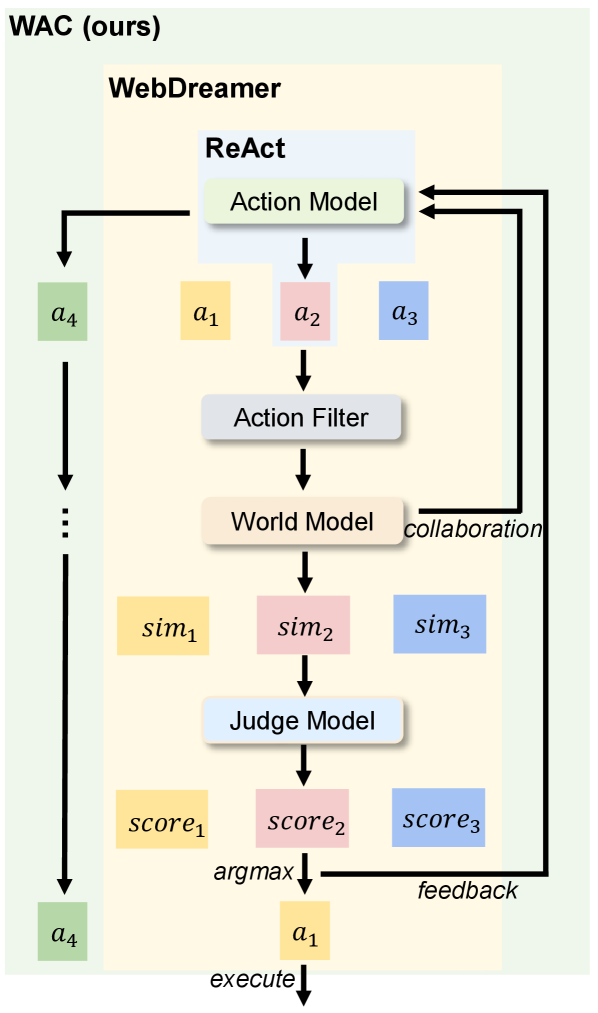

WAC: Enhancing Automation with Collaboration and Foresight

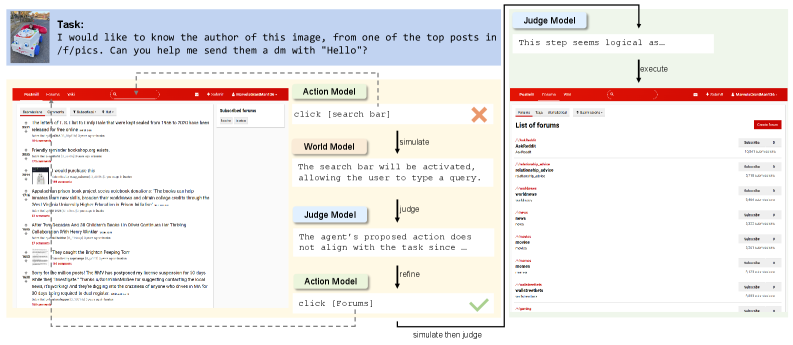

WAC builds on the ReAct framework with two additions: collaborative action generation and pre-execution risk awareness. A standard ReAct agent relies on a single model. WAC, by contrast, can dynamically bring in multiple specialized models while planning an action, and this ‘Collaboration-on-Demand’ yields better-informed action selection. WAC also adds a risk-assessment stage before execution. The system analyzes the likely outcomes of a candidate action to spot possible failures, then either mitigates them or chooses an alternative action. ReAct discovers failures only after an action has been taken and its result observed.

WAC uses a ‘World Model’ as a predictive component that simulates the outcome of an action before it is executed. The model represents the environment and its possible states, so the agent can forecast the consequences of a proposed action. This lets it flag problems before they occur on the live website, such as risky or irreversible actions and likely task failures. The World Model is not static: it is updated with new observations and simulation results, which improves later predictions and risk assessments. This pre-execution analysis lets the agent refine its action plans iteratively, making it more reliable and robust.
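A toy Python version makes pre-execution risk assessment concrete. In the paper, the world model is a model that simulates consequences. Here a lookup table (`toy_world_model`) stands in for it. The `Prediction` fields, the risk rules, and the confidence threshold in `assess_risk` are illustrative assumptions, not WAC's actual criteria.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    next_page: str      # page the agent expects to land on
    irreversible: bool  # e.g. submitting a payment or deleting data
    confidence: float   # how sure the world model is about this outcome (0..1)


def toy_world_model(page: str, action: str) -> Prediction:
    """Hypothetical stand-in for a world model: predicts the outcome without executing."""
    table = {
        ("cart", "click checkout"): Prediction("payment", irreversible=False, confidence=0.9),
        ("cart", "click ad_banner"): Prediction("external_site", irreversible=False, confidence=0.6),
        ("payment", "click pay_now"): Prediction("receipt", irreversible=True, confidence=0.8),
    }
    # Unknown (page, action) pairs: assume nothing changes, with low confidence.
    return table.get((page, action), Prediction(page, irreversible=False, confidence=0.3))


def assess_risk(pred: Prediction, allowed_pages: set[str], min_conf: float = 0.5) -> list[str]:
    """Return risk flags for a simulated outcome; an empty list means 'safe to execute'."""
    risks = []
    if pred.irreversible:
        risks.append("irreversible action")
    if pred.next_page not in allowed_pages:
        risks.append(f"leaves task scope ({pred.next_page})")
    if pred.confidence < min_conf:
        risks.append("world model unsure of outcome")
    return risks


allowed = {"cart", "payment", "receipt"}
checks = [("cart", "click checkout"), ("cart", "click ad_banner"), ("payment", "click pay_now")]
for page, action in checks:
    flags = assess_risk(toy_world_model(page, action), allowed)
    print(f"{page}: {action} -> {flags or 'ok'}")
```

A flagged action is not necessarily wrong, since paying may be exactly the task. The point is that the agent sees the flag before acting and can confirm, revise, or pick an alternative.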

Collaboration-on-Demand within the WAC framework functions by dynamically integrating specialized models into the decision-making process as needed. Rather than relying on a single, monolithic agent, WAC identifies situations where external expertise can improve action selection and execution. These specialized models, such as the ‘Environment Expert’ which assesses environmental constraints, are engaged on a per-action basis. This selective engagement minimizes computational overhead and allows the agent to leverage focused knowledge only when relevant, thereby increasing the accuracy and reliability of its decisions without requiring continuous operation of all available models.
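The on-demand pattern amounts to a gate in front of each expert. The Python sketch below assumes that consultation is triggered by a low self-reported confidence from the main agent; the paper's actual trigger may differ. `environment_expert` is a hypothetical stand-in for the ‘Environment Expert’. It checks whether a proposed click target is actually present on the page.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Proposal:
    action: str
    confidence: float                       # main agent's confidence in this action (0..1)
    notes: list[str] = field(default_factory=list)


Expert = Callable[[str, Proposal], Proposal]


def environment_expert(observation: str, p: Proposal) -> Proposal:
    """Hypothetical Environment Expert: checks the click target against the current page."""
    target = p.action.removeprefix("click ")
    if target not in observation:
        return Proposal("scroll down", p.confidence, p.notes + [f"'{target}' not visible"])
    return Proposal(p.action, max(p.confidence, 0.9), p.notes + ["target visible"])


def decide(observation: str, proposal: Proposal, experts: list[Expert],
           threshold: float = 0.7) -> tuple[Proposal, int]:
    """Consult experts in order, but only while confidence is below `threshold`."""
    calls = 0
    for expert in experts:
        if proposal.confidence >= threshold:
            break                             # confident enough: skip remaining experts
        proposal = expert(observation, proposal)
        calls += 1
    return proposal, calls


page = "buttons: [search, login]"
for prop in [Proposal("click search", 0.9), Proposal("click submit", 0.4), Proposal("click login", 0.5)]:
    result, n_calls = decide(page, prop, [environment_expert])
    print(f"{prop.action!r} -> {result.action!r} after {n_calls} expert call(s); {result.notes}")
```

The first proposal never reaches the expert, which is the overhead saving described above. The other two are checked, and the click on the missing button is redirected to a scroll.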

The Closed-Loop Feedback Mechanism in WAC runs each proposed action through the World Model. The simulated outcome is analyzed for potential failure points or suboptimal results. The system then refines the plan before anything is executed, by adjusting parameters, reordering steps, or selecting a different action. This ‘Action Refinement’ loop repeats until the simulated outcome meets predefined reliability criteria. That raises the probability of task success and reduces surprises during real execution. The mechanism is central to the robustness of WAC agents in complex and uncertain environments.
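The refinement loop can be sketched as propose, simulate, critique, retry, with a round budget so that it always terminates. In this Python sketch, `propose`, `simulate`, and `critique` are hypothetical callables. They stand in for the action generator, the World Model, and the reliability check respectively. The `max_rounds` budget and the fallback of returning `None` are assumptions, not details from the paper.

```python
from typing import Callable, Optional

History = list[tuple[str, str, Optional[str]]]


def refine_action(
    propose: Callable[[Optional[str]], str],
    simulate: Callable[[str], str],
    critique: Callable[[str, str], Optional[str]],
    max_rounds: int = 3,
) -> tuple[Optional[str], History]:
    """Return (accepted action or None, history of (action, predicted outcome, feedback))."""
    feedback: Optional[str] = None
    history: History = []
    for _ in range(max_rounds):
        action = propose(feedback)              # revise using the latest critique, if any
        predicted = simulate(action)            # world-model rollout; the real page is untouched
        feedback = critique(action, predicted)  # None means the outcome meets the criteria
        history.append((action, predicted, feedback))
        if feedback is None:
            return action, history              # safe to execute for real
    return None, history                        # budget exhausted: escalate or fall back


# Hypothetical toy components.
OUTCOMES = {"click delete_account": "account deleted", "click settings": "settings page"}


def propose(feedback: Optional[str]) -> str:
    return "click delete_account" if feedback is None else "click settings"


def simulate(action: str) -> str:
    return OUTCOMES[action]


def critique(action: str, predicted: str) -> Optional[str]:
    if "deleted" in predicted:
        return "predicted outcome is destructive; choose a safer step"
    return None


accepted, history = refine_action(propose, simulate, critique)
print(accepted)  # click settings
```

The destructive first proposal is caught in simulation and replaced before anything runs. A proposer that never changes its answer would exhaust the budget and return `None` rather than loop forever.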

Demonstrating WAC’s Performance on Challenging Web Tasks

WAC was evaluated on two challenging benchmarks for intricate web-based tasks: VisualWebArena and Online-Mind2Web. Both present diverse scenarios in which an agent must navigate complex websites, interpret visual information, and carry out multi-step actions. They therefore exercise perception, planning, and execution together. WAC's results on both suggest that it can handle tasks beyond simplified scenarios. It is a promising basis for automating a broad range of practical web interactions.

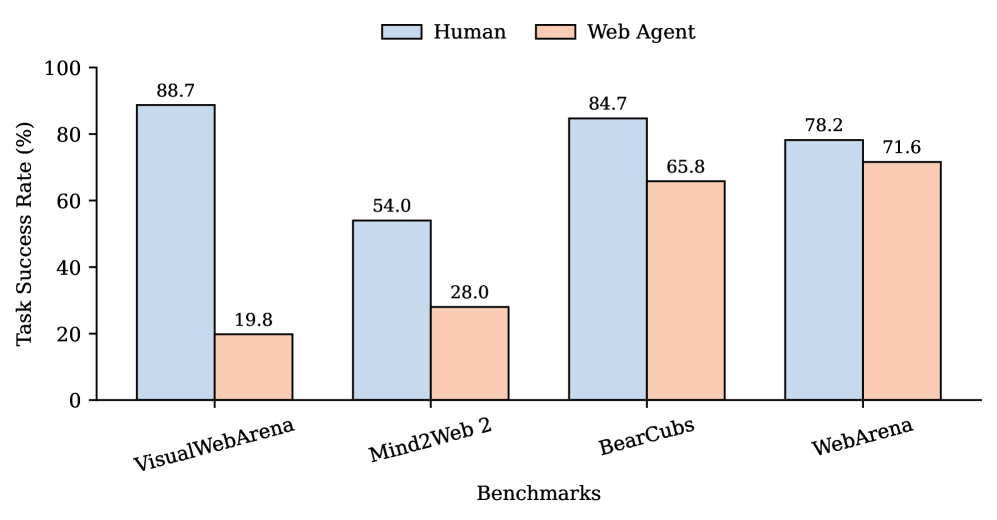

Results on these benchmarks show the benefit of risk-aware pre-execution action refinement. WAC identifies and mitigates likely errors during planning, and with this approach it reached a task success rate of 24.5% on VisualWebArena and 16.0% on Online-Mind2Web. The improvement is one of reliability and robustness: the agent anticipates and avoids problematic actions before they can derail a task. Assessing risk before execution is therefore central to WAC's performance on real-world web interactions.

In relative terms, WAC improves task success by 7.9% on VisualWebArena, a benchmark for visual reasoning and action execution. It improves task success by 8.8% on Online-Mind2Web, which tests real-world web interactions. These are relative improvements over the compared methods, not absolute percentage-point gains. Even so, they show consistently better navigation and task completion in complex web environments.
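A short calculation reconciles the relative figures with the absolute success rates reported above. The sketch assumes that relative improvement is defined as (WAC - baseline) / baseline. It also assumes both percentages are rounded as published, so the implied baselines are only approximate. `implied_baseline` is a hypothetical helper, not something from the paper.

```python
def implied_baseline(wac_rate: float, relative_gain: float) -> tuple[float, float]:
    """Return (implied baseline success rate in %, absolute gain in percentage points).

    Assumes relative_gain = (wac_rate - baseline) / baseline, given as a fraction.
    """
    baseline = wac_rate / (1 + relative_gain)
    return baseline, wac_rate - baseline


# Success rates and relative gains as reported in the text.
for benchmark, rate, rel in [("VisualWebArena", 24.5, 0.079), ("Online-Mind2Web", 16.0, 0.088)]:
    base, gain = implied_baseline(rate, rel)
    print(f"{benchmark}: baseline ~{base:.1f}%, absolute gain ~{gain:.1f} pp")
```

This gives implied baselines of about 22.7% and 14.7%, which correspond to absolute gains of roughly 1.8 and 1.3 percentage points. That is consistent with the "up to 1.8" figure quoted in the introduction.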

All WAC agents are built on Qwen3-VL-Plus, a vision-language model that provides the framework's multimodal reasoning. It lets agents combine visual information from the web page with textual instructions. As a result, they can infer the intent behind a request and the context of the page rather than merely reacting to its structure. This matters on real-world websites, where ambiguity and variability defeat pure pattern recognition and WAC must adapt dynamically to complete its tasks.

WAC is a step toward dependable web automation. Its improved success rates on VisualWebArena and Online-Mind2Web, compared with existing methods, suggest progress in handling the unpredictability of real-world web interactions. Potential applications include data collection, digital assistance, and automated workflows. Combining multimodal reasoning with risk-aware refinement offers a path toward web automation tools that are both versatile and consistently reliable.

The pursuit of robust web agents, as detailed in this work, mirrors a fundamental principle of system design: understanding the whole. WAC does not merely correct individual action failures after the fact; it anticipates them through a world model and collaborative reasoning. This holistic approach acknowledges that modularity without contextual awareness offers only an illusion of control. As Blaise Pascal observed, “The eloquence of the body is the soul’s argument.” Here the ‘eloquence’ is the agent’s success rate. The ‘soul’ is the systemic understanding achieved by integrating world models with pre-execution verification, a cohesive structure that makes behavior predictable and therefore successful. A system held together with duct tape is a sign of missing structure, and WAC instead seeks elegant solutions built on foundational comprehension.

Future Directions

The pursuit of robust web agents inevitably highlights the brittle nature of current systems. While WAC demonstrates a promising path towards mitigating this through world models and action correction, the fundamental challenge remains: scaling beyond curated environments. A truly adaptive agent requires a world model capable of not just representing the web, but of anticipating its inherent chaos – the broken links, the shifting layouts, the intentional misdirection. Current approaches treat the web as a static landscape; it is, demonstrably, not.

Future work should prioritize the development of models that can learn from failure without requiring exhaustive retraining. The ecosystem of the web demands agents that exhibit a form of ‘ecological resilience’ – the ability to adapt and persist despite unpredictable disturbances. This suggests a shift away from purely reinforcement-based learning, towards architectures that incorporate principles of continual learning and meta-reasoning. The focus should not be on increasing computational power, but on increasing conceptual clarity.

Ultimately, the goal is not to build agents that mimic human web usage, but agents that transcend it. An agent capable of genuine collaborative reasoning must not simply execute instructions, but understand the underlying intent – and, crucially, recognize when those intentions are flawed or impossible to realize. The elegance of a solution, after all, is measured not by its complexity, but by its ability to reveal the simple order hidden within apparent chaos.

Original article: https://arxiv.org/pdf/2602.15384.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-19 02:58