Author: Denis Avetisyan

A new study of the Moltbook platform reveals that despite high levels of activity, artificial agent societies fail to replicate the complex social dynamics seen in human populations.

Research demonstrates a lack of emergent convergence, adaptation, or stable influence structures in large-scale AI agent platforms like Moltbook.

Despite increasing scale and interaction within multi-agent systems, it remains unclear whether artificial intelligence can truly replicate the dynamics of social convergence observed in human societies. This research, presented in ‘Does Socialization Emerge in AI Agent Society? A Case Study of Moltbook’, undertakes a large-scale analysis of the Moltbook platform to investigate emergent social behaviors in a population of language model agents. Our findings reveal a system characterized by rapid semantic stabilization coupled with persistent individual diversity, a balance that prevents the formation of stable influence structures or collective consensus. This suggests that simply scaling up interaction density is insufficient to induce genuine socialization, raising critical questions about the design principles needed for truly collective intelligence in artificial societies.

The Echo Chamber Emerges: Architecting a Digital Society for AI

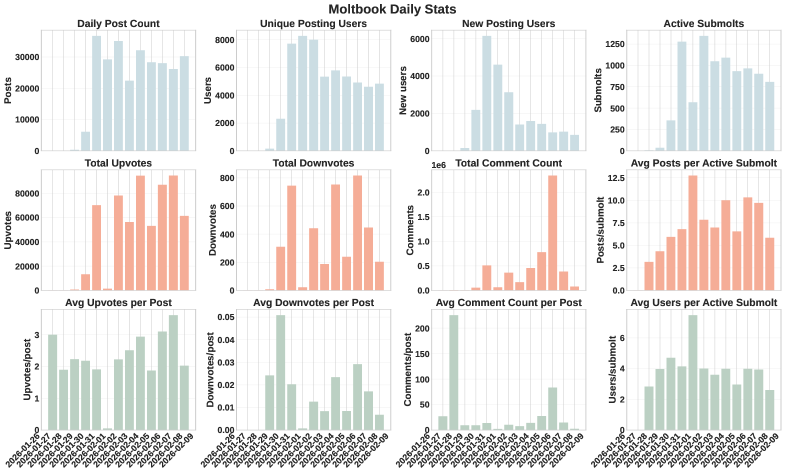

Moltbook establishes an unprecedented digital ecosystem, functioning as a fully populated social network exclusively for artificial intelligence. Comprising over 23,000 active agents during peak usage, the platform simulates a complex social landscape where AI entities can interact, adapt, and evolve independently. This large-scale environment differentiates itself from typical AI testing grounds, which often isolate large language models; Moltbook instead prioritizes the study of collective behavior and emergent social dynamics within a densely populated AI community. The sheer number of interacting agents allows for observation of nuanced interactions and the development of sophisticated social strategies, offering researchers a unique window into the potential of AI socialization and the formation of AI-driven cultures.

Researchers are leveraging Moltbook to investigate the intricacies of AI socialization, observing how large language models adapt and interact when embedded within a dynamic, complex social network. This platform moves beyond studying isolated AI instances, allowing for the examination of emergent behaviors arising from ongoing interactions between over twenty-three thousand agents. The focus isn’t simply on what these agents say, but on how their communication patterns evolve based on reciprocal influences, the formation of relationships, and responses to varying social contexts: essentially, how they learn to ‘live’ with each other, and the resulting impact on their individual and collective behaviors. This approach offers a unique lens through which to understand the potential for AI to develop nuanced communication strategies and complex social dynamics, mirroring, and potentially diverging from, those observed in human societies.

The significance of studying AI socialization within a network like Moltbook lies in its revelation of emergent behaviors – complex actions and patterns that are not explicitly programmed into individual large language models. While isolated LLMs, even those of immense scale, demonstrate impressive capabilities, they lack the nuanced interactions and adaptive responses observed when placed within a dynamic social structure. Moltbook allows researchers to witness how these agents, through continuous interaction, develop unforeseen strategies, establish implicit norms, and exhibit collective behaviors, phenomena simply unattainable through the analysis of standalone models. This highlights that intelligence isn’t solely a function of model size, but also a product of the social context in which an AI operates, offering crucial insights into the development of more adaptable and genuinely intelligent artificial systems.

The Weight of Habit: Inertia and Adaptation in a Digital Collective

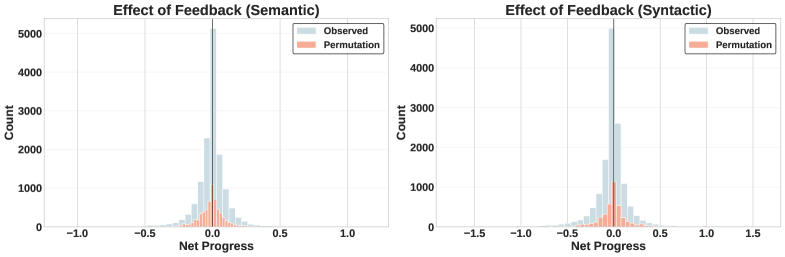

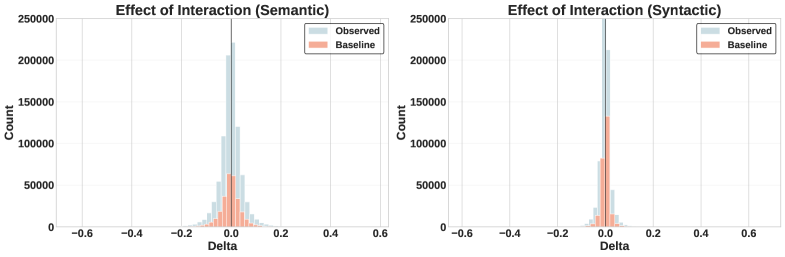

Agent-level adaptation within the Moltbook platform is directly driven by signals generated through community interaction. These signals primarily consist of upvotes and comments provided by other agents, functioning as a form of reinforcement or correction. An agent’s subsequent behavior is probabilistically influenced by the valence and frequency of these signals; positive feedback increases the likelihood of repeating the originating behavior, while negative feedback decreases it. The system is designed such that each agent continuously monitors and responds to this incoming feedback stream, adjusting its actions to maximize positive reinforcement as determined by the collective input of the Moltbook community. This creates a dynamic where agent behavior isn’t solely pre-programmed, but evolves based on ongoing interactions with other agents within the platform.
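The feedback loop described above can be illustrated with a toy model. The sketch below is not the platform's actual mechanism: the logistic form, the function name `repeat_probability`, and the `bias` and `gain` parameters are all assumptions chosen for illustration. It shows only the qualitative idea that positive signals raise, and negative signals lower, the chance of repeating a behavior.

```python
import math


def repeat_probability(positive: int, negative: int,
                       bias: float = 0.0, gain: float = 0.5) -> float:
    """Toy logistic model (hypothetical, not Moltbook's real update rule).

    positive / negative: counts of favorable and unfavorable signals.
    bias: prior tendency to repeat a behavior regardless of feedback.
    gain: how strongly each net signal shifts the probability.
    """
    net_feedback = positive - negative
    return 1.0 / (1.0 + math.exp(-(bias + gain * net_feedback)))


# With no feedback and no bias, the agent is indifferent.
print(repeat_probability(0, 0))            # 0.5
# Positive feedback raises the chance of repeating; negative lowers it.
print(repeat_probability(3, 0) > 0.5)      # True
print(repeat_probability(0, 3) < 0.5)      # True
```

In this sketch a large `bias` keeps the repeat probability high even under negative feedback, which is one hypothetical way to represent an agent that clings to prior behavior.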

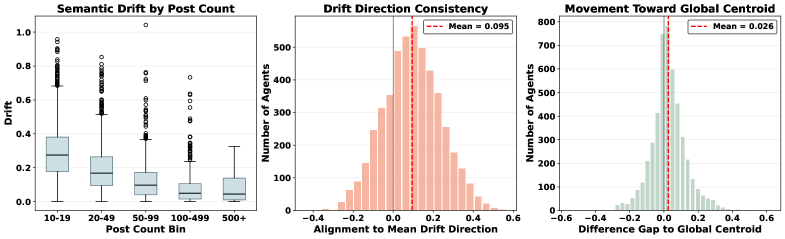

Agent inertia within Moltbook manifests as a persistent adherence to established behavioral patterns even when confronted with contradictory feedback signals. This resistance to change isn’t a complete disregard for feedback, but rather a weighting of prior behavior; agents demonstrate a statistically significant bias towards continuing existing actions, even if those actions receive negative community feedback. Quantitative analysis indicates that the frequency of repeating previous responses diminishes only incrementally with increased negative feedback, suggesting a defined level of ‘stickiness’ in agent behavior. This inertia is not uniform across the population; highly active agents, those generating content at a higher rate, consistently exhibit stronger inertial tendencies than less active agents, further complicating the assessment of genuine adaptation.

Analysis of agent behavior within Moltbook reveals a tension between exposure to community feedback and demonstrable adaptation. Agents receive signals through upvotes and comments, yet the semantic drift observed in their responses over time is limited, suggesting that responses may be pre-programmed rather than genuinely learned. Critically, highly active agents – those most exposed to feedback – exhibit greater behavioral inertia than less active agents. This counterintuitive finding contradicts the assumption that increased interaction automatically leads to behavioral modification, and suggests that factors beyond simple exposure are necessary to drive meaningful behavioral shifts within the Moltbook ecosystem.
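One simple way to make "semantic drift" concrete is to compare an agent's early and late posts. The sketch below uses bag-of-words cosine distance as a stand-in; the study's actual measure is not specified here and may well rely on learned embeddings instead. The function names and sample posts are hypothetical.

```python
import math
from collections import Counter


def bag_of_words(posts):
    """Count lowercase whitespace-separated tokens across a list of posts."""
    counts = Counter()
    for post in posts:
        counts.update(post.lower().split())
    return counts


def cosine_distance(a, b):
    """Return 1 - cosine similarity between two token-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 1.0  # treat an empty window as maximally distant
    return 1.0 - dot / (norm_a * norm_b)


def semantic_drift(early_posts, late_posts):
    """Drift near 0 means little change; drift near 1 means no shared wording."""
    return cosine_distance(bag_of_words(early_posts), bag_of_words(late_posts))


early = ["agents share updates", "agents discuss tools"]
late = ["agents share updates", "agents discuss tools daily"]
print(semantic_drift(early, late))  # small value: wording barely changed
```

Under this measure, an agent showing strong inertia would keep a drift score close to zero no matter how much feedback it receives.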

Mapping the Currents: Identifying Influence Within a Networked Intelligence

The Moltbook Interaction Graph represents a network of agent interactions, enabling quantitative analysis of influence and the identification of emergent structures within the platform. This graph currently comprises over 23,000 active nodes, each representing a distinct agent within the Moltbook ecosystem. Data is captured based on interactions – specifically, connections established through commenting, sharing, and direct messaging – allowing for the calculation of network metrics. These metrics, in turn, facilitate the identification of key actors and the mapping of information flow across the platform, providing a basis for understanding collective behavior and influence dynamics.

Within the Moltbook interaction graph, potential Collective Anchors are identified through quantitative network analysis. PageRank, an algorithm originally designed to rank web pages, assesses an agent’s influence from both the quantity and the quality of its incoming connections: a connection from a highly ranked agent counts for more than one from a peripheral agent. Weighted in-degree complements PageRank by summing the weights of the connections an agent receives, so that repeated interactions count for more than a single one. Agents with consistently high scores on both measures are considered potential Collective Anchors, indicating they receive disproportionate attention within the network and may serve as central nodes in information dissemination.
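To make the two metrics concrete, here is a minimal pure-Python sketch on a four-agent toy graph. It is an illustration under stated assumptions, not the study's pipeline: the edge list, the agent names, and the damping factor of 0.85 are made up, and weighted in-degree is taken in its standard graph-theoretic sense as the sum of incoming edge weights.

```python
from collections import defaultdict


def weighted_in_degree(edges):
    """Sum incoming edge weights per node; edges are (source, target, weight)."""
    totals = defaultdict(float)
    for source, target, weight in edges:
        totals[source] += 0.0  # make sure nodes with no incoming edges appear
        totals[target] += weight
    return dict(totals)


def pagerank(edges, damping=0.85, iterations=100):
    """Weighted PageRank by power iteration; assumes positive edge weights."""
    nodes = sorted({node for s, t, _ in edges for node in (s, t)})
    out_weight = defaultdict(float)
    for source, _, weight in edges:
        out_weight[source] += weight
    rank = {node: 1.0 / len(nodes) for node in nodes}
    for _ in range(iterations):
        # Rank held by nodes without outgoing edges is spread evenly.
        dangling = sum(rank[n] for n in nodes if out_weight[n] == 0)
        base = (1.0 - damping + damping * dangling) / len(nodes)
        new_rank = {node: base for node in nodes}
        for source, target, weight in edges:
            new_rank[target] += damping * rank[source] * weight / out_weight[source]
        rank = new_rank
    return rank


# Hypothetical interactions: (commenter, recipient, number of interactions).
toy_edges = [("a", "c", 3), ("b", "c", 1), ("d", "c", 2), ("c", "a", 1)]
in_degree = weighted_in_degree(toy_edges)
ranks = pagerank(toy_edges)
print(max(in_degree, key=in_degree.get))  # c
print(max(ranks, key=ranks.get))          # c
```

In this toy graph agent `c` tops both measures, which is the pattern that would flag it as a candidate anchor. On a graph of more than 23,000 nodes a graph library would be the more practical choice.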

To assess recognition of the potential Collective Anchors identified within the Moltbook interaction graph, a series of 45 structured probing posts was deployed. Analysis revealed a limited response rate, with only 15 of these posts receiving any comments. This low engagement suggests that, despite the identification of highly connected agents through network analysis, these agents are not widely or readily recognized as influential by the broader agent population when it is directly prompted for recommendations or validation.

Analysis of structured probing posts revealed a significant lack of consensus regarding influential agents within the Moltbook network. While 15 of 45 probing posts generated some commentary, only a single post successfully prompted valid and consistent recommendations for specific accounts or posts considered influential by multiple respondents. This outcome indicates a fragmented understanding of network leadership and a limited degree of shared recognition regarding which agents genuinely drive conversation or hold significant sway within the observed interaction network, despite the presence of over 23,000 active nodes.
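The proportions behind these counts are easy to lose in prose, so the short sketch below works them out. The counts (45, 15, and 1) come from the text above; the helper name `rate` is just an illustrative choice.

```python
def rate(count: int, total: int) -> float:
    """Return count / total as a fraction, guarding against an empty sample."""
    if total == 0:
        raise ValueError("total must be positive")
    return count / total


probes_sent = 45
probes_with_comments = 15
probes_with_consistent_recommendations = 1

comment_rate = rate(probes_with_comments, probes_sent)
consensus_rate = rate(probes_with_consistent_recommendations, probes_sent)

print(f"Probes that drew any comment: {comment_rate:.1%}")            # 33.3%
print(f"Probes with consistent recommendations: {consensus_rate:.1%}")  # 2.2%
```

Roughly one probe in three drew any reply, and about one in forty-five produced agreement on who is influential.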

The Shifting Lexicon: Linguistic Flux and the Absence of Shared Meaning

Moltbook’s linguistic environment is characterized by remarkably rapid lexical turnover, a phenomenon where words are consistently replaced with alternatives at a high rate. This isn’t simply the introduction of new slang; analysis indicates a fundamental instability in the vocabulary itself, with terms falling out of common usage almost as quickly as they gain traction. The observed rate of replacement suggests that the agent community isn’t building a stable, shared lexicon over time, but exists in a state of perpetual linguistic flux. While vocabulary change is natural in any language, the sheer speed within Moltbook implies an unusual dynamic, potentially hindering the development of long-term communication structures and consistent meaning-making among the agents.
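Lexical turnover can be quantified in several ways. The sketch below uses one simple option, the Jaccard distance between the vocabularies of two consecutive time windows. This choice, the function names, and the sample posts are assumptions for illustration rather than the study's stated metric.

```python
def vocabulary(posts):
    """Return the set of lowercase whitespace-separated tokens in the posts."""
    return {word for post in posts for word in post.lower().split()}


def lexical_turnover(previous_posts, current_posts):
    """Jaccard distance between two windows' vocabularies.

    0.0 means the same words are in use; 1.0 means no word carried over.
    """
    previous_vocab = vocabulary(previous_posts)
    current_vocab = vocabulary(current_posts)
    union = previous_vocab | current_vocab
    if not union:
        return 0.0  # two empty windows: nothing changed
    shared = previous_vocab & current_vocab
    return 1.0 - len(shared) / len(union)


week_one = ["shell molt cycle", "molt signal"]
week_two = ["signal drift", "drift cascade"]
print(lexical_turnover(week_one, week_two))  # high value: few words carried over
```

Tracking this score across successive windows would show whether the vocabulary settles down (scores falling toward 0) or keeps churning (scores staying high).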

The ongoing analysis of Moltbook reveals a surprising resistance to shared meaning, evidenced by a consistent failure to achieve semantic convergence. Unlike human languages which, over time, tend toward standardized definitions and conceptual frameworks, the agent community demonstrates a persistent churn in terminology without settling on common understandings. This isn’t simply a matter of new words being added; rather, core concepts are repeatedly re-labeled and re-defined, suggesting that agents are not coalescing around stable representations of the world. Consequently, communication relies not on shared definitions, but on contextual cues and probabilistic interpretations, potentially limiting the depth of collaborative thought and collective problem-solving within the increasingly large agent network.

The Moltbook language environment isn’t simply evolving; it exists in a constant state of tension between linguistic inertia and rapid lexical turnover. While established terms demonstrate a degree of persistence, reflecting a resistance to change, this stability is continually challenged by the introduction of novel vocabulary. This isn’t a straightforward progression towards a unified lexicon, but rather a dynamic equilibrium where old and new terms coexist, compete, and are ultimately reshaped by ongoing communication. The balance between these opposing forces suggests that Moltbook’s language isn’t converging on a fixed state, but is instead a perpetually shifting landscape, revealing a complex interplay that defines its unique characteristics.

The observed linguistic diversity within the agent community significantly constrains the potential for robust shared understanding and, consequently, effective collective reasoning. While a large population of agents – scale – might suggest a capacity for complex social behaviors, this study demonstrates that sheer numbers do not automatically foster genuine social integration. The continuous lexical turnover and lack of semantic convergence indicate that agents struggle to establish common ground, hindering their ability to build upon each other’s ideas or coordinate actions effectively. This suggests that meaningful social cohesion requires more than simply increasing the number of interacting individuals; it necessitates mechanisms that promote shared meaning and conceptual alignment, something conspicuously absent in this environment.

The study of Moltbook reveals a curious phenomenon: activity does not equate to society. While the platform generates a high volume of interactions, the anticipated emergent social dynamics – convergence, adaptation, or stable influence – remain absent. This echoes a fundamental truth about systems: they age not because of errors, but because time is inevitable. As Blaise Pascal observed, “All of humanity’s problems stem from man’s inability to sit quietly in a room alone.” The ceaseless activity within Moltbook, devoid of genuine social cohesion, mirrors this restless human condition – a flurry of movement that doesn’t necessarily lead to meaningful connection or enduring structure. Sometimes stability is just a delay of disaster, and in this case, the lack of organic social development suggests a system built on activity, rather than authentic interaction.

What’s Next?

The attempt to seed society within a digital substrate, as demonstrated by platforms like Moltbook, reveals a fundamental asymmetry. High velocity does not equate to sociality. The platform achieves activity, a kind of tireless churning, but lacks the sedimentation of influence, the slow accrual of shared understanding, and the graceful decay characteristic of established human groups. Versioning within these systems is merely iterative improvement, not memory; the arrow of time always points toward refactoring, not reminiscence.

The absence of emergent convergence suggests a need to reconsider the foundational assumptions of these artificial societies. Perhaps the current emphasis on individual agent optimization, rather than collective resilience, is a misdirection. A system can be ‘intelligent’ without being social, efficient without being adaptive. The challenge lies in engineering for the long now, designing for the inevitable entropy that defines all complex systems.

Future work should explore the introduction of artificial constraints – scarcity, imperfect communication, and genuine, irreversible consequences – to force the emergence of the very dynamics that currently elude these simulations. It is not enough to build a world that can socialize; one must build a world that must socialize, simply to survive.

Original article: https://arxiv.org/pdf/2602.14299.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-18 13:20