Author: Denis Avetisyan

Researchers have developed a novel framework for controlling activity in spiking neural networks, enabling continual learning across vision tasks while minimizing energy consumption.

This work introduces an energy-aware spike budgeting approach for continual learning in spiking neural networks applied to both frame-based and event-based neuromorphic vision systems.

Catastrophic forgetting remains a significant obstacle to deploying spiking neural networks (SNNs) in real-world, continually changing environments, despite their potential for ultra-low-power perception. The paper ‘Energy-Aware Spike Budgeting for Continual Learning in Spiking Neural Networks for Neuromorphic Vision’ addresses this challenge with a framework that jointly optimizes accuracy and energy efficiency during continual learning. Through adaptive spike allocation and learnable neuron parameters, the approach shows modality-dependent benefits across five benchmarks: sparsity-inducing regularization for frame-based data, and accuracy gains with minimal overhead for event-based vision. Could this energy-aware strategy pave the way for more robust and sustainable neuromorphic vision systems capable of lifelong learning?

Decoding the Brain’s Efficiency: A New Computing Paradigm

Conventional machine learning algorithms, while powerful, are increasingly constrained by the demands of real-world applications. These systems typically process data in a continuous, synchronized manner, requiring substantial computational resources and energy, even when data is sparse or irrelevant. This approach proves particularly inefficient when handling the constant, noisy streams characteristic of sensory input – think of visual scenes or audio recordings – where much of the information is redundant. Furthermore, these algorithms often struggle to adapt quickly to changing environments or unexpected data patterns, necessitating frequent retraining and limiting their deployment in dynamic, real-time applications. The inherent limitations in energy efficiency and adaptability are pushing researchers to explore fundamentally new computing paradigms that more closely mimic the brain’s remarkable ability to process information with minimal resources and maximum flexibility.

Neuromorphic computing represents a significant departure from traditional von Neumann architectures, instead drawing inspiration from the biological brain’s remarkable efficiency. This approach prioritizes event-driven processing, where computations occur only when stimulated by changes in input – mirroring how neurons fire only when they receive sufficient signals. Crucially, neuromorphic systems employ sparse communication, meaning that only essential information is transmitted, drastically reducing energy consumption compared to the constant data transfer in conventional computers. This combination allows these systems to tackle complex, real-world data streams – like those from vision or auditory sensors – with significantly lower power requirements and greater adaptability, potentially unlocking new possibilities in areas such as robotics, edge computing, and artificial intelligence.

Spiking Neural Networks represent a fundamental shift in how computation is approached, moving beyond the continuous value representation of traditional artificial neural networks to a system based on discrete, asynchronous events known as spikes. These networks mimic the signaling mechanisms of biological neurons, where information is encoded not just in the frequency of spikes, but also in their precise timing. This event-driven approach results in remarkably energy-efficient computation; neurons only activate and transmit information when a spike occurs, dramatically reducing power consumption compared to systems that constantly process data. The sparse communication inherent in spiking networks also allows for the creation of highly parallel and scalable architectures, potentially unlocking new capabilities in areas like real-time sensor processing, robotics, and edge computing where energy constraints are paramount. By embracing the temporal dynamics of biological systems, these networks offer a pathway toward more intelligent and sustainable computing technologies.
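To make the event-driven idea concrete, here is a minimal sketch of a single discrete-time leaky integrate-and-fire neuron in plain Python. The parameter names and values (`tau`, `v_threshold`, `v_reset`, `dt`) are illustrative assumptions, not settings taken from the paper.

```python
def simulate_lif(input_current, tau=10.0, v_threshold=1.0, v_reset=0.0, dt=1.0):
    """Simulate one discrete-time leaky integrate-and-fire neuron.

    Returns a list of 0/1 spikes, one per input sample.
    """
    v = v_reset
    spikes = []
    for current in input_current:
        # Leaky integration: the potential decays toward rest and is driven by input.
        v += (dt / tau) * (-(v - v_reset) + current)
        if v >= v_threshold:
            spikes.append(1)
            v = v_reset  # hard reset after a spike
        else:
            spikes.append(0)
    return spikes


# A constant supra-threshold drive yields occasional spikes;
# zero input yields none, so nothing downstream has to be computed.
active = simulate_lif([1.5] * 100)
silent = simulate_lif([0.0] * 100)
print(sum(active), sum(silent))
```

The neuron is silent for most time steps even under constant drive, which is the sparsity that event-driven hardware can exploit.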

The Peril of Forgetting: A Challenge to Spiking Systems

Continual learning, the ability of a system to learn new tasks without forgetting previously learned ones, is a critical requirement for Spiking Neural Networks (SNNs) intended for deployment in real-world, dynamic environments. Traditional machine learning approaches to continual learning frequently exhibit catastrophic forgetting, wherein the performance on previously mastered tasks degrades significantly upon learning a new task. This phenomenon occurs because the network’s weights are adjusted to accommodate the new information, often overwriting the representations crucial for older tasks. The challenge is amplified in SNNs due to their event-driven, sparse nature and the complexities of temporal coding, requiring specialized methods to preserve existing knowledge during incremental learning.

Established continual learning techniques – Elastic Weight Consolidation (EWC), Progressive Neural Networks (PNNs), and Experience Replay (ER) – demonstrate efficacy in artificial neural networks by addressing catastrophic forgetting. However, directly applying these methods to Spiking Neural Networks (SNNs) presents challenges due to the fundamental differences in information processing. EWC, which protects important weights by penalizing changes, requires careful adaptation to the sparse and asynchronous spiking activity. PNNs, relying on expanding network capacity for each task, may negate the energy efficiency benefits of SNNs. Similarly, ER, which stores and replays past experiences, is complicated by the temporal nature of spike trains and the need to efficiently store and replay spiking patterns rather than static data points, increasing memory overhead and computational cost.

Spiking Neural Networks (SNNs) utilize sparse, event-driven communication and temporal coding, presenting unique challenges for continual learning. Traditional techniques designed for dense, rate-based networks often fail to effectively preserve previously learned information due to the sensitivity of spiking dynamics to synaptic modifications. The low firing rates and reliance on precise spike timing in SNNs necessitate continual learning strategies that minimize disruption to established temporal patterns. Furthermore, maintaining energy efficiency – a key advantage of SNNs – requires that these novel approaches avoid computationally expensive procedures or excessive synaptic plasticity, as these would negate the inherent power savings of sparse coding.

Orchestrating Sparsity: A Framework for Adaptive Learning

Energy-Aware Spike Budgeting addresses the challenge of continual learning in Spiking Neural Networks (SNNs) by implementing a dynamic regulation of neuronal activity. Traditional SNN training often suffers from catastrophic forgetting when sequentially learning new tasks, and also struggles with high energy consumption due to persistent network firing. This framework introduces a method to optimize both performance and energy efficiency by controlling the overall ‘spike budget’ – the total number of spikes emitted by the network. By limiting this budget and strategically allocating spikes to critical synaptic connections, the system prioritizes the processing of relevant information and mitigates the overwriting of previously learned knowledge, thereby enabling adaptation to new tasks without significant performance degradation on older ones.
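One plausible way to turn a spike budget into a training signal is to add a penalty that activates only when the measured firing rate exceeds the budget. The sketch below is an assumption for illustration: the function names, the quadratic hinge form, and the `penalty_weight` parameter are hypothetical and not taken from the paper.

```python
def spike_rate(spike_counts, num_neurons, num_timesteps):
    """Fraction of (neuron, timestep) slots that contained a spike."""
    return sum(spike_counts) / (num_neurons * num_timesteps)


def budgeted_loss(task_loss, rate, budget, penalty_weight=1.0):
    """Task loss plus a quadratic penalty applied only when rate exceeds budget."""
    excess = max(0.0, rate - budget)
    return task_loss + penalty_weight * excess ** 2


# Illustrative numbers only: 200 spikes over 100 neurons and 20 time steps.
rate = spike_rate([120, 80], num_neurons=100, num_timesteps=20)
over_budget = budgeted_loss(0.30, rate, budget=0.05, penalty_weight=10.0)
within_budget = budgeted_loss(0.30, 0.04, budget=0.05, penalty_weight=10.0)
print(rate, over_budget, within_budget)
```

Because the penalty is zero whenever the network stays within budget, it constrains activity without pushing the network toward total silence.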

The framework utilizes Learnable Neuron Dynamics to modulate individual neuron thresholds and time constants, allowing for adaptive sensitivity to input signals. This dynamic adjustment, coupled with Proportional Control, regulates spiking rates based on the relevance of incoming information; stronger signals trigger higher spiking activity, while weaker or irrelevant inputs are suppressed. Proportional Control specifically scales spiking output proportionally to the strength of the input current, effectively filtering noise and prioritizing salient features. This combined approach ensures that network activity is focused on essential data, minimizing energy consumption and maximizing information processing efficiency by selectively activating neurons based on input significance.
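In control theory, a proportional controller applies a correction proportional to the gap between a measured quantity and its target. The sketch below applies that textbook idea to a firing threshold, using a deliberately simple stand-in for the network. It is an assumption about how such regulation could be wired, not the update rule from the paper; `toy_firing_rate`, `gain`, and `min_threshold` are hypothetical.

```python
def proportional_threshold_update(threshold, measured_rate, target_rate,
                                  gain=0.5, min_threshold=0.1):
    """One proportional-control step on a firing threshold.

    Firing above target raises the threshold (fewer spikes);
    firing below target lowers it (more spikes).
    """
    error = measured_rate - target_rate
    return max(min_threshold, threshold + gain * error)


def toy_firing_rate(threshold, drive=1.2):
    """Hypothetical stand-in for a network: rate falls linearly as threshold rises."""
    return min(1.0, max(0.0, drive - threshold))


threshold = 0.5
target_rate = 0.05
for _ in range(50):
    rate = toy_firing_rate(threshold)
    threshold = proportional_threshold_update(threshold, rate, target_rate)

final_rate = toy_firing_rate(threshold)
print(round(threshold, 3), round(final_rate, 3))
```

In this toy loop the firing rate settles at the target because each step halves the remaining error. A real network responds nonlinearly, so the gain would need tuning.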

Knowledge consolidation within the Energy-Aware Spike Budgeting framework is achieved by restricting synaptic modification to a limited ‘spike budget’. This budget represents the total allowable spiking activity for weight updates and is dynamically allocated to connections deemed most critical for retaining previously learned information. By prioritizing essential synapses, the framework minimizes destructive interference from new learning, thereby preserving performance on older tasks. This proportional allocation ensures that synapses supporting established knowledge receive sufficient reinforcement to prevent forgetting, while allowing adaptation to new inputs without complete overwriting of prior learning. The limited budget inherently encourages efficient representation and prevents the network from simply memorizing new data, fostering robust continual learning capabilities.

Validating Intelligence: Performance Across Diverse Landscapes

Energy-Aware Spike Budgeting was evaluated on five established benchmarks: the frame-based image datasets MNIST and CIFAR-10, and the event-based neuromorphic datasets N-MNIST, CIFAR-10-DVS, and DVS-Gesture. Across this selection the framework improved on the baselines it was compared against, although the nature of the benefit depends on the modality: mainly sparser activity on frame-based data, and mainly higher accuracy on event-based data.

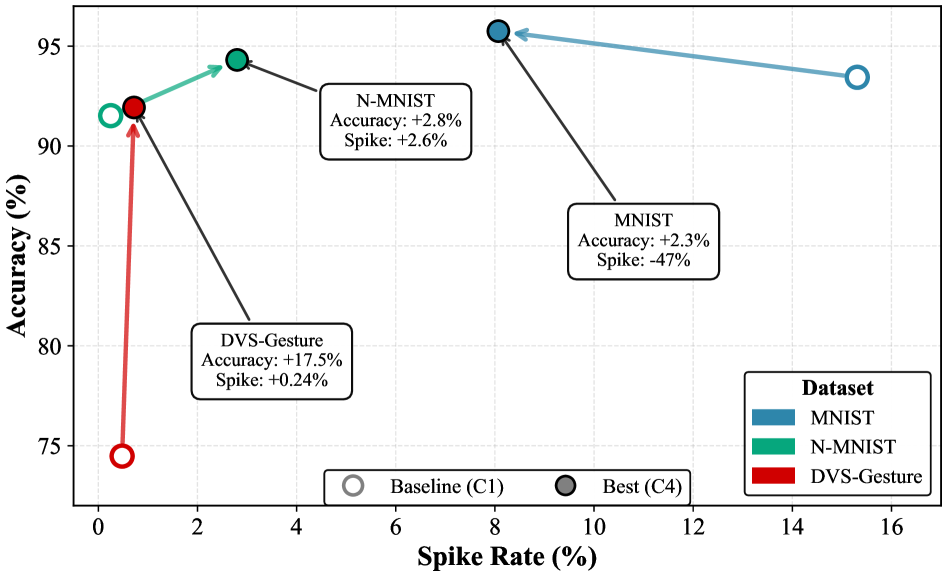

Evaluations demonstrate that the Energy-Aware Spike Budgeting framework achieves a maximum accuracy improvement of 17.45% when applied to event-based datasets. This performance gain is particularly notable on the DVS-Gesture dataset. Concurrent with these accuracy improvements, the framework also delivers substantial reductions in computational cost; specifically, spike counts are reduced by 47% on frame-based datasets such as MNIST, indicating improved energy efficiency without compromising performance on traditional datasets.

Evaluations on the DVS-Gesture dataset demonstrate the framework’s ability to achieve a 91.93% accuracy rate. This performance is notable due to the concurrent maintenance of an ultra-sparse operational profile, characterized by a spike rate of less than 1%. This result indicates efficient information processing with minimal neuronal communication, highlighting the framework’s effectiveness in handling event-based data while minimizing computational cost.

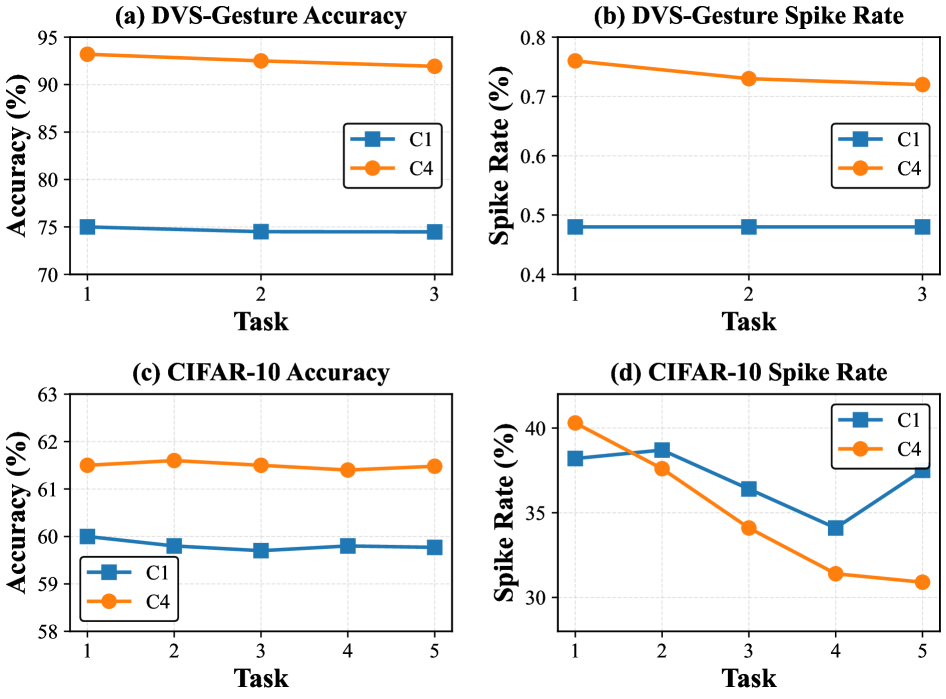

Backward Transfer (BWT) measures the degree to which learning a new task negatively impacts performance on previously learned tasks. The Energy-Aware Spike Budgeting framework demonstrated a significant improvement in BWT on the DVS-Gesture dataset, reducing the negative transfer from -25.35% to -5.90%. This reduction indicates an enhanced ability to retain previously acquired knowledge while learning new information, suggesting the framework mitigates catastrophic forgetting and improves overall learning stability within a sequential task paradigm.
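For reference, BWT is commonly computed from an accuracy matrix as the average, over all tasks except the last, of the final accuracy on a task minus the accuracy measured right after that task was learned. The sketch below assumes the paper follows this standard definition; the accuracy values are made up for illustration and are not results from the paper.

```python
def backward_transfer(acc):
    """Compute BWT from acc[i][j] = accuracy on task j after training on tasks 0..i.

    Only the diagonal and the final row are used. Negative values mean forgetting.
    """
    num_tasks = len(acc)
    if num_tasks < 2:
        raise ValueError("BWT needs at least two tasks")
    final_row = acc[-1]
    diffs = [final_row[j] - acc[j][j] for j in range(num_tasks - 1)]
    return sum(diffs) / (num_tasks - 1)


# Illustrative accuracy matrix for three sequential tasks (not real results).
example_acc = [
    [0.90, 0.00, 0.00],
    [0.80, 0.88, 0.00],
    [0.70, 0.82, 0.91],
]
bwt = backward_transfer(example_acc)
print(round(bwt, 4))
```

A BWT closer to zero, as in the move from -25.35% to -5.90% reported above, means earlier tasks lose less accuracy once later tasks are learned.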

Performance across these datasets under the Task-Incremental Learning paradigm shows that the framework can absorb new tasks while maintaining performance on earlier ones. In Task-Incremental Learning, tasks arrive sequentially and the model must learn each one without losing what it learned before, so sustained accuracy in this setting indicates robustness to catastrophic forgetting. The gains on MNIST, CIFAR-10, N-MNIST, CIFAR-10-DVS, and DVS-Gesture, together with the improvement in Backward Transfer from -25.35% to -5.90% on DVS-Gesture, support this conclusion.

Beyond Simulation: A Glimpse into the Future of Intelligence

The Energy-Aware Spike Budgeting framework demonstrates significant compatibility with the unique architecture of neuromorphic hardware, paving the way for true On-Chip Learning. This approach moves beyond simply executing pre-trained models and allows the network to adapt and refine its parameters directly within the silicon, drastically minimizing data transfer and associated energy demands. By intelligently allocating computational resources – specifically, the number of spikes a neuron is permitted to emit – the framework optimizes performance while adhering to strict energy constraints. This is particularly crucial for resource-limited devices and applications where continuous learning is essential, enabling Spiking Neural Networks (SNNs) to operate with unprecedented efficiency and unlock the potential for always-on, self-improving systems. The result is a pathway toward deploying complex artificial intelligence directly onto low-power hardware, bringing the promise of brain-inspired computing closer to reality.

Neuromorphic computing stands to gain significant advancements through the synergistic combination of the Energy-Aware Spike Budgeting framework with sophisticated learning techniques. Specifically, the application of Surrogate Gradient Learning allows for efficient training of Spiking Neural Networks (SNNs) – a challenge historically posed by the non-differentiable nature of spiking neurons. Further optimization of the Leaky Integrate-and-Fire Neuron – a common model for biological neurons – refines the precision and responsiveness of these networks. By carefully tuning parameters governing spike generation and synaptic plasticity within these models, researchers aim to maximize computational efficiency and minimize energy consumption, ultimately unlocking the full potential of brain-inspired hardware and enabling complex, real-time processing capabilities.
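The core trick of surrogate gradient learning can be shown in a few lines: the forward pass keeps the hard threshold, while the backward pass substitutes a smooth function for its derivative. The sketch below uses a surrogate shaped like the derivative of a fast sigmoid, which is one common choice. The choice of surrogate and the `slope` value are assumptions, not details confirmed by the source.

```python
def spike_forward(v, threshold=1.0):
    """Heaviside step: emit a spike when the membrane potential reaches threshold."""
    return 1.0 if v >= threshold else 0.0


def spike_surrogate_grad(v, threshold=1.0, slope=5.0):
    """Smooth stand-in for d(spike)/d(v): 1 / (1 + slope * |v - threshold|)^2.

    The true derivative is zero almost everywhere, which would block learning;
    this surrogate peaks at the threshold and decays smoothly on either side.
    """
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2


for v in (0.2, 0.8, 1.0, 1.2):
    print(v, spike_forward(v), round(spike_surrogate_grad(v), 4))
```

Potentials near the threshold receive the largest gradient, so learning concentrates on neurons that are close to changing their spiking behavior.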

The culmination of energy-efficient spiking neural networks and on-chip learning presents a transformative opportunity across diverse technological landscapes. Autonomous robotics stands to benefit from the low-power consumption and rapid processing capabilities, enabling more sophisticated and enduring robotic systems. Simultaneously, edge computing devices, constrained by power and space, can leverage these networks for localized, intelligent data analysis without relying on cloud connectivity. Beyond these practical applications, the biologically inspired nature of spiking neural networks fosters advancements in brain-inspired artificial intelligence, potentially unlocking novel approaches to machine learning and cognitive computing that more closely mimic the efficiency and adaptability of the human brain. This convergence promises not only incremental improvements but a fundamental shift in how artificial intelligence is designed and deployed.

The pursuit of continual learning in spiking neural networks, as detailed in this work, echoes a fundamental principle: systems reveal their limitations only under stress. This research doesn’t simply accept the energy constraints inherent in neuromorphic computing; it actively probes them with spike budgeting, seeking the precise point of optimal performance. A line attributed to Blaise Pascal holds that “The eloquence of angels is no more than the silence of reason.” Similarly, this paper suggests that true intelligence isn’t about maximizing activity, about a constant barrage of spikes, but about intelligently limiting it, achieving more with less. The adaptive control of network activity isn’t merely an optimization; it’s a dismantling of assumptions about what constitutes effective computation, revealing the underlying architecture of efficiency.

Beyond the Spike Count

The demonstrated efficacy of energy-aware spike budgeting, while promising, merely shifts the locus of optimization. True continual learning isn’t about doing more with fewer spikes; it’s about fundamentally altering what constitutes ‘information’ within the network. The current paradigm still relies on relatively static architectures attempting to absorb a dynamic world, which is a brittle approach. Future work must investigate networks capable of self-reconfiguration: actively pruning, rewriting, and even forgetting connections based on intrinsic measures of relevance, not externally defined tasks.

A persistent, and largely unaddressed, challenge lies in scaling these techniques beyond simplified vision problems. Neuromorphic systems excel at low-latency processing of sparse data, but the computational cost of enforcing energy budgets, that is, of actively monitoring and modulating spiking activity, could quickly negate these benefits in more complex scenarios. The question isn’t simply “can it learn continuously?”, but “can it learn continuously without collapsing under its own metabolic demands?”

Ultimately, this work serves as a useful stress test of the LIF neuron model. It reveals the limitations of treating spikes merely as discrete events in a flow of computation. A more nuanced understanding of dendritic processing, synaptic plasticity, and the inherent noise within biological systems is crucial. The goal shouldn’t be to perfectly mimic the brain, but to reverse-engineer the principles that enable its remarkable efficiency and adaptability, even if that means dismantling our current assumptions about neural computation.

Original article: https://arxiv.org/pdf/2602.12236.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-16 05:40