Author: Denis Avetisyan

A new technique identifies and isolates malicious participants in collaborative machine learning, even when they work together.

BlackCATT offers a practical black-box traitor tracing scheme for federated learning, defending against collusion attacks through optimized watermarking and functional regularization.

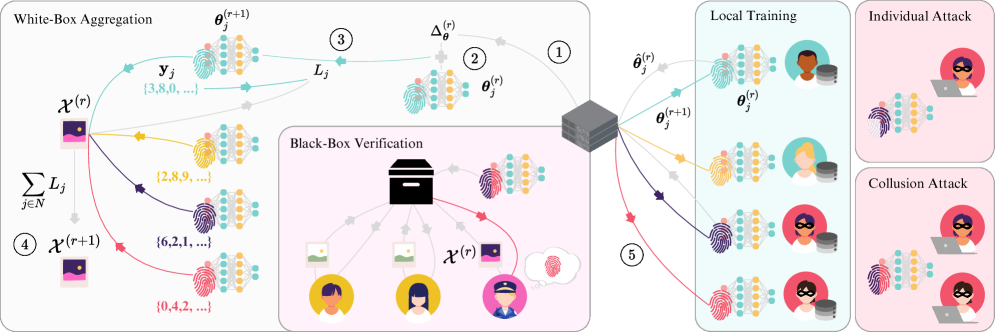

Despite the increasing popularity of federated learning for privacy-preserving machine learning, existing watermarking techniques for identifying malicious participants remain vulnerable to sophisticated collusion attacks. This paper introduces BlackCATT: Black-box Collusion Aware Traitor Tracing in Federated Learning, a novel scheme designed to robustly trace the origin of leaked models even when adversaries cooperate. BlackCATT achieves this through a collusion-aware embedding loss and iterative trigger optimization, while simultaneously mitigating model drift caused by watermark learning via functional regularization. Can this approach pave the way for truly trustworthy and accountable federated learning systems in sensitive application domains?

Unveiling the Cracks: Securing Distributed Intelligence

The increasing prevalence of Deep Neural Networks across critical infrastructure, consumer products, and sensitive applications necessitates robust protections for both the intellectual property embedded within these models and their ongoing functional integrity. These networks, often representing substantial investment and expertise, are vulnerable to replication and theft, impacting competitive advantage and potentially enabling malicious use. Beyond simple copying, compromised model integrity, whether through adversarial attacks or data poisoning, can lead to unpredictable and potentially dangerous outcomes, especially in safety-critical systems like autonomous vehicles or medical diagnostics. Therefore, safeguarding these increasingly vital assets is no longer simply a matter of competitive advantage, but a fundamental requirement for ensuring reliability, safety, and trust in a world increasingly reliant on artificial intelligence.

Conventional security protocols, designed for centralized data storage and processing, struggle to maintain efficacy within the distributed architecture of Federated Learning and similar decentralized systems. These approaches often rely on securing a single point of access, a strategy rendered ineffective when model training occurs across numerous independent devices. This inherent vulnerability exposes models to both extraction attacks – where malicious actors reconstruct the model through aggregated updates – and tampering, involving the injection of backdoors or the corruption of model parameters during the collaborative learning process. Consequently, a paradigm shift is necessary, focusing on techniques like differential privacy, secure multi-party computation, and robust aggregation methods to safeguard model integrity and intellectual property in this increasingly prevalent decentralized landscape.

Digital Signatures in the Machine: Watermarking Neural Networks

Watermarking of deep neural networks involves algorithmically modifying a model’s numerical parameters – the weights and biases – to embed a specific signal. This embedded signal functions as a digital signature, enabling verification of ownership or detection of unauthorized duplication. The watermark is not directly perceptible in the model’s ordinary output; instead, it’s extracted through a dedicated detection algorithm that analyzes either the model’s parameters or its responses to specially chosen inputs. Successful watermark embedding requires careful consideration of parameter selection and modification magnitude to balance watermark robustness with minimal impact on model performance. The resulting watermarked model, when shared or deployed, carries this hidden signature, allowing the owner to prove provenance or identify instances of illicit copying.

Watermarking techniques for deep neural networks are broadly categorized as either White-Box or Black-Box, differentiated by the level of access to the model that verification requires. White-Box watermarking necessitates complete access to the model’s parameters, with the watermark embedded in and read back from the network’s weights and biases. Conversely, Black-Box watermarking operates without requiring knowledge of the internal model parameters; instead, it relies on input-output behavior, encoding the watermark in the model’s responses to specially crafted trigger inputs. This distinction impacts the implementation complexity, robustness, and potential applicability of each approach, with White-Box methods generally offering higher capacity but lower practicality in scenarios where model internals are unavailable.
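To make the black-box case concrete, here is a minimal sketch of verification through queries alone. It assumes the watermark is a set of trigger inputs that the owner trained the model to label in a prearranged way; the function name `trigger_match_rate`, the toy models, and the trigger values are all hypothetical stand-ins, not taken from the paper.

```python
from typing import Callable, Sequence


def trigger_match_rate(
    predict: Callable[[Sequence[float]], int],
    triggers: Sequence[Sequence[float]],
    expected_labels: Sequence[int],
) -> float:
    """Fraction of trigger inputs on which the model returns the planted label."""
    if not triggers or len(triggers) != len(expected_labels):
        raise ValueError("triggers and expected_labels must be non-empty and equal in length")
    hits = sum(1 for x, y in zip(triggers, expected_labels) if predict(x) == y)
    return hits / len(triggers)


# Toy stand-ins for suspect models: the verifier only ever calls them.
def watermarked_model(x: Sequence[float]) -> int:
    # Hypothetical backdoor: inputs whose first feature is 9.0 map to class 7.
    return 7 if x[0] == 9.0 else int(sum(x) > 0)


def clean_model(x: Sequence[float]) -> int:
    return int(sum(x) > 0)


triggers = [[9.0, -1.0], [9.0, 0.5], [9.0, -20.0], [9.0, 3.0]]
labels = [7, 7, 7, 7]

print(trigger_match_rate(watermarked_model, triggers, labels))  # 1.0
print(trigger_match_rate(clean_model, triggers, labels))        # 0.0
```

A real verifier would compare the match rate against a threshold chosen for a target false positive rate instead of expecting a perfect score.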

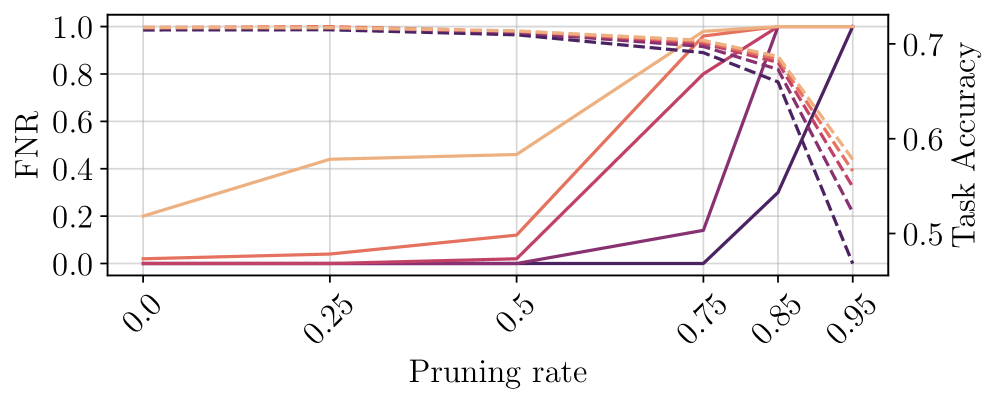

Watermark resilience is typically evaluated against a range of attacks, including model retraining, parameter pruning, quantization, and adversarial input generation. Retraining attacks attempt to remove the watermark by fine-tuning the model on a new dataset, while pruning and quantization aim to eliminate the watermark by reducing model size and precision, respectively. Adversarial attacks craft specific inputs designed to disrupt watermark detection without significantly impacting model accuracy. A robust watermarking scheme must maintain watermark detectability following these manipulations, ideally with minimal degradation in the model’s original performance metrics, such as accuracy and inference speed. Performance preservation is crucial to avoid usability issues that might discourage legitimate use of the watermarked model.
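As an illustration of one attack from that list, the sketch below applies magnitude pruning to a flat list of weights. It is a simplified, assumed version of the attack (real pruning operates per layer on tensors), and `magnitude_prune` is a hypothetical helper name.

```python
from typing import List


def magnitude_prune(weights: List[float], fraction: float) -> List[float]:
    """Zero out the given fraction of weights with the smallest absolute value."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must lie in [0, 1]")
    n_drop = int(len(weights) * fraction)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_drop])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.1]
pruned = magnitude_prune(weights, 0.5)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

A robustness test would then re-run watermark detection on the pruned model and check that both the detection result and the task accuracy hold up.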

BlackCATT: Exposing the Shadows in Federated Learning

BlackCATT is a system designed to identify compromised or malicious actors within a Federated Learning environment. Traditional Federated Learning assumes honest participation, but real-world deployments are vulnerable to attacks where participants collude to steal model information or poison the global model. BlackCATT addresses this vulnerability by implementing a traitor tracing scheme, allowing the identification of specific clients responsible for malicious behavior. This is achieved without requiring access to the internal parameters of the suspect model, only its input-output behavior – hence the “black-box” designation – and operates effectively even when attackers attempt to conceal their actions through collusion. The system aims to provide a practical and scalable solution for maintaining the integrity and security of Federated Learning systems in the face of adversarial threats.

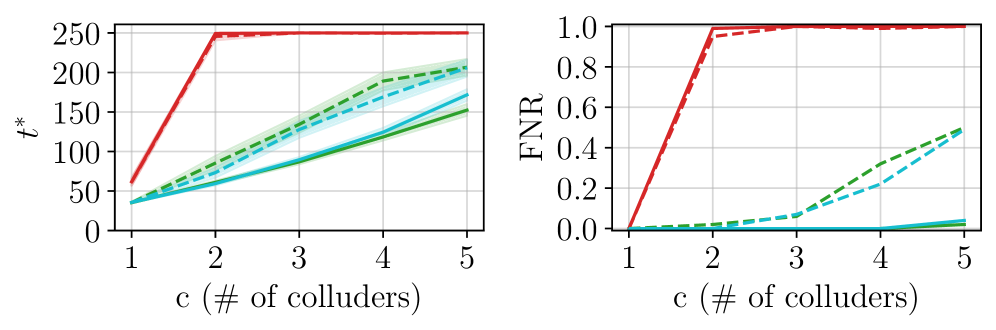

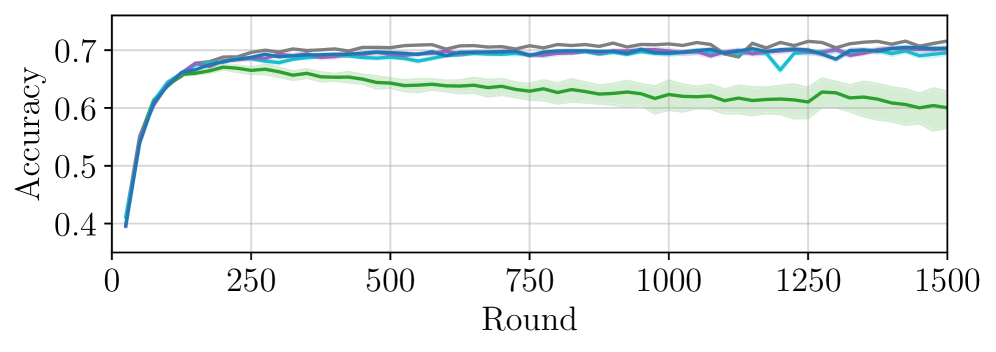

BlackCATT utilizes Tardos Codes as the foundational mechanism for identifying colluding malicious participants in a Federated Learning system. Tardos Codes enable the tracing of compromised models back to their source by embedding a unique fingerprint within each participant’s contribution. However, standard Tardos Code implementation can be susceptible to model drift caused by local model updates and potentially exacerbate collusion. To address this, BlackCATT incorporates Functional Regularization, a technique that constrains the local model updates to remain close to the global model while still allowing for effective learning. This regularization minimizes the impact of drift, maintains the integrity of the watermarks embedded by the Tardos Codes, and effectively limits the ability of colluding parties to mask their malicious behavior through coordinated model manipulation.
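The tracing idea behind Tardos Codes can be sketched in a few lines. The example below is a generic illustration, not BlackCATT's implementation: it assumes the leaked fingerprint bits have already been decoded from the suspect model, uses the symmetric accusation score introduced by Škorić and co-authors, models collusion as a per-position majority vote, and picks the code length, cutoff, and five-sigma threshold purely for demonstration.

```python
import math
import random
from typing import List


def sample_biases(length: int, cutoff: float, rng: random.Random) -> List[float]:
    """Draw per-position biases from the arcsine density on [cutoff, 1 - cutoff]."""
    lo = math.asin(math.sqrt(cutoff))
    hi = math.pi / 2 - lo
    return [math.sin(rng.uniform(lo, hi)) ** 2 for _ in range(length)]


def make_codebook(n_users: int, biases: List[float], rng: random.Random) -> List[List[int]]:
    """Each user gets an independent codeword: bit i is 1 with probability biases[i]."""
    return [[1 if rng.random() < p else 0 for p in biases] for _ in range(n_users)]


def majority_collusion(codewords: List[List[int]]) -> List[int]:
    """Assumed attack: colluders emit the majority bit at every position."""
    return [1 if 2 * sum(col) >= len(codewords) else 0 for col in zip(*codewords)]


def accusation_score(codeword: List[int], leaked: List[int], biases: List[float]) -> float:
    """Symmetric Tardos score: reward agreement with the leak, penalize disagreement."""
    score = 0.0
    for x, y, p in zip(codeword, leaked, biases):
        if y == 1:
            score += math.sqrt((1 - p) / p) if x == 1 else -math.sqrt(p / (1 - p))
        else:
            score += math.sqrt(p / (1 - p)) if x == 0 else -math.sqrt((1 - p) / p)
    return score


# Illustrative parameters only; none of these values come from the paper.
N_USERS, LENGTH, CUTOFF = 20, 2000, 0.01
COLLUDERS = [0, 1, 2]

rng = random.Random(0)
biases = sample_biases(LENGTH, CUTOFF, rng)
codebook = make_codebook(N_USERS, biases, rng)
leaked = majority_collusion([codebook[j] for j in COLLUDERS])

# An innocent user's score has mean 0 and variance LENGTH, so accuse above 5 sigma.
threshold = 5.0 * math.sqrt(LENGTH)
scores = [accusation_score(cw, leaked, biases) for cw in codebook]
accused = [j for j, s in enumerate(scores) if s > threshold]
print("accused users:", accused)
```

Under these assumptions each colluder's expected score grows linearly with the code length while an innocent user's score only fluctuates on the order of its square root, which is why a long enough code separates the two groups.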

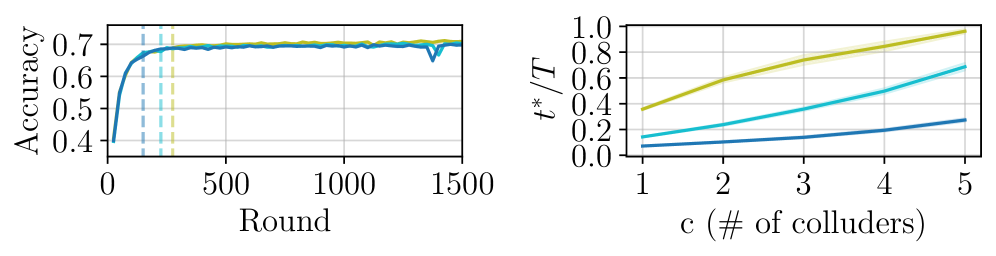
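Functional regularization, as described above, keeps a watermarked model close to the global model. One common reading of "functional" is that closeness is measured on model outputs instead of on weights; the sketch below follows that reading with toy linear models. The helper names, the probe inputs, and the weighting factor `lam` are assumptions for illustration, and the paper's exact loss may differ.

```python
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def linear_model(weights: Sequence[float]) -> Model:
    """Toy model: a dot product between fixed weights and the input."""
    def f(x: Sequence[float]) -> float:
        return sum(w * xi for w, xi in zip(weights, x))
    return f


def functional_penalty(local_f: Model, global_f: Model, probes: List[Sequence[float]]) -> float:
    """Mean squared gap between the two models' outputs on the probe inputs."""
    return sum((local_f(x) - global_f(x)) ** 2 for x in probes) / len(probes)


def regularized_loss(task_loss: float, local_f: Model, global_f: Model,
                     probes: List[Sequence[float]], lam: float) -> float:
    """Task (or watermark) loss plus a penalty for drifting from the global model."""
    return task_loss + lam * functional_penalty(local_f, global_f, probes)


global_f = linear_model([1.0, 2.0])
local_f = linear_model([1.0, 2.5])  # Drifted copy, e.g. after watermark embedding.
probes = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

penalty = functional_penalty(local_f, global_f, probes)
total = regularized_loss(0.3, local_f, global_f, probes, lam=0.5)
print(round(penalty, 4))  # 0.1667
print(round(total, 4))    # 0.3833
```

Raising `lam` trades watermark strength for fidelity to the global model; setting it to zero recovers the unregularized loss.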

BlackCATT optimizes watermark embedding and detection through Adversarial Perturbation of the Trigger Set, a process designed to maximize the distinction between legitimate and malicious model updates. This is coupled with Task Arithmetic, a method for aggregating model updates from participating clients during Federated Learning. Performance evaluations demonstrate a False Positive Rate (FPR) of less than 0.01, indicating a low incidence of incorrectly identifying legitimate participants as malicious. This empirically observed FPR aligns closely with the theoretically established upper bound of 10⁻², validating the scheme’s robustness and accuracy in identifying colluding adversaries.

Validating the System: Benchmarks and Empirical Results

VGG16 and ResNet18 are commonly utilized convolutional neural network architectures employed as base models in the evaluation of watermarking scheme robustness. These models, pre-trained on ImageNet, provide a standardized and well-understood foundation for assessing watermark detectability and resilience against attacks. Their established performance characteristics allow for consistent comparisons between different watermarking techniques, and their relatively moderate size facilitates efficient experimentation. The use of these architectures ensures that performance variations observed are attributable to the watermarking scheme itself, rather than inherent differences in model capacity or training procedures.

Evaluation of the BlackCATT watermarking scheme utilizes the CIFAR-10 and CIFAR-100 datasets to establish a standardized performance baseline. CIFAR-10 consists of 60,000 32×32 color images in 10 classes, with 6,000 images per class. CIFAR-100 expands upon this with 100 classes, each containing 600 images. These datasets are widely adopted within the machine learning community for image classification tasks, enabling consistent and comparable results across different watermarking methodologies. The datasets’ defined structure and accessibility facilitate rigorous testing and validation of the BlackCATT scheme’s performance characteristics.

Experimental results demonstrate BlackCATT’s capacity for accurate identification of colluding attackers without significant degradation to model performance. Specifically, BlackCATT consistently achieves successful traitor tracing even in scenarios involving multiple colluding data owners, a capability lacking in prior watermarking schemes susceptible to collusion-based attacks. This resilience to collusion is a key differentiator, as previous methods often failed when a sufficient number of data owners cooperated to remove or disable the watermark, while BlackCATT maintains its tracing accuracy under these compromised conditions.

Beyond Protection: The Future of Collaborative Intelligence

Decentralized machine learning, while promising enhanced privacy and scalability, fundamentally relies on collaboration between numerous parties – a dynamic that necessitates robust mechanisms for trust and accountability. Watermarking and traitor tracing techniques address this critical need by embedding identifying signatures within shared models, allowing origin verification and the detection of malicious actors who attempt to redistribute or misuse intellectual property. These systems aren’t merely about protection; they actively enable collaboration by mitigating the risks associated with sharing valuable models in an open environment. Without such safeguards, the potential for model theft, unauthorized modification, and the erosion of confidence would severely limit the adoption of federated learning, particularly in sensitive domains like healthcare and finance, where data security and provenance are paramount. Consequently, advancements in these areas are not just technical refinements, but essential building blocks for realizing the full benefits of a truly collaborative and trustworthy machine learning ecosystem.

The capacity for secure model sharing and intellectual property protection fundamentally expands the applicability of federated learning to domains previously considered inaccessible. By embedding robust watermarks and utilizing traitor tracing, machine learning models can be distributed and collaboratively improved without exposing the underlying algorithms or training data. This is particularly crucial for sensitive applications like healthcare, finance, and national security, where data privacy and model ownership are paramount. Consequently, organizations can leverage the collective intelligence of decentralized datasets, fostering innovation while mitigating risks associated with data breaches and unauthorized model replication. This protective framework not only incentivizes participation in federated learning initiatives but also establishes a trustworthy ecosystem for collaborative artificial intelligence development.

The ongoing evolution of adversarial attacks necessitates a shift towards dynamic, rather than static, watermarking techniques for machine learning models. Current watermarking schemes, while offering initial protection, are vulnerable to increasingly sophisticated removal or forgery attempts. Future investigations should prioritize the development of adaptive watermarks – those capable of responding to detected attacks by altering their embedding strategy or strengthening their resilience. Crucially, these adaptive schemes must maintain model performance across a spectrum of architectures, from convolutional neural networks to transformers, and avoid introducing noticeable degradation in accuracy or efficiency. This requires exploring novel embedding functions, robust detection algorithms, and potentially, the integration of game-theoretic approaches where the watermark and attack strategies co-evolve, ultimately ensuring the long-term security and trustworthiness of decentralized machine learning systems.

The pursuit of robust federated learning, as demonstrated by BlackCATT, inherently involves challenging established boundaries. This work doesn’t simply accept the premise of secure aggregation; it actively probes for vulnerabilities, specifically collusion attacks. It’s a direct application of systematically testing limits to understand a system’s resilience. As John McCarthy observed, “It is perhaps a truism that the best way to understand something is to try and build it.” BlackCATT embodies this principle; by attempting to trace malicious participants, the researchers gain a deeper understanding of how collusion can manifest and, crucially, how to defend against it. The functional regularization component is not merely a safeguard, but an intellectual experiment: a deliberate alteration to observe the resulting behavior and refine the model’s stability.

What’s Next?

BlackCATT represents an exploit of comprehension – a successful imposition of traceability onto a system fundamentally designed to obscure it. Yet, the very success highlights the inevitable arms race. The current scheme, while addressing collusion, operates within a defined threat model. Future work must actively probe the boundaries of that model – what happens when the attackers understand the functional regularization, or begin to subtly manipulate the watermark embedding process itself? The elegance of a black-box approach is always tempered by the knowledge that every box has a surface, and surfaces can be analyzed.

A particularly intriguing direction lies in moving beyond simple detection to attribution. Tracing a colluding group is useful, but identifying the instigator – the source of the malicious intent – is far more potent. This demands a refinement of the watermark signal, perhaps leveraging differential privacy techniques to both protect individual contributions and allow for the isolation of the primary attacker. The challenge isn’t just embedding a signal, but embedding a forensic trail.

Ultimately, BlackCATT’s true value may lie not in its immediate defensive capabilities, but in its function as a stress test. Federated learning, by its very nature, invites adversarial thinking. Each successful defense simply reveals the next, more sophisticated, point of failure. The pursuit of perfect security is, of course, futile. The real goal is a continual cycle of exploitation and counter-exploitation – a constant refinement of understanding, achieved by systematically dismantling and rebuilding the system.

Original article: https://arxiv.org/pdf/2602.12138.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-15 14:39