Author: Denis Avetisyan

Researchers are exploring how artificial intelligence can automatically simplify complex cybersecurity vulnerability descriptions, improving accessibility for a wider audience.

This review examines the application of automatic text simplification techniques, leveraging large language models, to enhance the clarity of Common Vulnerabilities and Exposures (CVE) reports while preserving critical meaning.

While cybersecurity literacy is increasingly vital, technical reports often present barriers to understanding for non-expert audiences. This paper, ‘Automatic Simplification of Common Vulnerabilities and Exposures Descriptions’, investigates the potential of automatic text simplification (ATS) – leveraging large language models – to enhance the accessibility of cybersecurity vulnerability reports. Our findings reveal that while LLMs can superficially simplify text, preserving meaning remains a significant challenge, necessitating rigorous human evaluation-demonstrated through a novel dataset of CVE descriptions and expert feedback. How can ATS techniques be refined to reliably communicate complex cybersecurity information without sacrificing critical technical accuracy?

The Erosion of Clarity in Cybersecurity Communication

A pervasive issue within cybersecurity is the reliance on highly technical language in threat reports and vulnerability assessments. This creates a significant barrier to understanding for individuals lacking specialized expertise, extending beyond the general public to include managers, policymakers, and even some IT professionals. The frequent use of acronyms, complex protocols, and nuanced technical details obscures the actual risk posed by vulnerabilities, hindering effective decision-making and proactive defense strategies. Consequently, organizations remain vulnerable not because of a lack of solutions, but because the severity and implications of threats are not clearly communicated, leading to delayed responses or, worse, complete inaction. This communication gap effectively transforms technical complexity into a security weakness, amplifying the potential impact of cyberattacks.

A robust cybersecurity posture relies heavily on the ability to effectively communicate potential threats, yet current practices frequently impede this crucial process. While technical details are essential for remediation, the presentation of these details often prioritizes specialist understanding over broad accessibility, leaving a significant portion of the population-and even many within organizations-unprepared to recognize or respond to emerging risks. This communication gap isn’t merely a matter of convenience; it directly undermines proactive defense strategies, as individuals unable to grasp the nature of a threat are less likely to adopt preventative measures or report suspicious activity. Consequently, vulnerabilities remain unaddressed, and the potential for successful attacks increases, highlighting the urgent need for translating complex cybersecurity information into clear, concise, and universally understandable language.

The sheer volume and technical depth of vulnerability disclosures, such as those cataloged in the Common Vulnerabilities and Exposures (CVE) list, present a substantial challenge to effective cybersecurity. While CVEs are invaluable for security professionals, their detailed, often highly technical descriptions are largely inaccessible to broader audiences – including decision-makers, end-users, and even many IT personnel. This complexity hinders proactive defense, as a lack of understanding prevents timely patching and mitigation efforts. Simplifying these reports-translating intricate technical details into clear, actionable language-is not merely a matter of convenience, but a critical step in empowering a wider range of stakeholders to participate in strengthening cybersecurity posture and reducing overall risk. Increased accessibility to vulnerability information fosters a more informed and resilient digital ecosystem, ultimately minimizing the potential impact of emerging threats.

Automated Simplification: A Logical Approach

Automatic Text Simplification utilizes Large Language Models (LLMs) to transform complex textual content into a more readily understandable form. This process involves LLMs analyzing source text and generating a rewritten version with reduced lexical and syntactic complexity. The core function relies on the LLM’s capacity for natural language generation and understanding, allowing it to identify and replace difficult words and phrases with simpler alternatives, shorten sentences, and restructure complex clauses. LLMs are trained on large datasets of both complex and simplified text, enabling them to learn patterns of simplification and apply those patterns to new input texts, ultimately aiming to improve accessibility for a wider range of readers.

Evaluation of automatic text simplification systems utilizes a combination of metrics focusing on both quality and readability. D-SARI, a commonly used metric, assesses simplification quality by measuring the overlap of simplified text with human-generated reference simplifications, factoring in deletion, addition, and substitution operations. BERTScore leverages contextual embeddings from BERT to evaluate semantic similarity between the original and simplified text. Readability is frequently quantified using the Flesch-Kincaid Grade Level, which estimates the years of education a reader needs to understand the text; lower scores indicate increased readability. These metrics, used in conjunction, provide a comprehensive assessment of a model’s ability to both simplify complex language and maintain its original meaning.

Current automatic text simplification systems frequently utilize large language models, such as GPT-4o, to reduce the complexity of source text. Empirical results demonstrate a measurable reduction in readability metrics; specifically, GPT-4o has been shown to decrease the Flesch-Kincaid Grade Level from 12.45 to 9.49. However, the success of these models is contingent on maintaining semantic equivalence between the original and simplified text. Assessment of semantic similarity is commonly performed using models like MeaningBERT and Sentence-BERT, which calculate the degree of overlap in meaning through vector embeddings and cosine similarity calculations.

GemmaAgent: A Specialized System for Cybersecurity

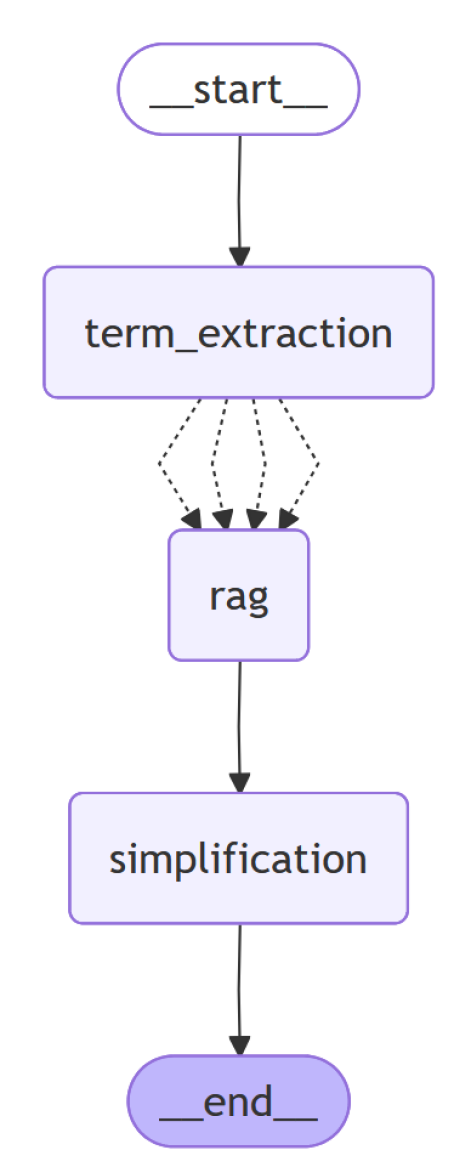

The GemmaAgent is an agent-based system designed for the specific task of simplifying cybersecurity text. It leverages the Gemma 3:4b language model as its core component, enabling automated text rewriting while aiming to reduce complexity. This agent-based approach allows for modularity and potential integration with other cybersecurity tools and datasets. The system is not a general-purpose simplification tool, but rather is tailored to the unique requirements and terminology present within cybersecurity documentation and reports, allowing for focused performance gains in this specific domain.

The GemmaAgent utilizes Named Entity Recognition (NER) through the AITSecNER module to specifically identify and retain crucial cybersecurity terminology during text simplification. AITSecNER is designed to detect entities such as vulnerability names (e.g., CVE-2023-1234), attack techniques (e.g., phishing, SQL injection), malware families (e.g., Emotet, WannaCry), and organizational references within the input text. By recognizing these entities, the system ensures they are not altered or generalized during the simplification process, maintaining the technical accuracy and relevance of the rewritten text for cybersecurity professionals and analysts. This preservation of key terms is critical for maintaining the informational integrity of the simplified content.

Retrieval Augmented Generation (RAG) is a core component of the GemmaAgent simplification process, functioning by incorporating information retrieved from cybersecurity-specific lexica and dictionaries during text rewriting. This grounding in specialized knowledge ensures that simplified outputs maintain technical accuracy and contextual relevance, avoiding misrepresentation of critical cybersecurity concepts. The implementation of RAG directly contributes to the system’s demonstrated performance, as measured by a D-SARI score of 0.14, indicating a quantifiable level of simplification quality while preserving essential information.

Evaluating the Impact on Cybersecurity Accessibility

To rigorously assess the performance of automatic text simplification systems specifically within the challenging domain of cybersecurity, a novel semi-synthetic dataset was meticulously constructed from Common Vulnerabilities and Exposures (CVE) descriptions. Recognizing the lack of standardized benchmarks for evaluating simplification techniques applied to technical documentation, this dataset provides a controlled environment for comparison. The approach involved carefully simplifying existing CVE text while preserving critical technical details, creating a corpus that balances readability with the accurate conveyance of security information. This allows for objective measurement of how well different systems can render complex vulnerabilities accessible to a broader audience – including security professionals and those with varying levels of technical expertise – without sacrificing crucial context needed for effective mitigation and patching.

Testing of the GemmaAgent against a newly constructed dataset of simplified Common Vulnerabilities and Exposures (CVE) descriptions reveals a notable capacity to generate text that balances readability with the preservation of critical cybersecurity details. This assessment utilized MeaningBERT, a metric designed to evaluate semantic similarity, and showed the GemmaAgent achieving a marginally improved score compared to GPT-4o. This suggests the agent effectively distills complex technical language without sacrificing the core meaning necessary for security professionals to understand and address vulnerabilities, offering a promising avenue for improving accessibility to crucial security information and potentially accelerating response times.

Initial assessments of automatically simplified cybersecurity vulnerability descriptions revealed a nuanced relationship between readability and accuracy. Of the first 40 descriptions generated, only five were immediately accepted by human evaluators, highlighting the challenges in balancing simplification with the preservation of critical technical details. The remaining 35 required revisions to ensure clarity and maintain the original meaning, demonstrating the need for iterative refinement in automated text simplification systems. Importantly, the semi-synthetic dataset used for training proved particularly effective in meaning preservation, consistently achieving higher scores on relevant metrics; this suggests that carefully constructed, benchmark datasets are crucial for developing tools that can make complex cybersecurity information accessible to a wider audience without sacrificing essential technical precision.

The pursuit of automatically simplifying complex cybersecurity descriptions, as detailed in this study, echoes a fundamental tenet of mathematical rigor. Andrey Kolmogorov once stated, “The most important thing in science is not to be afraid to ask questions.” This sentiment perfectly aligns with the paper’s core idea of challenging the accessibility of technical reports. The research isn’t merely about making information easier to digest; it’s about questioning the existing barriers to understanding. By employing large language models for text simplification, the work rigorously investigates the trade-off between brevity and meaning preservation, striving for solutions that are not just functional, but provably accurate – a principle Kolmogorov would undoubtedly champion. The focus on human evaluation further solidifies this commitment to verifiable results, ensuring that simplification doesn’t come at the cost of technical correctness.

What’s Next?

The pursuit of automated simplification, as demonstrated with respect to cybersecurity advisories, reveals a fundamental tension. The algorithms function-they produce shorter text-but whether that text means the same thing remains stubbornly resistant to algorithmic determination. Current metrics, focused on lexical overlap or surface-level comparisons, offer only a pale imitation of semantic equivalence. A truly robust evaluation necessitates formal methods – a provable preservation of logical consequence, not merely a high score on a readability assessment.

Retrieval Augmented Generation, while promising, merely shifts the burden of correctness. The system’s output is only as trustworthy as the source material it consults. Garbage in, simplified garbage out. The field must confront the inherent ambiguity within natural language itself. To claim ‘simplification’ implies a singular, objective ‘simple’ form, an illusion that belies the nuances of interpretation.

Future work must move beyond empirical evaluations, however numerous the human subjects. The goal is not to create text that appears more understandable, but text that is demonstrably, logically equivalent to its original. The elegance of an algorithm lies not in its performance on a benchmark, but in the mathematical certainty of its correctness. Only then will ‘simplification’ transcend mere expediency and approach a form of digital fidelity.

Original article: https://arxiv.org/pdf/2602.11982.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- My Favorite Coen Brothers Movie Is Probably Their Most Overlooked, And It’s The Only One That Has Won The Palme d’Or!

- First Glance: “Wake Up Dead Man: A Knives Out Mystery”

- Will there be a Wicked 3? Wicked for Good stars have conflicting opinions

- The 1 Scene That Haunts Game of Thrones 6 Years Later Isn’t What You Think

- LINK PREDICTION. LINK cryptocurrency

- Landman Recap: The Dream That Keeps Coming True

- Exclusive: First Look At PAW Patrol: The Dino Movie Toys

- 3 Best Movies To Watch On Prime Video This Weekend (Dec 13-14)

- Decoding Cause and Effect: AI Predicts Traffic with Human-Like Reasoning

- Hell Let Loose: Vietnam Gameplay Trailer Released

2026-02-15 13:09