Author: Denis Avetisyan

A new framework dramatically reduces data acquisition time for millimeter-wave and terahertz channel sounding, enabling more practical large-scale measurements for future 6G wireless systems.

This review details a sparse sampling approach and a refined algorithm for accurate multipath component extraction in integrated sensing and communication (ISAC) scenarios.

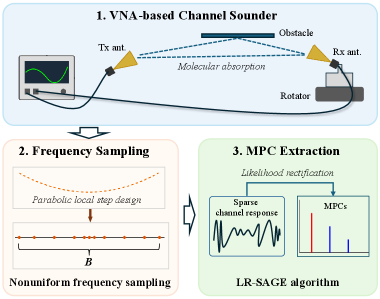

Traditional channel sounding techniques struggle to efficiently acquire the massive datasets required for realizing the full potential of artificial intelligence (AI) and integrated sensing and communication (ISAC) in 6G systems. This paper, ‘Enabling Large-Scale Channel Sounding for 6G: A Framework for Sparse Sampling and Multipath Component Extraction’, introduces a novel framework based on parabolic frequency sampling and a likelihood-rectified space-alternating generalized expectation-maximization (LR-SAGE) algorithm to dramatically reduce data acquisition time while maintaining accurate multipath parameter estimation. Specifically, this approach enables tens to hundreds of times more channel data to be collected within the same measurement duration, achieving significant reductions in data volume and post-processing complexity. Will this efficient channel sounding framework prove critical in constructing the massive datasets needed to fully unlock the capabilities of AI-native 6G systems and beyond?

The Inevitable Decay of Signal Fidelity

Terahertz (THz) communication systems are envisioned as a pivotal technology for achieving exceptionally high data rates, potentially exceeding those of current wireless standards. However, realizing this potential is significantly challenged by the unique characteristics of the THz spectrum itself. Specifically, THz signals are strongly affected by molecular absorption – certain molecules in the atmosphere readily absorb THz radiation, attenuating signal strength – and by severe multipath effects. These multipath effects arise because THz waves, due to their short wavelengths, are highly susceptible to reflection, diffraction, and scattering from surfaces and objects, creating numerous signal copies that interfere with one another. Accurately characterizing the communication channel – the path the signal takes – becomes extraordinarily difficult in this environment, hindering the development of reliable and efficient THz communication systems.

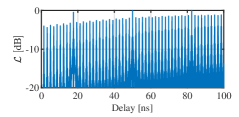

Delay ambiguity presents a significant challenge in terahertz (THz) channel sounding. As signals propagate, they reflect off surrounding objects, creating multiple signal paths. When a reflection arrives later than the system’s maximum measurable time window, it is not simply lost: it wraps around and appears at a shorter, incorrect delay, where it may be mistaken for noise or, more critically, overlap with genuine early-arriving paths. The receiver then cannot distinguish the true early paths from these delayed, yet valid, reflections. Consequently, channel estimation (the process of characterizing the propagation environment) becomes severely compromised. The limited ability to place late-arriving reflections correctly introduces substantial errors into channel models and ultimately restricts the achievable data rates and communication range.

Traditional channel sounding techniques rely on capturing and analyzing signal reflections to build accurate models of wireless environments. However, THz channel sounding faces a significant limitation in its restricted unambiguous delay range (UDR). The UDR is the maximum delay span over which multipath components can be placed without ambiguity; for a uniformly stepped frequency sweep it equals the reciprocal of the frequency step, and reflections arriving beyond it appear as aliases at shorter delays, corrupting the channel estimate. Because covering a wide THz band with a fine frequency step is time-consuming, and multipath propagation creates numerous reflections, path delays can easily exceed the UDR of a practical sweep. Channel models built from such measurements become inaccurate, potentially leading to suboptimal system design and performance. Addressing this limitation requires either extending the UDR or mitigating the impact of ambiguous delays, which is crucial for realizing the full potential of THz communication.
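The wrap-around can be made concrete with a short Python sketch. It assumes a uniformly stepped sweep, for which the UDR is the reciprocal of the frequency step. The 10 MHz step, the 130 ns path delay, and all function names are illustrative choices, not values from the paper. Frequencies are expressed as offsets from the start of the sweep to keep the arithmetic simple.

```python
import cmath
import math


def unambiguous_delay_range(freq_step_hz):
    """UDR in seconds of a uniformly stepped sweep (reciprocal of the step)."""
    return 1.0 / freq_step_hz


def apparent_delay(true_delay_s, freq_step_hz):
    """Delay at which a path shows up after wrapping into the UDR."""
    return math.fmod(true_delay_s, unambiguous_delay_range(freq_step_hz))


def sweep_response(delay_s, freq_step_hz, num_points):
    """Samples of a unit-gain path at frequency offsets k * freq_step_hz."""
    return [
        cmath.exp(-2j * math.pi * k * freq_step_hz * delay_s)
        for k in range(num_points)
    ]


freq_step_hz = 10e6      # illustrative sweep step
late_path_s = 130e-9     # illustrative path delay, longer than the UDR

udr_s = unambiguous_delay_range(freq_step_hz)
alias_s = apparent_delay(late_path_s, freq_step_hz)

# The late path and its alias produce the same sweep samples.
h_late = sweep_response(late_path_s, freq_step_hz, 64)
h_alias = sweep_response(alias_s, freq_step_hz, 64)
max_diff = max(abs(a - b) for a, b in zip(h_late, h_alias))

print(f"UDR = {udr_s * 1e9:.1f} ns, alias at {alias_s * 1e9:.1f} ns")
print(f"largest sample difference = {max_diff:.2e}")
```

With these numbers the UDR is 100 ns (about 30 m of path length), and a path at 130 ns yields the same sweep samples as one at 30 ns, so no amount of post-processing on a uniform sweep can tell them apart.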

The successful deployment of terahertz communication hinges on overcoming significant challenges to channel fidelity. Molecular absorption and multipath propagation introduce distortions that severely limit reliable data transmission, but these aren’t insurmountable obstacles. Accurate channel estimation (determining how a signal changes as it travels) is paramount, yet complicated by the very nature of terahertz waves. Researchers are actively developing advanced signal processing techniques and novel array architectures to combat these distortions and extend the range over which signals can be accurately reconstructed. Mitigation strategies include sophisticated algorithms for separating direct paths from reflections, as well as methods for characterizing and compensating for frequency-selective fading. Ultimately, resolving these issues will unlock the immense bandwidth available at terahertz frequencies, paving the way for data rates far exceeding those of current wireless technologies and enabling applications like real-time holographic communication and ultra-high-resolution imaging.

Sparse Sampling: A Necessary Reduction

Sparse sampling techniques, notably compressive sensing (CS), sidestep the sampling density that the Nyquist-Shannon theorem prescribes for general band-limited signals, allowing accurate reconstruction from fewer samples than traditionally required. This is achieved by exploiting signal sparsity: many signals of practical interest can be represented with only a small number of nonzero coefficients in a suitable basis. CS relies on signal models that ensure this sparsity, combined with measurement matrices designed to be incoherent with the sparsifying basis. Reconstruction algorithms, such as $\ell_1$ minimization, then identify the sparse representation from the limited set of measurements, recovering the original signal with high fidelity despite the reduced sampling rate. This approach is particularly advantageous where acquiring samples is costly or time-consuming, or where signals are inherently sparse or compressible.
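As a rough illustration of the reconstruction step, the sketch below runs the iterative shrinkage-thresholding algorithm (ISTA), one standard solver for the $\ell_1$-regularized least-squares problem, on a small synthetic example. The Gaussian measurement matrix, the problem sizes, the regularization weight `lam`, and all function names are assumptions made for this example. None of it is taken from the paper.

```python
import random


def soft_threshold(value, threshold):
    """Shrink a real value toward zero by `threshold`."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def matvec(matrix, vector):
    """Compute A @ x for a list-of-rows matrix."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def rmatvec(matrix, vector):
    """Compute A^T @ v for a list-of-rows matrix."""
    return [
        sum(matrix[i][j] * vector[i] for i in range(len(matrix)))
        for j in range(len(matrix[0]))
    ]


def lasso_objective(matrix, y, x, lam):
    """0.5 * ||y - A x||^2 + lam * ||x||_1."""
    residual = [yi - ai for yi, ai in zip(y, matvec(matrix, x))]
    return 0.5 * sum(r * r for r in residual) + lam * sum(abs(v) for v in x)


def ista(matrix, y, lam, num_iters):
    """Proximal-gradient iterations for the l1-regularized least-squares cost."""
    # The squared Frobenius norm upper-bounds the largest eigenvalue of A^T A,
    # so 1 / lipschitz is a safe, if conservative, step size.
    lipschitz = sum(a * a for row in matrix for a in row)
    x = [0.0] * len(matrix[0])
    for _ in range(num_iters):
        residual = [yi - ai for yi, ai in zip(y, matvec(matrix, x))]
        gradient_step = rmatvec(matrix, residual)
        x = [
            soft_threshold(xj + gj / lipschitz, lam / lipschitz)
            for xj, gj in zip(x, gradient_step)
        ]
    return x


random.seed(0)
num_measurements, signal_length = 20, 40   # fewer measurements than unknowns
matrix = [
    [random.gauss(0.0, 1.0) for _ in range(signal_length)]
    for _ in range(num_measurements)
]
x_true = [0.0] * signal_length
for index, value in ((3, 1.5), (17, -2.0), (31, 1.0)):   # three nonzero taps
    x_true[index] = value
y = matvec(matrix, x_true)

lam = 0.1
x_hat = ista(matrix, y, lam, num_iters=500)
start_cost = lasso_objective(matrix, y, [0.0] * signal_length, lam)
final_cost = lasso_objective(matrix, y, x_hat, lam)
print(f"cost: {start_cost:.3f} -> {final_cost:.3f}")
```

Because the step size is deliberately conservative, convergence is slow. The sketch guarantees only that the cost does not increase. It does not guarantee that the three nonzero entries are recovered exactly.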

THz channel sounding benefits from sparse sampling techniques due to the typically sparse nature of the channel impulse response; most THz signals propagate via a limited number of dominant paths, including direct line-of-sight and a few strong reflections. This inherent sparsity allows for accurate channel estimation with fewer samples than traditional methods, which assume a fully populated impulse response. Exploiting this characteristic requires specialized algorithms and waveform designs optimized for sparse recovery, rather than simply increasing the oversampling rate. The degree of sparsity is influenced by the environment – highly reflective spaces will exhibit lower sparsity – and therefore, adaptive sampling strategies are crucial for maximizing efficiency and minimizing the number of required measurements.

Coprime frequency sampling and nested frequency sampling are techniques used to broaden the effective frequency aperture in THz channel sounding, thereby improving delay resolution. Coprime sampling interleaves two uniform frequency combs whose step sizes are coprime multiples of a base step. Each comb on its own is undersampled, but the pairwise differences between their sample positions fill in a dense set of frequency lags, which is what mitigates aliasing. Nested frequency sampling pursues the same goal by combining a dense inner grid with a sparse outer grid. Both methods cover a wider aperture with the same number of frequency points, which matters for practical THz measurements where sweep time grows with the number of points and sampling is constrained by hardware capabilities. This enhanced resolution is particularly important for accurately characterizing multipath components and resolving closely spaced paths in THz channels.
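The lag-filling property of a coprime layout can be checked with a few lines of Python. The sketch below uses one common layout from the coprime-array literature: a comb with step M and N points, plus a comb with step N and 2M points. Treating the positions as frequency-point indices is an assumption of this example. The values M = 2 and N = 3 and the function names are purely illustrative.

```python
def coprime_indices(m, n):
    """Union of two combs: step m with n points, step n with 2*m points."""
    comb_a = {m * k for k in range(n)}
    comb_b = {n * k for k in range(2 * m)}
    return sorted(comb_a | comb_b)


def difference_lags(indices):
    """All non-negative pairwise differences between sample positions."""
    return sorted({abs(a - b) for a in indices for b in indices})


def contiguous_lag_extent(lags):
    """Largest L such that every lag 0..L is present."""
    present = set(lags)
    extent = 0
    while extent + 1 in present:
        extent += 1
    return extent


indices = coprime_indices(2, 3)
lags = difference_lags(indices)
extent = contiguous_lag_extent(lags)

print("sample positions:", indices)   # [0, 2, 3, 4, 6, 9]
print("available lags:  ", lags)      # [0, 1, 2, 3, 4, 5, 6, 7, 9]
print("contiguous up to:", extent)    # 7
```

Six points spread over ten grid positions provide every lag from 0 to 7, whereas six adjacent uniformly spaced points would provide only lags 0 to 5.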

The integration of coprime and nested frequency sampling techniques with optimized algorithms yields substantial reductions in THz channel sounding measurement time and computational complexity. Traditional channel sounding requires a large number of frequency samples to adequately resolve multipath components; however, these sparse sampling methods effectively increase the frequency aperture without a proportional increase in the number of required samples. This is achieved by leveraging the inherent sparsity of the THz channel impulse response and employing reconstruction algorithms, such as $\ell_1$ minimization, that can accurately estimate the channel characteristics from a limited number of measurements. Consequently, data acquisition time is decreased, and the computational burden associated with channel estimation and processing is significantly lessened, making real-time or near-real-time THz channel characterization more feasible.

Parabolic Sampling: Extending the Reach of Measurement

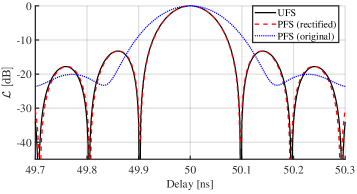

Parabolic frequency sampling (PFS) is a non-uniform sampling technique engineered to address the limitations of traditional uniform sampling in scenarios requiring extended delay range estimation. Conventional uniformly stepped sweeps are bound by the sampling theorem: with a frequency step $\Delta f$, the maximum unambiguous delay is $1/\Delta f$, so thinning the sweep to save time directly shortens the delay window. PFS circumvents this limitation by distributing frequency samples according to a parabolic function, extending the effective unambiguous delay range without increasing the total number of samples. The resulting pattern has a spacing between samples that decreases as frequency increases, which allows accurate reconstruction of signals with longer delays than uniformly spaced samples permit. Consequently, PFS enables reliable channel parameter estimation in environments with significant multipath propagation, where delayed reflections can introduce ambiguity if not properly resolved.
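The effect of a non-uniform grid on the delay alias can be illustrated numerically. The paper’s exact PFS grid is not reproduced here. The sketch assumes a simple quadratic mapping, f_n = B(2u - u^2) with u = n/(N-1), chosen only because its spacing shrinks toward the top of the band as described above. The 10 GHz span, the 65 points, and the function names are likewise illustrative. It compares the normalized ambiguity level of the two grids at the delay where the uniform grid has its first alias.

```python
import cmath
import math


def uniform_grid(span_hz, num_points):
    """Equally spaced frequency offsets covering [0, span_hz]."""
    step = span_hz / (num_points - 1)
    return [n * step for n in range(num_points)]


def parabolic_grid(span_hz, num_points):
    """Assumed quadratic grid on [0, span_hz]; spacing shrinks with frequency."""
    last = num_points - 1
    return [span_hz * (2 * (n / last) - (n / last) ** 2) for n in range(num_points)]


def ambiguity(freqs_hz, delay_s):
    """Normalized response at `delay_s` to a path at zero delay (1.0 = full alias)."""
    total = sum(cmath.exp(-2j * math.pi * f * delay_s) for f in freqs_hz)
    return abs(total) / len(freqs_hz)


span_hz = 10e9
num_points = 65

# First alias of the uniform grid: the reciprocal of its frequency step.
alias_delay_s = (num_points - 1) / span_hz

uniform_level = ambiguity(uniform_grid(span_hz, num_points), alias_delay_s)
parabolic_level = ambiguity(parabolic_grid(span_hz, num_points), alias_delay_s)

print(f"uniform grid:   {uniform_level:.3f}")
print(f"parabolic grid: {parabolic_level:.3f}")
```

At this delay the uniform grid returns a full-height alias (1.0), while the assumed parabolic grid returns about 0.19. The check covers a single delay only. A fuller evaluation would scan the whole delay range of interest for residual sidelobes.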

Parabolic frequency sampling (PFS) utilizes the Poisson summation formula to address the issue of delay ambiguity in channel sounding. This formula allows for the strategic aliasing of multipath components that would normally fall outside the desired frequency band. By carefully selecting the sampling frequencies according to a parabolic distribution, PFS effectively maps these distant, delayed replicas of the transmitted signal into the sampled spectrum. This removes the need for extremely high sampling rates: rather than resolving these distant components directly, the method incorporates their energy into the desired signal bandwidth for accurate channel parameter estimation. The mathematical foundation of this technique ensures that the aliased components contribute constructively to the channel impulse response reconstruction, rather than introducing artifacts or inaccuracies.

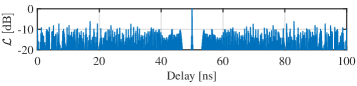

Parabolic frequency sampling (PFS) is paired with the space-alternating generalized expectation-maximization (SAGE) algorithm to enable robust and accurate recovery of channel parameters in multipath environments. SAGE, a parameter estimation technique, benefits from the extended unambiguous delay range provided by PFS, allowing for precise determination of the time-of-arrival, amplitude, and phase of individual multipath components. The combination facilitates reliable channel characterization, even in scenarios with significant delays or complex reflections, by resolving and separating these components for accurate estimation. This approach offers a significant advantage over traditional uniform sampling methods, particularly in bandwidth-limited or computationally constrained applications.
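The alternating structure of SAGE can be sketched for the simplest case, in which each path has only a delay and a complex gain. This is a generic, delay-only illustration on noiseless synthetic data. It does not implement the likelihood rectification of LR-SAGE or the paper’s sampling grid. The function names, the 32-point uniform sweep, and the two test paths (placed exactly on the search grid) are assumptions of this example.

```python
import cmath
import math


def steering(freq_offsets_hz, delay_s):
    """Frequency response of a unit-gain path with the given delay."""
    return [cmath.exp(-2j * math.pi * f * delay_s) for f in freq_offsets_hz]


def sage_delay_gain(samples, freq_offsets_hz, delay_grid_s, num_paths, num_iters=3):
    """Estimate (delay, complex gain) per path by updating one path at a time."""
    paths = [(0.0, 0j)] * num_paths
    for _ in range(num_iters):
        for current in range(num_paths):
            # E-step: subtract the current estimates of all other paths.
            residual = list(samples)
            for other, (delay_s, gain) in enumerate(paths):
                if other != current:
                    response = steering(freq_offsets_hz, delay_s)
                    residual = [r - gain * s for r, s in zip(residual, response)]
            # M-step: grid search for the delay with the strongest correlation.
            best_delay, best_gain = delay_grid_s[0], 0j
            for candidate in delay_grid_s:
                response = steering(freq_offsets_hz, candidate)
                gain = sum(r * s.conjugate() for r, s in zip(residual, response))
                gain /= len(samples)
                if abs(gain) > abs(best_gain):
                    best_delay, best_gain = candidate, gain
            paths[current] = (best_delay, best_gain)
    return paths


num_points = 32
freq_step_hz = 10e6
freq_offsets_hz = [k * freq_step_hz for k in range(num_points)]
bin_s = 1.0 / (num_points * freq_step_hz)          # delay-grid spacing
delay_grid_s = [g * bin_s for g in range(num_points)]

# Two synthetic paths placed on the search grid (bins 4 and 11).
true_paths = [(4 * bin_s, 1.0 + 0j), (11 * bin_s, 0.5 * cmath.exp(0.7j))]
samples = [0j] * num_points
for delay_s, gain in true_paths:
    response = steering(freq_offsets_hz, delay_s)
    samples = [h + gain * s for h, s in zip(samples, response)]

estimates = sage_delay_gain(samples, freq_offsets_hz, delay_grid_s, num_paths=2)
estimated_bins = sorted(round(delay_s / bin_s) for delay_s, _ in estimates)
print("estimated delay bins:", estimated_bins)
```

Each pass removes the contribution of the other paths before re-estimating the current one, which is what lets closely interacting components be separated. A practical implementation would add noise handling, off-grid delay refinement, and further parameters such as angles.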

The LR-SAGE algorithm builds upon the SAGE methodology to improve the accuracy of Terahertz (THz) channel sounding by addressing distortions introduced by molecular absorption. This extension enables comparable multipath channel estimation accuracy to that achieved with dense uniform sampling, but with a significant reduction in resource requirements. Specifically, LR-SAGE reduces the necessary frequency samples by 99.96% and achieves a 99.96% decrease in computational complexity, making it a highly efficient solution for THz channel characterization without compromising estimation performance.

Integrated Sensing and Communication: A Convergence of Systems

The evolution of wireless technology is increasingly focused on a unified approach, with Integrated Sensing and Communication (ISAC) rapidly becoming a foundational element of next-generation systems. This paradigm shift, prominently featured within the IMT-2030 framework (the blueprint for 6G), moves beyond simply transmitting data to simultaneously perceiving the environment. ISAC leverages the same radio frequency signals for both communication and sensing, enabling devices to ‘see’ their surroundings with unprecedented accuracy. This convergence promises a significantly enhanced user experience and unlocks a wealth of new applications, moving beyond connectivity to provide contextual awareness for everything from autonomous systems to precision healthcare and intelligent infrastructure. By merging these traditionally separate functionalities, ISAC is poised to redefine the capabilities of future wireless networks and drive innovation across multiple sectors.

The realization of Integrated Sensing and Communication (ISAC) relies heavily on a precise understanding of the wireless environment, particularly within the Terahertz (THz) frequency band. Accurate THz channel sounding, however, presents significant challenges due to the limited availability of hardware and the high bandwidth required for data acquisition. Recent advancements in sparse sampling, in particular parabolic frequency sampling (PFS), offer a solution by dramatically reducing the number of required samples without compromising accuracy. These methods allow for efficient capture of critical channel characteristics, enabling ISAC systems to perform environmental perception (mapping surroundings to identify objects and obstacles) and precise location tracking. This capability is fundamental for applications ranging from autonomous vehicles navigating complex urban landscapes to smart city infrastructure monitoring environmental conditions, and for industrial automation systems requiring real-time object identification and positioning.

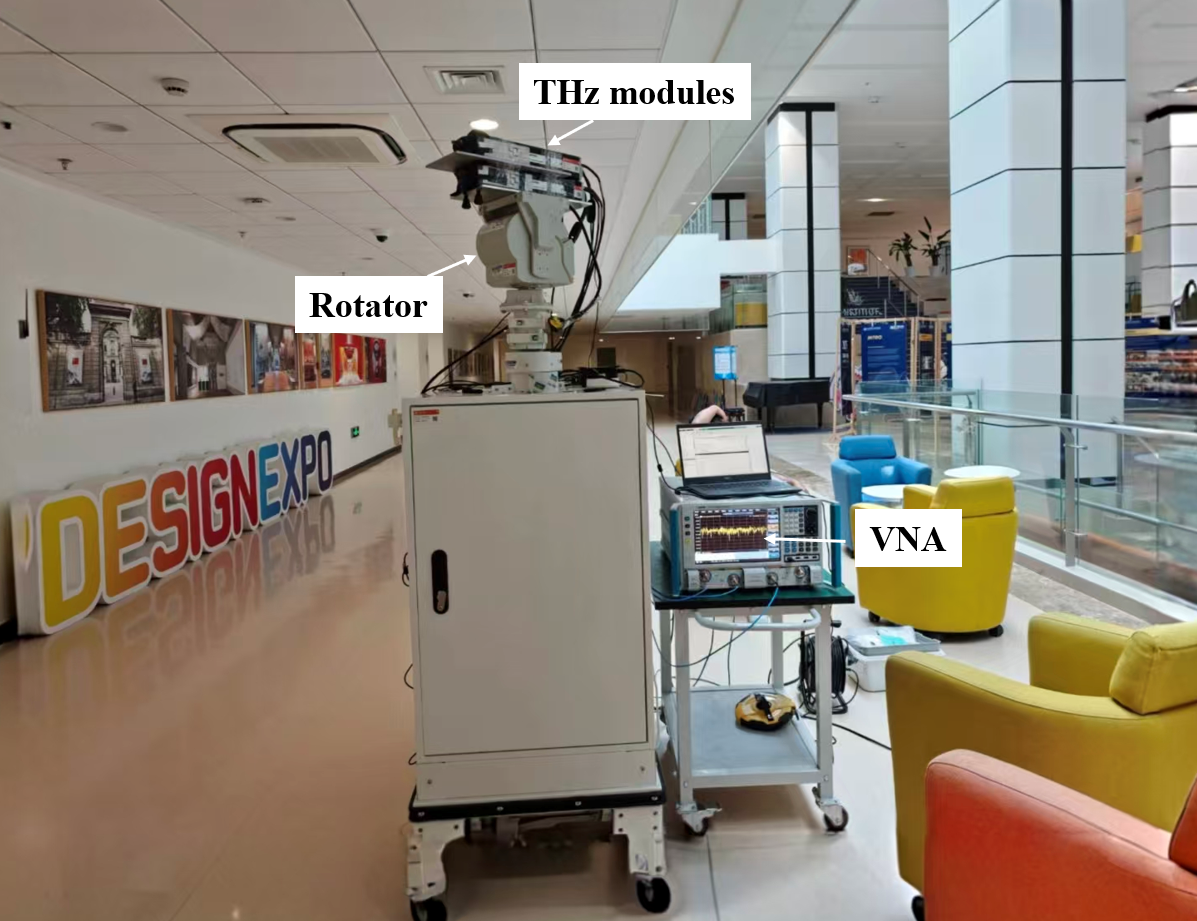

Traditional channel sounding for terahertz (THz) communication systems demands an exhaustive collection of frequency data, creating a substantial bottleneck in both time and computational resources. However, recent advancements utilizing Vector Network Analyzers (VNAs) coupled with sparse sampling and parabolic frequency sampling dramatically reduce this burden. These techniques allow for highly accurate THz channel estimation with only 2% of the frequency samples needed by conventional full bandwidth measurements. This reduction isn’t merely a matter of efficiency; it fundamentally alters the feasibility of deploying THz-based Integrated Sensing and Communication (ISAC) systems, paving the way for real-time environmental perception and precise location tracking in dynamic environments. By minimizing data acquisition, researchers and developers can accelerate the prototyping and implementation of ISAC applications critical to advancements in autonomous vehicles, smart infrastructure, and industrial automation.

The synergistic integration of sensing and communication technologies is poised to revolutionize several key sectors. Beyond simply transmitting data, future wireless networks will actively perceive and understand the surrounding environment, creating intelligent systems capable of unprecedented functionality. In autonomous vehicles, this convergence enables highly detailed real-time mapping and object detection, critical for safe and reliable navigation. Smart cities will benefit from enhanced environmental monitoring, optimized traffic flow, and improved public safety through pervasive sensing capabilities. Furthermore, industrial automation stands to gain from predictive maintenance, precise robotic control, and optimized resource allocation, all facilitated by the ability to simultaneously communicate and sense within a factory or warehouse environment. This unified approach promises not just incremental improvements, but a fundamental shift towards truly intelligent and responsive systems across a broad range of applications.

The pursuit of increasingly accurate channel models, as detailed in this work concerning sparse sampling for 6G, mirrors the inevitable evolution, and eventual obsolescence, of any architectural design. The presented framework, aiming to reduce data acquisition time while maintaining estimation accuracy, acknowledges that improvements themselves are transient. As Robert Tarjan aptly stated, “The most important things are always the things that are difficult.” The difficulty here lies not merely in the technical challenges of THz channel sounding, but in building systems resilient enough to accommodate the accelerating pace of technological advancement. The likelihood-rectified SAGE algorithm represents a step towards this resilience, allowing for more efficient data gathering even as the complexity of the wireless landscape increases. Every architecture lives a life, and this framework seeks to prolong its utility within an ever-changing environment.

What Lies Ahead?

The presented framework, while a demonstrable step toward tractable channel sounding in the terahertz band, merely reframes the inevitable. Reducing acquisition time through sparse sampling doesn’t negate entropy; it redistributes it. The challenge isn’t simply how to capture the channel, but acknowledging its fundamental impermanence. Each sounding is a snapshot, already obsolete upon completion, a fleeting approximation of a relentlessly evolving environment. The system doesn’t approach perfection; it accumulates a history of corrections.

Future work will inevitably focus on refining the likelihood rectification algorithm and expanding the applicability of sparse sampling to more complex scenarios. However, a more fruitful direction may lie in embracing the dynamic nature of the channel itself. Instead of striving for a definitive, static model, research could prioritize methods for continuous channel estimation and adaptive communication strategies. The goal shouldn’t be to know the channel, but to anticipate its deviations.

Ultimately, the pursuit of increasingly accurate channel models will yield diminishing returns. The true innovation won’t be in minimizing error, but in designing systems resilient to it. Incidents are not failures; they are system steps toward maturity, opportunities to refine the model, and recalibrate expectations. The system doesn’t age; it learns.

Original article: https://arxiv.org/pdf/2602.05405.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-08 12:37