Author: Denis Avetisyan

New research reveals how vulnerable common hydrological models are to subtle data manipulations, and surprisingly, which model proves more resilient.

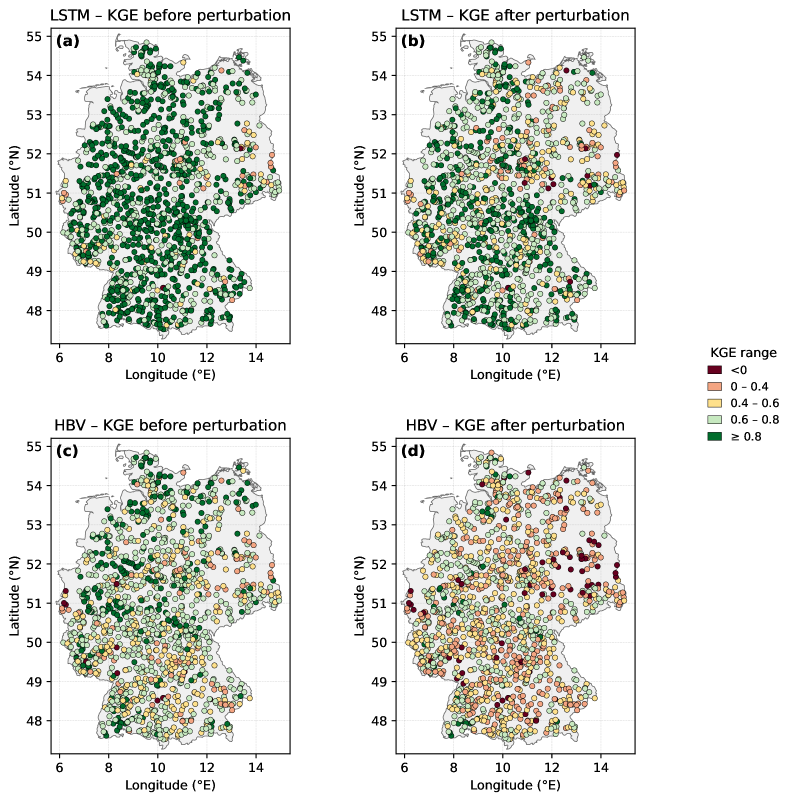

A comparative analysis of HBV and LSTM models demonstrates approximately linear responses to adversarial perturbations, with LSTM exhibiting greater robustness.

While hydrological models are essential for water resource management, simply matching predictions to observations offers limited insight into their behavioral reliability. This need for trustworthy models motivates the research presented in ‘On the Adversarial Robustness of Hydrological Models’, which investigates how sensitive both physical-conceptual (HBV) and deep learning (LSTM) models are to small, targeted changes in meteorological inputs. The study surprisingly finds that LSTMs generally exhibit greater robustness to these perturbations than HBV models, and that both model types demonstrate approximately linear responses to increasing perturbation magnitudes. Could these findings inform the development of more resilient hydrological forecasting systems and guide architectural improvements for enhanced model trustworthiness?

The Fragility of Forecasts: Unveiling Hidden Vulnerabilities

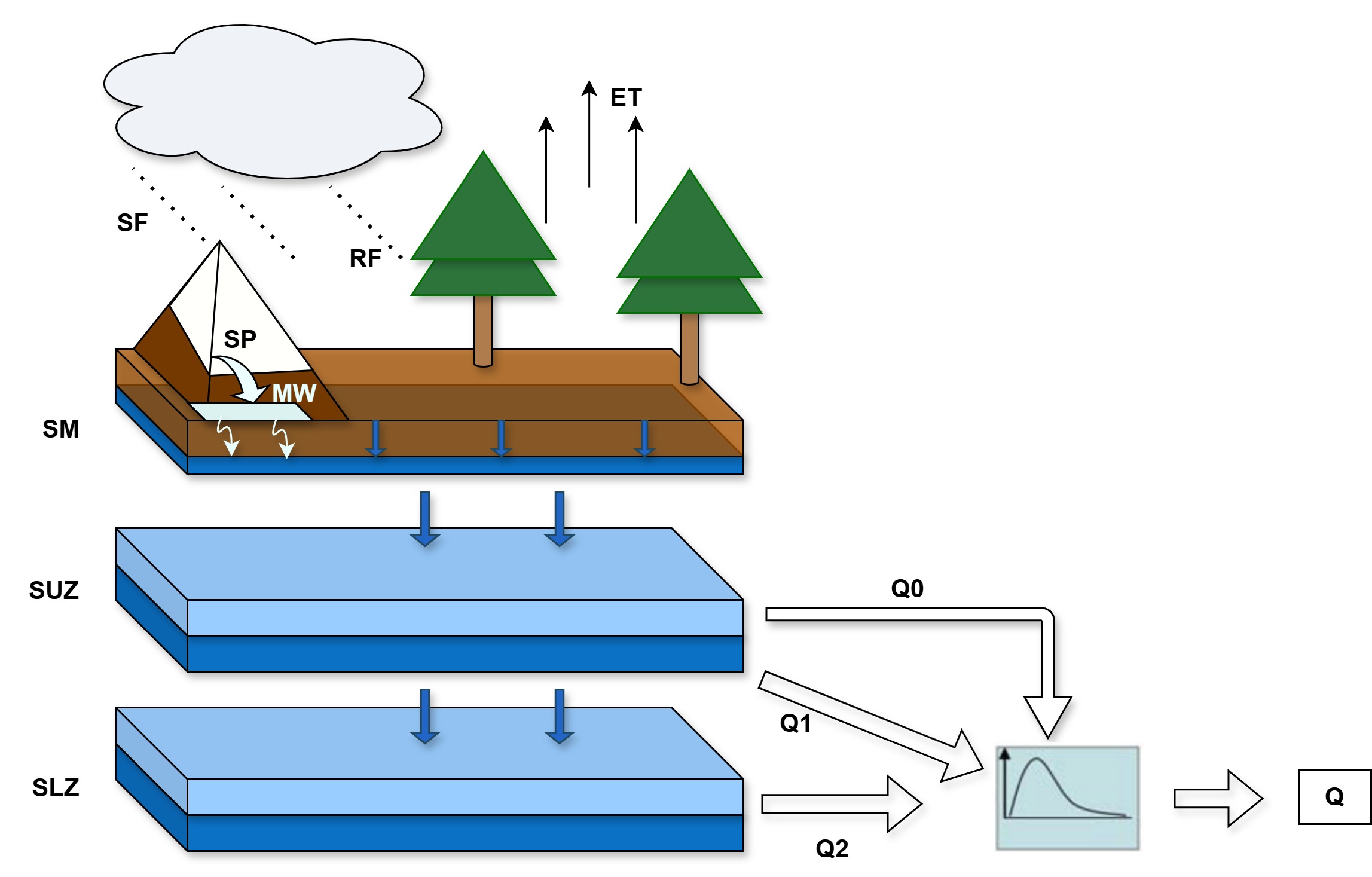

Hydrological models, such as the widely used HBV model, underpin critical water resource management decisions around the world. These models simulate rainfall-runoff processes to forecast water availability, inform irrigation schedules, guide reservoir operations, and mitigate flood risk. As populations grow and climate change intensifies water stress, reliance on these predictive tools has surged, extending to complex systems that integrate snowmelt, groundwater dynamics, and evapotranspiration. Increasingly sophisticated water management strategies, including transboundary river basin governance and urban water supply planning, further raise the need for accurate hydrological forecasts.

Despite their sophistication, hydrological models are not immune to manipulation. Small, carefully crafted alterations to input data, termed adversarial perturbations, can mislead them into inaccurate predictions about vital water resources. Such perturbations are often too small to notice by inspection, yet in models like HBV they can cause disproportionately large errors in forecasted streamflow, reservoir levels, or flood risk. They do not reflect genuine changes in the environment; they are deliberate attempts to deceive the model. Consequently, even highly trusted forecasts can become unreliable, which exposes a growing vulnerability in water management systems that depend on these tools.

Dependable water forecasts matter more as drought and flood risk escalate, which makes the vulnerability of hydrological models a critical concern. Small manipulations of input data, even seemingly insignificant ones, can propagate through these complex systems and yield inaccurate predictions with potentially severe consequences for water resource management. This susceptibility is not necessarily a flaw in a model’s core mechanics; it follows from the model’s reliance on real-world data, which is inherently subject to errors and, increasingly, to deliberate interference. Recognizing and quantifying the vulnerability is therefore essential. Proactive measures, such as stronger data validation and more robust modeling algorithms, help safeguard the accuracy and trustworthiness of these forecasting tools for the communities and ecosystems that depend on reliable water supplies.

Exposing Weaknesses: The Anatomy of Adversarial Attacks

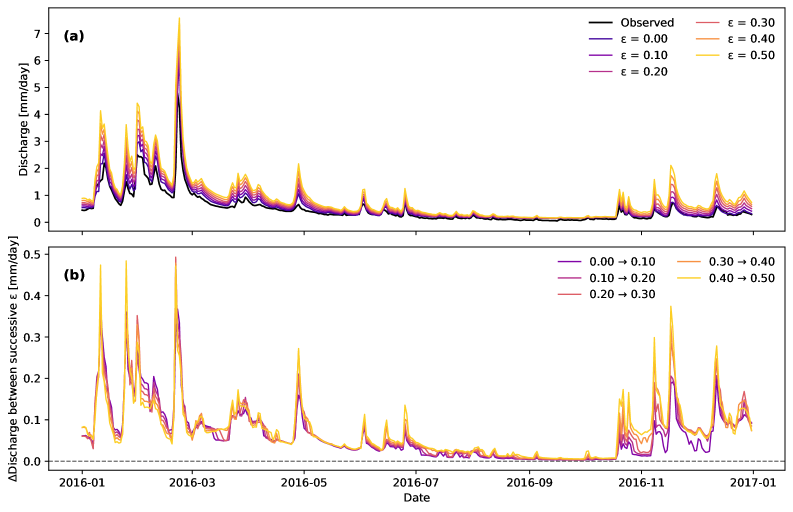

The Fast Gradient Sign Method (FGSM) was used to create adversarial perturbations for both the HBV model, a process-based hydrological model, and the LSTM, a deep learning model. FGSM computes the gradient of the loss function with respect to the input data, then shifts each input value by a small amount ε in the direction of the sign of that gradient: x_adv = x + ε · sign(∇ₓL). A single gradient evaluation is therefore enough to find an input modification that increases the loss, effectively “fooling” the model. The perturbation magnitude ε was kept small so that the adversarial examples remained hard to distinguish from the original inputs while still causing a measurable change in model output. This approach allowed systematic testing of model robustness against intentionally crafted, subtle input variations across both modeling frameworks.
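The FGSM step described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the study's implementation: `toy_runoff` is a hypothetical single linear reservoir standing in for HBV or the LSTM, the gradient is estimated by finite differences rather than automatic differentiation, the precipitation and discharge values are made up, and clipping at zero is an added assumption that keeps precipitation non-negative.

```python
def toy_runoff(precip, k=0.3):
    """Hypothetical linear reservoir: storage fills with precip and drains at rate k."""
    storage, flows = 0.0, []
    for p in precip:
        storage += p
        q = k * storage
        storage -= q
        flows.append(q)
    return flows


def mse(sim, obs):
    """Mean squared error between simulated and observed series."""
    return sum((s - o) ** 2 for s, o in zip(sim, obs)) / len(obs)


def loss_gradient(model, x, obs, h=1e-6):
    """Central finite-difference gradient of the MSE loss w.r.t. each input value."""
    grad = []
    for i in range(len(x)):
        up = x[:i] + [x[i] + h] + x[i + 1:]
        down = x[:i] + [x[i] - h] + x[i + 1:]
        grad.append((mse(model(up), obs) - mse(model(down), obs)) / (2 * h))
    return grad


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(model, x, obs, eps):
    """One FGSM step: x_adv = x + eps * sign(grad), clipped at zero (assumption)."""
    grad = loss_gradient(model, x, obs)
    return [max(0.0, xi + eps * sign(g)) for xi, g in zip(x, grad)]


# Made-up example data (not from CAMELS-DE)
precip = [0.0, 5.0, 12.0, 3.0, 0.0, 0.0, 8.0, 1.0]
observed = [0.2, 1.4, 4.9, 4.6, 3.1, 2.3, 3.8, 3.1]

adversarial = fgsm(toy_runoff, precip, observed, eps=0.1)
clean_loss = mse(toy_runoff(precip), observed)
attacked_loss = mse(toy_runoff(adversarial), observed)
print(f"clean MSE: {clean_loss:.4f}, attacked MSE: {attacked_loss:.4f}")
```

No input value moves by more than ε, yet the loss rises. For a differentiable model in a framework with automatic differentiation, the finite-difference loop would normally be replaced by a single backward pass.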

The perturbations were applied to time series from the CAMELS-DE dataset, which provides observed hydrological data for a range of catchments. They were not random noise but targeted modifications designed to shift model outputs in a quantifiable way. By analyzing the resulting errors, the study sought to determine how sensitive the HBV and LSTM models are to specific changes in input data, and whether such changes can predictably degrade the accuracy of hydrological forecasts. This setup allowed controlled experiments that expose potential vulnerabilities in model behavior.

Experimental results demonstrate that even minimal perturbations applied to input time series data can lead to substantial reductions in the accuracy of hydrological predictions. Specifically, the impact of these perturbations is not uniform across all catchments; certain configurations exhibit a heightened sensitivity, resulting in disproportionately large errors in predicted outputs. This suggests that the hydrological models, while generally robust, possess vulnerabilities tied to specific catchment characteristics – such as size, shape, or dominant hydrological processes – which amplify the effect of even minor input distortions.

The Linear Response: Unraveling Model Behavior

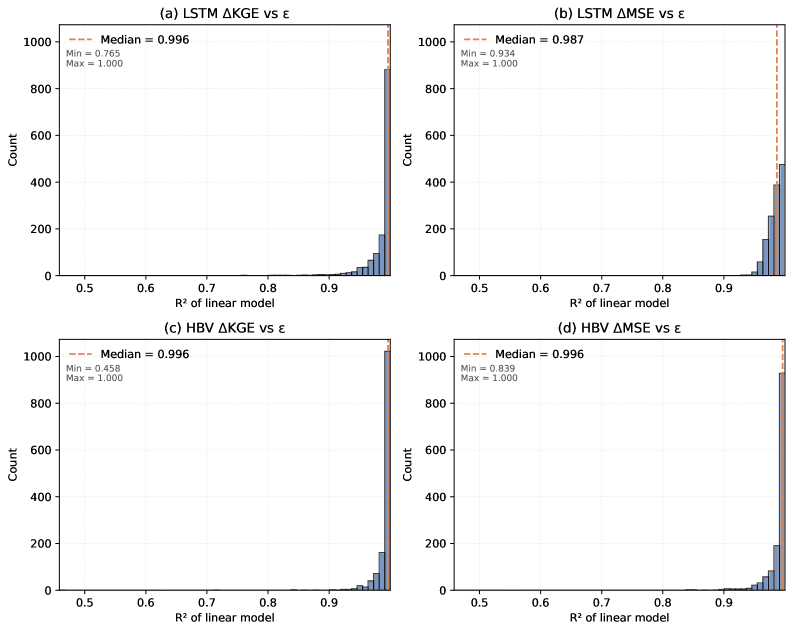

Analysis revealed an approximately linear relationship between the magnitude of input perturbations and the resulting change in model output, for both the LSTM and the HBV model. The relationship was quantified with the coefficient of determination, R², which exceeded 0.95 across a substantial proportion of the evaluated time steps and catchments. An R² this high means that a straight-line fit against perturbation magnitude accounts for more than 95% of the variance in the output change, so the response is predictable within the tested range.

This approximate linearity makes model responses predictable to a degree: within the tested range, doubling the perturbation magnitude roughly doubles the change in the prediction. The predictability is not absolute, since the relationship is only approximate and need not hold outside the tested perturbation range, but it supports inference about how a model will behave under different input scenarios. The high R² values (≥ 0.95) observed across many time steps and catchments back this reading.
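To make the R² criterion concrete, the sketch below fits a straight line to output change versus perturbation magnitude at a single time step and reports the coefficient of determination. The `epsilons` and `delta_q` values are invented for illustration and are not results from the study; `r_squared` is a hypothetical helper using ordinary least squares.

```python
def r_squared(x, y):
    """R^2 of an ordinary least-squares line y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Perturbation magnitudes and made-up output changes at one time step
epsilons = [0.0, 0.05, 0.10, 0.15, 0.20]
delta_q = [0.0, 0.021, 0.039, 0.062, 0.080]

r2 = r_squared(epsilons, delta_q)
print(f"R^2 = {r2:.4f}, approximately linear: {r2 > 0.95}")
```

Repeating this fit for every time step and catchment, then counting how often R² clears 0.95, gives the kind of summary the article reports.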

The Deep Learning Model’s architecture incorporates Rectified Linear Unit (ReLU) activation functions, which introduce non-linearity into the model despite the observed approximate linearity between input perturbations and output changes. ReLU functions output the input directly if positive, and zero otherwise. This piecewise linear behavior can contribute to the overall model’s sensitivity or, conversely, act as a limiting factor, preventing unbounded amplification of small input variations. Investigating how the distribution of ReLU activations – specifically the proportion of active versus inactive neurons – correlates with the magnitude of output changes is crucial for understanding and enhancing the model’s robustness. A higher proportion of inactive neurons may indicate a degree of input filtering, while consistently active neurons could suggest a more direct propagation of input perturbations.
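The piecewise-linear behavior of ReLU can be illustrated with a tiny hand-built network. Everything here is hypothetical (the weights `W1`, `b1`, `w2`, the input, and the perturbation direction) and the network is far simpler than an LSTM. It only shows why the output responds exactly linearly while the set of active neurons stays fixed, and why the slope changes once a neuron switches on.

```python
def relu(v):
    """Output the input if positive, zero otherwise."""
    return max(0.0, v)


# Hypothetical weights for a 2-input, 3-hidden-unit, 1-output network
W1 = [[0.8, -0.5], [-0.3, 0.9], [0.6, 0.4]]
b1 = [0.1, -0.2, -1.5]
w2 = [1.0, -0.7, 0.5]


def forward(x):
    """Return the network output and which hidden units are active."""
    pre = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, b1)]
    out = sum(w * relu(p) for w, p in zip(w2, pre))
    return out, [p > 0 for p in pre]


def output_change(x, eps):
    """Change in output when every input is shifted by eps."""
    base, _ = forward(x)
    shifted, _ = forward([xi + eps for xi in x])
    return shifted - base


x = [1.0, 0.5]
base_out, base_active = forward(x)
active_fraction = sum(base_active) / len(base_active)

small = output_change(x, 0.01)    # activation pattern unchanged
double = output_change(x, 0.02)   # still unchanged: exactly twice `small`
large = output_change(x, 0.2)     # second unit switches on: slope changes

print(f"active fraction: {active_fraction:.2f}")
print(f"small={small:.4f}, double={double:.4f}, large={large:.4f}")
```

Within a fixed activation pattern the response is a straight line; the kink appears only when a perturbation is large enough to flip a unit between inactive and active.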

The Cost of Deception: Evaluating Performance Under Attack

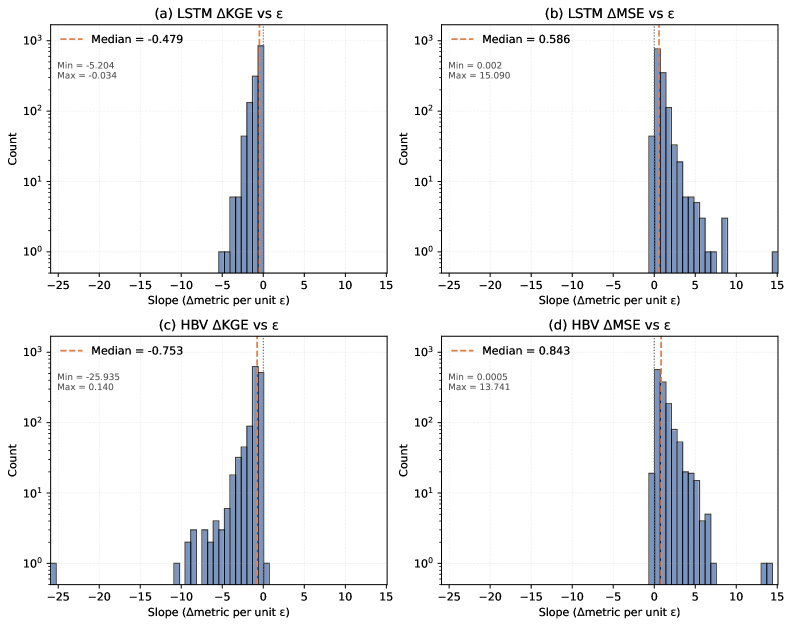

To rigorously evaluate how adversarial perturbations affect predictive capabilities, the study employed two complementary metrics: Mean Squared Error (MSE) and the Kling-Gupta Efficiency (KGE). MSE quantifies the average squared difference between predicted and observed values, providing a straightforward measure of overall error magnitude. However, MSE can be sensitive to outliers and doesn’t fully capture the similarity in distributions. Therefore, KGE was also utilized; this metric offers a more holistic assessment by evaluating the correlation, bias, and variability between predicted and observed hydrological time series. By considering both MSE and KGE, researchers gained a comprehensive understanding of how even subtle, intentionally crafted disturbances impacted the models’ ability to accurately represent complex hydrological processes.
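Both metrics are short to compute. The sketch below assumes the original 2009 formulation of KGE, KGE = 1 − sqrt((r − 1)² + (α − 1)² + (β − 1)²), where r is the linear correlation, α the ratio of simulated to observed standard deviation, and β the ratio of means; the study may use a variant. The helper names and the example series are hypothetical.

```python
import math


def mean(v):
    return sum(v) / len(v)


def std(v):
    """Population standard deviation."""
    m = mean(v)
    return math.sqrt(sum((a - m) ** 2 for a in v) / len(v))


def mse(sim, obs):
    """Mean squared error: average squared difference, 0 is perfect."""
    return sum((s - o) ** 2 for s, o in zip(sim, obs)) / len(obs)


def pearson_r(sim, obs):
    """Linear correlation between simulated and observed series."""
    ms, mo = mean(sim), mean(obs)
    cov = sum((s - ms) * (o - mo) for s, o in zip(sim, obs)) / len(obs)
    return cov / (std(sim) * std(obs))


def kge(sim, obs):
    """Kling-Gupta Efficiency (2009 form, assumed): 1 is perfect, lower is worse."""
    r = pearson_r(sim, obs)
    alpha = std(sim) / std(obs)    # variability ratio
    beta = mean(sim) / mean(obs)   # bias ratio
    return 1.0 - math.sqrt((r - 1) ** 2 + (alpha - 1) ** 2 + (beta - 1) ** 2)


# Made-up discharge series for illustration
observed = [0.2, 1.4, 4.9, 4.6, 3.1, 2.3, 3.8, 3.1]
simulated = [0.0, 1.5, 4.65, 4.16, 2.91, 2.04, 3.83, 2.98]

print(f"MSE = {mse(simulated, observed):.4f}, KGE = {kge(simulated, observed):.4f}")
```

Comparing each metric on clean and perturbed inputs, for example the drop in KGE at a given ε, is one way to express how much an attack degrades a model.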

Evaluations revealed a notable decline in predictive accuracy for both the Deep Learning Model and the HBV Model when exposed to even minimal adversarial perturbations. While both models exhibited vulnerability, the HBV Model demonstrated a comparatively larger decrease in the Kling-Gupta Efficiency (KGE) statistic than the LSTM-based Deep Learning Model under identical perturbation conditions. This suggests the HBV Model is comparatively more susceptible to shifts in input data caused by adversarial attacks, potentially due to its underlying structural assumptions or parameter sensitivity; the LSTM, with its inherent capacity to learn complex relationships, appears to exhibit marginally greater robustness against these subtle manipulations of input variables.

Robustness depends on the scale of the perturbation: the larger the perturbation, the larger the loss in accuracy, and even subtle alterations to input data can substantially diminish a hydrological model’s predictive capability. The findings therefore point to a need for defense mechanisms, that is, techniques that mitigate the impact of adversarial perturbations and keep critical forecasting systems reliable. Without such safeguards, model outputs, and the decisions informed by them, remain susceptible to manipulation, which can lead to inaccurate predictions and flawed water resource management.

The study’s findings regarding the approximately linear responses of both LSTM and HBV models to perturbations resonate with a sentiment commonly attributed to Steve Jobs: “The only way to do great work is to love what you do.” This ‘love’, in the context of model building, translates to a deep understanding of the underlying principles, in this case the predictable, linear behavior observed even under stress. The research demonstrates an elegance in the models’ responses, suggesting a harmonious relationship between their structure and function. This predictable linearity, while perhaps not initially sought, makes a system easier to interpret and to reason about, a hallmark of good design where simplicity and understanding prevail. The greater sensitivity observed in the HBV model highlights the importance of architectural choices in achieving this balance, and shows that attention to these details is crucial for building effective and resilient hydrological forecasts.

The Horizon Beckons

The observation of approximate linearity in both LSTM and HBV models, even when subjected to deliberate perturbation, is less a revelation than a necessary reckoning. It suggests that the complexity often lauded in deep learning architectures doesn’t necessarily translate to fundamentally different behaviors – merely different scales of predictable response. The finding that LSTMs demonstrate a marginally greater resilience than the comparatively simple HBV model is interesting, yet it feels akin to observing a polished stone withstand a blow better than a roughly hewn one. Both will eventually yield.

The true challenge, then, isn’t chasing ever-more-intricate model designs. Rather, it lies in understanding why these systems fail so predictably, and what minimal structural changes could yield genuinely robust performance. Current adversarial training methods often feel like treating the symptoms, not the disease. A useful direction would be to investigate model architectures explicitly designed for stability, perhaps drawing inspiration from control theory or dynamical systems – where elegance isn’t a flourish, but a necessity.

Ultimately, the goal shouldn’t be to build models that merely appear to function across a range of conditions, but those that reveal the underlying hydrological truths with clarity. Code structure is composition, not chaos; beauty scales, clutter does not. The path forward demands a shift in focus – from complexity for its own sake, to a relentless pursuit of fundamental principles.

Original article: https://arxiv.org/pdf/2602.05237.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-07 21:27