Author: Denis Avetisyan

Researchers are using large language models to generate complex neural network architectures automatically, building a resource for evaluating tools that make AI systems more reliable and adaptable.

This paper details the creation of a 608-architecture dataset generated by GPT-5, designed to advance research in neural network verification, static analysis, and symbolic tracing.

Despite the growing reliance on neural networks across critical applications, systematic evaluation of tools designed to ensure their reliability and adaptability remains hampered by a lack of diverse, publicly available datasets. This paper, ‘On the use of LLMs to generate a dataset of Neural Networks’, addresses this gap by introducing a novel dataset comprising 608 neural network architectures automatically generated using large language models. Rigorously validated through static analysis and symbolic tracing, this resource offers a benchmark for advancing research in neural network verification, refactoring, and migration. Will this dataset accelerate the development of more robust and trustworthy neural network systems?

The Challenge of Automated Design

Despite recent advances, neural networks are still largely designed by hand, a process that demands both expertise and substantial computational resources. Performing well on tasks ranging from image recognition to natural language processing requires careful choices of network depth, layer types, and connection patterns, which are often settled by trial and error. This manual approach is a significant bottleneck: it slows the deployment of tailored solutions and limits the exploration of potentially better architectures, which in turn delays innovation and the delivery of advanced AI applications.

Conventional design also forces a trade-off between performance, computational efficiency, and adaptability to different kinds of data. Manual tuning guided by expert intuition is slow and explores only a small part of the vast architectural space, and it tends to produce models optimized for one task and dataset that generalize poorly when conditions change. Because accuracy and resource consumption often pull in opposite directions, balancing them calls for design methods that go beyond manual tuning and yield more robust, versatile solutions.

As applications grow more complex, manual design struggles to keep pace with their performance requirements, which motivates automated neural network (NN) generation. To support this line of work, the authors compiled a dataset of 608 distinct NN architectures, each a different configuration of layers, connections, and activation functions. The collection is meant to help develop and evaluate methods that automatically construct, verify, and adapt NNs for specific tasks, in areas such as image recognition, natural language processing, and predictive modeling, and so to support more adaptable and efficient AI systems.

Automated Construction Through Language

In this work, GPT-5 synthesizes neural network (NN) code directly from text prompts, bypassing GUI-driven tools and hand-written implementations. NN requirements are stated in natural language and turned into specific instructions for the model, which then generates source code for the corresponding architecture. This makes it fast to prototype and explore diverse designs without extensive manual coding or deep familiarity with a particular NN framework.

Generation uses a two-stage input process. First, in Requirements Definition, the desired functionality, input/output specifications, and performance metrics of the target network are written down precisely and completely. Second, in Prompt Construction, these requirements are converted into structured natural-language prompts that guide GPT-5’s code synthesis. The quality of both stages largely determines how suitable the generated network is: ambiguous or incomplete specifications are likely to produce unsuitable or incorrect designs.
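The two stages can be sketched in Python. The `Requirements` record and its fields, the `build_prompt` helper, and the prompt wording are hypothetical illustrations rather than the authors’ actual templates, and the default target framework (PyTorch) is only an assumption.

```python
from dataclasses import dataclass, field

@dataclass
class Requirements:
    """Stage 1 (Requirements Definition). Hypothetical record; field names are illustrative."""
    architecture: str                 # e.g. "CNN"
    modality: str                     # e.g. "image"
    task: str                         # e.g. "binary classification"
    input_shape: tuple                # shape of a single input sample
    output_size: int                  # number of output units
    framework: str = "PyTorch"        # assumed target framework
    constraints: list = field(default_factory=list)

def build_prompt(req: Requirements) -> str:
    """Stage 2 (Prompt Construction): turn a requirements record into a
    structured natural-language prompt for a code-generating LLM."""
    lines = [
        f"Write a {req.framework} model implementing a {req.architecture} "
        f"for {req.task} on {req.modality} data.",
        f"Input shape per sample: {req.input_shape}.",
        f"Output size: {req.output_size}.",
    ]
    # Each explicit constraint becomes its own numbered instruction.
    for i, constraint in enumerate(req.constraints, start=1):
        lines.append(f"Constraint {i}: {constraint}")
    lines.append("Return only the code, with no explanation.")
    return "\n".join(lines)

req = Requirements(
    architecture="CNN",
    modality="image",
    task="binary classification",
    input_shape=(3, 32, 32),
    output_size=1,
    constraints=["Use at most 4 convolutional layers."],
)
print(build_prompt(req))
```

Keeping the requirements as structured data and rendering the prompt from it means every request is phrased the same way, so differences between generated networks trace back to differences in the requirements rather than in wording.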

The generation process produced 608 distinct neural network designs. The architectures were not predefined; the model synthesized each one from the provided task description and the characteristics of the input data. The resulting networks vary in layer types, connection patterns, and hyperparameter settings, showing that the model can produce a diverse range of architectures without each design being programmed explicitly, and possibly surfacing configurations a human designer would not have tried.
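The summary does not say how task descriptions and data characteristics were varied across the generation requests. One plausible way to obtain a broad spread, sketched here purely under that assumption, is to enumerate combinations of the architecture families, data modalities, and tasks named elsewhere in this article and issue one or more requests per combination. The axis values and the `requirement_grid` helper are illustrative and do not reproduce the paper’s count.

```python
from itertools import product

# Illustrative axes only; the values the authors actually varied are not given here.
ARCHITECTURES = ["MLP", "CNN", "RNN"]
MODALITIES = ["image", "text", "time series", "tabular"]
TASKS = ["binary classification", "regression", "representation learning"]

def requirement_grid(architectures, modalities, tasks):
    """Return one requirements dict per (architecture, modality, task) combination."""
    return [
        {"architecture": a, "modality": m, "task": t}
        for a, m, t in product(architectures, modalities, tasks)
    ]

grid = requirement_grid(ARCHITECTURES, MODALITIES, TASKS)
print(len(grid))  # 36
print(grid[0])    # {'architecture': 'MLP', 'modality': 'image', 'task': 'binary classification'}
```

Requesting several independent generations per combination, or adding axes such as network depth, would widen the spread further; reaching any specific total depends on choices the summary does not report.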

Validating Reliability Through Analysis

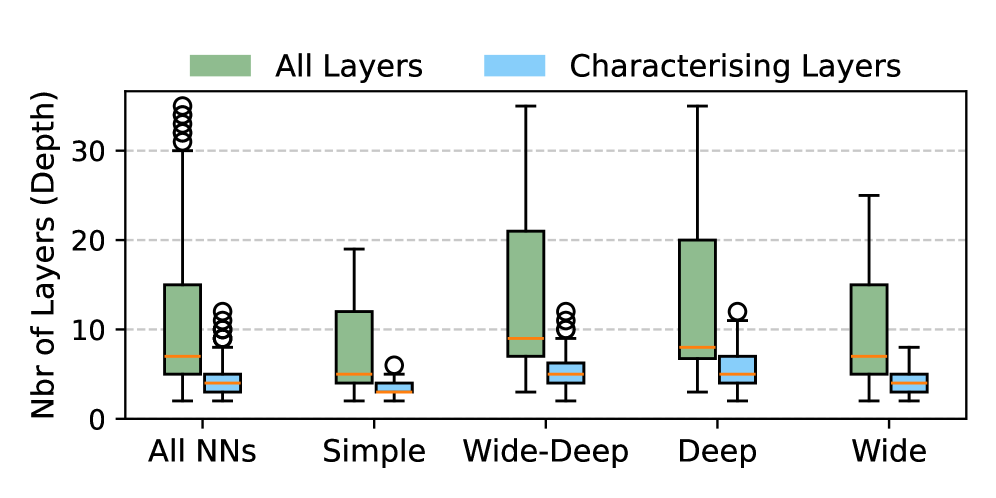

Generated architectures must be validated to confirm that they are structurally and functionally correct. Validation systematically checks for structural defects, logical errors, and inefficiencies introduced during generation. Applied to the 608 generated networks, it found 8 architectures that did not meet the defined compliance criteria; these were regenerated. Across the 6842 layers in these networks, 38 unique layer types appear, which gives a measure of architectural diversity and points to areas to optimize in later generation cycles.
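The reported statistics (total layers, unique layer types, non-compliant designs) are simple aggregates once each architecture is reduced to its list of layer types. The sketch below assumes that representation, and its compliance rule (an architecture must be non-empty and use only layer types from an allowed set) is a hypothetical stand-in for the paper’s actual criteria.

```python
from collections import Counter

def layer_statistics(architectures):
    """Return (total layers, number of unique layer types, per-type Counter)
    for architectures given as lists of layer-type names."""
    counts = Counter()
    for layers in architectures:
        counts.update(layers)
    return sum(counts.values()), len(counts), counts

def non_compliant(architectures, allowed_types):
    """Hypothetical compliance rule: return the indices of architectures that
    are empty or use a layer type outside `allowed_types`."""
    return [
        i for i, layers in enumerate(architectures)
        if not layers or any(t not in allowed_types for t in layers)
    ]

archs = [
    ["Linear", "ReLU", "Linear"],
    ["Conv2d", "ReLU", "MaxPool2d", "Flatten", "Linear"],
    ["LSTM", "Linear"],
]
total, unique, counts = layer_statistics(archs)
print(total, unique, counts["Linear"])  # 10 6 4
print(non_compliant(archs, {"Linear", "ReLU", "Conv2d", "MaxPool2d", "Flatten"}))  # [2]
```

Applied to the full dataset, the first two values would correspond to the reported 6842 layers and 38 unique types, provided layers are counted the same way; flagged indices would be the candidates for regeneration.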

Static analysis and symbolic tracing represent complementary techniques for verifying the structural correctness of generated neural networks. Static analysis examines the network’s architecture without executing it, identifying potential issues like disconnected nodes or invalid layer configurations. Symbolic tracing, conversely, involves executing the network with symbolic inputs, allowing verification of data flow and operation correctness across the entire graph. By combining these approaches, the validation process achieves more comprehensive coverage than either technique could provide independently, ensuring adherence to specified architectural constraints and facilitating the identification of errors before deployment.
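To make the distinction concrete, here is a minimal sketch on a hypothetical toy graph format (a dict of named nodes, each with an op, input names, and size parameters), not the representation used in the paper. `static_check` only inspects structure: unknown ops, references to missing nodes, and results that are never used. `symbolic_trace` then runs the graph on symbolic shapes, with `"N"` standing for an unknown batch size, and reports the first shape conflict. This loosely mirrors the idea behind tracing tools such as PyTorch’s `torch.fx`, which execute a model on placeholder values, but it does not use them.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

KNOWN_OPS = {"input", "linear", "relu", "add", "output"}

def static_check(graph):
    """Static analysis: inspect structure only, without running anything.
    Returns a list of human-readable error strings (empty if none found)."""
    errors = []
    for name, node in graph.items():
        if node["op"] not in KNOWN_OPS:
            errors.append(f"{name}: unknown op {node['op']!r}")
        for src in node["inputs"]:
            if src not in graph:
                errors.append(f"{name}: missing input {src!r}")
    # Every node except the output should feed some other node; otherwise it is dead code.
    used = {src for node in graph.values() for src in node["inputs"]}
    for name, node in graph.items():
        if node["op"] != "output" and name not in used:
            errors.append(f"{name}: result is never used")
    return errors

def symbolic_trace(graph):
    """Symbolic tracing: evaluate nodes in dependency order on symbolic shapes
    ("N" = unknown batch size) and return the shape of every node.
    Raises ValueError on the first shape conflict."""
    deps = {name: node["inputs"] for name, node in graph.items()}
    shapes = {}
    for name in TopologicalSorter(deps).static_order():
        node = graph[name]
        ins = [shapes[src] for src in node["inputs"]]
        op = node["op"]
        if op == "input":
            shapes[name] = ("N", node["features"])
        elif op == "linear":
            batch, features = ins[0]
            if features != node["in_features"]:
                raise ValueError(
                    f"{name}: expects {node['in_features']} features, got {features}")
            shapes[name] = (batch, node["out_features"])
        elif op == "add":
            if ins[0] != ins[1]:
                raise ValueError(f"{name}: cannot add {ins[0]} and {ins[1]}")
            shapes[name] = ins[0]
        else:  # "relu" and "output" keep their input's shape
            shapes[name] = ins[0]
    return shapes

# A small residual MLP: x -> fc1 -> relu -> fc2, then fc2 + x.
residual_mlp = {
    "x":    {"op": "input", "inputs": [], "features": 4},
    "fc1":  {"op": "linear", "inputs": ["x"], "in_features": 4, "out_features": 8},
    "act":  {"op": "relu", "inputs": ["fc1"]},
    "fc2":  {"op": "linear", "inputs": ["act"], "in_features": 8, "out_features": 4},
    "skip": {"op": "add", "inputs": ["fc2", "x"]},
    "out":  {"op": "output", "inputs": ["skip"]},
}
print(static_check(residual_mlp))           # []
print(symbolic_trace(residual_mlp)["out"])  # ('N', 4)
```

Running `static_check` first matters in this sketch: `symbolic_trace` assumes every referenced node exists, and `graphlib` raises `CycleError` if the graph contains a cycle.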

Together, static analysis and symbolic tracing caught the non-compliant designs before release: each flagged architecture was regenerated, so the networks in the final dataset conform to the specified architectural constraints, which contributes to the reliability of the resource as a whole.

Architectural Diversity and Broad Applicability

The generated architectures span several data modalities: image data, where convolutional layers extract spatial features; text data, handled with recurrent or transformer-based layers for sequential understanding; time series data, where models capture temporal dependencies; and tabular data, where fully connected layers combine features. Rather than relying on one modality-agnostic design, each modality is paired with layer types suited to its input representation, which gives the dataset breadth across data formats instead of tying it to a single one.

The dataset covers a wide range of designs, including Multilayer Perceptrons (MLPs), Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs). This variety matters because different data types and problem structures suit different architectures: CNNs excel on spatial data such as images, RNNs suit sequential data such as time series, and MLPs are a robust general-purpose baseline for many tasks. Covering all three lets configurations be tailored to the demands of a given problem, which can improve performance and efficiency across a broad range of applications.
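Why each family suits its data shows up in the core operation of each. The pure-Python sketch below is a simplified illustration, not a framework implementation: a dense layer mixes every input feature, a 1-D convolution reuses one small kernel at every position, and a recurrent cell carries a hidden state from step to step.

```python
import math

def dense(x, weights, bias):
    """MLP building block: each output is a weighted sum of *all* input features."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def conv1d(signal, kernel):
    """CNN building block (valid 1-D cross-correlation, which is what most DL
    frameworks compute as 'convolution'): one small kernel is reused at every
    position, so a local pattern is detected wherever it occurs."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def rnn(sequence, w_in, w_rec, h0=0.0):
    """RNN building block (scalar Elman cell): the hidden state h carries
    information from earlier time steps into later ones."""
    h, states = h0, []
    for x_t in sequence:
        h = math.tanh(w_in * x_t + w_rec * h)
        states.append(h)
    return states

print(dense([1, 2], [[1, 0], [0, 1], [1, 1]], [0, 0, 1]))  # [1, 2, 4]
print(conv1d([0, 1, 2, 3, 4], [1, -1]))                     # [-1, -1, -1, -1]
# A single input pulse at t=0 still influences the state at t=1 and t=2.
print(rnn([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5))
```

The convolution finds the same "rising by one" pattern at every offset with only two weights, while the recurrent state decays but persists after the input goes to zero; these are the properties that make CNNs a fit for spatial data and RNNs for sequences.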

The 608 networks also cover a range of machine learning tasks: binary classification (discriminating between two categories), regression (predicting continuous values from input data), and representation learning (discovering patterns and features in data that can be reused for downstream analysis). This breadth suggests the approach is not tied to a particular problem domain and could serve as a general basis for automated machine learning across diverse tasks and datasets.
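Each task corresponds to a different training objective. A minimal pure-Python sketch, assuming a single output logit for binary classification, a list of predictions for regression, and an autoencoder-style setup for representation learning (one common approach among several; the summary does not say which objectives the generated networks use):

```python
import math

def binary_cross_entropy(logit, label):
    """Binary classification: loss for one output logit and a 0/1 label.
    Numerically stable form of -[y*log(sigmoid(z)) + (1-y)*log(1-sigmoid(z))]."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))

def mean_squared_error(preds, targets):
    """Regression: mean squared difference between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)

def reconstruction_error(x, encode, decode):
    """Representation learning (autoencoder-style): encode x into a compact
    code, decode it back, and measure what was lost. Returns (error, code)."""
    code = encode(x)
    return mean_squared_error(decode(code), x), code

def toy_encode(v):
    """Hypothetical encoder: a one-number code holding the mean of two features."""
    return [(v[0] + v[1]) / 2]

def toy_decode(c):
    """Hypothetical decoder: expand the code back into two features."""
    return [c[0], c[0]]

print(binary_cross_entropy(0.0, 1))                        # log(2), about 0.693
print(mean_squared_error([1, 2, 3], [1, 2, 5]))            # 1.3333333333333333
print(reconstruction_error([1.0, 3.0], toy_encode, toy_decode))  # (1.0, [2.0])
```

The cross-entropy is written in its stable "with logits" form so that large positive or negative logits do not overflow `math.exp` or take the log of zero.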

The pursuit of a comprehensive dataset, as in this work on neural networks, echoes a fundamental principle of elegant design: aim for minimal sufficient structure, not maximal complexity. As Donald Knuth observed, “Premature optimization is the root of all evil.” The point of this LLM-generated dataset is not the sheer number of networks but a deliberately assembled collection of 608 diverse architectures for rigorously testing verification and migration techniques. Its value lies in leaving out what is unnecessary, yielding a focused resource for research on neural network reliability and adaptability. Clarity, here in the form of a streamlined dataset, is a kindness to those trying to understand and improve these complex systems.

Further Refinements

Generating neural networks with large language models looks, at first glance, like an exercise in elegant automation. The real challenge, however, lies not in producing architectures but in assessing their properties. This work provides a substantial dataset, but a dataset, however large, is only a collection of questions awaiting answers. Future work should prioritize how rigorously the networks are tested over how many are produced, aiming for demonstrable reliability rather than combinatorial novelty.

Current static analysis and symbolic tracing techniques are valuable but approximate. Moving to formal verification, which makes definitive statements about network behavior, remains a substantial undertaking, and the limits of these tools on LLM-generated networks deserve particular scrutiny. That a network appears to work is no proof that it behaves robustly under unforeseen conditions.

Ultimately, the value of this approach will be measured not by the ingenuity of the generative model but by the insights it yields into the fundamental properties of neural networks. The goal is not to automate network design, but to illuminate the principles that govern network performance. Simplicity, after all, is the ultimate arbiter of good design, and it remains an elusive target.

Original article: https://arxiv.org/pdf/2602.04388.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 15:24