Author: Denis Avetisyan

As Large Language Models become increasingly integrated into complex systems, a robust framework for identifying and mitigating potential hazards is critical.

This review introduces the LLM Risk Assessment Framework (LRF) to systematically evaluate and classify risks associated with integrating Large Language Models into systems engineering processes, linking autonomy and impact levels to guide safe and scalable deployment.

Despite the transformative potential of generative artificial intelligence, its integration into critical engineering workflows introduces novel risks demanding systematic evaluation. This paper, ‘Generative AI in Systems Engineering: A Framework for Risk Assessment of Large Language Models’, addresses this challenge by introducing a structured approach to assess the risks associated with deploying Large Language Models throughout the systems engineering lifecycle. The proposed LLM Risk Assessment Framework (LRF) classifies applications based on levels of autonomy and potential impact, enabling organizations to determine appropriate validation strategies and mitigation measures. Will this framework facilitate responsible innovation and establish a foundation for standardized AI assurance practices within complex engineering domains?

The Inevitable Cascade: LLMs and the Systems They Remake

Large Language Models are increasingly integrated into systems engineering workflows, promising a substantial leap in automation and efficiency. These models are no longer confined to text generation; they are being utilized for tasks such as code synthesis, requirements analysis, and even automated testing, accelerating development cycles and reducing manual effort. Applications range from aerospace, where LLMs assist in generating complex system documentation, to automotive, where they aid in designing and validating control algorithms. This rapid deployment is driven by the models’ ability to process vast quantities of data, identify patterns, and generate solutions with minimal human intervention, fundamentally reshaping how complex systems are conceived, built, and maintained. However, realizing this potential requires careful consideration of the inherent limitations and associated risks.

The increasing deployment of Large Language Models presents novel safety challenges due to their inherent limitations. Unlike traditional software with deterministic outputs, LLMs are sensitive to even subtle changes in input prompts, meaning a seemingly minor alteration can yield drastically different, and potentially unsafe, responses. This susceptibility, coupled with the models’ propensity to generate plausible but factually incorrect information (often referred to as “hallucinations”), renders conventional safety validation techniques, designed for predictable systems, largely ineffective. Traditional methods focusing on code review and exhaustive testing struggle to account for the vast and often unpredictable range of possible LLM outputs, necessitating the development of new approaches specifically tailored to address these emergent risks and ensure reliable performance in critical applications.

Charting the Decay: A Framework for LLM Risk Assessment

The LLM Risk Assessment Framework (LRF) provides a standardized methodology for evaluating the potential hazards of systems utilizing Large Language Models (LLMs). This framework is designed to categorize risks based on a system’s capabilities and the potential consequences of its failures. By systematically assessing LLM-powered applications, the LRF aims to facilitate informed decision-making regarding development, deployment, and mitigation strategies. The framework’s output is a risk profile allowing stakeholders to understand the level of scrutiny and safeguards necessary for each specific implementation. It is intended to be adaptable across diverse use cases and scalable to accommodate evolving LLM technologies.

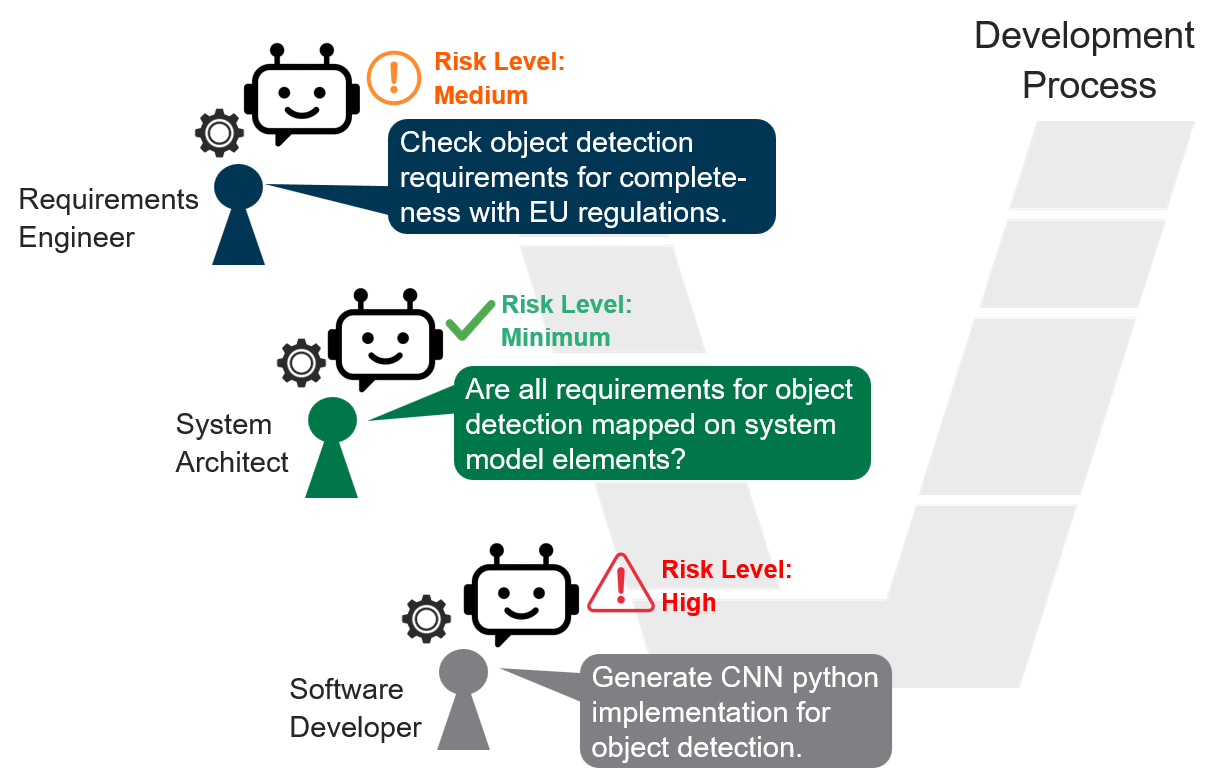

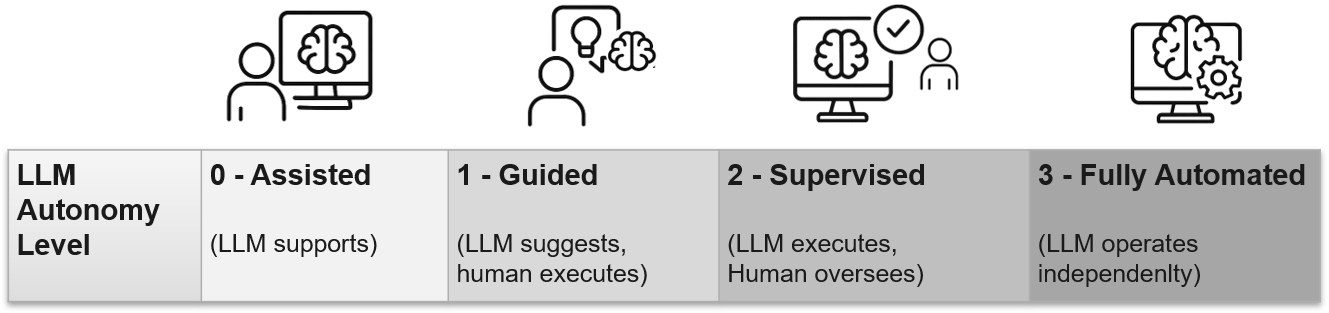

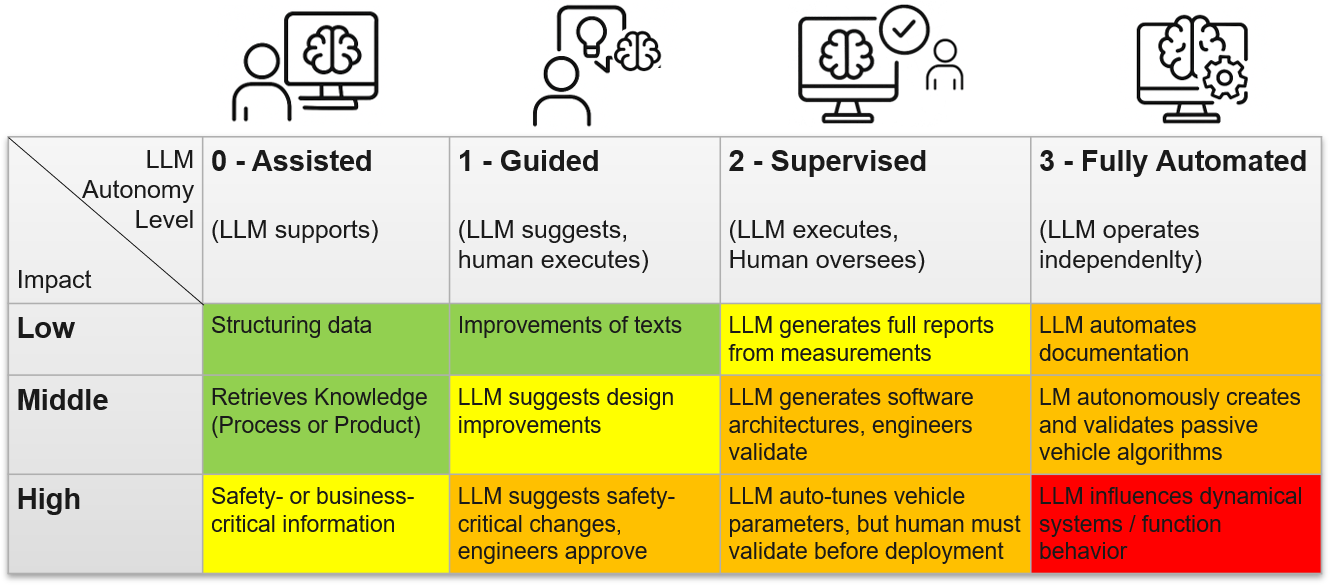

The LLM Risk Assessment Framework (LRF) employs a two-dimensional model for hazard characterization. This model assesses LLM-powered applications based on their Autonomy Level and Impact Level. Autonomy Level defines the degree to which the LLM operates independently, while Impact Level quantifies the potential severity of consequences stemming from incorrect or misleading LLM outputs. By cross-referencing these two dimensions, the LRF facilitates a structured approach to risk evaluation, allowing for categorization of applications based on their inherent hazard profile and enabling prioritization of mitigation strategies.

The Autonomy Level within the LLM Risk Assessment Framework classifies the degree to which an LLM operates independently, utilizing a four-level scale. Level 1, ‘Assisted’, denotes systems where the LLM provides suggestions or completes tasks with significant human oversight and intervention. Level 2, ‘Partially Automated’, indicates systems capable of handling defined tasks autonomously but requiring human monitoring and the ability to revert to human control. Level 3, ‘Highly Automated’, describes systems that manage most functions independently, although human intervention may be required in exceptional circumstances. Finally, Level 4, ‘Fully Automated’, represents systems capable of operating without human intervention under defined operational design domains.

The Impact Level within the LLM Risk Assessment Framework categorizes potential harm resulting from inaccurate or misleading LLM outputs. This dimension employs three levels: ‘Low’, indicating consequences primarily limited to inconvenience or minor data errors; ‘Moderate’, representing scenarios causing noticeable operational disruption, financial loss, or reputational damage; and ‘High’, denoting situations leading to severe financial loss, safety hazards, legal repercussions, or significant and widespread harm to individuals or organizations. Assignment to each level is determined by a qualitative assessment of the likely magnitude and scope of negative outcomes stemming from LLM failures.
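The two dimensions described above can be sketched as a small lookup. This is a minimal illustration, not the paper's method: the level names follow the text, but the numeric scores, the multiplication rule, the thresholds, and the intermediate class name `Moderate` are assumptions made for the example.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Four autonomy levels as named in the text."""
    ASSISTED = 1
    PARTIALLY_AUTOMATED = 2
    HIGHLY_AUTOMATED = 3
    FULLY_AUTOMATED = 4


class Impact(IntEnum):
    """Three impact levels as named in the text."""
    LOW = 1
    MODERATE = 2
    HIGH = 3


def risk_class(autonomy: Autonomy, impact: Impact) -> str:
    """Combine the two dimensions into a coarse risk class.

    ASSUMPTION: the product score and the thresholds below are
    hypothetical; the source does not state a combination rule.
    """
    score = int(autonomy) * int(impact)  # ranges from 1 to 12
    if score <= 2:
        return "Minimal"
    if score <= 6:
        return "Moderate"
    return "High"


if __name__ == "__main__":
    # Print the full grid, one row per autonomy level.
    for a in Autonomy:
        row = ", ".join(f"{i.name}={risk_class(a, i)}" for i in Impact)
        print(f"{a.name}: {row}")
```

A real assessment would replace the product rule with whatever mapping the organization agrees on. The point of the sketch is only that each application receives one autonomy value, one impact value, and a class derived from the pair.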

Validation in the Current: Demonstrating the Framework’s Utility

The LLM Risk Assessment Framework (LRF) is designed for integration into diverse systems engineering workflows, extending beyond simple LLM evaluation. Current applications include a Requirements Checker, which validates that LLM outputs adhere to specified functional and safety requirements, and a Legal Case Assessment tool, used to analyze legal documents and identify potential risks or inconsistencies. These implementations demonstrate the framework’s adaptability to tasks requiring complex information processing and risk identification, and can be extended to other areas such as policy compliance verification and failure mode analysis, providing a standardized approach to managing LLM-related risks across multiple engineering disciplines.

The LLM Risk Assessment Framework (LRF) facilitates risk identification and categorization by analyzing specific LLM functionalities against potential failure modes. This process involves detailing how an LLM’s capabilities – such as text generation, summarization, or code execution – could lead to undesirable outcomes, like the production of biased content, exposure of sensitive information, or inaccurate decision-making. Identified risks are then categorized based on severity and likelihood, enabling prioritization of mitigation strategies. The framework achieves this through a structured approach that links LLM functions to potential hazards, providing a clear audit trail and supporting comprehensive risk assessment for diverse applications.
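Linking functions to failure modes and ranking them by severity and likelihood can be sketched as a small risk register. This is an illustrative sketch only: the `Risk` class, the 1 to 5 scales, the severity-times-likelihood priority, and every numeric value are assumptions for the example, and the pairing of functions with failure modes is not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    function: str      # LLM capability under analysis
    failure_mode: str  # undesirable outcome linked to that capability
    severity: int      # ASSUMPTION: 1 (negligible) to 5 (severe)
    likelihood: int    # ASSUMPTION: 1 (rare) to 5 (frequent)

    @property
    def priority(self) -> int:
        # ASSUMPTION: simple product score; other schemes are possible.
        return self.severity * self.likelihood


def prioritize(risks):
    """Return risks ordered from highest to lowest priority."""
    return sorted(risks, key=lambda r: r.priority, reverse=True)


# Made-up entries for illustration only.
REGISTER = [
    Risk("text generation", "biased content", severity=3, likelihood=3),
    Risk("summarization", "exposure of sensitive information", severity=5, likelihood=2),
    Risk("code execution", "inaccurate decision-making", severity=4, likelihood=3),
]

if __name__ == "__main__":
    for risk in prioritize(REGISTER):
        print(f"{risk.priority:>2}  {risk.function}: {risk.failure_mode}")
```

Keeping each entry as an immutable record is one way to support the audit trail mentioned above, since a register can be versioned and compared between assessments.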

The LLM Risk Assessment Framework (LRF) is designed to integrate with established functional safety standards, specifically IEC 61508, applicable to general safety-related systems, and ISO 26262, focused on automotive safety. This alignment allows organizations already compliant with these standards to readily incorporate the LRF into their existing safety lifecycle processes. By referencing established hazard analysis techniques, the Safety Integrity Levels (SIL) defined within IEC 61508, and the Automotive Safety Integrity Levels (ASIL) defined in ISO 26262, the LRF facilitates a consistent and auditable approach to managing risks associated with LLM deployments. This compatibility reduces the barrier to adoption and ensures traceability between LLM risk assessments and pre-existing safety documentation.

Retrieval Augmented Generation (RAG) addresses limitations inherent in Large Language Models (LLMs) by supplementing the LLM’s parametric knowledge with information retrieved from external knowledge sources. This process mitigates issues like factual inaccuracies, knowledge cut-off dates, and hallucination, as the LLM bases its responses on verified, up-to-date data. Implementation involves indexing relevant documents and, at inference time, retrieving pertinent passages based on the user query before formulating a response. By grounding the LLM’s output in external evidence, RAG demonstrably improves the reliability and trustworthiness of generated text, particularly in applications demanding factual correctness and verifiable information.
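The index-then-retrieve flow described above can be sketched with a toy retriever. This is a minimal sketch under stated assumptions: it uses bag-of-words cosine similarity instead of a real embedding model, it stops at building the prompt rather than calling any LLM, and the documents, function names, and prompt wording are all made up for the example.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(documents):
    """Indexing step: precompute a term-count vector per document."""
    return [(doc, Counter(tokenize(doc))) for doc in documents]


def retrieve(index, query, k=2):
    """Inference-time step: return the k passages most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(query, passages):
    """Ground the question in the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Made-up documents for illustration only.
DOCS = [
    "The brake controller must respond within 10 ms.",
    "The infotainment unit supports Bluetooth audio.",
    "Brake pressure sensors are sampled at 1 kHz.",
]

if __name__ == "__main__":
    index = build_index(DOCS)
    question = "How fast must the brake controller respond?"
    print(build_prompt(question, retrieve(index, question, k=1)))
```

In a production setting the similarity function would typically be replaced by a vector search over learned embeddings, but the structure (index once, retrieve per query, then prompt) stays the same.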

The System Endures: LLMs Integrated into the Engineering Lifecycle

The LLM Risk Assessment Framework (LRF) isn’t intended to disrupt established engineering practices, but rather to augment them. Recognizing the maturity and effectiveness of lifecycle models like the V-Model – a sequential design and verification approach – the LRF is deliberately structured for compatibility. This allows engineers to incorporate LLM-based components into existing workflows without requiring a complete overhaul of their development processes. By mapping LLM-specific risks onto familiar lifecycle stages – from requirements gathering and design, through implementation and testing, to deployment and maintenance – the LRF promotes a systematic and predictable integration, ensuring that safety and reliability considerations are addressed at each step, mirroring the rigor of traditional engineering methodologies.

Integrating Large Language Models (LLMs) into established engineering lifecycles supports a shift towards proactive, rather than reactive, system development. Working within a familiar framework such as the V-Model means defining clear requirements, designing with LLM capabilities in mind, rigorously testing LLM interactions, and continually validating performance throughout the development process. By mapping LLM functionalities to specific lifecycle stages, engineers can maintain control, ensure traceability, and mitigate risks associated with these powerful, yet complex, technologies. This systematic approach fosters confidence in LLM-powered systems, enabling their reliable and safe deployment across various engineering domains.

Engineers developing systems powered by Large Language Models (LLMs) must prioritize safety and reliability throughout the entire development process, not as an afterthought. Proactive risk assessment, integrated at each stage of the engineering lifecycle, allows for the identification and mitigation of potential hazards before they manifest as system failures. This approach moves beyond reactive troubleshooting, fostering a preventative mindset where potential issues – stemming from unpredictable LLM behavior, data biases, or unforeseen interactions – are systematically addressed. By anticipating risks related to autonomy and potential impact, developers can implement robust safeguards, validation procedures, and fallback mechanisms, ultimately building more trustworthy and dependable LLM-powered applications.

The LLM Risk Assessment Framework (LRF) systematically evaluates potential hazards associated with Large Language Models through a meticulously designed risk matrix. This matrix classifies risks based on two key dimensions: the level of autonomy granted to the LLM and the potential impact should a failure occur. Each dimension is graded on a scale of one to four, resulting in a 4×4 grid where risks are categorized from ‘Minimal’ – representing low autonomy and limited impact – to ‘High’ – indicating substantial autonomy coupled with potentially severe consequences. This granular categorization allows engineering teams to prioritize mitigation efforts, focusing resources on the most critical vulnerabilities within LLM-powered systems and ensuring a proportionate response to identified threats.

The pursuit of integrating Large Language Models into systems engineering, as detailed in the LLM Risk Assessment Framework, inherently acknowledges the transient nature of technological systems. It’s a process of continual adaptation, recognizing that initial designs will inevitably require refinement. A remark attributed to Andrey Kolmogorov puts it this way: “The most important thing in science is not to be afraid of making mistakes.” This resonates deeply with the framework’s emphasis on systematically identifying and mitigating risks – accepting that imperfections will emerge, but establishing processes to manage them proactively. The LRF doesn’t promise absolute safety, but rather a measured approach to navigating the inherent uncertainties of complex systems, allowing for graceful evolution rather than catastrophic failure. It’s a pragmatic acceptance of system decay, coupled with a commitment to resilience.

What Lies Ahead?

The introduction of the LLM Risk Assessment Framework represents a necessary, if provisional, step. Systems, by their nature, absorb novelty, and the integration of large language models is no exception. The framework doesn’t eliminate risk – few things do – but instead offers a means of charting its contours as the landscape shifts. The true challenge lies not in predicting every possible failure mode, an exercise in futility, but in building systems that learn to age gracefully around them.

A critical area for future work resides in the dynamic calibration of autonomy levels. The framework correctly identifies the interplay between autonomy and impact, but establishing robust, real-time adjustments based on evolving model behavior will be paramount. Static assessments offer a snapshot; a living system demands continuous observation.
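One way to picture continuous observation is a monitor that lowers the permitted autonomy level when recent outputs fail validation too often. This is a purely hypothetical sketch, not something the framework specifies: the `AutonomyGovernor` class, the rolling window, the error-rate threshold, and the step-down-only policy are all assumptions for the example.

```python
from collections import deque


class AutonomyGovernor:
    """Steps the autonomy level down when the recent error rate is too high.

    ASSUMPTION: levels are integers 1 (Assisted) to 4 (Fully Automated),
    and raising a level again is left to human review, so it is not modelled.
    """

    def __init__(self, level=3, window=20, max_error_rate=0.1):
        self.level = level
        self.max_error_rate = max_error_rate
        self.outcomes = deque(maxlen=window)  # True means the output passed validation

    def record(self, output_ok):
        """Record one validated output and return the current level."""
        self.outcomes.append(output_ok)
        if len(self.outcomes) == self.outcomes.maxlen:
            error_rate = self.outcomes.count(False) / len(self.outcomes)
            if error_rate > self.max_error_rate and self.level > 1:
                self.level -= 1
                self.outcomes.clear()  # start a fresh window at the new level
        return self.level


if __name__ == "__main__":
    governor = AutonomyGovernor(level=3, window=5, max_error_rate=0.2)
    for ok in [True, False, True, False, True]:
        governor.record(ok)
    print("autonomy level:", governor.level)
```

Only reducing autonomy automatically is a conservative design choice: a false alarm costs some efficiency, whereas an automatic increase based on a short run of good outputs could raise risk without review.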

Perhaps, though, the most fruitful path lies in accepting that some risks are inherent. The pursuit of absolute safety often stifles innovation. Sometimes, observing the process of adaptation (how systems respond to unexpected inputs, how they degrade and compensate) is more valuable than attempting to accelerate the path toward an illusory state of perfection. The system will, ultimately, find its equilibrium; the question is whether it does so with resilience or collapse.

Original article: https://arxiv.org/pdf/2602.04358.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 10:19