Author: Denis Avetisyan

Researchers are integrating advanced mathematical tools with machine learning to forecast critical transitions in complex systems before they occur.

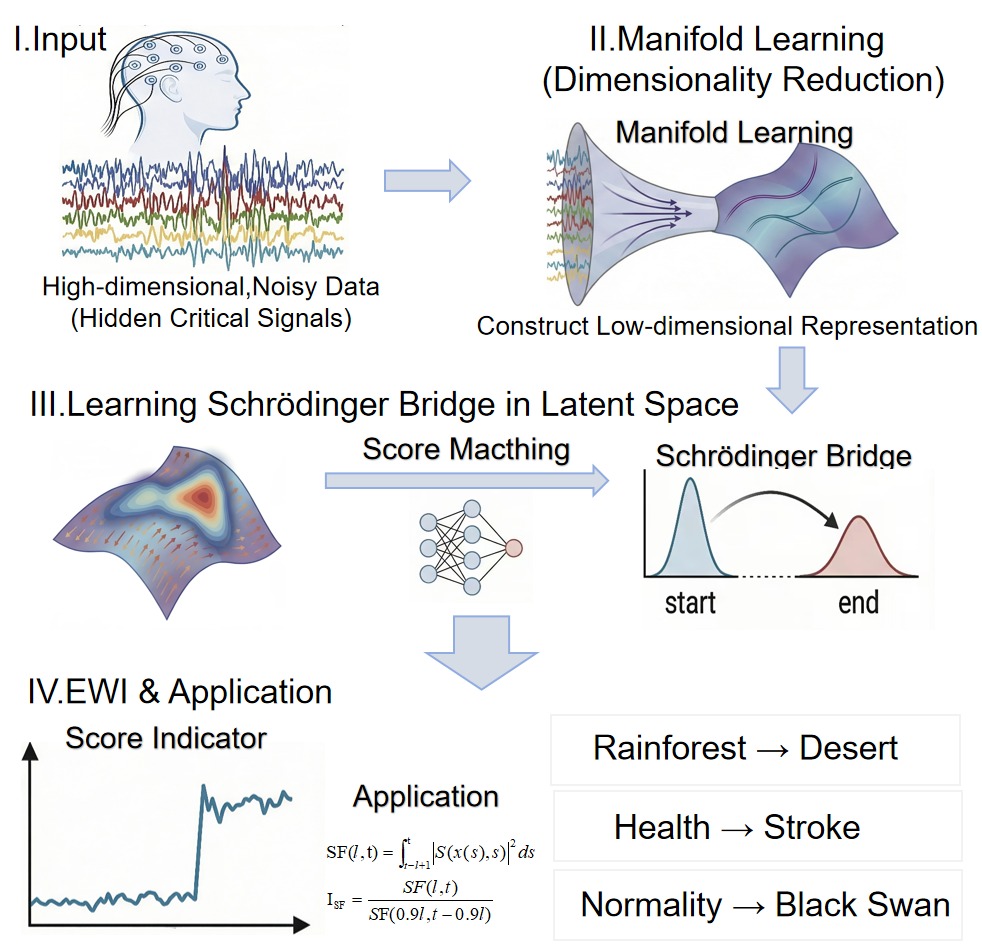

This review details a novel framework combining manifold learning, stochastic differential equation analysis, and Schrödinger bridge theory for improved early warning of critical transitions, demonstrated through epilepsy seizure prediction.

Predicting critical transitions in complex systems remains a significant challenge due to high dimensionality and subtle precursory signals. This study, titled ‘Early warning prediction: Onsager-Machlup vs Schrödinger’, introduces a novel framework integrating manifold learning with stochastic system identification and, crucially, Schrödinger bridge theory to enhance early warning signal detection. By comparing methods like diffusion maps, the authors develop a data-driven approach to estimate the probability evolution, quantified through a new Score Function indicator, and demonstrate superior performance in epilepsy seizure prediction. Could this framework offer a broadly applicable solution for identifying impending critical shifts across diverse complex systems, beyond neurological applications?

The Inevitable Cracks: Recognizing Systemic Warning Signs

Many systems, whether ecological, financial, or even social, don’t simply fail – they undergo critical transitions, shifting abruptly to a new state. However, these dramatic changes are rarely instantaneous; instead, they are frequently foreshadowed by subtle shifts in the system’s behavior, collectively known as Early Warning Indicators (EWIs). These indicators aren’t necessarily obvious; they manifest as changes in statistical properties like increased variance or autocorrelation, or as slowing down of recovery rates after small disturbances. Detecting these precursor signals is akin to identifying a hairline crack before a complete structural failure, offering a crucial window for intervention and potentially averting catastrophic outcomes. The challenge lies in discerning these meaningful changes from the inherent ‘noise’ within complex systems, but successful EWI identification represents a paradigm shift from reactive crisis management to proactive resilience building.
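To make the variance and autocorrelation indicators concrete, here is a minimal sketch in Python. It is not the paper's method: the function `rolling_ewi` and the synthetic AR(1) test signal are illustrative assumptions, chosen because a process whose memory drifts toward 1 is a common toy model of critical slowing down.

```python
import numpy as np

def rolling_ewi(x, window):
    """Return rolling variance and lag-1 autocorrelation of a 1D series."""
    x = np.asarray(x, dtype=float)
    n = len(x) - window + 1
    var = np.empty(n)
    ac1 = np.empty(n)
    for i in range(n):
        w = x[i:i + window]
        w = w - w.mean()                      # remove the local mean
        var[i] = w.var()
        denom = np.dot(w, w)
        ac1[i] = np.dot(w[:-1], w[1:]) / denom if denom > 0 else 0.0
    return var, ac1

# Hypothetical test signal: an AR(1) process whose coefficient drifts toward 1,
# so recovery from small disturbances becomes slower over time.
rng = np.random.default_rng(0)
n_steps = 4000
phi = np.linspace(0.2, 0.95, n_steps)
x = np.zeros(n_steps)
for t in range(1, n_steps):
    x[t] = phi[t] * x[t - 1] + rng.normal()

var, ac1 = rolling_ewi(x, window=500)
print(f"variance: start {var[0]:.2f}, end {var[-1]:.2f}")
print(f"lag-1 autocorrelation: start {ac1[0]:.2f}, end {ac1[-1]:.2f}")
```

Both indicators rise as the synthetic system loses resilience. On real data the window length matters a great deal, and a rising trend alone does not prove a transition is coming.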

Conventional approaches to system monitoring often falter when attempting to predict critical transitions, largely due to the inherent difficulty in discerning meaningful signals from pervasive noise and intricate interactions. These methods, frequently reliant on established thresholds or linear projections, struggle to capture the subtle, often nonlinear, changes that precede a shift in system state. Consequently, systems remain vulnerable to unexpected failure, as the early warning signs – slight decelerations, increased variance, or altered correlations – are dismissed as random fluctuations or are simply undetected within the complex web of normal operational behavior. This limitation underscores the need for innovative techniques capable of filtering noise and revealing the faint precursors to systemic collapse, allowing for proactive intervention before a critical threshold is breached.

The ability to anticipate critical transitions is paramount to effective management and enhanced resilience in a wide range of complex systems. Identifying robust Early Warning Indicators (EWIs) isn’t merely an academic pursuit; it’s a foundational requirement for proactive intervention. In climate science, EWIs could signal impending regime shifts like abrupt changes in ocean currents or rainforest dieback, allowing for mitigation strategies. Similarly, in financial markets, these indicators might foreshadow systemic risks or market crashes, enabling preemptive regulatory action. Beyond these domains, EWIs are increasingly vital in fields like ecology – tracking biodiversity loss – and even social systems – anticipating political instability. The power of EWIs lies in shifting the focus from reactive crisis management to preventative strategies, ultimately fostering greater stability and sustainability across interconnected systems.

Modeling the Unfolding: A Dynamical Systems View

A Dynamical Systems Framework analyzes systems by representing their state as points in a phase space, with evolution described by differential equations defining trajectories. This approach moves beyond static analysis by explicitly modeling how a system’s current state influences its future behavior, allowing for the identification of attractors, repellers, and saddle points which dictate long-term trends. The framework emphasizes the system’s inherent tendencies – its natural inclination toward certain states – and its sensitivities to initial conditions and parameter changes, formalized through concepts like Lyapunov exponents. By quantifying these sensitivities, the framework enables prediction of system behavior and assessment of stability, even in the presence of complex, nonlinear interactions. In its most basic form, this modeling is written $\frac{dx}{dt} = f(x)$, where $x$ is the state vector and $f$ defines the system’s dynamics.

Modeling a dynamical system as a trajectory within its state space, defined by the system’s relevant variables, allows for the identification of regions where the system’s behavior becomes sensitive to initial conditions. These regions, often characterized by bifurcations or chaotic dynamics, represent points of potential instability. By analyzing the vector field governing the trajectory, and calculating quantities like Lyapunov exponents, it becomes possible to predict qualitative changes in system behavior, including transitions between stable states. Specifically, a trajectory’s proximity to a bifurcation point indicates an increased likelihood of a transition, while positive Lyapunov exponents suggest the system is entering a chaotic regime where long-term prediction becomes fundamentally limited. This approach enables the forecasting of shifts in system dynamics without necessarily knowing the exact timing of the transition.
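The sign of the largest Lyapunov exponent can be illustrated with a deliberately simple stand-in. The sketch below uses the discrete logistic map rather than a continuous flow (an assumption made purely for brevity; the name `logistic_lyapunov` is hypothetical). The exponent is estimated as the orbit average of the log of the map's local stretching factor.

```python
import numpy as np

def logistic_lyapunov(r, n_iter=5000, n_burn=500, x0=0.4):
    """Estimate the Lyapunov exponent of x -> r*x*(1-x) along one orbit."""
    x = x0
    for _ in range(n_burn):                  # discard the transient
        x = r * x * (1 - x)
    total = 0.0
    for _ in range(n_iter):
        x = r * x * (1 - x)
        # |f'(x)| = |r*(1 - 2x)|; the tiny offset guards against log(0)
        total += np.log(abs(r * (1 - 2 * x)) + 1e-300)
    return total / n_iter

lam_stable = logistic_lyapunov(2.8)    # orbit settles on a stable fixed point
lam_chaotic = logistic_lyapunov(3.9)   # orbit wanders chaotically
print(f"r=2.8: {lam_stable:.3f}   r=3.9: {lam_chaotic:.3f}")
```

A negative value means nearby trajectories converge, a positive one means they separate exponentially. For r = 2.8 the estimate should sit close to ln 0.8, since the derivative at the fixed point is 2 − r.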

Stochastic Differential Equations (SDEs) extend the Dynamical Systems framework by incorporating random noise, represented as a Wiener process or Brownian motion, into the system’s equations of motion. This allows for the modeling of systems subject to unpredictable external forces or inherent internal fluctuations. The addition of a diffusion term to the equations introduces probabilistic behavior, meaning that trajectories are no longer deterministic but follow a probability distribution. Analyzing SDEs involves techniques such as the Fokker-Planck equation, which describes the evolution of this probability distribution, and simulations like the Euler-Maruyama method to approximate stochastic trajectories. Consequently, the impact of random fluctuations on system behavior can be quantified through metrics like the mean first passage time, the probability of exceeding a certain threshold, or the long-term distribution of states, enabling assessment of system resilience and predictability in the presence of noise.
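As a rough illustration of the Euler-Maruyama scheme mentioned above, the following sketch simulates an Ornstein-Uhlenbeck process, dx = −θx dt + σ dW. The process, its parameters, and the helper name `euler_maruyama` are assumptions chosen because the stationary variance σ²/(2θ) is known in closed form, which gives something to check against.

```python
import numpy as np

def euler_maruyama(drift, sigma, x0, dt, n_steps, rng):
    """Simulate dx = drift(x) dt + sigma dW with constant noise amplitude."""
    x = np.empty(n_steps + 1)
    x[0] = x0
    # Wiener increments are Gaussian with variance dt
    dw = rng.normal(0.0, np.sqrt(dt), size=n_steps)
    for k in range(n_steps):
        x[k + 1] = x[k] + drift(x[k]) * dt + sigma * dw[k]
    return x

theta, sigma = 1.0, 0.5

def ou_drift(x):
    return -theta * x

rng = np.random.default_rng(1)
path = euler_maruyama(ou_drift, sigma, x0=0.0, dt=0.01, n_steps=200_000, rng=rng)

empirical_var = path[20_000:].var()          # drop the initial transient
theoretical_var = sigma**2 / (2 * theta)
print(f"empirical {empirical_var:.4f} vs theoretical {theoretical_var:.4f}")
```

The same loop works for any scalar drift, so swapping in a double-well drift such as x − x³ would give a toy system with noise-induced transitions between two states.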

Distilling Complexity: Manifold Learning and Dimensionality Reduction

Datasets with a large number of dimensions, often exceeding the number of observations, present challenges for analysis and modeling due to the “curse of dimensionality”. This phenomenon causes data to become sparse, distances between points to become less meaningful, and computational complexity to increase exponentially. Consequently, the core relationships and underlying drivers of system behavior become obscured by irrelevant or redundant features. Dimensionality reduction techniques address this by transforming the high-dimensional data into a lower-dimensional representation while preserving essential information, enabling more effective visualization, analysis, and the development of predictive models. These techniques aim to identify and retain the most salient features, reducing noise and simplifying the data without significant information loss.

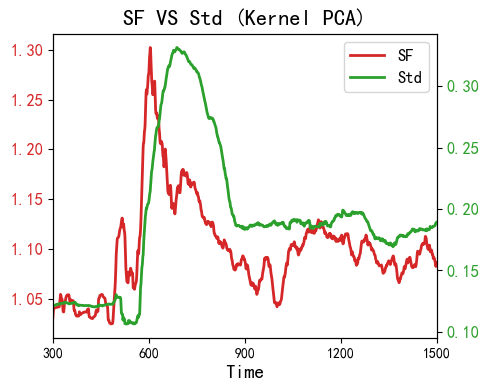

Manifold learning techniques operate on the premise that many high-dimensional datasets are not uniformly distributed in the full space, but instead concentrate near a lower-dimensional manifold. The simplest case is linear: Principal Component Analysis (PCA) identifies orthogonal linear combinations of the original features – termed principal components – that capture the maximum variance in the data, effectively projecting it onto a reduced dimensionality. Kernel PCA extends this by using kernel functions to implicitly map data into a higher-dimensional feature space, where ordinary PCA can capture structure that is nonlinear in the original coordinates. Spectral Embedding, conversely, utilizes the eigenvectors of a similarity matrix derived from the data to represent points in a lower-dimensional space, preserving local neighborhood relationships. These techniques do not simply select a subset of the original features; they create new, lower-dimensional representations designed to capture the essential structure of the data while discarding noise or redundant information.
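For the linear case, PCA fits in a few lines of NumPy via the singular value decomposition. This is a minimal sketch with a made-up dataset (points scattered along one direction in 3D plus small noise), not the diffusion-map pipeline the paper compares against; `pca` and `direction` are illustrative names.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its leading principal components using the SVD."""
    Xc = X - X.mean(axis=0)                          # center each feature
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]                   # rows are unit directions
    explained = S**2 / np.sum(S**2)                  # variance fraction per axis
    return Xc @ components.T, components, explained[:n_components]

# Hypothetical data: a 1D structure embedded in 3D with small isotropic noise
rng = np.random.default_rng(2)
t = rng.uniform(-1, 1, size=500)
direction = np.array([1.0, 2.0, -1.0]) / np.sqrt(6.0)
X = np.outer(t, direction) + 0.01 * rng.normal(size=(500, 3))

Z, components, ratio = pca(X, n_components=1)
print(f"variance captured by first component: {ratio[0]:.4f}")
```

The recovered component is a new axis, a weighted mix of all three original features, which is the sense in which these methods construct representations rather than select features.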

Dimensionality reduction techniques facilitate the identification of early warning signals by transforming high-dimensional system dynamics into a lower-dimensional, more interpretable space. This simplification allows for the detection of subtle changes in system behavior that might be obscured in the original data. By projecting data onto a reduced set of dimensions – often representing the most significant modes of variation – analysts can more easily monitor key indicators and identify deviations from normal operating conditions. These lower-dimensional representations reduce computational complexity, enabling real-time analysis and faster response to potential instabilities, and provide a clearer visualization of the system’s state space, improving the accuracy of predictive models.

Catching the Improbable: Rare Events and Path Probabilities

Large Deviations Theory (LDT) offers a mathematical formalism for assessing the probability of events that occur with exponentially small frequency. Unlike traditional probability calculations focused on typical behavior, LDT specifically addresses scenarios where outcomes deviate significantly from the expected. This is achieved by analyzing the scaling behavior of the probability as the deviation increases, often expressed through an associated rate function – or action – which quantifies the cost of observing such an improbable event. The theory is particularly relevant for systems undergoing critical transitions, where rare fluctuations can trigger shifts in macroscopic behavior. By characterizing these rare events, LDT enables the prediction and potential control of instabilities in diverse fields including physics, engineering, and finance, providing a means to move beyond analyzing average behavior and address extreme, yet possible, outcomes.
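A textbook instance of a rate function, offered here only as an illustration and not taken from the paper, is Cramér's theorem for fair coin flips. The probability that the fraction of heads in n flips is at least a (for a > 1/2) decays like exp(−n·I(a)), with I(a) = a ln(2a) + (1 − a) ln(2(1 − a)). The sketch compares this with the exact binomial tail, computed in log space to avoid underflow.

```python
import math

def rate_function(a):
    """Cramér rate function for the mean of fair coin flips, 0 < a < 1."""
    return a * math.log(2 * a) + (1 - a) * math.log(2 * (1 - a))

def log_tail_probability(n, a):
    """log P(S_n / n >= a) for S_n ~ Binomial(n, 1/2)."""
    k_min = math.ceil(n * a)
    logs = [
        math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
        - n * math.log(2)
        for k in range(k_min, n + 1)
    ]
    m = max(logs)                                    # log-sum-exp for stability
    return m + math.log(sum(math.exp(v - m) for v in logs))

a = 0.7
for n in (100, 1000, 10000):
    decay = -log_tail_probability(n, a) / n
    print(f"n={n:>5}: -log(P)/n = {decay:.4f}   I(a) = {rate_function(a):.4f}")
```

As n grows, the empirical decay rate approaches I(a), which is what it means for the rate function to quantify the cost of an improbable outcome.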

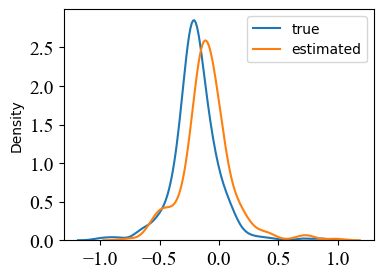

Estimating the probability of trajectories leading to system instability utilizes computational techniques such as Monte Carlo simulation in conjunction with theoretical frameworks like the Onsager-Machlup Action and the Schrödinger Bridge Problem. The Onsager-Machlup Action provides a method for calculating the probability of observing a particular path over time, particularly those deviating significantly from the system’s most probable behavior; this involves computing a functional dependent on the path itself and a small parameter related to the magnitude of the deviation. The Schrödinger Bridge Problem, rooted in stochastic calculus, offers an alternative formulation by framing the calculation as finding the conditional probability density of a path given its endpoints, allowing for the evaluation of trajectory likelihoods. Monte Carlo methods are employed to numerically integrate over the path space, approximating the probabilities that are analytically intractable for complex systems.
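To give a feel for how a path is scored, the sketch below discretizes the Onsager-Machlup functional in its standard one-dimensional, additive-noise form, S[x] = ½ ∫ [((ẋ − b(x))/σ)² + b′(x)] dt, where b is the drift and σ the noise amplitude. That form, the double-well drift b(x) = x − x³, and the function names are all assumptions for illustration; the paper's own setting may differ.

```python
import numpy as np

def onsager_machlup_action(path, dt, drift, drift_prime, sigma):
    """Midpoint discretization of the 1D Onsager-Machlup action."""
    path = np.asarray(path, dtype=float)
    velocity = np.diff(path) / dt
    mid = 0.5 * (path[:-1] + path[1:])               # evaluate drift at midpoints
    lagrangian = (0.5 * ((velocity - drift(mid)) / sigma) ** 2
                  + 0.5 * drift_prime(mid))
    return np.sum(lagrangian) * dt

def drift(x):
    return x - x**3            # hypothetical double-well drift, wells at x = -1, +1

def drift_prime(x):
    return 1.0 - 3.0 * x**2

dt, n_steps, sigma = 0.01, 500, 0.5
resting = np.full(n_steps + 1, -1.0)                 # stays in the left well
crossing = np.linspace(-1.0, 1.0, n_steps + 1)       # straight-line transition

s_rest = onsager_machlup_action(resting, dt, drift, drift_prime, sigma)
s_cross = onsager_machlup_action(crossing, dt, drift, drift_prime, sigma)
print(f"action at rest: {s_rest:.3f}   action for crossing: {s_cross:.3f}")
```

A lower action corresponds to a more probable path, so the path that stays in its well scores lower than the one forced across the barrier. A straight line is not the most probable crossing path; finding that would mean minimizing the action over paths with fixed endpoints.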

Score matching and diffusion models represent alternative probabilistic frameworks for analyzing rare event dynamics. These methods estimate probability distributions by learning the score function – the gradient of the log probability density – circumventing the need for direct probability normalization, which is often intractable for rare events. Applied to epilepsy seizure prediction, this framework has demonstrated enhanced performance, identifying the critical transition point to seizure with greater temporal advance and clarity compared to traditional state-space methods and bifurcation analysis. Specifically, the diffusion modeling approach enables more accurate characterization of the pre-seizure state, leading to improved early warning capabilities and potentially reducing false alarm rates.
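The idea of learning a score without ever normalizing a density can be shown in the smallest possible setting. The sketch below fits a linear score model s(x) = a·x + b by minimizing the implicit score-matching objective E[½ s(x)² + s′(x)]. Setting its derivatives with respect to a and b to zero gives a = −1/Var(x) and b = mean/Var(x). The linear model and the Gaussian test data are assumptions for illustration; the paper's Score Function indicator is built on far richer models.

```python
import numpy as np

def fit_linear_score(samples):
    """Closed-form minimizer of E[0.5*s(x)^2 + s'(x)] for s(x) = a*x + b."""
    x = np.asarray(samples, dtype=float)
    mean, var = x.mean(), x.var()
    a = -1.0 / var
    b = mean / var
    return a, b

# Hypothetical data: Gaussian with mean 2 and standard deviation 0.5,
# whose true score is -(x - 2) / 0.25 = -4x + 8.
rng = np.random.default_rng(3)
samples = rng.normal(loc=2.0, scale=0.5, size=50_000)

a, b = fit_linear_score(samples)
print(f"fitted score: s(x) = {a:.2f} * x + {b:.2f}")
```

The fit needs only sample averages, never the normalizing constant of the density, which is the property that makes score-based methods attractive when that constant is out of reach.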

A Networked View: Resilience in Interconnected Systems

The inherent interconnectedness of many real-world systems – from ecological communities and social groups to the human brain and global infrastructure – necessitates a modeling approach that moves beyond isolated components. Complex network theory provides precisely this framework, representing systems as collections of nodes – the individual components – linked by edges that signify relationships or dependencies. This perspective allows researchers to shift focus from analyzing individual elements in isolation to understanding how interactions between those elements give rise to emergent behaviors and overall system dynamics. By mapping these connections, it becomes possible to quantify systemic properties like robustness, fragility, and the propagation of disturbances, offering insights unattainable through traditional reductionist approaches. The power of this methodology lies in its ability to capture not just what is connected, but how those connections influence the system’s response to change and its capacity to maintain function in the face of disruption.

Master Stability Function (MSF) analysis provides a powerful mathematical framework for understanding the collective behavior of complex networks. The technique separates the dynamics of individual nodes from the network’s topology: the stability of a synchronized state is assessed from the linearized dynamics of a single node – its Jacobian matrix, a representation of how the system changes in response to perturbations – evaluated at the eigenvalues of the network’s coupling matrix. By analyzing these eigenvalues, researchers can determine the range of coupling strengths over which the collective state remains stable and the point at which the network transitions to instability. Crucially, the MSF indicates which modes of perturbation grow across the network, helping to identify where a disruption is most likely to take hold and how quickly it might cascade. This capability is particularly valuable in fields like neuroscience and epidemiology, where understanding the spread of activity or disease is paramount, and offers a means to proactively manage and mitigate systemic risks before they escalate.
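The topology side of this analysis comes down to an eigenvalue computation. The sketch below assumes identical, diffusively coupled nodes on an undirected network, which is the classic MSF setting but is not stated in the article. It computes the graph Laplacian spectrum and the ratio of largest to smallest nonzero eigenvalue, a common synchronizability measure when the stable region of the MSF is a bounded interval. The function names are illustrative.

```python
import numpy as np

def laplacian_spectrum(adjacency):
    """Sorted eigenvalues of the graph Laplacian L = D - A (undirected graph)."""
    A = np.asarray(adjacency, dtype=float)
    L = np.diag(A.sum(axis=1)) - A
    return np.sort(np.linalg.eigvalsh(L))

def synchronizability_ratio(adjacency):
    """Largest over smallest nonzero Laplacian eigenvalue (connected graph)."""
    eig = laplacian_spectrum(adjacency)
    return eig[-1] / eig[1]

n = 6
complete = np.ones((n, n)) - np.eye(n)               # every node linked to all others
ring = np.zeros((n, n))
for i in range(n):
    ring[i, (i + 1) % n] = ring[(i + 1) % n, i] = 1  # nearest-neighbour ring

print(f"complete graph ratio: {synchronizability_ratio(complete):.3f}")
print(f"ring graph ratio:     {synchronizability_ratio(ring):.3f}")
```

A smaller ratio means the scaled eigenvalues fit more easily inside a bounded stability interval, so under these assumptions the densely connected network is easier to keep synchronized than the ring.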

An innovative approach to predicting critical transitions in complex, interconnected systems centers on the development of Early Warning Signal Networks. This methodology systematically identifies subtle, precursory fluctuations – dynamic changes occurring before a system shifts into a new state – and has demonstrated notable success in capturing these signals in the context of neurological seizures. The network’s predictive capacity is underpinned by a rigorous mathematical framework, establishing a high-probability error bound – quantified as $\le C_1(\varepsilon_{\mathrm{man}} + \varepsilon_b + \varepsilon_\sigma)$ – that defines the limits of potential false positives and provides a quantifiable measure of reliability for the early warning potential. This precision is crucial for applications ranging from anticipating epileptic events to managing critical infrastructure and potentially even forecasting financial crises, offering a proactive rather than reactive strategy for managing complex systems.

The pursuit of predictive frameworks, as demonstrated by this integration of manifold learning and Schrödinger bridge theory, invariably encounters the limits of idealization. This paper attempts to anticipate critical transitions – epilepsy seizures, specifically – with a mathematical elegance that feels…optimistic. It reminds one that even the most sophisticated models are ultimately approximations of messy reality. As Immanuel Kant observed, “All our knowledge begins with the senses.” This work builds an elaborate structure, but the true test, predictably, will be found in the noise of production data. The system will inevitably encounter edge cases, unforeseen variables, and the simple fact that perfect prediction is a fiction. It’s an expensive way to complicate everything, but someone will inevitably deploy it.

Sooner or Later, It Breaks

The integration of Schrödinger bridge theory with manifold learning, as demonstrated, offers a mathematically pleasing route to early warning systems. However, the inherent assumption of a smoothly evolving, underlying manifold feels… optimistic. Production systems rarely adhere to such elegant constraints. The true test, predictably, will be how gracefully this framework degrades when confronted with data that isn’t neatly differentiable, or when the ‘critical transition’ isn’t a transition at all, but a particularly noisy fluctuation.

Future work will inevitably focus on robustness – specifically, on mitigating the effects of model misspecification and high-dimensional noise. A deeper exploration of the score function’s sensitivity to these factors is warranted. More broadly, this approach sidesteps the thorny issue of defining a ‘critical transition’ in the first place. Is it a bifurcation? A sudden change in statistical properties? The math works either way, but the practical interpretation remains… fluid.

Ultimately, the field circles back to the age-old problem: everything new is old again, just renamed and still broken. The promise of ‘early warning’ is alluring, but one suspects the most valuable output will be a more sophisticated catalog of false positives. Production is, after all, the best QA, and it will find a way to prove the theory wrong.

Original article: https://arxiv.org/pdf/2602.00143.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-03 14:46