Author: Denis Avetisyan

A new approach dynamically adjusts regularization to improve the accuracy and robustness of time series predictions.

DropoutTS introduces sample-adaptive dropout, leveraging spectral analysis to modulate model capacity and reduce overfitting in time series forecasting.

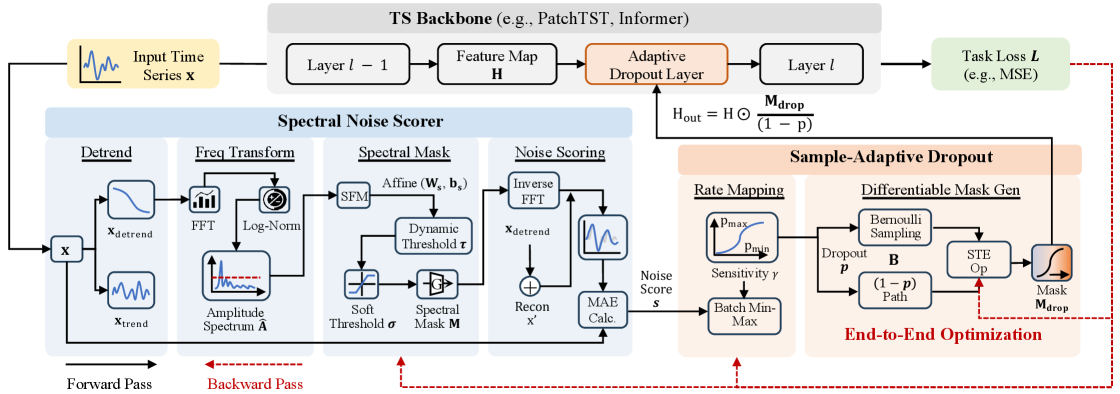

Deep time series models, despite their increasing sophistication, remain vulnerable to the pervasive noise inherent in real-world data. To address this limitation, the paper ‘DropoutTS: Sample-Adaptive Dropout for Robust Time Series Forecasting’ introduces a model-agnostic technique that dynamically calibrates learning capacity through sample-adaptive dropout. By using spectral analysis to quantify noise at the level of individual instances, DropoutTS selectively suppresses spurious fluctuations while preserving informative signal components. It improves performance without architectural modifications or costly prior noise quantification. Could this approach to adaptive regularization unlock new levels of robustness across diverse time series forecasting applications?

The Inevitable Noise: Why Time Series Forecasting Remains a Sisyphean Task

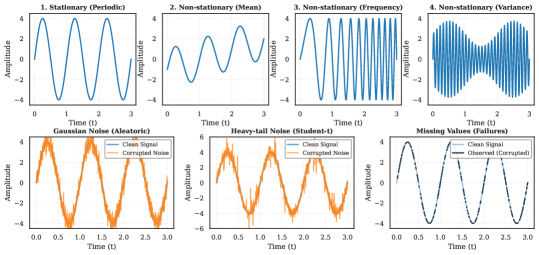

The ability to accurately predict future values in a time series – a sequence of data points indexed in time order – underpins critical decision-making across a remarkably diverse range of fields. From forecasting stock prices and managing energy grids to predicting weather patterns and tracking disease outbreaks, reliable time series analysis is paramount. However, real-world datasets are rarely pristine; they are invariably contaminated by noise – random variations that obscure the underlying signal. This noise can originate from measurement errors, unobserved influencing factors, or inherent randomness within the system itself. Consequently, developing forecasting models robust to these imperfections is a significant challenge, as even small amounts of noise can drastically reduce predictive accuracy and limit the practical applicability of these techniques. The pervasiveness of noisy data necessitates sophisticated methodologies capable of distinguishing genuine trends from spurious fluctuations.

The efficacy of many time series forecasting techniques hinges on the assumption of consistent noise levels within the data, but this assumption frequently breaks down in real-world applications. When noise isn’t stationary – meaning its statistical properties change over time – or is heteroscedastic, exhibiting varying levels of intensity, traditional forecasting models often struggle to generalize beyond the training data. This is because models optimized for a specific noise profile become unreliable when confronted with unseen data exhibiting different noise characteristics. Consequently, forecasts can be significantly skewed, leading to inaccurate predictions and potentially flawed decision-making, particularly in fields like finance, weather prediction, and resource management where reliable forecasting is paramount.

A prevalent technique for preventing overfitting in time series forecasting models is dropout, in which units (neurons) are randomly deactivated during training. Standard (fixed) dropout, however, applies a uniform deactivation rate regardless of how much noise the data contain. This is insufficient for heteroscedastic time series, whose noise level varies. When noise is low, fixed dropout unnecessarily restricts model capacity and hinders the learning of intricate patterns. Conversely, during periods of high noise, a constant dropout rate may be too weak to regularize the model, leading to overfitting and poor generalization. A static dropout rate therefore fails to adapt to the shifting noise landscape of real-world time series, limiting the model’s ability to forecast future values accurately.

DropoutTS: A Pragmatic Response to the Inevitable Messiness of Data

DropoutTS introduces a regularization technique that differs from traditional methods by adjusting model capacity dynamically, on a per-instance basis. Instead of applying a fixed regularization strength to all data, it analyzes each individual time series segment to determine the appropriate level of regularization. By assessing instance-level noise, the model applies stronger regularization to noisier segments and weaker regularization to cleaner ones. This adaptive approach aims to prevent overfitting to noise while retaining what the model learns from reliable data, improving generalization and robustness.
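A minimal NumPy sketch of the per-instance idea follows. It assumes the drop probability for each sample has already been computed. The function name `sample_adaptive_dropout` and the example rates are hypothetical, and this is not the authors’ code. It uses the common inverted-dropout convention: kept units are rescaled by 1/(1 − p), so expected activations match those seen at inference time.

```python
import numpy as np


def sample_adaptive_dropout(h, rates, rng):
    """Inverted dropout with one drop probability per sample (hypothetical helper).

    h:     activations, shape (batch, features)
    rates: drop probabilities, shape (batch,), each in [0, 1)
    """
    rates = np.asarray(rates, dtype=float)[:, None]  # broadcast each rate over features
    keep = 1.0 - rates
    mask = rng.random(h.shape) < keep                 # True = unit is kept
    # Rescale kept units so the expected activation equals the no-dropout value.
    return np.where(mask, h / keep, 0.0)


rng = np.random.default_rng(0)
h = np.ones((3, 10_000))
rates = [0.0, 0.2, 0.5]  # illustrative: clean, moderately noisy, very noisy sample
out = sample_adaptive_dropout(h, rates, rng)
print((out == 0).mean(axis=1))  # fraction of dropped units per sample, close to rates
print(out.mean(axis=1))         # close to 1.0 for every sample
```

With a rate of 0, the first sample passes through unchanged. The noisier samples lose roughly 20% and 50% of their units. In a real model, the rates would come from a noise scorer rather than being hard-coded.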

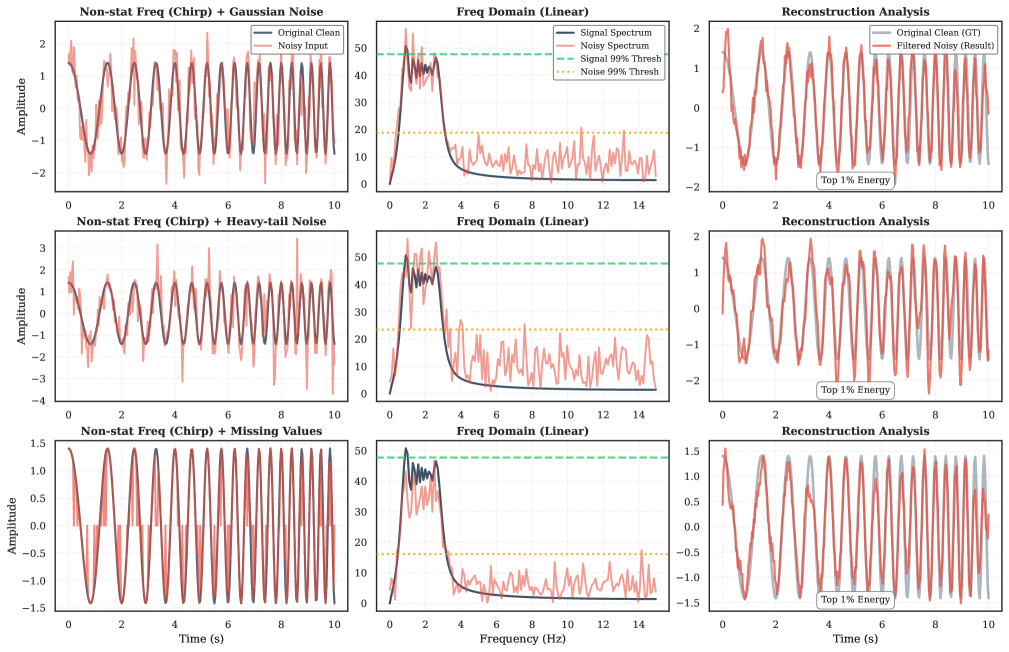

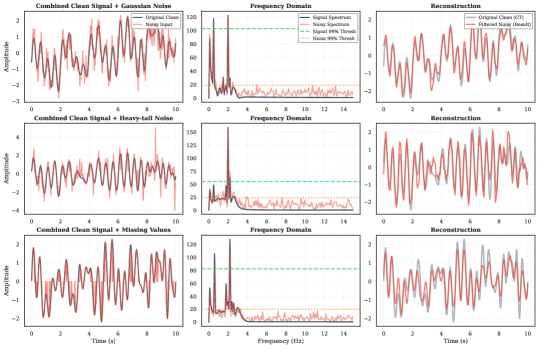

DropoutTS utilizes spectral analysis to quantify noise within time series data by examining the frequency content of individual segments. This approach is based on the principle that noisy signals exhibit a broader, more diffuse spectral distribution compared to clean, coherent signals. Specifically, the method computes the power spectral density (PSD) of each time series segment and analyzes its characteristics. Segments with a flatter PSD – indicating energy distributed across a wider range of frequencies – are identified as potentially noisy. This provides a quantifiable metric for noise levels, enabling the system to differentiate between complex, but valid, signals and those corrupted by noise, and thereby facilitating adaptive regularization strategies.
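The sketch below makes the “flatter PSD means noisier” intuition concrete. It estimates the PSD of a segment with a plain periodogram (the squared magnitude of the real FFT) and compares a pure sinusoid with white noise. The periodogram is an assumption chosen for simplicity; the source does not say which PSD estimator DropoutTS uses.

```python
import numpy as np


def periodogram(x):
    """One-sided PSD estimate: squared rfft magnitude divided by the length."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                      # remove the DC offset
    return np.abs(np.fft.rfft(x)) ** 2 / len(x)


n = 256
t = np.arange(n)
rng = np.random.default_rng(1)
clean = np.sin(2 * np.pi * 8 * t / n)     # all energy at one frequency (bin 8)
noisy = rng.standard_normal(n)            # white noise: energy spread over all bins

for name, x in [("clean", clean), ("noisy", noisy)]:
    p = periodogram(x)
    share = p.max() / p.sum()             # share of energy in the strongest bin
    print(name, round(share, 3))
```

The sinusoid puts essentially all of its energy into a single bin. White noise spreads its energy across every bin, so its strongest bin holds only a small share of the total.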

Sample-adaptive dropout in DropoutTS uses a spectral flatness measure to adjust regularization strength for each sample. Spectral flatness is the geometric mean of the power spectrum divided by its arithmetic mean. It ranges from near 0 for a spectrum dominated by a few peaks to 1 for a perfectly flat spectrum, and so indicates signal complexity. Lower values mean concentrated spectral energy, typical of simpler and potentially more reliable signals. Higher values mean a broader spectral distribution and increased complexity. This measure then calibrates the dropout probability. Lower spectral flatness results in less dropout (less regularization), preserving information from potentially reliable segments. Higher spectral flatness triggers more dropout, limiting the influence of complex or noisy segments. Traditional dropout, by contrast, applies a fixed regularization strength across all samples.
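Below is a sketch of the flatness computation, together with one possible way to turn it into a drop probability. The linear mapping and its bounds `p_min` and `p_max` are hypothetical, chosen for illustration. The source states only that lower flatness should yield less dropout and higher flatness more.

```python
import numpy as np


def spectral_flatness(x, eps=1e-12):
    """Geometric mean of the power spectrum divided by its arithmetic mean.

    By the AM-GM inequality the result lies in (0, 1]: close to 0 when a few
    peaks dominate, and equal to 1 only for a perfectly flat spectrum.
    """
    x = np.asarray(x, dtype=float)
    # Power spectrum without the DC bin; eps keeps log() finite for empty bins.
    power = np.abs(np.fft.rfft(x - x.mean()))[1:] ** 2 + eps
    geometric_mean = np.exp(np.mean(np.log(power)))
    return geometric_mean / np.mean(power)


def flatness_to_dropout(flatness, p_min=0.05, p_max=0.5):
    """Hypothetical linear map: flatter (noisier) spectrum -> higher drop probability."""
    return p_min + (p_max - p_min) * flatness


n = 256
t = np.arange(n)
sine = np.sin(2 * np.pi * 8 * t / n)
noise = np.random.default_rng(2).standard_normal(n)

for name, x in [("sine", sine), ("noise", noise)]:
    sf = spectral_flatness(x)
    print(f"{name}: flatness={sf:.3f}, dropout={flatness_to_dropout(sf):.3f}")
```

The flatness of a single white-noise periodogram typically sits around 0.5 to 0.6 rather than 1. Individual periodogram values fluctuate strongly, and the geometric mean is pulled down by the small ones. Any mapping to dropout rates would need to be calibrated with this in mind.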

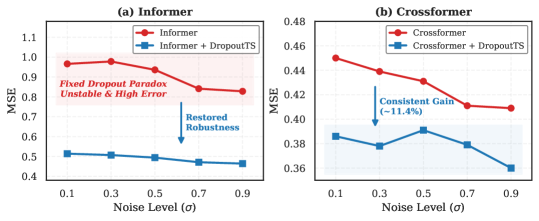

DropoutTS improves model robustness and reduces generalization error through adaptive regularization. Evaluations across diverse datasets report gains of up to 46.0% in adversarial robustness, that is, in performance on intentionally perturbed inputs. The improvement comes from adjusting regularization strength to instance-level noise characteristics. This limits the impact of noisy data and helps the model generalize to unseen examples. Actual gains vary with the dataset and with the adversarial attack employed.

Deconstructing the Noise: How Spectral Analysis Reveals the Signal’s True Form

The spectral noise scorer, central to DropoutTS’s noise assessment, transforms time series segments from the time domain into the frequency domain using techniques such as the Fast Fourier Transform (FFT). This decomposition isolates the signal’s constituent frequencies and shows how its energy is distributed across them. By examining the magnitude of each frequency component, the scorer identifies patterns that indicate noise. Energy in bands outside the dominant components, or an erratic distribution across many bands, can signal its presence. This frequency-based approach quantifies noise independently of the series’ overall magnitude and, once detrended, of its trend. The result is a more robust and granular noise profile than methods that operate solely in the time domain.
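One simple way to make a spectral profile independent of a series’ scale is to remove the mean and divide each bin’s power by the total power. This normalization is offered as an assumption, not as the paper’s exact method. The sketch checks that rescaling and shifting a series leaves the resulting energy distribution unchanged.

```python
import numpy as np


def normalized_spectrum(x):
    """Share of total (non-DC) spectral energy in each frequency bin."""
    x = np.asarray(x, dtype=float)
    power = np.abs(np.fft.rfft(x - x.mean()))[1:] ** 2
    return power / power.sum()


rng = np.random.default_rng(3)
t = np.arange(128)
x = np.sin(2 * np.pi * 5 * t / 128) + 0.3 * rng.standard_normal(128)

# Changing units (e.g. kW vs MW) or shifting the level leaves the distribution unchanged.
print(np.allclose(normalized_spectrum(x), normalized_spectrum(1000 * x + 50)))
```

Removing the mean handles constant offsets, and dividing by the total handles scale. A linear trend is not removed by either step, which is why a separate detrending step is needed.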

Global linear detrending is a preprocessing step applied before spectral decomposition so that non-stationary trends do not distort noise estimation. It fits a linear regression to the entire series and subtracts the fitted line, removing systematic increases or decreases over time. By isolating fluctuations around a zero mean, detrending ensures that the subsequent spectral analysis reflects those fluctuations rather than the trend itself. This matters for two reasons. First, a trend concentrates large amounts of energy at the lowest frequencies. Second, the FFT implicitly treats each window as periodic, so the jump between the window’s last and first values leaks energy across the whole spectrum. Left uncorrected, both effects can inflate the apparent noise level and distort the noise score.
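The detrending step can be sketched with an ordinary least-squares line fit (`np.polyfit` with degree 1); the function name is illustrative. After subtraction, the residual has zero mean and zero fitted slope, so whatever structure remains is fluctuation around the trend.

```python
import numpy as np


def linear_detrend(x):
    """Fit a least-squares line to the whole series and subtract it."""
    x = np.asarray(x, dtype=float)
    t = np.arange(len(x))
    slope, intercept = np.polyfit(t, x, deg=1)  # coefficients, highest degree first
    return x - (slope * t + intercept)


t = np.arange(200)
x = 0.05 * t + np.sin(2 * np.pi * t / 20)  # upward trend plus a period-20 cycle
r = linear_detrend(x)
print(abs(r.mean()) < 1e-9)                        # residual mean is (numerically) zero
print(abs(np.polyfit(t, r, deg=1)[0]) < 1e-9)      # residual slope is (numerically) zero
```

If SciPy is available, `scipy.signal.detrend(x, type='linear')` performs the same least-squares line removal.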

The DropoutTS method utilizes spectral decomposition to isolate noise by calculating the residual signal after reconstructing the time series from its dominant frequency components. This process effectively differentiates noise from the underlying signal; any energy remaining in the residual spectrum after reconstruction is attributed to noise. Quantification is achieved by measuring the magnitude of these spectral residuals, providing a direct assessment of noise levels within each time series instance. This allows for a granular, instance-specific noise profile, enabling precise identification and characterization of noise components independent of the overall signal strength.
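The residual-based scorer can be sketched under two assumptions. “Dominant frequency components” is taken to mean the largest-magnitude bins of the real FFT, and the score is taken to be the residual’s share of total energy. The name `spectral_residual_score` and the `keep_frac` parameter are hypothetical, and the paper’s exact selection rule and normalization may differ. Dividing by total energy is what makes the score independent of overall signal strength.

```python
import numpy as np


def spectral_residual_score(x, keep_frac=0.1):
    """Share of a segment's energy NOT explained by its dominant frequencies.

    keep_frac (hypothetical) is the fraction of rfft bins treated as signal.
    """
    x = np.asarray(x, dtype=float)
    t = np.arange(len(x))
    x = x - np.polyval(np.polyfit(t, x, 1), t)   # global linear detrend
    spec = np.fft.rfft(x)
    k = max(1, int(np.ceil(keep_frac * len(spec))))
    top = np.argsort(np.abs(spec))[-k:]          # k largest-magnitude bins
    kept = np.zeros_like(spec)
    kept[top] = spec[top]
    signal = np.fft.irfft(kept, n=len(x))        # reconstruction from dominant bins
    residual = x - signal                        # leftover energy is attributed to noise
    return float(np.sum(residual ** 2) / (np.sum(x ** 2) + 1e-12))


rng = np.random.default_rng(4)
t = np.arange(256)
base = np.sin(2 * np.pi * 6 * t / 256) + 0.5 * np.sin(2 * np.pi * 15 * t / 256)
for sigma in (0.0, 0.3, 1.0):
    x = base + sigma * rng.standard_normal(256)
    print(f"noise std {sigma}: score={spectral_residual_score(x):.3f}")
```

The kept and discarded bins are orthogonal, so the score always lies between 0 and 1. It rises as more of the segment’s energy falls outside its dominant frequencies.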

Analysis across seven real-world datasets indicates a high degree of spectral sparsity. The Pearson correlation coefficient between the original series and a reconstruction that retains only the top 10% of spectral energy exceeds 0.92. This confirms that a substantial portion of the signal can be represented by a limited number of dominant frequencies. It also suggests that much of the remaining spectral content is negligible noise or redundant information. Because the correlation is consistently high across benchmarks, this sparsity appears to be a robust property of the evaluated data.
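The sparsity check can be illustrated on synthetic data. Here “top 10% of spectral energy” is interpreted as the 10% of real-FFT bins with the largest magnitude, which is an assumption. The series is a made-up stand-in with daily and weekly cycles, not one of the paper’s datasets, so the result illustrates the check rather than reproducing the reported figure.

```python
import numpy as np


def topk_reconstruction(x, keep_frac=0.1):
    """Rebuild x from the keep_frac share of rfft bins with the largest magnitude."""
    x = np.asarray(x, dtype=float)
    spec = np.fft.rfft(x)
    k = max(1, int(np.ceil(keep_frac * len(spec))))
    top = np.argsort(np.abs(spec))[-k:]
    kept = np.zeros_like(spec)
    kept[top] = spec[top]
    return np.fft.irfft(kept, n=len(x))


# Synthetic hourly series: eight weeks with daily and weekly cycles plus noise.
rng = np.random.default_rng(5)
t = np.arange(24 * 7 * 8)
x = (np.sin(2 * np.pi * t / 24) + 0.5 * np.sin(2 * np.pi * t / (24 * 7))
     + 0.3 * rng.standard_normal(len(t)))

r = np.corrcoef(x, topk_reconstruction(x))[0, 1]  # Pearson correlation
print(round(r, 3))
```

Because a handful of bins carry the periodic structure, the reconstruction keeps most of the variance, and the correlation stays high even though 90% of the bins are discarded.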

Beyond the Horizon: The Potential, and Inevitable Limitations, of Adaptive Time Series Modeling

DropoutTS moves beyond traditional, fixed regularization toward a framework for more reliable time series models. Rather than only preventing overfitting, it adapts to the noise characteristics of each input. Using spectral analysis, it identifies noisy samples and regularizes them more strongly, which improves performance and generalization. The aim is not merely lower error metrics but forecasts that remain trustworthy under real-world noise, a property that matters in applications ranging from financial forecasting to energy demand prediction.

DropoutTS differs from conventional regularization in that it adapts to fluctuating noise levels, a property of many time series datasets known as heteroscedasticity. Traditional methods apply uniform regularization, which is suboptimal when the noise variance changes over time. DropoutTS instead quantifies noise with spectral analysis and regularizes each part of the series according to its noise characteristics. On the Electricity dataset with the Informer model, this adaptive regularization reduced mean squared error by up to 68.0%. This suggests it can substantially improve forecasting accuracy and reliability in real-world applications where noise patterns are non-stationary.
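The sketch below shows why per-sample regularization matters under heteroscedastic noise. A synthetic series whose noise level rises over time is cut into fixed-length segments. Each segment is scored with spectral flatness and mapped to a drop probability. The segment length, the noise levels, and the linear mapping are all hypothetical choices, not settings from the paper.

```python
import numpy as np


def spectral_flatness(x, eps=1e-12):
    """Geometric / arithmetic mean of the power spectrum (DC bin excluded)."""
    power = np.abs(np.fft.rfft(x - np.mean(x)))[1:] ** 2 + eps
    return np.exp(np.mean(np.log(power))) / np.mean(power)


rng = np.random.default_rng(6)
n_seg, seg_len = 6, 128
t = np.arange(n_seg * seg_len)
# Heteroscedastic noise: the standard deviation steps up over time.
sigma = np.repeat([0.05, 0.05, 0.5, 0.5, 2.0, 2.0], seg_len)
x = np.sin(2 * np.pi * t / 32) + sigma * rng.standard_normal(len(t))

segments = x.reshape(n_seg, seg_len)             # one row per training instance
scores = np.array([spectral_flatness(s) for s in segments])
rates = 0.05 + 0.45 * scores                     # hypothetical flatness -> dropout map
for i, (s, r) in enumerate(zip(scores, rates)):
    print(f"segment {i}: flatness={s:.3f}  dropout={r:.3f}")
```

A fixed dropout rate would treat all six segments alike. Here, the quiet segments receive close to the minimum rate, and the noisiest ones receive a substantially higher rate.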

The methodology underpinning DropoutTS extends beyond time series analysis, offering a novel approach to noise quantification applicable across diverse signal processing and data analysis fields. By characterizing noise through its spectral properties – specifically, analyzing the frequency distribution of unwanted signal components – researchers can move beyond simplistic assumptions of noise as purely random. This spectral lens allows for a more nuanced understanding of noise characteristics, enabling the development of targeted filtering and regularization techniques optimized for specific noise profiles. Consequently, this approach isn’t limited to improving model accuracy; it also facilitates more efficient data processing by allowing algorithms to focus on relevant signals and discard noise with greater precision, potentially accelerating convergence and reducing computational costs in various applications beyond time series forecasting.

DropoutTS may also prove useful as an adaptive regularization technique in other machine learning applications. Investigations into domains with noisy or variable data suggest that its spectral filtering can accelerate model training. Initial projections indicate a possible reduction of up to 31% in training time, attributed to faster convergence. The speed-up comes from focusing computation on the most informative features, which limits the impact of noise and makes optimization more efficient across a broader range of data-driven tasks.

The pursuit of ever-more-complex time series forecasting models feels a bit like building sandcastles against the tide. This paper, with its DropoutTS, attempts to fortify those structures against spectral noise – a predictable source of erosion, one might add. It’s amusing to see researchers meticulously crafting adaptive regularization; it’s essentially admitting that even the most elegant algorithms require a healthy dose of controlled chaos to survive production. As Robert Tarjan once observed, ‘Complexity is not a virtue.’ DropoutTS appears to be adding complexity, but with the stated goal of increasing robustness. One suspects, however, that it’s merely delaying the inevitable accumulation of tech debt. The system will eventually crash, it always does; at least this approach offers a slightly more graceful failure mode.

The Road Ahead

DropoutTS, with its spectral noise-driven regularization, represents a predictable refinement, not a revolution. The pursuit of ‘robustness’ in time series forecasting will inevitably reveal that today’s outlier is tomorrow’s commonplace. The elegance of dynamically adjusting dropout rates based on frequency content will, in production, encounter unforeseen harmonic distortions and non-stationary noise sources – problems always lurking beyond the validation set. It is a solid step, certainly, but one anticipates a future landscape littered with increasingly complex noise models attempting to capture realities the current formulation simply cannot.

The emphasis on spectral analysis is noteworthy. However, focusing solely on frequency-domain characteristics risks overlooking the subtle, yet pervasive, influence of temporal dependencies that defy simple Fourier decomposition. One suspects the next iteration will involve hybrid approaches, perhaps combining spectral dropout with attention mechanisms or learned representations capable of capturing long-range temporal dynamics. The question isn’t whether these methods will improve accuracy on benchmark datasets – they almost certainly will – but whether they will genuinely generalize to the messy, unpredictable data encountered outside the laboratory.

Ultimately, the field will circle back to a familiar truth: perfect forecasting is an illusion. The goal will shift, as it always does, from minimizing error to managing uncertainty. Methods that gracefully degrade in the face of unforeseen circumstances, rather than catastrophically failing, will prove the most valuable. If all tests pass, it’s because they test nothing of real-world complexity.

Original article: https://arxiv.org/pdf/2601.21726.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-02 06:39