Author: Denis Avetisyan

As open-source AI models proliferate, a growing concern is the collective environmental impact of their development and derivative works.

Tracking the cumulative carbon and water footprint of AI models, and accounting for the rebound effect, is vital for ensuring long-term sustainability in the open-source ecosystem.

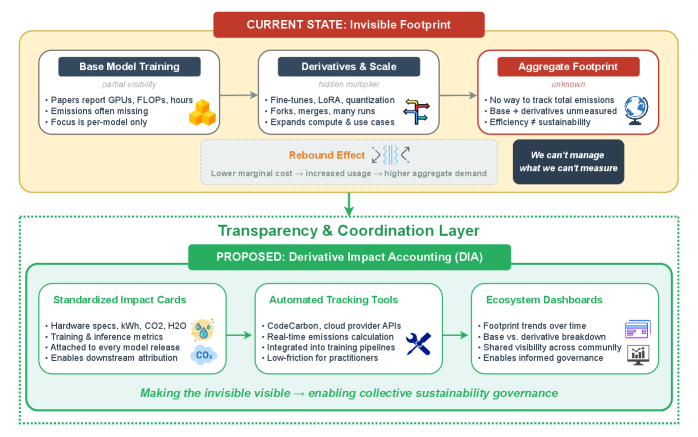

Despite gains in computational efficiency, the escalating scale of open-source artificial intelligence threatens to offset sustainability benefits through accelerated experimentation and deployment. This paper, ‘Sustainable Open-Source AI Requires Tracking the Cumulative Footprint of Derivatives’, argues that comprehensive accounting of energy and water usage across model lineages (including fine-tunes, adapters, and forks) is crucial for responsible AI development. The authors propose Data and Impact Accounting (DIA), a transparency layer designed to standardize reporting and aggregate impacts, making derivative costs visible and fostering ecosystem-level accountability. Will coordinated tracking of these cumulative footprints enable a truly sustainable trajectory for the rapidly expanding open-source AI landscape?
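The idea of aggregating impacts across a lineage can be pictured as a tree in which every derivative records its own footprint, and the cumulative footprint of any model is the sum over its whole subtree. This is a minimal sketch under that assumption, not DIA's actual schema: the `ModelNode` class, the `derive` and `cumulative_footprint` helpers, the model names, and all numbers are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ModelNode:
    """One model in a lineage: base model, fine-tune, adapter, or fork (hypothetical schema)."""
    name: str
    co2_kg: float   # emissions attributed to producing this model alone
    water_l: float  # water attributed to producing this model alone
    children: list[ModelNode] = field(default_factory=list)

    def derive(self, name: str, co2_kg: float, water_l: float) -> ModelNode:
        """Register a derivative of this model and return it."""
        child = ModelNode(name, co2_kg, water_l)
        self.children.append(child)
        return child


def cumulative_footprint(node: ModelNode) -> tuple[float, float]:
    """Return (kgCO2eq, litres) for this model plus every descendant."""
    co2, water = node.co2_kg, node.water_l
    for child in node.children:
        child_co2, child_water = cumulative_footprint(child)
        co2 += child_co2
        water += child_water
    return co2, water


# Hypothetical lineage: a base model, a fine-tune with its own adapter, and a fork.
base = ModelNode("base-model", co2_kg=1000.0, water_l=5000.0)
fine_tune = base.derive("fine-tune-a", co2_kg=20.0, water_l=80.0)
fine_tune.derive("adapter-a1", co2_kg=2.0, water_l=10.0)
base.derive("fork-b", co2_kg=50.0, water_l=200.0)

print(cumulative_footprint(base))       # (1072.0, 5290.0)
print(cumulative_footprint(fine_tune))  # (22.0, 90.0)
```

In this toy lineage, reporting only the base model's own 1,000 kg would hide the 72 kg contributed by its derivatives; the same recursion applies to any additive metric.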

The Unseen Costs of Exponential Growth

The burgeoning open-source artificial intelligence ecosystem, driven by increasingly accessible models such as Llama 3 and platforms like Hugging Face, carries a substantial environmental impact that the rapid pace of innovation often obscures. Democratizing access to AI also multiplies the computational resources, and therefore the energy, consumed in training and operating these models. Each new model iteration and each additional user adds to the demand on global infrastructure, and that demand can grow faster than efficiency gains reduce it. The result is not simply more servers to power but a shift in energy consumption patterns, with a carbon footprint that has so far been underestimated and that deserves urgent attention if the field is to develop sustainably.

Increasingly powerful AI models demand a large and growing share of resources, notably energy and water. Even as models become more efficient, training and serving large systems requires immense computational power, which is driving a surge in global data center electricity consumption. Current trends point to roughly 15% annual growth in this demand, about four times the growth rate of overall electricity consumption, placing significant strain on power grids and on the freshwater supplies used for cooling. This escalating resource intensity is a critical challenge for the sustainability of AI development and calls for proactive strategies to mitigate its environmental impact.
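To make these rates concrete: a quantity growing at a constant annual rate r doubles every ln 2 / ln(1 + r) years. The sketch below takes the 15% figure above and reads "four times" as a ratio of annual rates, so overall electricity demand is assumed to grow at about 3.75% per year; both inputs are assumptions for illustration.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years for a quantity growing at a constant annual rate to double."""
    if annual_growth <= 0:
        raise ValueError("growth rate must be positive")
    return math.log(2) / math.log1p(annual_growth)


data_center_growth = 0.15                # ~15% per year, as cited above
overall_growth = data_center_growth / 4  # assumption: "four times" as a ratio of rates

print(f"data centers:    doubles every {doubling_time_years(data_center_growth):.1f} years")  # 5.0
print(f"all electricity: doubles every {doubling_time_years(overall_growth):.1f} years")      # 18.8
```

At these rates, data center demand would double roughly every five years, while total electricity demand would take nearly two decades to do the same.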

The unchecked expansion of artificial intelligence carries significant environmental risks that threaten to undermine its long-term viability. Failing to address the escalating energy and water demands of increasingly complex AI models could accelerate climate change, creating a paradoxical situation where a technology intended to solve global challenges inadvertently exacerbates them. As models grow in size and computational requirements, so too does their carbon footprint, potentially offsetting any positive impact they might offer. This necessitates a proactive shift towards sustainable AI development, prioritizing energy efficiency, responsible resource management, and innovative cooling technologies to ensure that progress doesn’t come at the expense of a habitable planet.

Data centers, the physical infrastructure behind the AI boom, are seeing rapid growth in energy demand, a trend recently underscored by the International Energy Agency. Current estimates place the electricity consumption of US AI servers between 53 and 76 terawatt-hours in 2024 alone, comparable to the annual consumption of millions of homes. Projections indicate that this demand could reach 165 to 326 terawatt-hours by 2028. Keeping this growth sustainable will require improving data center energy efficiency, adopting renewable energy sources, and developing more computationally efficient AI models, so that the field does not exacerbate global energy challenges.
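These figures imply a steep compound growth rate, and the "millions of homes" comparison can be checked against a rough household benchmark. The sketch assumes an average US household uses about 10,500 kWh of electricity per year; that constant is an approximation introduced here, not a number from the paper.

```python
KWH_PER_TWH = 1e9
KWH_PER_HOME_PER_YEAR = 10_500  # assumption: approximate average US household use


def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


def homes_equivalent(twh: float) -> float:
    """Number of average households whose annual use equals `twh` terawatt-hours."""
    return twh * KWH_PER_TWH / KWH_PER_HOME_PER_YEAR


# US AI-server electricity estimates quoted above, in TWh.
low_2024, high_2024 = 53.0, 76.0
low_2028, high_2028 = 165.0, 326.0

for label, start, end in [("low", low_2024, low_2028), ("high", high_2024, high_2028)]:
    growth = implied_cagr(start, end, years=4)
    homes_millions = homes_equivalent(start) / 1e6
    print(f"{label}: ~{growth:.0%} per year; 2024 use ~ {homes_millions:.1f} million homes")
```

With these inputs the implied growth is roughly 33% to 44% per year, and the 2024 range corresponds to about 5 to 7 million households.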

Accounting for the Whole System: A Framework for Impact Assessment

Data and Impact Accounting (DIA) provides a structured methodology for evaluating the environmental effects of AI models across their full lifecycle, from development and training through deployment to eventual decommissioning. The framework aims to mitigate the “tragedy of the commons”, in which a shared resource is depleted because each user acts in individual self-interest, by establishing a common language and standardized metrics for reporting resource consumption. Its core principle is to coordinate responsible resource use within the AI community without restricting access or innovation: environmental costs are quantified transparently so that they can be factored into decisions about developing and deploying models, promoting sustainability alongside continued progress in artificial intelligence.
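A common language and standardized metrics imply, at minimum, converting heterogeneous reports into shared units before aggregating them by lifecycle stage. The following is a minimal sketch under that assumption; the canonical units (kWh, kgCO2eq, litres), the metric keys, and the sample reports are illustrative choices, not DIA's published format.

```python
from collections import defaultdict

# Unit label -> (canonical metric, multiplier). Keys and canonical units are illustrative.
TO_CANONICAL = {
    "Wh": ("energy_kwh", 1e-3),
    "kWh": ("energy_kwh", 1.0),
    "MWh": ("energy_kwh", 1e3),
    "gCO2eq": ("co2_kg", 1e-3),
    "kgCO2eq": ("co2_kg", 1.0),
    "tCO2eq": ("co2_kg", 1e3),
    "L": ("water_l", 1.0),
    "m3": ("water_l", 1e3),
}


def aggregate_by_stage(reports: list[tuple[str, float, str]]) -> dict[str, dict[str, float]]:
    """Convert (stage, value, unit) reports to canonical units and total them per stage."""
    totals = defaultdict(lambda: defaultdict(float))
    for stage, value, unit in reports:
        if unit not in TO_CANONICAL:
            raise ValueError(f"unsupported unit: {unit!r}")
        metric, factor = TO_CANONICAL[unit]
        totals[stage][metric] += value * factor
    return {stage: dict(metrics) for stage, metrics in totals.items()}


# Hypothetical reports from different contributors, each in its own units.
reports = [
    ("training", 1.2, "MWh"),
    ("training", 0.45, "tCO2eq"),
    ("fine-tuning", 300.0, "kWh"),
    ("fine-tuning", 120.0, "kgCO2eq"),
    ("inference", 2.5, "m3"),
]
summary = aggregate_by_stage(reports)
print(summary)
```

Rejecting unknown units, rather than guessing a conversion, keeps silently mis-scaled numbers out of the totals.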

Measuring the carbon emissions of AI model training and deployment relies on tools such as CodeCarbon, ML CO2 Impact, and Carbontracker. CodeCarbon and Carbontracker profile hardware utilization, specifically the energy drawn by CPUs, GPUs, and memory, while a workload runs. CodeCarbon integrates with training scripts and combines these measurements with publicly available carbon intensity data for different geographic regions to estimate emissions. Carbontracker tracks resource consumption during model training in detail, helping developers identify and address inefficiencies. ML CO2 Impact takes a different approach: it is a calculator that estimates emissions from the hardware type, runtime, and cloud provider or region supplied by the user. Results are typically reported in kilograms or tonnes of carbon dioxide equivalent (kgCO2eq or tCO2eq), a standardized metric for comparison and accountability.
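The core arithmetic behind profiler-based estimates is energy (average power times runtime, optionally scaled by the facility's power usage effectiveness, PUE) multiplied by the grid's carbon intensity. The function below is a simplified sketch of that calculation, not the internals of CodeCarbon or Carbontracker; the power draws, runtime, PUE, and carbon intensity are hypothetical inputs.

```python
def estimate_emissions(
    avg_power_w: dict[str, float],
    hours: float,
    carbon_intensity_kg_per_kwh: float,
    pue: float = 1.0,
) -> tuple[float, float]:
    """Return (energy_kwh, co2_kg) for a run with the given average power per component."""
    if hours < 0 or pue < 1.0:
        raise ValueError("hours must be non-negative and PUE at least 1.0")
    it_energy_kwh = sum(avg_power_w.values()) * hours / 1000.0  # W * h -> kWh
    facility_energy_kwh = it_energy_kwh * pue                   # add cooling and overhead
    return facility_energy_kwh, facility_energy_kwh * carbon_intensity_kg_per_kwh


# Hypothetical 24-hour fine-tuning run on four GPUs.
energy_kwh, co2_kg = estimate_emissions(
    {"gpu": 4 * 300.0, "cpu": 150.0, "ram": 50.0},
    hours=24.0,
    carbon_intensity_kg_per_kwh=0.4,
    pue=1.2,
)
print(f"{energy_kwh:.2f} kWh, {co2_kg:.2f} kgCO2eq")  # 40.32 kWh, 16.13 kgCO2eq
```

Running the same job on a cleaner grid changes only the last factor, which is why these tools look up regional carbon intensity.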

Accurate assessment of an AI model's environmental impact requires looking beyond direct emissions measurements. System effects such as the rebound effect and the Jevons Paradox can substantially change the net outcome. A rebound effect occurs when efficiency gains lead to increased consumption that partially or fully offsets the expected reductions. The Jevons Paradox is the extreme case, in which improved resource efficiency increases total resource consumption. Ignoring these systemic impacts gives an inaccurate picture of a model's sustainability: a seemingly efficient model may cause greater environmental harm overall if it encourages more activity or consumption.
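A common way to quantify rebound compares the savings an efficiency gain should deliver at constant usage with the savings actually observed once usage responds. The sketch below uses that definition with energy normalized to a baseline of 1.0; a rebound above 100% is the "backfire" case described by the Jevons Paradox. The function names and scenarios are illustrative.

```python
def rebound(efficiency_gain: float, usage_multiplier: float) -> float:
    """Fraction of expected savings eroded by increased use.

    efficiency_gain: fractional cut in energy per unit of service (0.5 = halved).
    usage_multiplier: total use after the gain relative to before (1.5 = 50% more).
    """
    if not 0 < efficiency_gain < 1:
        raise ValueError("efficiency_gain must be between 0 and 1")
    expected_savings = efficiency_gain                     # savings if use stayed constant
    new_energy = (1 - efficiency_gain) * usage_multiplier  # baseline energy is 1.0
    actual_savings = 1 - new_energy
    return 1 - actual_savings / expected_savings


def classify(r: float) -> str:
    if r <= 0:
        return "no rebound"
    if r < 1:
        return "partial rebound"
    if r == 1:
        return "full rebound"
    return "backfire (Jevons paradox)"


# Energy per query halves; compare three hypothetical usage responses.
for usage in (1.0, 1.5, 2.5):
    r = rebound(0.5, usage)
    print(f"usage x{usage}: rebound {r:.0%} -> {classify(r)}")
```

In the third scenario, halving the energy per query still raises total energy use by 25% relative to the baseline.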

Because increased consumption can cancel out the benefits of efficiency gains, AI model development needs a comprehensive accounting system. Improvements in algorithmic or hardware efficiency can reduce the carbon footprint of training or inference, but those gains may be offset by greater model usage or by the development of even larger models. Pretraining alone is already costly: the pretraining of Meta's Llama 3, covering both the 8B and 70B parameter models, produced a combined 2,290 metric tons of carbon dioxide equivalent (tCO2eq). That figure excludes the fine-tunes, adapters, and inference built on top of these models, which is why tracking must cover not only direct emissions but also the broader systemic effects of AI adoption.
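The 2,290 tCO2eq figure can be put on a per-unit basis. Meta's Llama 3 model card reports about 7.7 million H100-80GB GPU-hours of pretraining across the two models and a 700 W per-GPU power figure; the sketch takes those reported numbers as inputs and treats 700 W as the draw for every GPU-hour, a simplifying assumption that the model card's own methodology may not share. The 1,000 GPU-hour fine-tune is hypothetical.

```python
# Inputs as reported in Meta's Llama 3 model card (8B + 70B pretraining combined).
GPU_HOURS = 7.7e6      # cumulative H100-80GB GPU-hours
POWER_W = 700.0        # per-GPU power figure used in the estimate
TOTAL_TCO2EQ = 2290.0

energy_kwh = GPU_HOURS * POWER_W / 1000.0
kg_per_gpu_hour = TOTAL_TCO2EQ * 1000.0 / GPU_HOURS
implied_kg_per_kwh = TOTAL_TCO2EQ * 1000.0 / energy_kwh  # assumes 700 W for every GPU-hour

print(f"energy:           {energy_kwh / 1e6:.2f} GWh")
print(f"per GPU-hour:     {kg_per_gpu_hour:.3f} kgCO2eq")
print(f"implied grid mix: {implied_kg_per_kwh:.3f} kgCO2eq/kWh")

# A hypothetical 1,000 GPU-hour fine-tune at the same rate.
fine_tune_kg = 1000 * kg_per_gpu_hour
print(f"1,000 GPU-hour fine-tune: ~{fine_tune_kg:.0f} kgCO2eq")
```

A single modest fine-tune is small next to pretraining at this rate, so the number of derivatives matters as much as the size of any one of them.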

The Art of Subtraction: Techniques for Sustainable AI Design

Green Software Engineering (GSE) is a systematic approach to application development focused on reducing the environmental impact of software throughout its lifecycle. This encompasses principles such as minimizing energy consumption, reducing hardware requirements, and optimizing resource utilization. Applied to Artificial Intelligence (AI) models, GSE involves evaluating the energy efficiency of training processes, deploying models on energy-efficient infrastructure, and employing techniques to reduce model size and complexity without significant performance degradation. Key practices include workload management, efficient data storage, and selecting appropriate algorithms and programming languages. GSE aims to integrate sustainability considerations into all stages of software development, from initial design to ongoing maintenance and eventual decommissioning.
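One concrete form of workload management is carbon-aware scheduling: shifting a flexible job, such as a fine-tuning run, to the hours when grid carbon intensity is forecast to be lowest. This is one illustrative GSE practice rather than something the paper prescribes; the forecast values and the `best_start_hour` function are hypothetical.

```python
def best_start_hour(forecast: list[float], job_hours: int) -> tuple[int, float]:
    """Return (start index, mean intensity) of the lowest-carbon contiguous window."""
    if not 0 < job_hours <= len(forecast):
        raise ValueError("job must fit inside the forecast horizon")
    best_start, best_mean = 0, float("inf")
    for start in range(len(forecast) - job_hours + 1):
        window_mean = sum(forecast[start:start + job_hours]) / job_hours
        if window_mean < best_mean:
            best_start, best_mean = start, window_mean
    return best_start, best_mean


# Hypothetical hourly forecast of grid carbon intensity, in gCO2eq/kWh.
forecast = [420, 410, 390, 300, 220, 180, 190, 260, 350, 400]
start, mean = best_start_hour(forecast, job_hours=3)
now_mean = sum(forecast[:3]) / 3
print(f"start at hour {start}: mean {mean:.0f} gCO2eq/kWh vs {now_mean:.0f} if run now")
```

A sliding window that updates a running sum would avoid re-summing each window, but for a day-ahead forecast the simple loop is clearer.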

Model compression techniques address the substantial computational demands of artificial intelligence by reducing model size and complexity. Pruning identifies and removes unnecessary weights from a neural network, decreasing both memory footprint and processing requirements. Distillation transfers knowledge from a large, complex “teacher” model to a smaller, more efficient “student” model that retains much of the teacher's performance with fewer parameters. Mixed-precision training performs most computations in lower-precision formats, such as 16-bit instead of 32-bit floating point, while keeping 32-bit master weights for updates; this reduces memory bandwidth and accelerates computation, usually without significant accuracy loss. Together, these methods lower the energy and hardware costs of training and inference, enable deployment on resource-constrained devices, and reduce the overall environmental impact of AI systems.
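Of these techniques, pruning is the easiest to show in a few lines. The sketch implements unstructured magnitude pruning on a plain list of weights, a simplification of how frameworks prune tensors; the `magnitude_prune` name and the layer values are hypothetical.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of weights (unstructured pruning)."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Sort indices by absolute value; the first k are the least important.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, -0.2, 0.9]
print(magnitude_prune(layer, sparsity=0.5))
# [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, 0.0, 0.9]
```

Zeroed weights save energy only when the runtime exploits the sparsity, for example with sparse kernels or structured pruning that removes whole channels or attention heads; otherwise the dense computation still runs.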

Quantization complements these methods. Like pruning and distillation, it enables the deployment of smaller, computationally cheaper models without substantial performance degradation, reducing energy consumption, particularly during inference. Quantization lowers the numerical precision of a model's parameters: storing weights as 8-bit integers instead of the standard 32-bit floating-point format cuts their memory footprint by roughly a factor of four, with corresponding savings in storage and bandwidth. This reduced resource demand makes deployment on edge devices more practical and lowers the overall environmental impact of AI systems.
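The roughly fourfold saving follows from storage size: 4 bytes per 32-bit float versus 1 byte per 8-bit integer, plus a small overhead for the scale factor. Below is a minimal sketch of symmetric per-tensor int8 quantization, one common scheme among several (per-channel and asymmetric variants also exist); the function names and weight values are hypothetical.

```python
from array import array


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: q = round(x / scale), scale = max|x| / 127."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float values."""
    return [qi * scale for qi in q]


weights = [0.12, -0.5, 0.33, 0.0, 0.49, -0.07]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = array("f", weights).itemsize * len(weights)  # 4 bytes per value
int8_bytes = array("b", q).itemsize * len(q)              # 1 byte per value
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"{fp32_bytes} B -> {int8_bytes} B, max error {max_error:.4f}")
```

Rounding bounds each weight's error by half the scale, which is why quantization usually costs little accuracy when weight ranges are well behaved.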

Widespread adoption of model optimization techniques within the open-source AI ecosystem is crucial for scalability and sustainability. Frameworks and pre-trained models that incorporate pruning, quantization, and knowledge distillation broaden access and reduce computational demands. For example, DistilBERT, a distilled version of BERT, retains about 97% of BERT's language understanding performance on the GLUE benchmark while being roughly 40% smaller and 60% faster at inference, which allows deployment on lower-resource hardware and lowers energy consumption across many applications. Open-source distribution of such optimized models and tools supports collaborative development and speeds the adoption of efficient AI practices, maximizing their environmental benefits.
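Distillation trains the student to match the teacher's softened output distribution. The sketch shows the classic soft-target loss from the knowledge distillation formulation of Hinton et al.: a temperature-scaled KL divergence (multiplied by T squared to keep gradient magnitudes comparable) blended with ordinary cross-entropy on the true label. DistilBERT's actual objective combined a soft-target term of this kind with a masked-language-modeling loss and a cosine embedding loss; the temperature, weighting, and logits here are illustrative.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: list[float],
    teacher_logits: list[float],
    label: int,
    temperature: float = 2.0,
    alpha: float = 0.5,
) -> float:
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(student, label)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard_ce = -math.log(softmax(student_logits)[label])
    return alpha * temperature**2 * kl + (1 - alpha) * hard_ce


teacher = [2.0, 1.0, 0.1]
student = [1.5, 1.2, 0.3]
print(f"loss: {distillation_loss(student, teacher, label=0):.4f}")
```

When the student's logits equal the teacher's, the KL term vanishes and only the weighted hard-label cross-entropy remains.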

Beyond Algorithms: Governing a Sustainable AI Future

The burgeoning open-source AI ecosystem, while innovative, lacks established governance structures, which calls for systematic analysis using the Institutional Analysis and Development (IAD) framework. Rather than evaluating technical capabilities alone, this framework focuses on the rules, norms, and organizations that shape AI development and deployment. By examining the existing landscape, including developer communities, funding mechanisms, and data access protocols, researchers can pinpoint vulnerabilities and opportunities for intervention. Such analysis supports the intentional design of institutions that foster sustainability by encouraging responsible data sourcing, energy-efficient algorithms, and equitable access to AI technologies. A robust institutional framework is not about stifling innovation but about channeling it toward outcomes that benefit both humanity and the environment, ensuring the long-term viability of this rapidly evolving field.

A rigorous institutional analysis shows that sustainable AI development is hampered not only by technological limitations but also by deeply embedded systemic barriers within the open-source ecosystem. These obstacles range from funding models that prioritize rapid innovation over long-term environmental impact to the lack of standardized metrics for assessing the energy consumption and carbon footprint of AI models. Applying this analytical framework allows researchers and policymakers to pinpoint such challenges, for example the concentration of computational resources in the hands of a few large corporations, and to design targeted interventions. Addressing these systemic issues requires greater transparency in data sourcing and model training, incentives for energy-efficient algorithms, and clear governance structures that treat sustainability as seriously as performance. A proactive, institutionally informed approach is essential to unlock the potential of AI while mitigating its environmental consequences.

The widespread adoption of sustainable artificial intelligence hinges on fostering a culture of collaboration, transparency, and accountability within the AI community. Current development often occurs within siloed organizations, hindering the sharing of best practices and limiting the potential for collective problem-solving regarding environmental impact and resource consumption. Increased transparency in data sourcing, model training, and energy usage allows for critical evaluation and the identification of inefficiencies. Crucially, establishing clear lines of accountability – defining who is responsible for the ethical and environmental consequences of AI systems – incentivizes the development of genuinely sustainable solutions and discourages practices that prioritize short-term gains over long-term planetary health. Without these foundational elements, the potential for AI to contribute to a more sustainable future remains largely unrealized, as innovation may occur without regard for its broader implications.

Effective governance of artificial intelligence necessitates a forward-looking and comprehensive strategy, moving beyond reactive measures to anticipate and address potential societal and environmental impacts. This proactive approach demands consideration of AI’s entire lifecycle – from data sourcing and model training to deployment and eventual decommissioning – to minimize harm and maximize benefits. A truly holistic framework acknowledges that AI is not merely a technological challenge, but a socio-technical system interwoven with existing power structures, ethical considerations, and planetary boundaries. Consequently, successful governance requires interdisciplinary collaboration, encompassing technical experts, policymakers, ethicists, and community stakeholders, all working in concert to ensure AI serves as a catalyst for sustainable development and equitable outcomes, rather than exacerbating existing inequalities or contributing to ecological degradation.

The pursuit of efficiency within open-source AI, as the paper details, frequently overlooks the systemic consequences of derivative works. Each model spawns a lineage, and with it a compounding environmental impact, a dynamic the study rightly frames as a potential tragedy of the commons. This echoes a line often attributed to Brian Kernighan: “Complexity adds cost. Simplicity adds value.” Although the remark is not about environmental cost, the sentiment applies: each layer of complexity in model derivation, left out of impact accounting, adds to the overall footprint. Apparent stability in this context is only a temporary reprieve before the cumulative effects become visible. The paper argues for acknowledging that such ecosystems are defined by growth rather than control.

What Lies Ahead?

The pursuit of efficiency in artificial intelligence feels increasingly like rearranging deck chairs. Each optimization, each algorithmic refinement, offers a fleeting reduction in resource demand that is swiftly absorbed by the escalating scale of deployment. This work highlights a critical and often overlooked point: the full cost is not confined to the initial training but extends across the long tail of derivative models, each inheriting and adding to the environmental impact of its predecessors. The open-source ethos, while commendable, accelerates this process, creating a distributed tragedy of the commons in which individual contributions, however well-intentioned, collectively overwhelm any localized gains.

The call for data and impact accounting is not merely a plea for better metrics; it is an acknowledgement that complete control is an illusion. Attempts to legislate sustainability will likely founder on the shoals of innovation, driving development underground or simply shifting the burden elsewhere. Instead, the focus must turn toward cultivating awareness: a systemic understanding of how each model contributes to a larger network that resists central management. Expect to see the rise of “shadow footprints”, unintended consequences that no single actor can fully anticipate or mitigate.

Perhaps the most unsettling implication is that documentation written after the fact does little on its own. No one writes prophecies after they come true. The field needs to move beyond simply measuring impact toward tools that help developers anticipate, and perhaps even accept, the inevitable failures embedded within these complex, evolving systems. The question is not only how to build sustainable AI, but also how to live with its consequences.

Original article: https://arxiv.org/pdf/2601.21632.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-01 07:22