Author: Denis Avetisyan

A new approach harnesses the power of artificial intelligence to predict complex fluid dynamics with unprecedented accuracy and efficiency.

This paper introduces LLM4Fluid, a framework leveraging large language models and physics-informed techniques for generalizable spatio-temporal prediction of fluid flows, exceeding the performance of existing methods.

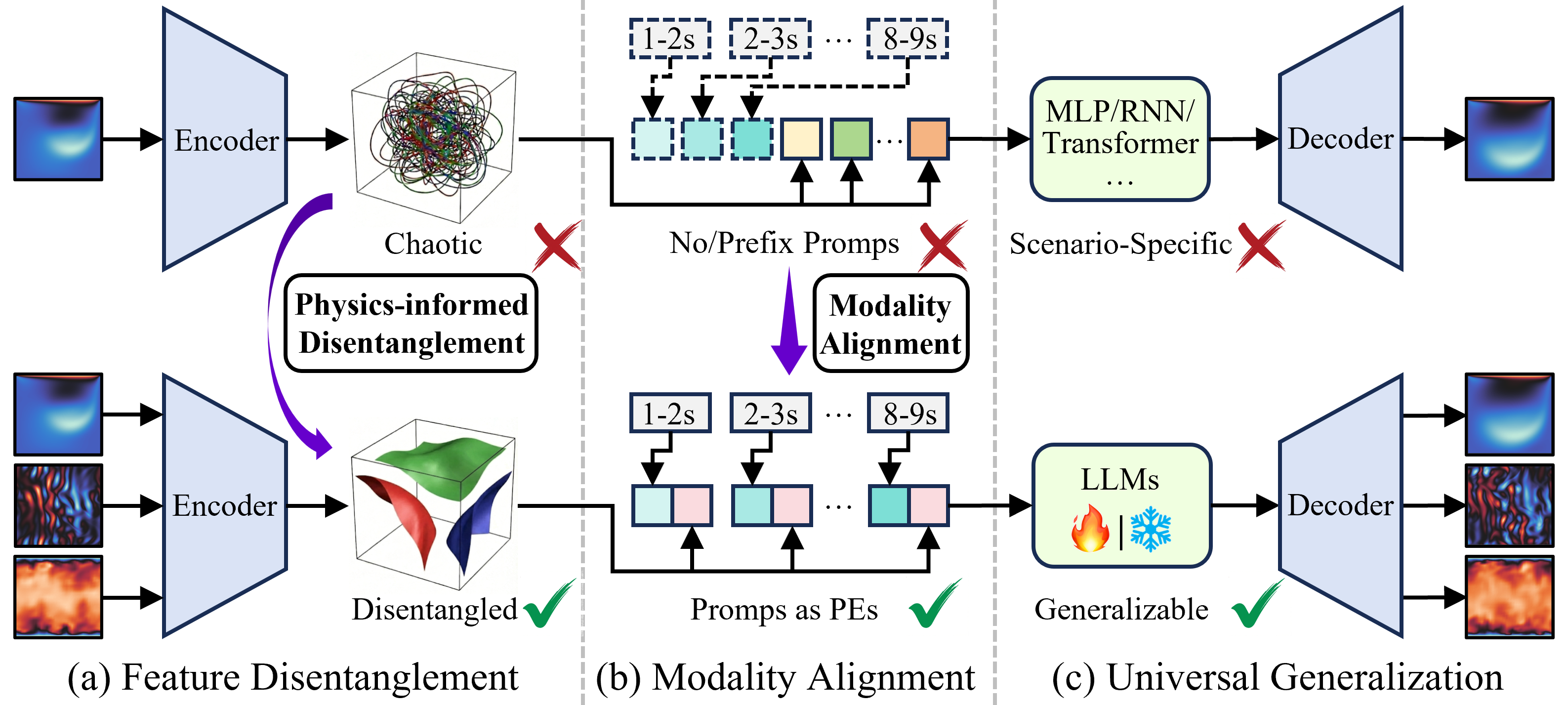

Predicting fluid dynamics remains challenging due to the difficulty of generalizing across unseen flow conditions and the computational cost of retraining models for new scenarios. This limitation motivates the development of ‘LLM4Fluid: Large Language Models as Generalizable Neural Solvers for Fluid Dynamics’, a novel framework that leverages pretrained large language models for accurate and robust spatio-temporal prediction. By compressing flow fields into a disentangled latent space and aligning modalities for effective temporal processing, LLM4Fluid achieves state-of-the-art performance without retraining, demonstrating powerful zero-shot and in-context learning capabilities. Could this approach unlock a new paradigm for physics-informed machine learning, enabling truly generalizable solutions for complex fluid simulations and beyond?

Turbulence and the Limits of Computation

Computational fluid dynamics, while a cornerstone of modern engineering, faces a persistent challenge in accurately simulating turbulent flows. The inherent chaotic nature of turbulence creates a cascade of eddies spanning a vast range of scales – from the macroscopic down to the microscopic – demanding computational grids and processing power that quickly become prohibitive. Existing methods often rely on approximations and simplifications, such as Reynolds-Averaged Navier-Stokes (RANS) equations or Large Eddy Simulation (LES), to reduce the computational burden. However, these approaches inevitably introduce inaccuracies, particularly when resolving fine-scale turbulent structures critical for precise flow prediction. Consequently, even relatively simple turbulent flows can require supercomputers and significant simulation time, hindering real-time applications and detailed analysis of complex fluid phenomena like those found in atmospheric modeling or advanced aerospace designs.
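To put a rough number on "prohibitive", the classical Kolmogorov estimate says the ratio of the largest to the smallest eddy size grows like Re^(3/4), where Re is the Reynolds number. A fully resolved three-dimensional simulation therefore needs on the order of Re^(9/4) grid points. The sketch below only evaluates that scaling law; the function name is illustrative, and the result is an order-of-magnitude guide, not a mesh-sizing rule.

```python
import math


def dns_grid_points(reynolds: float) -> float:
    """Order-of-magnitude grid-point count for a fully resolved 3-D simulation.

    Uses the classical estimate L / eta ~ Re^(3/4) per spatial direction.
    """
    scale_ratio = reynolds ** 0.75  # largest-to-smallest eddy size, one direction
    return scale_ratio ** 3         # three directions -> Re^(9/4)


for re_number in (1e3, 1e4, 1e6):
    print(f"Re = {re_number:.0e}: ~{dns_grid_points(re_number):.1e} grid points")
```

Because the exponent is above 2, a tenfold increase in Reynolds number raises the grid-point count by more than a factor of 100, before the smaller time steps are even counted.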

Simulating rapidly changing fluid phenomena, such as the catastrophic release of water in dam-break scenarios or the intricate energy cascade within Kolmogorov flow, presents persistent challenges for computational fluid dynamics. Dam-break flows involve highly complex free-surface interactions, shock waves, and turbulent mixing, demanding extremely high-resolution simulations to accurately capture the dynamic progression of the event. Similarly, Kolmogorov flow, characterized by energy transferring from large eddies to progressively smaller scales until it is dissipated by viscosity, requires resolving an enormous range of length scales, a task that quickly overwhelms even the most powerful supercomputers. The difficulty arises because these transient events are inherently multi-scale and require capturing both the large, dominant structures and the small-scale turbulence that governs energy dissipation; current models often rely on approximations that compromise accuracy when dealing with these dynamic and complex systems.

The inability of current computational fluid dynamics to fully capture turbulent flow presents a tangible obstacle to advancement across diverse scientific and engineering disciplines. Precise weather forecasting, reliant on accurately modeling atmospheric currents, suffers from limitations in predicting localized events and long-term trends. Similarly, aerodynamic design – crucial for everything from fuel-efficient aircraft to high-performance vehicles – is constrained by the difficulty of simulating airflow with sufficient fidelity, hindering optimization for drag reduction and lift enhancement. Beyond these applications, industries such as biomedical engineering, which depend on understanding blood flow, and civil engineering, concerned with predicting flood patterns and structural loads, all face comparable challenges stemming from the inherent complexities of fluid behavior and the computational expense of resolving them. Ultimately, overcoming these hurdles is paramount to unlocking innovations and achieving more reliable predictions in any field governed by fluid dynamics.

Distilling Complexity: Reduced-Order Modeling

Disentangled Reduced-Order Modeling (DROM) addresses the challenge of representing complex, high-dimensional fluid flow data with significantly fewer variables. Traditional dimensionality reduction techniques often result in entangled latent spaces where individual physical phenomena are mixed, hindering interpretability and predictive accuracy. DROM, however, aims to map the high-dimensional flow field onto a lower-dimensional “latent space” while preserving essential physical information. This is accomplished by identifying and isolating independent modes or features within the flow, allowing each mode to be represented by a single variable in the latent space. By decoupling these modes, DROM enables efficient data compression, reduced computational cost for simulations and analysis, and improved understanding of the underlying physics governing the flow.

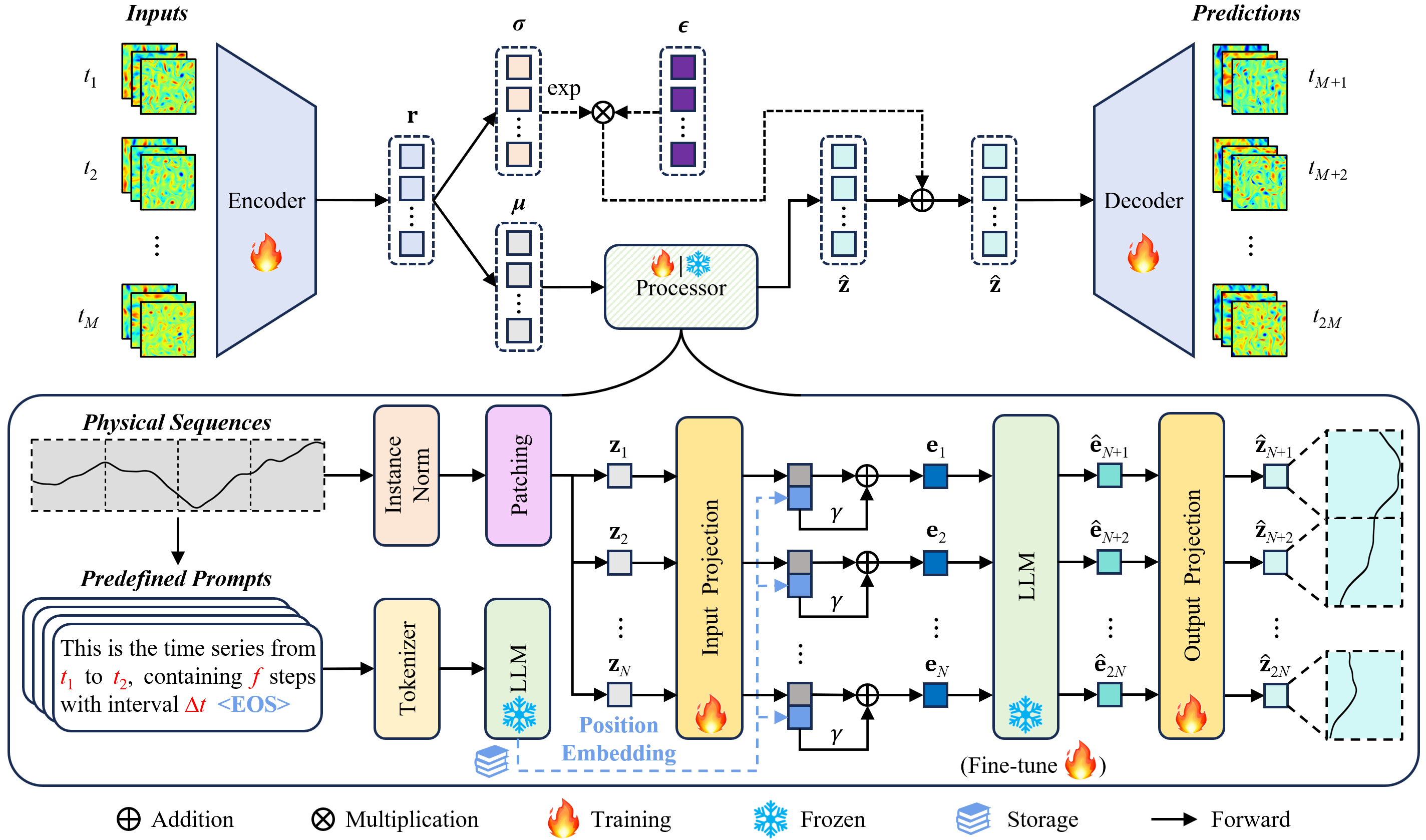

Autoencoders are neural network architectures utilized for dimensionality reduction and feature learning in Disentangled Reduced-Order Modeling. These networks consist of an encoder and a decoder; the encoder maps the high-dimensional flow data into a lower-dimensional latent space, while the decoder reconstructs the original data from this compressed representation. The network is trained to minimize the reconstruction error, forcing it to learn an efficient encoding that captures the most salient features of the flow field. This learned latent space provides a compressed representation of the flow, significantly reducing computational cost while retaining essential information for downstream analysis or prediction.
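The encode, decode, and minimize-reconstruction-error loop can be shown with a deliberately tiny stand-in. The sketch below is a tied-weight linear autoencoder with a one-dimensional latent space, trained by plain gradient descent on synthetic three-component "snapshots". It is an illustrative assumption, not the network used in LLM4Fluid; all names and the toy data are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def encode(w, x):
    """Encoder: project a snapshot onto the weight vector (1-D latent)."""
    return dot(w, x)


def decode(w, z):
    """Decoder: rebuild a snapshot from the latent value (tied weights)."""
    return [wi * z for wi in w]


def reconstruction_loss(w, snapshots):
    """Mean squared reconstruction error over all snapshots."""
    total = 0.0
    for x in snapshots:
        x_hat = decode(w, encode(w, x))
        total += sum((xi - hi) ** 2 for xi, hi in zip(x, x_hat))
    return total / len(snapshots)


def train(w, snapshots, lr=0.1, steps=500):
    """Gradient descent on the reconstruction loss."""
    w = list(w)
    n = len(snapshots)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x in snapshots:
            z = encode(w, x)
            r = [xi - wi * z for xi, wi in zip(x, w)]  # reconstruction residual
            rw = dot(r, w)
            for k in range(len(w)):
                # d/dw_k of ||x - w (w.x)||^2
                grad[k] += -2.0 * (r[k] * z + rw * x[k]) / n
        w = [wk - lr * gk for wk, gk in zip(w, grad)]
    return w


# Toy data: every snapshot is a scaled copy of one spatial pattern.
direction = [0.6, 0.8, 0.0]
snapshots = [[math.sin(0.3 * t) * d for d in direction] for t in range(20)]

w0 = [1.0, 0.0, 0.0]
initial_loss = reconstruction_loss(w0, snapshots)
w = train(w0, snapshots)
final_loss = reconstruction_loss(w, snapshots)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.2e}")
```

Because the toy data lies along a single direction, one latent variable is enough to reconstruct it; real flow fields need nonlinear encoders and more latent dimensions.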

Physics-Informed Disentanglement within Disentangled Reduced-Order Modeling (DROM) enforces a specific organization of the latent space achieved through autoencoders. This is accomplished by incorporating physical constraints – derived from governing equations or known flow characteristics – into the autoencoder’s training process. Rather than allowing the autoencoder to learn an arbitrary mapping to a lower-dimensional space, these constraints guide the learning process to ensure that individual dimensions, or combinations of dimensions, within the latent space correspond to physically interpretable quantities, such as distinct flow modes, characteristic frequencies, or spatial structures. This structured latent space facilitates downstream tasks like prediction, control, and analysis by allowing manipulation and interpretation of the flow based on these physically meaningful variables.

Predictive Power Through Temporal Awareness

LLM4Fluid employs a novel framework centered around an LLM-based Temporal Processor designed to forecast the progression of fluid flow fields. This processor operates within a learned Latent Space, a reduced-dimensional representation of the flow physics, allowing for efficient temporal modeling. The system doesn’t directly predict physical quantities; instead, it predicts how the latent representation of the flow will evolve over time. This predicted latent representation is then decoded to reconstruct the corresponding flow field at future time steps. The use of an LLM for temporal processing enables the capture of complex temporal dependencies inherent in fluid dynamics, moving beyond the limitations of traditional methods that often rely on simpler, less expressive models for time evolution.
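The encode, predict-in-latent-space, decode loop described above can be sketched as follows. Everything here is a hypothetical stand-in: the encoder simply averages neighbouring values, the decoder repeats them, and a two-point linear extrapolation takes the place of the LLM-based Temporal Processor. Only the control flow (autoregressive rollout over latent vectors) is meant to mirror the description.

```python
from typing import Callable, List

Field = List[float]
Latent = List[float]


def encode(field: Field) -> Latent:
    """Stand-in encoder: average adjacent pairs (halves the dimension)."""
    return [(field[i] + field[i + 1]) / 2.0 for i in range(0, len(field), 2)]


def decode(z: Latent) -> Field:
    """Stand-in decoder: repeat each latent value twice."""
    out: Field = []
    for value in z:
        out.extend([value, value])
    return out


def linear_extrapolation(history: List[Latent]) -> Latent:
    """Placeholder for the temporal processor: z_next = 2 * z_last - z_prev."""
    last, prev = history[-1], history[-2]
    return [2.0 * a - b for a, b in zip(last, prev)]


def rollout(past_fields: List[Field],
            processor: Callable[[List[Latent]], Latent],
            steps: int) -> List[Field]:
    """Predict `steps` future fields by evolving only the latent state."""
    history = [encode(f) for f in past_fields]
    predictions: List[Field] = []
    for _ in range(steps):
        z_next = processor(history)
        history.append(z_next)              # feed the prediction back in
        predictions.append(decode(z_next))  # map back to physical space
    return predictions


# Toy sequence whose values grow linearly in time.
past = [[float(t), float(t), 2.0 * t, 2.0 * t] for t in range(1, 4)]
future = rollout(past, linear_extrapolation, steps=2)
print(future)
```

Keeping the processor behind a plain callable makes the point of the design visible: the temporal model never touches the full flow field, only the compact latent history.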

Modality Alignment within LLM4Fluid addresses the inherent disconnect between the Large Language Model’s (LLM) processing of abstract semantic information and the physical characteristics of fluid flow data. This alignment is achieved by establishing a correspondence between the LLM’s latent space representation and the learned features of the flow field. Specifically, the framework maps the LLM’s token embeddings to the fluid dynamics’ physical variables (velocity components u, v, and vorticity ω), enabling the LLM to ‘understand’ the physical meaning of the data it is processing. This bidirectional mapping facilitates accurate forecasting by allowing the LLM to leverage its reasoning capabilities on the encoded physical information, translating semantic understanding into precise predictions of flow behavior.

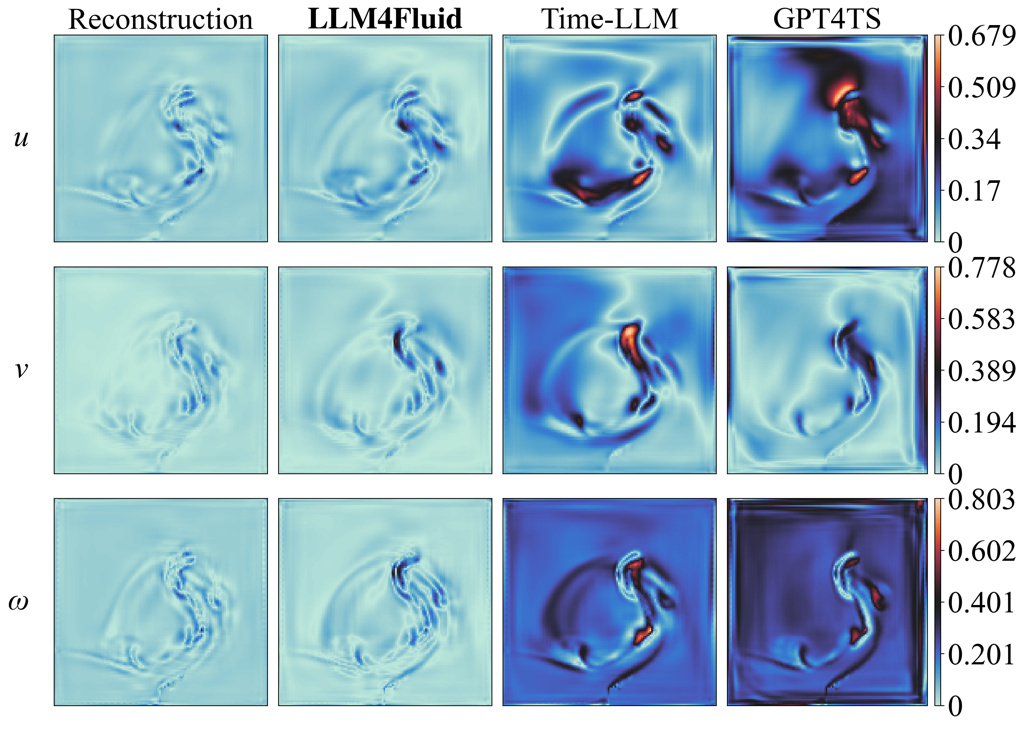

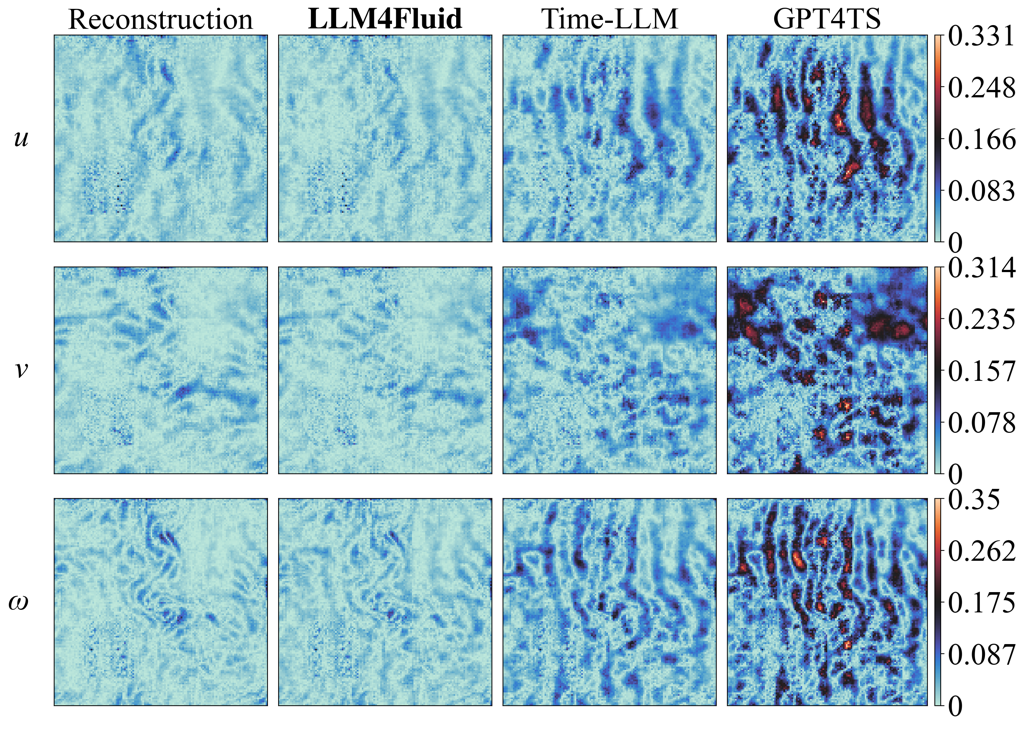

The LLM4Fluid framework demonstrates improved predictive accuracy in fluid dynamics simulations through the integration of Disentangled Reduced-Order Modeling (DROM) with a Large Language Model (LLM). Evaluations across five datasets, utilizing benchmark flows including Lid-Driven Cavity and Channel Flow, consistently yielded the lowest Mean Squared Error (MSE) among the compared methods. Furthermore, analysis of predictive drift indicates that LLM4Fluid exhibits the slowest rate of error accumulation over time, suggesting enhanced long-term stability and reliability in forecasting fluid flow evolution. These results quantitatively demonstrate the efficacy of the combined DROM-LLM approach for accurate and sustained prediction of complex fluid dynamics.
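For readers who want to reproduce this kind of analysis, here is one plausible way to compute a per-step MSE and a single "drift" number. The article does not give the paper's exact definition of predictive drift, so the least-squares slope of error against step index used below is an assumption, and the function names are illustrative.

```python
def mse_per_step(predicted, truth):
    """MSE at each forecast step; inputs are lists of flattened fields."""
    return [
        sum((p - t) ** 2 for p, t in zip(pred_field, true_field)) / len(true_field)
        for pred_field, true_field in zip(predicted, truth)
    ]


def drift_rate(errors):
    """Least-squares slope of error versus step index (assumed drift measure)."""
    n = len(errors)
    mean_step = (n - 1) / 2.0
    mean_err = sum(errors) / n
    cov = sum((k - mean_step) * (e - mean_err) for k, e in enumerate(errors))
    var = sum((k - mean_step) ** 2 for k in range(n))
    return cov / var


# Toy rollout whose error grows at every step.
truth = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
predicted = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]

errors = mse_per_step(predicted, truth)
drift = drift_rate(errors)
print(errors, drift)
```

A smaller slope means errors accumulate more slowly over the rollout, which is the property the comparison above refers to.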

Quantitative evaluation demonstrates LLM4Fluid’s superior performance in flow field prediction, consistently achieving the highest R² (Coefficient of Determination) values across all tested datasets. The R² metric, which equals 1 for a perfect prediction and falls to 0 or below for a model that does no better than predicting the mean, quantifies the proportion of variance in the ground truth flow fields explained by the model; LLM4Fluid’s consistently higher values indicate closer agreement between predicted and actual flow behavior. Furthermore, LLM4Fluid exhibited a reduction in Mean Absolute Error (MAE) compared to baseline models, signifying a lower average magnitude of error in its predictions. This combination of high R² and low MAE confirms LLM4Fluid’s improved ability to accurately represent and forecast the dynamics of fluid flow.
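Both metrics are short enough to write out. The sketch below uses the standard definitions, with the coefficient of determination computed as 1 minus the ratio of residual to total sum of squares. Under that definition the score can drop below 0 when predictions are worse than simply guessing the mean. Function names and toy values are illustrative.

```python
def mean_absolute_error(predicted, truth):
    """Average magnitude of the pointwise error."""
    return sum(abs(p - t) for p, t in zip(predicted, truth)) / len(truth)


def r_squared(predicted, truth):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_truth = sum(truth) / len(truth)
    ss_res = sum((t - p) ** 2 for p, t in zip(predicted, truth))
    ss_tot = sum((t - mean_truth) ** 2 for t in truth)
    return 1.0 - ss_res / ss_tot


truth = [1.0, 2.0, 3.0, 4.0]

perfect = r_squared(truth, truth)                   # exact match
mean_only = r_squared([2.5, 2.5, 2.5, 2.5], truth)  # always predicts the mean
reversed_r2 = r_squared([4.0, 3.0, 2.0, 1.0], truth)
reversed_mae = mean_absolute_error([4.0, 3.0, 2.0, 1.0], truth)
print(perfect, mean_only, reversed_r2, reversed_mae)
```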

LLM4Fluid demonstrates enhanced prediction stability, as quantified by the Standard Deviation of Absolute Errors (σ) across velocity components u, v, and vorticity ω. Lower σ values indicate a tighter clustering of errors around the mean absolute error, signifying reduced variability in predictions. Across all evaluated variables, LLM4Fluid consistently achieved the smallest σ compared to baseline models, implying fewer instances of large, localized prediction errors and a more reliable overall forecast of the flow field’s temporal evolution. This improved stability is critical for applications sensitive to prediction outliers, such as control systems and accurate simulation of turbulent flows.
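The stability measure can be computed per variable as sketched below. Whether the paper uses the population or the sample standard deviation is not stated here, so the population form (`statistics.pstdev`) is an assumption, as are the function name and the toy numbers.

```python
import statistics


def abs_error_std(predicted, truth):
    """Population standard deviation of the pointwise absolute errors."""
    abs_errors = [abs(p - t) for p, t in zip(predicted, truth)]
    return statistics.pstdev(abs_errors)


# Toy data keyed by variable name (u, v: velocity components; omega: vorticity).
truth = {"u": [0.0, 0.0, 0.0, 0.0], "v": [0.0, 0.0, 0.0, 0.0], "omega": [1.0, 1.0, 1.0, 1.0]}
predicted = {"u": [1.0, 1.0, 1.0, 1.0], "v": [0.0, 2.0, 0.0, 2.0], "omega": [1.0, 1.0, 1.0, 1.0]}

sigma = {name: abs_error_std(predicted[name], truth[name]) for name in truth}
print(sigma)
```

In the toy data, `u` and `v` have the same mean absolute error, but only `v` has a nonzero σ: its errors are concentrated in a few points, which is exactly the kind of outlier behaviour this metric is meant to expose.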

Beyond the Horizon: Implications and Future Trajectories

The confluence of large language models and reduced-order modeling offers a new way to tackle intricate fluid dynamics challenges. Traditional computational fluid dynamics, while accurate, often demands excessive computational resources, hindering real-time predictions. A pretrained LLM, by contrast, can pick up flow dynamics from limited data and generalize to unseen conditions quickly, without retraining. Reduced-order modeling techniques distill the essential dynamics of the flow, creating a compact representation that the LLM can efficiently process. This combined approach doesn’t merely offer a faster solution; it also incorporates physics-based constraints in the latent representation, which helps keep predictions plausible when the model is applied beyond its training conditions. Consequently, applications requiring immediate analysis – such as aerodynamic control, weather forecasting, and optimization of industrial processes – stand to benefit from this advancement, with the prospect of predicting and managing complex fluid behavior in real time.

The accuracy of predictions generated by the LLM4Fluid framework hinges significantly on minimizing reconstruction loss. This loss, essentially a measure of the difference between the original, high-fidelity fluid dynamics data and the autoencoder’s reconstruction from the latent space, dictates how faithfully the model captures essential physical features. A substantial reconstruction loss indicates that the latent representation has failed to capture the underlying dynamics, and any forecast decoded from it will inherit that error. Consequently, training strategies are designed to reduce this loss, often involving specialized loss functions and careful data weighting. Lowering reconstruction loss not only enhances the model’s ability to represent the current fluid state with precision but also improves its capacity to extrapolate and predict behavior in novel, unseen scenarios – a critical requirement for real-world applications like aerodynamic design and weather forecasting.

The development of LLM4Fluid is not reaching a plateau, but rather laying the groundwork for increasingly sophisticated simulations. Current research endeavors are geared towards expanding the model’s capabilities to accurately represent fluid behavior in far more intricate geometrical configurations and across a broader spectrum of flow regimes – from laminar flow to highly turbulent conditions. Beyond mere prediction, investigations are also underway to leverage LLM4Fluid’s predictive power for active control of fluid systems, potentially optimizing designs for enhanced efficiency and performance. This includes exploring applications in areas like aerodynamic shaping for reduced drag, or the precise manipulation of microfluidic devices, opening avenues for innovation in diverse fields ranging from aerospace engineering to biomedical technology.

The pursuit of predictive accuracy in fluid dynamics, as demonstrated by LLM4Fluid, often leads to models of increasing complexity. However, the framework subtly advocates for a different path, one where generalization emerges not from intricate design, but from distilling core principles. This aligns with a remark attributed to Blaise Pascal: “The eloquence of the body is in the muscles; the eloquence of the mind is in clarity.” LLM4Fluid seeks to achieve efficient temporal prediction and disentanglement through a focused architecture, suggesting that true power resides in removing superfluous detail, not adding to it. Clarity, in this context, is the minimum viable kindness.

What Remains?

The pursuit of predictive fidelity in fluid dynamics has, for decades, accumulated layers of complexity. LLM4Fluid offers a necessary subtraction. The model’s capacity to distill physical principles into a learned representation is not, however, a destination. Rather, it highlights the enduring challenge of disentanglement – not merely of variables, but of scale. Current architectures still grapple with extending predictive horizons without succumbing to the inevitable drift inherent in any approximation. The true test lies not in matching existing benchmarks, but in gracefully degrading performance – in knowing how it fails, and why.

Further refinement necessitates a shift in evaluation metrics. Accuracy, while useful, obscures the crucial question of robustness. A model that excels on curated datasets, but falters with even minor perturbations, offers little practical utility. The field requires challenges that deliberately introduce ambiguity, forcing models to reason – to extrapolate beyond the precisely defined boundaries of the training data. The goal is not a perfect mirror of reality, but a reliably informative abstraction.

Ultimately, the value of this work resides in its suggestion: that powerful, general-purpose models can be sculpted to address specific scientific problems, not by adding more layers, but by carefully removing the superfluous. The path forward demands a continued commitment to parsimony – to recognizing that, in the end, what’s left is what matters.

Original article: https://arxiv.org/pdf/2601.21681.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-31 00:56