Author: Denis Avetisyan

This review details a new mathematical approach to efficiently allocate resources in edge computing networks, ensuring reliable performance for demanding applications like video analytics.

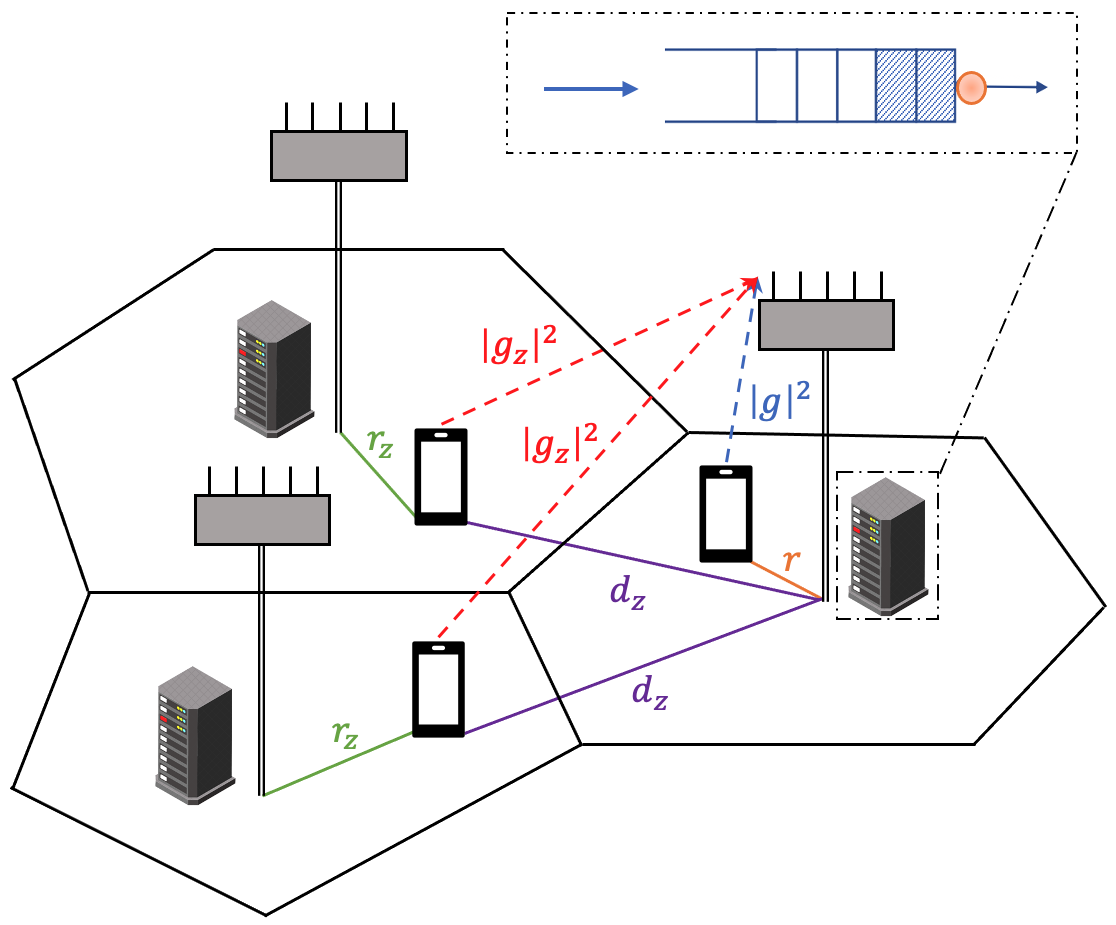

A stochastic geometry framework optimizes resource dimensioning in multi-cellular edge intelligent systems to guarantee quality of service.

Efficiently deploying edge intelligence requires balancing competing demands on wireless and computational resources, yet current approaches often decouple these or rely on simplified models. The paper under review, ‘Stochastic Modeling and Resource Dimensioning of Multi-Cellular Edge Intelligent Systems’, introduces a unified stochastic framework to jointly optimize resource allocation in multi-cell edge systems, capturing spatial uncertainty and workload dynamics. By combining stochastic geometry, queueing theory, and empirical AI profiling, the authors derive tractable expressions for end-to-end offloading delay and demonstrate a cost-efficient design through convex optimization. How can these findings be extended to accommodate more complex AI workloads and heterogeneous edge environments?

The Inevitable Shift: Addressing Latency at the Network Edge

Conventional cloud-based systems, while powerful, increasingly face limitations when serving applications demanding near-instantaneous response times. The inherent distance between a user’s device and centralized cloud servers introduces unavoidable delays – known as latency – which become critical bottlenecks for emerging technologies. Applications like augmented reality, autonomous vehicles, and real-time industrial control require processing data within milliseconds, a feat simply unattainable with data constantly traveling to and from distant data centers. This challenge isn’t merely about bandwidth; even with faster connections, the laws of physics impose a minimum delay. Consequently, a fundamental shift is underway, recognizing that pushing computational power closer to the source of data – to the ‘edge’ of the network – is no longer optional, but essential for unlocking the full potential of these next-generation technologies.

The increasing demand for instantaneous data processing is driving a fundamental shift in computing architecture, prioritizing the deployment of intelligence at the network edge. This approach recognizes that applications such as real-time video analytics – encompassing areas like autonomous vehicles, smart city surveillance, and industrial automation – cannot tolerate the delays inherent in transmitting data to centralized cloud servers. By processing video streams directly on devices like cameras, base stations, or local servers, critical insights can be extracted and acted upon with minimal latency. This localized processing not only enhances responsiveness but also reduces bandwidth consumption and improves data privacy, paving the way for a new generation of intelligent, connected systems that react in real-time to the physical world.

Successfully implementing edge intelligence demands a fundamental reassessment of how network resources are provisioned and managed. Traditional centralized approaches prove inadequate when dealing with the geographically distributed nature of edge devices and the stringent latency requirements of applications like autonomous vehicles or augmented reality. Consequently, network architectures must evolve towards greater decentralization and dynamic resource allocation, intelligently distributing computational workloads closer to the data source. This involves developing sophisticated algorithms for predicting demand, prioritizing critical tasks, and efficiently sharing resources across the edge network. Furthermore, quality of service (QoS) guarantees require robust mechanisms for monitoring network performance, detecting congestion, and proactively adjusting resource allocation to maintain optimal responsiveness and reliability – a shift from simply providing bandwidth to actively managing performance at the edge.

Resource Dimensioning: The Foundation of Scalable Edge Networks

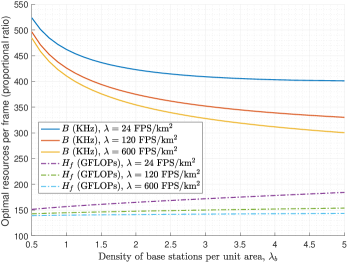

Efficient resource dimensioning is critical for edge network deployments due to the finite and often costly nature of both wireless spectrum and computational resources at the edge. This process involves determining the appropriate quantity of resources – including bandwidth, processing power, memory, and storage – needed to meet specified quality-of-service (QoS) requirements for applications and users. Under-provisioning leads to performance degradation and user dissatisfaction, while over-provisioning results in unnecessary capital expenditure and operational costs. Effective dimensioning must consider factors such as user density, application demands, data rates, latency constraints, and the heterogeneous nature of edge environments, balancing performance with economic viability to ensure scalable and sustainable network operation.

Effective resource dimensioning in edge networks requires careful consideration of the interdependent relationship between wireless spectrum availability, edge server computational capacity, and resulting interference. The amount of available radio frequency spectrum directly impacts the data rates achievable by user equipment, while edge server capacity dictates the volume of data that can be processed and the latency experienced by applications. Critically, increasing network density – to improve coverage and capacity – exacerbates inter- and intra-cell interference, reducing the signal-to-interference-plus-noise ratio (SINR) and necessitating strategies such as frequency reuse and power control. Insufficient computational resources at the edge can create bottlenecks, even with adequate wireless connectivity, leading to packet loss and increased latency. Conversely, ample computational resources are ineffective if wireless spectrum is limited or severely impacted by interference. Therefore, a holistic approach to dimensioning must jointly optimize these three critical elements to meet performance targets and ensure cost-effective operation.

Modeling and optimizing edge networks necessitates the application of advanced mathematical tools due to the inherent stochasticity and complexity of wireless environments. Stochastic geometry provides a framework for analyzing the spatial distribution of nodes and interference, allowing for performance evaluation and prediction in randomly deployed networks. Specifically, tools like point process theory are used to model node locations and derive statistical properties of interference. Simultaneously, queueing theory is crucial for assessing server capacity and latency, particularly in relation to computational demands at the edge. By modeling incoming requests as a stochastic process, queueing models – such as M/M/1 or more complex variations – enable the calculation of key performance indicators like average waiting time and server utilization, ultimately informing resource allocation decisions and ensuring quality of service.
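To make the queueing side concrete, here is a minimal sketch of the textbook M/M/1 steady-state formulas mentioned above. It assumes Poisson arrivals and exponential service at a single edge server; the function name `mm1_metrics` and the example rates are illustrative and do not come from the paper.

```python
def mm1_metrics(arrival_rate: float, service_rate: float) -> dict:
    """Steady-state metrics of an M/M/1 queue (Poisson arrivals, exponential service)."""
    if arrival_rate <= 0 or service_rate <= 0:
        raise ValueError("rates must be positive")
    if arrival_rate >= service_rate:
        raise ValueError("unstable queue: arrival_rate must be below service_rate")
    rho = arrival_rate / service_rate                  # server utilization
    mean_sojourn = 1.0 / (service_rate - arrival_rate)  # W: waiting plus service time
    mean_wait = rho / (service_rate - arrival_rate)     # Wq: time spent waiting in queue
    mean_in_system = rho / (1.0 - rho)                  # L, consistent with Little's law L = lambda * W
    return {
        "utilization": rho,
        "mean_sojourn": mean_sojourn,
        "mean_wait": mean_wait,
        "mean_in_system": mean_in_system,
    }


# Hypothetical example: 8 tasks/s offered to a server that completes 10 tasks/s.
metrics = mm1_metrics(8.0, 10.0)
```

With these example rates the utilization is 0.8, the mean waiting time 0.4 s, and the mean time in the system 0.5 s, which shows how quickly delay grows as the load approaches capacity.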

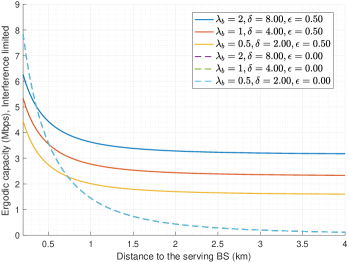

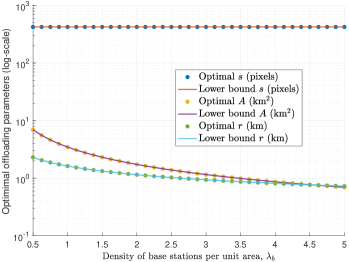

A stochastic framework for dimensioning edge resources has been developed, revealing a key relationship between frequency reuse and network density for cost-efficient operation in interference-limited systems. This framework models the random spatial distribution of edge nodes and users to analyze interference characteristics. Results demonstrate that maintaining a proportional scaling between frequency reuse distance and network density – specifically, reducing the reuse distance as density increases – minimizes the total cost of deployment while satisfying quality-of-service requirements. This approach avoids over-provisioning resources to combat interference, leading to significant reductions in infrastructure expenses. The framework utilizes tools from stochastic geometry to derive analytical expressions for key performance indicators, such as the average interference level and the probability of successful transmission, enabling optimized resource allocation strategies.

Mathematical Foundations for Optimal Network Performance

Defining resource allocation as an optimization problem involves representing the system’s constraints – such as bandwidth limitations, computational capacity, and quality of service (QoS) requirements – as mathematical equations or inequalities. The objective function, which seeks to be maximized or minimized (e.g., throughput, latency, cost), is then defined in terms of the controllable resource allocation variables. This formulation allows for the application of mathematical optimization techniques to systematically search the solution space and identify the configuration of resources that yields the best performance according to the defined objective, rather than relying on heuristic or manual approaches. The result is a quantifiable and repeatable method for determining optimal resource assignments.

Convex optimization provides a robust methodology for resource allocation problems by transforming them into a mathematically tractable form. Specifically, formulating the problem with convex objective functions and convex constraint sets guarantees that any locally optimal solution is also the global optimum; this eliminates the risk of converging to suboptimal configurations. This is achieved by leveraging well-established algorithms like gradient descent or interior-point methods, which are proven to efficiently converge to the global optimum for convex problems. The benefit lies in the certainty of achieving the best possible resource allocation given defined constraints, which is particularly crucial for maintaining Quality of Service (QoS) and minimizing operational costs in complex systems. Furthermore, the mathematical properties of convex optimization allow for rigorous analysis of solution sensitivity and scalability.
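As a rough illustration of why convexity helps, consider a toy dimensioning problem that is not the paper's formulation: minimize the cost c_b·B + c_f·F of bandwidth B and compute capacity F, subject to a delay budget a/B + b/F ≤ d_max. All symbols and numbers here are assumptions made for the sketch. Because the problem is convex, a simple one-dimensional search cannot get stuck in a local minimum.

```python
def min_cost_allocation(a, b, c_b, c_f, d_max, iters=200):
    """Minimize c_b*B + c_f*F subject to a/B + b/F <= d_max with B, F > 0.

    Toy model (an assumption, not the paper's): a/B is the transmission
    delay and b/F the compute delay of one offloaded task.
    """
    def compute_needed(bandwidth):
        # The delay constraint is tight at the optimum, so F is fixed by B.
        return b / (d_max - a / bandwidth)

    def cost(bandwidth):
        return c_b * bandwidth + c_f * compute_needed(bandwidth)

    lo = (a / d_max) * (1.0 + 1e-9)    # just above the smallest feasible bandwidth
    hi = cost(2.0 * a / d_max) / c_b   # above this, bandwidth alone costs more than a known feasible point
    # Ternary search: valid because cost() is strictly convex on (a/d_max, inf).
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if cost(m1) < cost(m2):
            hi = m2
        else:
            lo = m1
    bandwidth = 0.5 * (lo + hi)
    return bandwidth, compute_needed(bandwidth), cost(bandwidth)


# Hypothetical numbers in arbitrary units.
B_opt, F_opt, total_cost = min_cost_allocation(a=2.0, b=4.0, c_b=1.0, c_f=1.0, d_max=0.1)
```

For this toy problem the Lagrangian conditions also give a closed form, with optimal cost (√(a·c_b) + √(b·c_f))² / d_max, so the search result can be checked: B ≈ 48.28, F ≈ 68.28, cost ≈ 116.57.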

System signal analysis leverages the Laplace Transform to convert time-domain functions into the frequency domain, facilitating the characterization of system response and stability. This analysis informs the optimization process by providing data on transient behavior and steady-state characteristics. Concurrently, queueing theory, specifically the Erlang C formula, models waiting times in systems with probabilistic arrival and service rates. The term C(ρ, k) = \frac{ρ^k}{k!} \frac{1}{1 - ρ/k}, where ρ represents the offered traffic load and k denotes the number of servers, is the weight of the states in which all k servers are busy; dividing it by \sum_{n=0}^{k-1} \frac{ρ^n}{n!} + C(ρ, k) gives the probability that an arriving task must wait, which requires ρ < k. From that probability follow key performance indicators such as the average queue length and expected waiting time, providing crucial data for resource allocation optimization and ensuring Quality of Service (QoS) requirements are met.
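The waiting probability can be computed directly from the standard Erlang C formula for an M/M/k queue. The sketch below assumes that ρ is the offered load λ/μ in Erlangs, matching the 1/(1 - ρ/k) factor in the text; the function names are illustrative and not taken from the paper.

```python
import math


def erlang_c(offered_load: float, servers: int) -> float:
    """Probability that an arriving task must wait in an M/M/k queue."""
    if servers < 1 or offered_load <= 0:
        raise ValueError("need servers >= 1 and offered_load > 0")
    if offered_load >= servers:
        raise ValueError("unstable system: offered_load must be below servers")
    # Weight of the states in which all servers are busy.
    busy_term = (offered_load ** servers / math.factorial(servers)) / (1.0 - offered_load / servers)
    # Weight of the states in which at least one server is idle.
    idle_terms = sum(offered_load ** n / math.factorial(n) for n in range(servers))
    return busy_term / (idle_terms + busy_term)


def mean_queueing_delay(arrival_rate: float, service_rate: float, servers: int) -> float:
    """Expected waiting time in queue: Wq = C / (k*mu - lambda)."""
    wait_probability = erlang_c(arrival_rate / service_rate, servers)
    return wait_probability / (servers * service_rate - arrival_rate)
```

For a single server the result reduces to the utilization, for example `erlang_c(0.8, 1)` is 0.8, and for two servers carrying one Erlang it is 1/3. The direct factorial form is fine for small k; for large server pools a recursive evaluation is numerically safer.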

Effective interference management is integral to resource optimization in multi-user systems. Techniques such as frequency reuse, where radio frequencies are assigned and reused across different cells, are necessary to limit inter-cell interference and maintain signal quality. The optimization process must account for the constraints imposed by these mitigation strategies; for example, frequency reuse factors dictate spatial separation requirements between base stations to avoid co-channel interference. Failing to incorporate interference models into the optimization can lead to unrealistic resource allocations and ultimately, unacceptable levels of service degradation, even if theoretical bandwidth or compute costs appear optimal in isolation. Accurate modeling of interference, including path loss, shadowing, and fading, is therefore a prerequisite for achieving practical and sustainable performance gains.
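A quick way to see how the reuse factor constrains interference is the classical hexagonal-grid approximation. This is a textbook model, not the stochastic-geometry model of the paper, and it assumes six equidistant first-tier co-channel interferers, equal transmit powers, and pure power-law path loss.

```python
import math


def cochannel_sir_db(reuse_factor: int, path_loss_exponent: float, interferers: int = 6) -> float:
    """Worst-case downlink SIR (dB) at the cell edge of a hexagonal layout.

    Uses the co-channel reuse ratio D/R = sqrt(3N) and ignores noise,
    shadowing, and fading (simplifying assumptions of this sketch).
    """
    if reuse_factor < 1 or interferers < 1:
        raise ValueError("reuse_factor and interferers must be at least 1")
    reuse_ratio = math.sqrt(3.0 * reuse_factor)            # D / R
    sir_linear = reuse_ratio ** path_loss_exponent / interferers
    return 10.0 * math.log10(sir_linear)


# Hypothetical sweep over common reuse factors with path-loss exponent 4.
sir_by_reuse = {n: cochannel_sir_db(n, 4.0) for n in (1, 3, 4, 7)}
```

With a path-loss exponent of 4, a reuse factor of 7 yields roughly 18.7 dB while full reuse (factor 1) yields under 2 dB, which is the kind of spacing-versus-spectrum trade-off the optimization has to respect.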

Analysis indicates that a trade-off parameter of \beta_1 = 0.5 represents the optimal balance between bandwidth allocation and associated compute costs within the system. This value was determined through modeling and simulation, resulting in a Pareto-optimal solution; further adjustments to \beta_1 would necessitate a reduction in one performance metric to improve the other. Specifically, this parameter setting minimizes the overall cost function while satisfying defined Quality of Service (QoS) constraints, effectively maximizing resource utilization and system efficiency. The Pareto-optimal solution, achieved with \beta_1 = 0.5, represents a point on the Pareto frontier where no further improvement in one objective is possible without compromising another.
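One common way to trace such a trade-off is weighted-sum scalarization. The sketch below assumes, purely for illustration, that \beta_1 weights bandwidth cost against compute cost in a toy problem: minimize β·B + (1 - β)·F subject to a/B + b/F ≤ d_max. The model, the symbols a, b, d_max, and the numbers are assumptions and are not taken from the paper.

```python
import math


def weighted_sum_optimum(beta: float, a: float, b: float, d_max: float):
    """Closed-form minimizer of beta*B + (1 - beta)*F s.t. a/B + b/F <= d_max.

    Follows from the Lagrangian conditions of this convex toy problem;
    the delay constraint is active at the optimum.
    """
    if not 0.0 < beta < 1.0:
        raise ValueError("beta must lie strictly between 0 and 1")
    bandwidth = (a + math.sqrt(a * b * (1.0 - beta) / beta)) / d_max
    compute = (b + math.sqrt(a * b * beta / (1.0 - beta))) / d_max
    return bandwidth, compute


# Each weight yields one Pareto-optimal (bandwidth, compute) pair.
frontier = [(beta, *weighted_sum_optimum(beta, 2.0, 4.0, 0.1))
            for beta in (0.1, 0.3, 0.5, 0.7, 0.9)]
```

Sweeping the weight moves along the frontier: as β grows, bandwidth becomes relatively more expensive, so the optimum uses less of it and more compute. Every point is Pareto-optimal, so singling out one weight as the best reflects a chosen cost model rather than a property of the frontier itself.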

Maintaining a minimum server load, denoted as \rho_{min} = 0.528, is a critical operational parameter for upholding the defined Quality of Service (QoS) requirements. This threshold represents the lower bound on server utilization necessary to prevent instability and ensure consistent performance under anticipated workloads. Falling below this value introduces a heightened risk of service degradation, as the system may be unable to efficiently process incoming requests and maintain acceptable response times. The value was determined through system-level simulations and analysis of key performance indicators, specifically latency and throughput, under various load conditions, and represents the point at which the system transitions from stable, responsive operation to potentially unreliable behavior. Consistent monitoring and dynamic resource allocation strategies are therefore essential to guarantee that the server load remains at or above this specified minimum.

Realizing the Promise: Guaranteed QoS and Future Applications

Mission-critical applications, ranging from remote surgery to industrial automation and public safety communications, demand consistently reliable performance, and achieving this necessitates statistical Quality of Service (QoS) guarantees. Unlike deterministic guarantees – which promise performance levels with absolute certainty – statistical QoS acknowledges inherent network variability and instead assures a specified probability of meeting defined performance targets, such as latency, bandwidth, or packet loss. This probabilistic approach is far more practical for wireless networks, where conditions fluctuate rapidly due to interference, mobility, and dynamic traffic loads. By quantifying the likelihood of successful performance, system designers can tailor resource allocation and network protocols to satisfy application requirements with a pre-defined level of confidence, thereby enabling dependable operation even under challenging conditions and unlocking the potential for truly robust and responsive services.
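A small worked example shows what a statistical guarantee looks like in practice. It assumes a single M/M/1 server with first-come-first-served service, for which the total time in the system is exponentially distributed with rate μ - λ, so P(T > d) = e^{-(μ - λ)d}. The function names and numbers are illustrative, and the paper's end-to-end delay model is richer than this.

```python
import math


def violation_probability(arrival_rate: float, service_rate: float, deadline: float) -> float:
    """P(sojourn time > deadline) for a stable M/M/1 FCFS queue."""
    if arrival_rate >= service_rate:
        raise ValueError("unstable queue: arrival_rate must be below service_rate")
    return math.exp(-(service_rate - arrival_rate) * deadline)


def min_service_rate(arrival_rate: float, deadline: float, violation_prob: float) -> float:
    """Smallest service rate mu such that P(T > deadline) <= violation_prob."""
    if not 0.0 < violation_prob < 1.0 or deadline <= 0:
        raise ValueError("need 0 < violation_prob < 1 and deadline > 0")
    return arrival_rate + math.log(1.0 / violation_prob) / deadline


# Hypothetical target: 20 tasks/s, 100 ms deadline, at most 1% violations.
required_rate = min_service_rate(20.0, 0.1, 0.01)
```

For these numbers the server must complete about 66 tasks per second, more than three times the arrival rate. The gap between mean load and required capacity is the price of the probabilistic guarantee.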

Achieving reliable Quality of Service (QoS) fundamentally relies on effective resource dimensioning – the precise allocation of network resources in response to fluctuating conditions and application demands. This isn’t simply about having enough capacity, but about intelligently distributing it where and when it’s needed most. By dynamically adjusting resource allocation – bandwidth, processing power, and storage – networks can proactively address congestion, minimize latency, and maintain consistent performance levels even under peak loads. Sophisticated algorithms analyze real-time network data – traffic patterns, user density, and application requirements – to optimize resource distribution, ensuring critical applications receive the necessary support. This granular control is paramount for supporting latency-sensitive services and guaranteeing a specified probability of meeting performance targets, effectively transforming network capacity from a static asset into a responsive, adaptable infrastructure.

Accurate network planning relies on realistic modeling of base station deployment, and treating their distribution as a Poisson Point Process (PPP) offers a significant advantage. This mathematical approach assumes base stations are randomly and independently scattered across a given area, mirroring real-world scenarios where perfect, pre-determined placement is impractical. By leveraging the properties of PPP, researchers can analytically derive the probability of interference and coverage, crucial metrics for resource allocation. This allows for the development of algorithms that dynamically adjust resource assignments – bandwidth, power, and frequency – to maximize network performance while minimizing costs. The use of PPP isn’t simply a theoretical exercise; it allows for a more precise understanding of signal propagation and interference patterns, ultimately leading to more efficient and robust wireless networks capable of supporting increasingly demanding applications.
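The analytical convenience of the PPP can be checked with a short simulation. For a homogeneous PPP of intensity λ, the probability of finding at least one base station within distance r of a user is 1 - e^{-λπr²}. The sketch below samples base stations on a square window with the standard library only; the window size, seed, and function names are assumptions made for the example.

```python
import math
import random


def sample_ppp(intensity: float, side: float, rng: random.Random):
    """Sample a homogeneous PPP on the square [0, side] x [0, side]."""
    mean_count = intensity * side * side
    # Poisson count: arrivals of a unit-rate Poisson process within [0, mean_count].
    count, t = 0, rng.expovariate(1.0)
    while t < mean_count:
        count += 1
        t += rng.expovariate(1.0)
    # Given the count, the points are independent and uniform on the window.
    return [(rng.uniform(0.0, side), rng.uniform(0.0, side)) for _ in range(count)]


def empirical_coverage(intensity: float, side: float, radius: float, trials: int, seed: int = 0) -> float:
    """Fraction of trials with a base station within `radius` of the window centre.

    The comparison with the analytic value is exact only when
    radius <= side / 2, so that the disc lies inside the window.
    """
    rng = random.Random(seed)
    cx = cy = side / 2.0
    hits = 0
    for _ in range(trials):
        points = sample_ppp(intensity, side, rng)
        if points and min(math.hypot(x - cx, y - cy) for x, y in points) <= radius:
            hits += 1
    return hits / trials


def analytic_coverage(intensity: float, radius: float) -> float:
    """P(nearest base station within radius) = 1 - exp(-lambda * pi * r^2)."""
    return 1.0 - math.exp(-intensity * math.pi * radius ** 2)
```

Comparing `empirical_coverage(1.0, 6.0, 0.5, 2000)` with `analytic_coverage(1.0, 0.5)` should give values that agree within sampling noise, which is why closed-form PPP results can replace large simulation campaigns in the dimensioning step.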

Research indicates that precise frequency reuse scaling is paramount for cost-effective network provisioning, particularly in environments plagued by interference. Specifically, a scaling factor of \lambda_b/\delta = 0.25 represents a critical threshold; maintaining this ratio effectively mitigates the need for excessive resource allocation. Deviations towards higher values introduce unnecessary provisioning costs as interference intensifies, while lower values compromise spectral efficiency. This finding highlights the sensitivity of network economics to frequency reuse strategies and underscores the importance of optimized scaling for sustainable and efficient operation in dense deployments, ultimately enabling broader adoption of bandwidth-intensive applications.

The convergence of guaranteed Quality of Service (QoS) and precise resource allocation is poised to fully realize the capabilities of edge intelligence. This technological shift promises to move data processing and decision-making closer to the source, dramatically reducing latency and bandwidth demands – critical improvements for emerging applications. Specifically, autonomous driving systems will benefit from near real-time responsiveness for object recognition and path planning, enhancing safety and reliability. Augmented reality experiences will become more immersive and seamless, delivering complex visual information without lag or disruption. By enabling these demanding applications, advancements in network architecture and resource management are not simply improving connectivity; they are fundamentally reshaping what is possible at the intersection of the digital and physical worlds, fostering innovation and creating new paradigms for interactive technology.

The pursuit of optimized resource allocation, as detailed in this study of edge-intelligent systems, often obscures a fundamental truth. The work elegantly demonstrates a stochastic framework for guaranteeing quality of service, yet the inherent complexity of these systems begs a critical question: what can be removed? Blaise Pascal observed that, “The eloquence of angels is no more than the silence of geometricians.” This sentiment resonates deeply; the true intelligence lies not in elaborating intricate models, but in distilling them to their essential components. The statistical guarantees offered by this framework are valuable, but only if achieved with a parsimonious approach, prioritizing clarity over exhaustive detail. Simplicity, after all, is not limitation, but intelligence.

Beyond the Horizon

The presented framework, while offering statistical guarantees for resource dimensioning, inherently simplifies the complexities of genuine edge deployments. The assumption of static network topology and predictable video analytics demands represents a necessary abstraction, but one that ultimately limits the scope of practical applicability. Future work must address the dynamic nature of wireless channels – the inevitable fluctuations and interference – not as stochastic variables to be modeled, but as fundamental properties to be accommodated within the resource allocation itself. The pursuit of perfect models is, after all, a distraction from the imperfections of reality.

A more pressing challenge lies in extending this analysis beyond single-application scenarios. Real-world edge systems will host a multiplicity of services, each with unique quality-of-service requirements and resource demands. The current paradigm, focused on video analytics, offers a useful proving ground, but ultimately represents a specialized case. The true test will be the development of algorithms capable of achieving Pareto optimality across heterogeneous workloads – a task that demands not merely clever mathematics, but a fundamental re-evaluation of what constitutes ‘efficiency’ in a resource-constrained environment.

Emotion, as a side effect of structure, dictates that further refinement is inevitable. However, the path forward isn’t necessarily one of increasing mathematical sophistication. Clarity is compassion for cognition; perhaps the most valuable contribution future research can offer is a reduction in complexity – a distillation of these stochastic models into actionable insights for system designers. The elimination of unnecessary variables, not their addition, will ultimately define progress.

Original article: https://arxiv.org/pdf/2601.16848.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-27 04:20