Author: Denis Avetisyan

New research details a framework for optimizing security investments by modeling the dynamic interplay between attackers and defenders in interconnected systems.

This review presents a game-theoretic model for analyzing optimal cybersecurity investment under heterogeneous risk profiles and the propagation of cyberattacks.

While increasingly interconnected digital systems offer unprecedented opportunities, they simultaneously amplify the potential for cascading cyberattacks and systemic financial risk. This paper, ‘Network Security under Heterogeneous Cyber-Risk Profiles and Contagion’, introduces a novel framework for optimizing cybersecurity investments by modeling strategic interactions between attackers and defenders within a network context. The core finding demonstrates that optimal defense strategies are critically shaped by heterogeneous valuations of network nodes, where attacker and defender priorities diverge, and by the propagation of risk along contagion paths. How can these insights be leveraged to design more resilient digital infrastructures and proactively mitigate evolving cyber threats?

The Inevitable Cascade: Understanding Network Risk

Contemporary networks, increasingly interwoven into critical infrastructure and daily life, face a rapidly expanding threat landscape. The proliferation of interconnected devices, coupled with the growing sophistication of malicious actors, necessitates a shift from reactive security measures to proactive risk mitigation. Simply responding to breaches after they occur is no longer sufficient; organizations must anticipate potential vulnerabilities and implement strategies to minimize the impact of inevitable attacks. This demand is fueled by the escalating costs associated with data breaches, reputational damage, and operational disruptions, costs that extend far beyond immediate financial losses. Consequently, a comprehensive understanding of network vulnerabilities and the potential pathways for attack propagation is paramount for safeguarding digital assets and ensuring the resilience of modern systems.

Assessing the potential damage from security breaches – known as Cyber Risk quantification – represents a foundational step toward robust network investment strategies. This work introduces a security game framework designed to move beyond qualitative assessments of risk and towards measurable impact. The framework models potential attacker behaviors and their corresponding consequences, allowing for the calculation of expected losses stemming from successful exploits. By translating vulnerabilities into quantifiable financial terms, organizations can prioritize security investments based on a clear understanding of potential return on investment. This approach facilitates informed decision-making, enabling proactive resource allocation to mitigate the most significant threats and bolster overall network resilience. The resulting metrics offer a standardized method for comparing the effectiveness of different security measures and tracking improvements in an organization’s security posture over time.
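As a rough illustration of what translating vulnerabilities into financial terms can look like, the sketch below computes expected loss as breach probability times loss per node and ranks nodes by that figure. The node names, probabilities, and loss amounts are hypothetical and are not taken from the paper, whose actual loss model may be considerably richer.

```python
# Hypothetical per-node inputs: (breach probability, loss if breached, in USD).
nodes = {
    "web_server": (0.30, 50_000),
    "database": (0.10, 400_000),
    "workstation": (0.50, 5_000),
}

def expected_loss(profile):
    """Sum of probability-weighted losses over all nodes."""
    return sum(p * loss for p, loss in profile.values())

def rank_by_expected_loss(profile):
    """Node names sorted from highest to lowest expected loss."""
    return sorted(
        profile,
        key=lambda name: profile[name][0] * profile[name][1],
        reverse=True,
    )

total = expected_loss(nodes)
ranking = rank_by_expected_loss(nodes)
print(f"Total expected loss: {total:,.0f}")
print("Priority order:", ranking)
```

In this toy input the database ranks first despite having the lowest breach probability, because its loss if breached dominates the product.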

Accurately predicting how security flaws spread through interconnected networks presents a significant analytical hurdle, particularly as network designs grow increasingly complex. This research addresses this challenge by introducing a suite of quantifiable metrics designed to map vulnerability propagation across diverse ‘Network Topology’ configurations. The methodology moves beyond simple node-level assessments, instead modeling the cascading effects of breaches as they traverse pathways determined by network architecture. By calculating metrics such as ‘mean time to compromise’ and ‘expected impact radius’, the work provides a means to assess not only where vulnerabilities exist, but also how quickly and how far they can spread, offering a crucial step towards proactive risk management and informed investment in network security.

The Contagion Within: Modeling Breach Propagation

The Contagion Mechanism, as applied to cybersecurity, utilizes principles from epidemiological models to represent the spread of vulnerabilities within a network. This approach treats compromised systems as ‘infected’ hosts and vulnerabilities as the ‘disease’ vector. Propagation occurs when an attacker exploits a vulnerability on one system and then uses that compromised system to target others, mirroring disease transmission. Key parameters, analogous to those in epidemiology, include the infection rate (the probability of successful exploitation) and the recovery rate (how quickly a vulnerability is patched or mitigated). The model assesses how these rates, combined with network topology, determine the speed and extent of a potential breach, allowing for the prediction of outbreak size and the evaluation of mitigation strategies. The result is a quantitative analysis of risk that goes beyond simple vulnerability scoring.

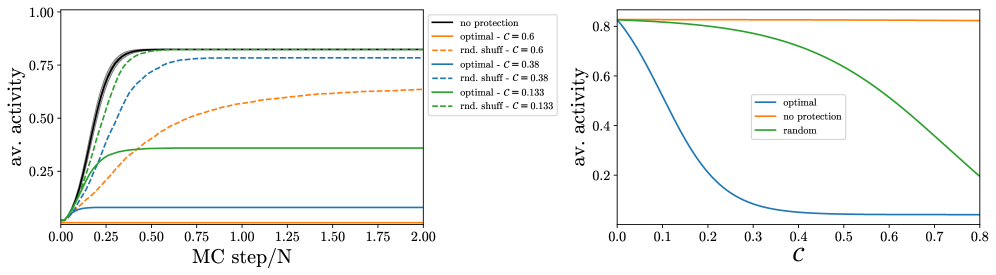

Two primary frameworks model vulnerability propagation based on differing assumptions about how compromise spreads and persists: the Threshold Model and SI Contagion Dynamics. The Threshold Model posits that a vulnerability will only propagate if the number of already-compromised systems exceeds a defined threshold; propagation stalls whenever this threshold is not met. In contrast, SI (Susceptible-Infected) Contagion Dynamics assume that once a system is infected, it remains so indefinitely, continually attempting to propagate the vulnerability to susceptible systems without recovery. This difference shapes model behavior: the Threshold Model predicts self-limiting outbreaks, while SI dynamics can result in widespread, persistent compromise given sufficient initial infections and network connectivity.
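The contrast between the two models can be sketched in a few lines. The example below is a minimal illustration, not the paper's formulation: it assumes a neighbor-count variant of the threshold rule (a node falls once at least `k` of its neighbors are compromised) and a per-contact infection probability `beta` for SI. The five-node line network and all parameter values are hypothetical.

```python
import random

def si_step(adj, infected, beta, rng):
    """One SI round: each infected node infects each susceptible
    neighbor with probability beta; infected nodes never recover."""
    new = set(infected)
    for u in infected:
        for v in adj[u]:
            if v not in infected and rng.random() < beta:
                new.add(v)
    return new

def threshold_step(adj, infected, k):
    """One threshold round: a susceptible node is compromised once
    at least k of its neighbors are compromised (assumed variant)."""
    new = set(infected)
    for v in adj:
        if v not in infected and sum(n in infected for n in adj[v]) >= k:
            new.add(v)
    return new

def run(step, infected, rounds):
    """Apply a one-round update function repeatedly."""
    for _ in range(rounds):
        infected = step(infected)
    return infected

# Hypothetical line network: 0 - 1 - 2 - 3 - 4
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
rng = random.Random(0)

si_final = run(lambda s: si_step(adj, s, beta=1.0, rng=rng), {0}, rounds=4)
th_final = run(lambda s: threshold_step(adj, s, k=2), {0}, rounds=4)
print("SI:", sorted(si_final))
print("Threshold:", sorted(th_final))
```

With `beta=1.0` the SI outbreak reaches every node within four rounds, while the threshold outbreak never leaves node 0, since no neighbor ever sees two compromised contacts. Lowering `beta` makes the SI run stochastic and slower, but infected nodes still never recover.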

Modeling breach propagation demonstrates that a single, initially isolated vulnerability can lead to systemic compromise under specific conditions, particularly within interconnected systems. The rate of escalation is determined by factors such as network topology, vulnerability severity, and the prevalence of automated exploitation techniques. Research indicates that optimal mitigation strategies – including prioritized patching and network segmentation – exhibit resilience across a range of contagion dynamics, meaning their effectiveness isn’t significantly diminished by variations in how the breach spreads. Validation testing has confirmed that these strategies maintain a high degree of protection even when modeled under different attack vectors and exploitation speeds, suggesting a robust approach to reducing overall risk.

The Strategic Equilibrium: Optimizing Investment with Game Theory

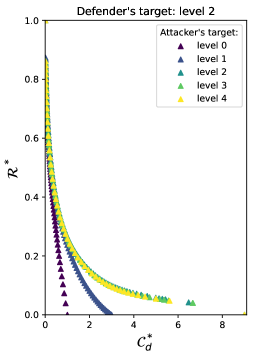

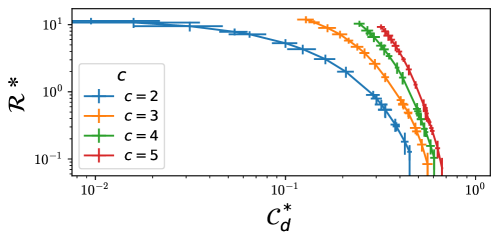

The Stackelberg game models cybersecurity investment as a sequential decision process where the defender moves first, committing to a security budget allocation, and the attacker then responds by selecting the most profitable attack vector given the defender’s strategy. This differs from simultaneous-move games where both actors choose strategies concurrently. The framework, developed in this work, allows for the explicit representation of the attacker’s optimization problem – maximizing damage given the defensive posture – and the defender’s problem of minimizing expected loss, acknowledging the attacker will rationally exploit vulnerabilities. This sequential nature enables the defender to proactively shape the attacker’s response, rather than simply reacting to it, and provides a means to calculate an optimal defensive strategy – the Stackelberg equilibrium – which anticipates the attacker’s best response.
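A toy version of this leader-follower structure can be solved by brute force. The sketch below is an illustration under stated assumptions rather than the paper's method: the node values, the budget, and the rule that each unit of defense halves the breach probability are all hypothetical, the attacker hits exactly one node, and ties in the attacker's choice are broken arbitrarily by node order.

```python
from itertools import product

# Hypothetical node data: what each side stands to lose or gain.
defender_value = {"A": 100, "B": 40, "C": 10}
attacker_value = {"A": 35, "B": 60, "C": 30}
BUDGET = 3  # discrete units of defense to distribute

def breach_prob(units):
    """Assumed success probability: halves with every unit of defense."""
    return 0.5 ** units

def attacker_best_response(alloc):
    """Follower: attack the node with the highest expected attacker payoff."""
    return max(alloc, key=lambda n: attacker_value[n] * breach_prob(alloc[n]))

def defender_loss(alloc):
    """Leader's expected loss, given the attacker's best response."""
    target = attacker_best_response(alloc)
    return defender_value[target] * breach_prob(alloc[target])

def stackelberg_allocation():
    """Enumerate every way to spend BUDGET and keep the lowest-loss one."""
    names = list(defender_value)
    candidates = (
        dict(zip(names, units))
        for units in product(range(BUDGET + 1), repeat=len(names))
        if sum(units) == BUDGET
    )
    return min(candidates, key=defender_loss)

best = stackelberg_allocation()
target = attacker_best_response(best)
loss = defender_loss(best)
print(best, target, loss)
```

In this toy instance the defender does best by leaving node C, which it values least, entirely unprotected: the attacker is steered toward C, and the defender's expected loss is lower than under any allocation that defends C. Exhaustive enumeration only works for tiny examples; realistic networks need an optimization formulation.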

The application of the Stackelberg game to cybersecurity investment enables defenders to move beyond static resource allocation by modeling the interaction with an attacker as a sequential decision process. This allows for the proactive allocation of security resources based on anticipated attacker strategies, maximizing protection given a limited budget. Crucially, the analysis of the Stackelberg equilibrium approximation provides quantifiable error bounds, establishing a demonstrable level of confidence in the effectiveness of the investment strategy. These bounds define the range within which the actual security outcome will fall, given the modeled attacker behavior and resource constraints, thereby providing a rigorous basis for evaluating and justifying investment decisions.

Analyzing the network’s risk profile (specifically, the distribution of vulnerabilities and their potential impact) enables targeted cybersecurity investment. This approach prioritizes resource allocation to areas exhibiting the highest risk, maximizing the reduction of overall network vulnerability per unit of budget. The resulting ‘Efficient Frontier’ graphically represents this trade-off; it depicts the set of investment strategies that yield the lowest level of risk for a given budget, or conversely, the minimum budget required to achieve a desired risk level. Points along the frontier represent Pareto-optimal solutions, meaning no improvement in risk reduction can be achieved without increasing the budget, and vice versa. Investment strategies that lie off the frontier are suboptimal: some other strategy achieves the same risk at lower cost, or lower risk at the same cost.
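Given a set of candidate strategies scored by budget and residual risk, the Pareto-optimal subset can be extracted with a single sorted pass. The sketch below assumes each strategy is summarized as a `(budget, risk)` pair; the sample numbers are hypothetical.

```python
def efficient_frontier(strategies):
    """Return the (budget, risk) points not dominated by any other point.

    A point is dominated if another point costs no more and leaves no
    more risk, with a strict improvement in at least one of the two.
    """
    frontier = []
    best_risk = float("inf")
    # Visit points by increasing budget (then risk) and keep only those
    # that strictly lower the best risk seen so far.
    for budget, risk in sorted(set(strategies)):
        if risk < best_risk:
            frontier.append((budget, risk))
            best_risk = risk
    return frontier

# Hypothetical (budget, residual risk) pairs for candidate strategies.
strategies = [(0, 100), (1, 80), (1, 60), (2, 65), (2, 45), (3, 45), (4, 30)]
frontier = efficient_frontier(strategies)
print(frontier)
```

Here the strategy `(3, 45)` is dropped because `(2, 45)` reaches the same risk for less money, and `(1, 80)` is dropped because `(1, 60)` does better at the same cost.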

Measuring the Shield: Refining Security Investments

Determining the true value of security investments requires moving beyond simple expenditure reports and embracing quantifiable metrics. Network Protection Metrics offer a systematic approach to objectively assess how well specific interventions – such as firewalls, intrusion detection systems, or employee training – actually reduce risk to critical assets. These metrics aren’t simply about counting blocked attacks; they delve into the likelihood of successful breaches, the potential impact of those breaches – considering data loss, financial repercussions, and reputational damage – and the cost of implementing each security measure. By translating abstract concepts like “security” into concrete, measurable values, organizations can gain a clear understanding of what’s working, what isn’t, and where to focus resources for maximum protection. This data-driven approach enables a shift from reactive security spending to proactive, optimized investment strategies.

Security investment strategies often operate under the assumption that all system nodes warrant equal protection. Quantifying risk reduction, however, reveals a more nuanced reality. Recent analyses demonstrate that strategically allocating resources, even to the point of deliberately under-protecting certain nodes, can maximize overall security posture while minimizing expenditure. This counterintuitive approach stems from the principle that the cost of protecting a node must be weighed against the likelihood and impact of a successful attack on that specific asset. By modeling potential attack paths and associated losses, organizations can identify nodes where the cost of full protection outweighs the risk, allowing resources to be redirected to more critical areas. The result is an ‘efficient frontier’ of security investment, where optimal strategies prioritize high-impact defenses over uniformly applied, and potentially wasteful, protections.

The concept of an ‘Efficient Frontier’ offers a powerful visualization for security investment strategy. This frontier, typically represented graphically, demonstrates the range of achievable risk reduction for a given cost, or conversely, the minimum cost required to achieve a desired level of risk reduction. Points on the frontier represent optimal allocations – meaning no further risk reduction can be gained without increasing cost, and no cost savings are possible without accepting greater risk. Analyses reveal that the most effective strategies don’t necessarily involve maximizing protection across all assets; instead, resources are strategically allocated to achieve the highest possible risk reduction within budgetary constraints, sometimes deliberately accepting a higher risk posture for less critical nodes to bolster defenses around more valuable ones. This approach allows organizations to move beyond simply spending more on security and towards making informed, data-driven decisions that maximize impact and demonstrate a clear return on investment.

The Adaptive Shield: Enhancing Resilience Through Deception

Cyber deception introduces a strategic layer of complexity for potential attackers by creating intentionally realistic, yet false, targets and information within a network. This isn’t simply about hiding assets; it actively lures adversaries away from genuine critical systems and data. Through the deployment of decoys – servers, applications, or even entire virtualized environments designed to mimic legitimate resources – security teams can divert attacker attention, observe their tactics, and ultimately increase the time and resources required for a successful breach. The effectiveness stems from exploiting an attacker’s inherent reconnaissance processes; believing they’ve found a valuable target, they dedicate effort to a fabricated system, providing defenders with crucial intelligence and delaying progress toward actual vulnerabilities. This proactive misdirection not only protects core assets but also introduces uncertainty and cost, making a successful attack significantly less appealing.

A robust security posture increasingly relies on proactive measures that go beyond traditional detection and prevention, and integrating cyber deception offers a compelling approach to reducing an organization’s attack surface. This work details how strategically placed decoys and illusions not only divert attackers from genuine assets, but also demonstrably increase the cost and complexity of a successful breach. By analyzing optimal strategy deviations – points where an attacker’s planned course is altered by deceptive elements – researchers have quantified the ‘cyber deception effect’. The findings indicate that effective deception forces adversaries to expend valuable resources on false targets, slows their progress, and ultimately raises the economic barrier to compromise, providing a significant advantage to defenders.

Research is shifting towards proactive cyber defenses centered on adaptive deception, moving beyond static honeypots and decoys. These next-generation techniques aim to build security systems that learn and evolve alongside attacker methodologies, dynamically altering deceptive elements in response to observed probing and exploitation attempts. This involves leveraging machine learning algorithms to analyze threat actor behavior in real-time, tailoring deceptive environments to maximize engagement and misdirection. The ultimate goal is to create a continuously shifting landscape of false targets and misleading information, increasing the attacker’s operational costs and effectively neutralizing their efforts within the network – a system where the defense not only reacts to threats, but anticipates and shapes them.

The pursuit of network security, as detailed within this framework, inherently acknowledges the inevitable spread of vulnerabilities. It’s a system constantly battling entropy, attempting to delay, not necessarily prevent, systemic failure. This resonates with an observation attributed to Alan Turing: “No system is immune to error.” The article’s modeling of contagion, that is, of how cyber risk propagates, is a direct manifestation of this principle. Investment strategies, even optimal ones, are not about achieving perfect safety, but about managing the decay rate, understanding that vulnerabilities will, over time, emerge and spread, demanding continuous adaptation and recalibration. The Stackelberg game approach attempts to impose order, but time, the relentless march of potential exploits, remains the ultimate adversary.

The Horizon of Resilience

The framework presented here, while addressing the immediate problem of strategic cybersecurity investment, inevitably highlights the limitations inherent in any attempt to fully anticipate systemic failure. Each optimized defense is, fundamentally, a temporary deferral of entropy. The study rightly models contagion, but the true complexity lies not merely in how risk propagates, but in the unforeseen vectors that will inevitably emerge. Every failure is a signal from time, revealing the incompleteness of even the most rigorous predictive models.

Future work must move beyond optimizing for known threats. The field requires a deeper engagement with the concept of ‘unknowable unknowns’ – a shift from seeking perfect foresight to cultivating graceful degradation. Refactoring is a dialogue with the past; subsequent iterations must prioritize adaptability over absolute security. Investment strategies should not aim to eliminate risk entirely, but to build systems capable of absorbing and containing unforeseen shocks.

The pursuit of network security is, ultimately, an exercise in applied metaphysics. It is a constant negotiation with the inevitable decay of all complex systems. The challenge is not to conquer vulnerability, but to understand its patterns, and to design systems that, when they ultimately yield, do so with a measure of dignity.

Original article: https://arxiv.org/pdf/2601.16805.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-26 21:32