Author: Denis Avetisyan

A new deep learning approach tackles the challenge of reliably detecting weak health signals from wearable sensors amidst the crowded 2.4 GHz radio spectrum.

This article summarizes a framework that classifies SmartBAN signals with spectrogram analysis and deep learning, so that weak body-area transmissions can be identified reliably despite interference in the ISM band.

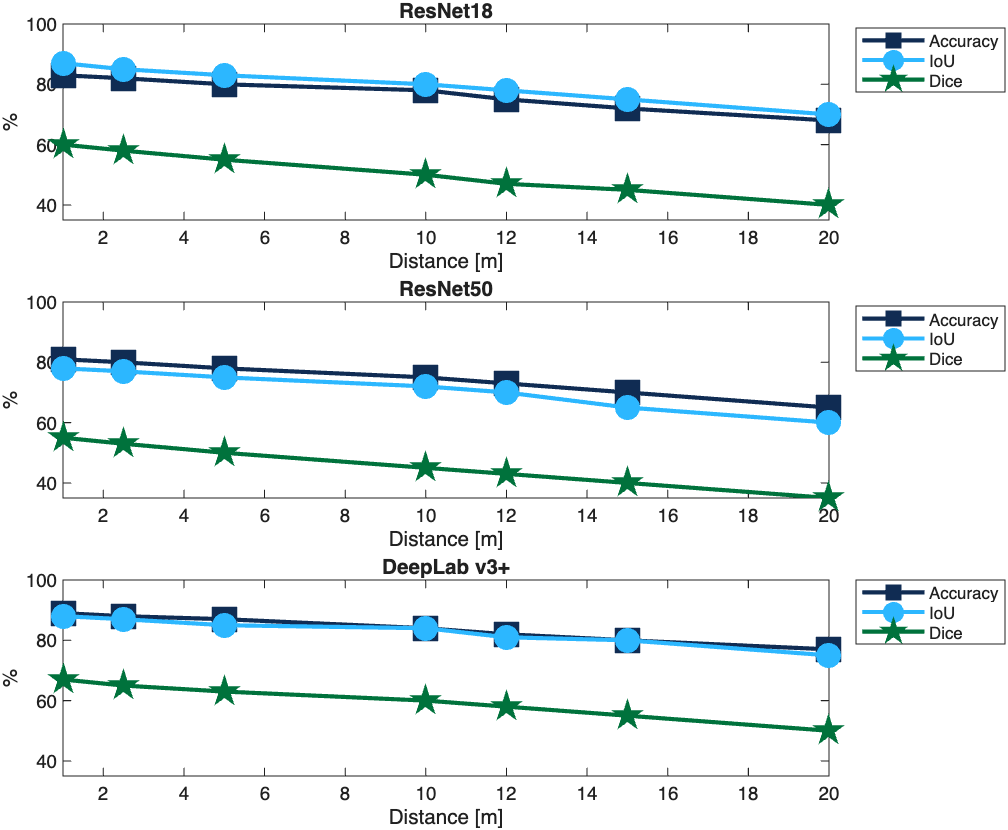

Reliable operation of wearable health monitoring systems is increasingly challenged by spectral congestion in the ubiquitous 2.4 GHz Industrial, Scientific, and Medical (ISM) band. This paper, ‘RF Intelligence for Health: Classification of SmartBAN Signals in overcrowded ISM band’, introduces a deep learning framework designed to classify weak Body Area Network (BAN) signals accurately amid significant interference. Using synthetic spectrograms together with real-world radio frequency (RF) captures acquired via Software-Defined Radios, the approach achieves over 90% accuracy with ResNet-U-Net architectures enhanced by attention mechanisms. Could this framework pave the way for more robust and dependable wireless healthcare solutions in densely populated spectral environments?

Decoding the Wireless Health Challenge

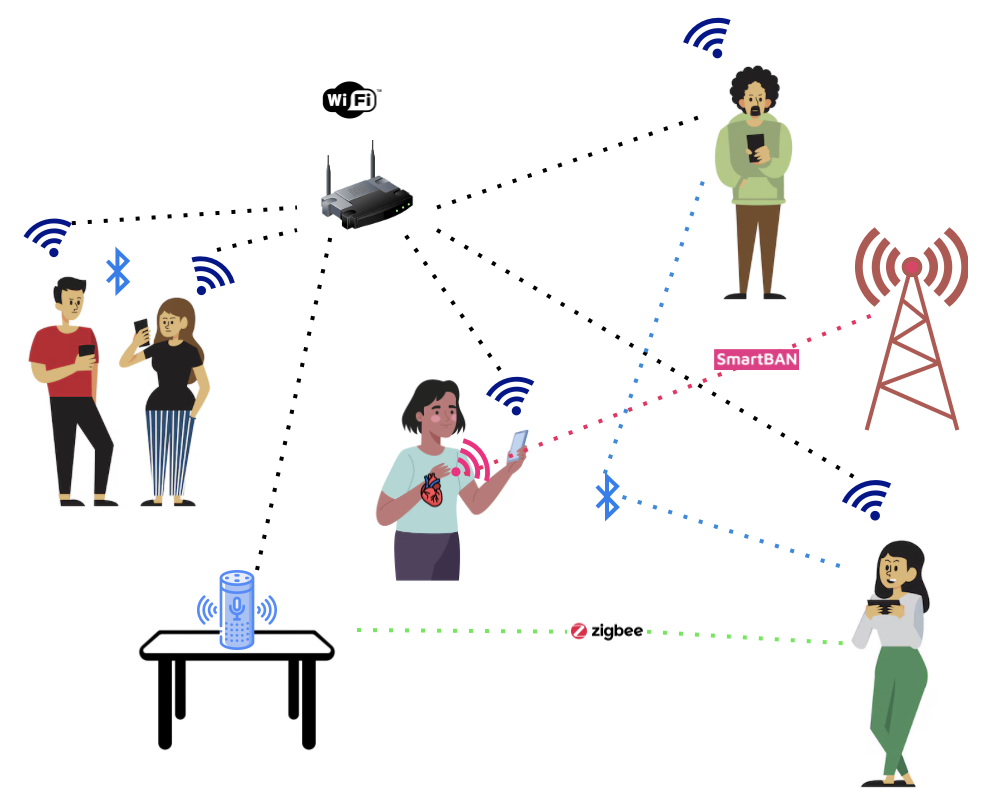

Body Area Networks represent a significant advancement in personalized healthcare, envisioning a future where continuous physiological monitoring becomes commonplace. These wireless networks are worn on the body and can track vital signs, activity levels, and other health indicators with unprecedented granularity. This promise is currently constrained by their reliance on the ISM radio band. Because the ISM band is open to license-exempt use, it is now heavily populated by a growing number of devices, including Wi-Fi routers, Bluetooth accessories, and ZigBee-enabled smart home systems. Body Area Networks transmit at relatively low power, so this congestion leaves them especially susceptible to interference, which can compromise the reliability and accuracy of the collected health data. Overcoming these spectrum limitations is therefore critical to realizing the full potential of continuous, wireless health monitoring.

The proliferation of wireless technologies has created a fiercely competitive radio frequency environment, particularly within the ISM band used by many health monitoring devices. Wi-Fi, Bluetooth, and ZigBee all compete for the same limited spectrum, and the resulting interference directly degrades the reliability of data transmitted from body area networks. Congestion shows up as dropped packets, corrupted data, and inconsistent readings. These are critical flaws when monitoring vital signs or delivering time-sensitive medical alerts. The increasing density of wireless signals is therefore a substantial hurdle to the widespread adoption of continuous, real-time health monitoring, and new ways are needed to cope with interference and ensure data integrity.
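A small calculation makes the congestion concrete. It is a hedged illustration and does not follow the SmartBAN specification. It assumes a BLE-style grid of forty 2 MHz channels centred at 2402 + 2k MHz as a stand-in for a narrowband BAN channel plan. It also treats the three commonly used non-overlapping Wi-Fi channels 1, 6, and 11 as 20 MHz wide around their nominal centres of 2407 + 5n MHz. All names below are hypothetical.

```python
# Illustrative 2.4 GHz channel-overlap count. All channel parameters are
# assumptions for this sketch: a BLE-style grid of 40 narrowband channels
# (2 MHz wide, centred at 2402 + 2k MHz) and Wi-Fi channels modelled as
# 20 MHz wide around their nominal centres (2407 + 5n MHz).
NARROW_BW_MHZ = 2.0
WIFI_BW_MHZ = 20.0


def narrowband_centres(n_channels: int = 40) -> list:
    """Centre frequencies (MHz) of the assumed 2 MHz narrowband grid."""
    return [2402.0 + 2.0 * k for k in range(n_channels)]


def wifi_centre(channel: int) -> float:
    """Nominal centre (MHz) of 2.4 GHz Wi-Fi channel 1..13."""
    return 2407.0 + 5.0 * channel


def overlaps(c1: float, bw1: float, c2: float, bw2: float) -> bool:
    """True if two bands share spectrum; bands that only touch at an edge do not."""
    return c1 + bw1 / 2 > c2 - bw2 / 2 and c1 - bw1 / 2 < c2 + bw2 / 2


def interference_free(wifi_channels=(1, 6, 11)) -> list:
    """Narrowband channels that overlap none of the given Wi-Fi channels."""
    return [
        c
        for c in narrowband_centres()
        if not any(overlaps(c, NARROW_BW_MHZ, wifi_centre(w), WIFI_BW_MHZ) for w in wifi_channels)
    ]


if __name__ == "__main__":
    free = interference_free()
    print(f"{len(free)} of 40 narrowband channels avoid Wi-Fi 1/6/11: {free}")
```

Under these assumptions only 8 of the 40 narrowband channels avoid all three Wi-Fi channels: 2424, 2426, 2448, 2450, and 2474 to 2480 MHz. That count does not yet include Bluetooth or ZigBee traffic.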

Conventional signal classification techniques, such as Support Vector Machines trained on hand-engineered features, often falter in the chaotic radio environment of wireless health monitoring. These methods work well in simpler scenarios. They struggle, however, to separate weak BAN transmissions from the pervasive, constantly shifting interference generated by Wi-Fi, Bluetooth, and other devices sharing the ISM band. This interference is non-stationary: signal strengths, frequencies, and occupancy patterns change rapidly. Fixed feature sets with comparatively simple (often linear) decision boundaries cannot capture that variability. The result is frequent misclassification, which undermines the reliability of the monitoring link. Robust and accurate wireless health solutions therefore need signal processing approaches that overcome these limitations and protect the integrity of vital patient information.

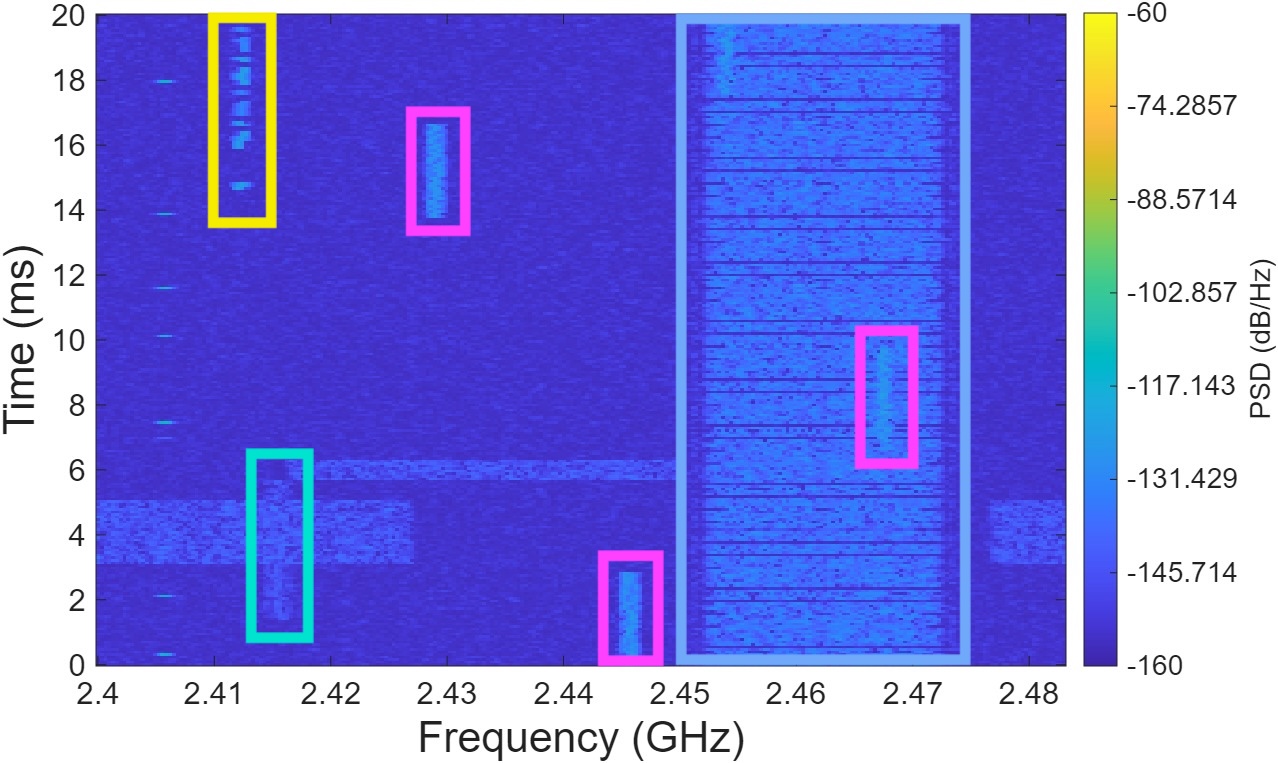

Visualizing Signal Clarity: Spectrogram Segmentation

Spectrogram segmentation has two stages. First, a one-dimensional raw signal is converted into a two-dimensional image called a spectrogram. Here the signal is the sampled RF stream, but the same idea applies to audio, seismic, or other time-series data. Second, each time-frequency region of that image is labeled by signal type. The conversion uses the Short-Time Fourier Transform (STFT), which computes the spectrum of short, overlapping windows of the signal. Stacking these spectra gives an image whose axes are time and frequency and whose pixel intensity is signal power. This visual format makes image-based analysis techniques available, revealing patterns that can be obscured in the raw samples. The approach is particularly useful for non-stationary signals whose frequency content changes over time.
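The STFT step can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' preprocessing. The window length, hop size, Hann window, dB floor, and the function name `spectrogram_db` are all assumptions chosen for readability.

```python
import numpy as np


def spectrogram_db(x: np.ndarray, nperseg: int = 256, hop: int = 128) -> np.ndarray:
    """Power spectrogram in dB via a Hann-windowed STFT.

    Rows are frequency bins (DC in the middle, negative frequencies below it),
    columns are time frames. Works for complex IQ samples or real signals.
    """
    if len(x) < nperseg:
        raise ValueError("signal shorter than one STFT window")
    window = np.hanning(nperseg)
    n_frames = 1 + (len(x) - nperseg) // hop
    frames = np.stack([x[i * hop : i * hop + nperseg] * window for i in range(n_frames)])
    spectra = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)  # (time, freq)
    power = np.abs(spectra.T) ** 2                                  # (freq, time)
    return 10.0 * np.log10(power + 1e-12)                           # small floor avoids log(0)


if __name__ == "__main__":
    fs = 256e3                                  # hypothetical sample rate (Hz)
    t = np.arange(4096) / fs
    x = np.exp(2j * np.pi * 32e3 * t)           # complex tone at +32 kHz
    S = spectrogram_db(x)
    print(S.shape)                              # (256, 31)
    print(S.argmax(axis=0)[:3])                 # tone sits in bin 128 + 32 = 160
```

For complex IQ samples from an SDR, the full FFT is used rather than a one-sided real FFT. The reason is that positive and negative frequency offsets can carry different signals.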

Convolutional Neural Networks (CNNs) analyze spectrogram images by learning hierarchical feature representations automatically. Convolutional layers slide learnable filters across the spectrogram to detect local patterns such as narrowband tones, harmonic structures, or transient bursts. Pooling layers then reduce spatial resolution and make the features more tolerant of small shifts in time and frequency. In a whole-image classifier, the resulting features feed fully connected layers. In a segmentation network, they are instead decoded back into a per-pixel label map. Either way, the network learns its features from data rather than relying on manual feature engineering, which lets it capture complex patterns that are hard to specify with traditional signal processing methods.
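Convolution, ReLU, and pooling can be shown on a toy spectrogram. The filter here is hand-set purely for illustration, whereas a trained CNN learns its filters. The 8x8 input and all function names are hypothetical.

```python
import numpy as np


def conv2d_valid(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2-D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = kernel.shape
    out_h, out_w = img.shape[0] - kh + 1, img.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(img[i : i + kh, j : j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def max_pool2(x: np.ndarray) -> np.ndarray:
    """2x2 max pooling with stride 2; an odd trailing row/column is dropped."""
    h, w = (x.shape[0] // 2) * 2, (x.shape[1] // 2) * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


# Toy 8x8 "spectrogram" (rows = frequency, columns = time) containing one
# persistent narrowband tone in frequency row 4.
spec = np.zeros((8, 8))
spec[4, :] = 1.0

# Hand-set filter that responds to horizontal lines, i.e. energy that stays in one
# frequency bin over time. A trained CNN would learn filters like this from data.
tone_filter = np.array([[-1.0, -1.0, -1.0],
                        [ 2.0,  2.0,  2.0],
                        [-1.0, -1.0, -1.0]])

features = max_pool2(relu(conv2d_valid(spec, tone_filter)))
print(features)   # middle row is 6.0, all other entries 0.0
```

The filter responds only where energy persists in one frequency row. Pooling keeps that response while halving the resolution.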

The proposed framework uses a ResNet as its encoder to extract robust features from spectrogram images. ResNet’s residual (identity shortcut) connections mitigate the vanishing gradient problem, making much deeper networks trainable and improving representation learning. A U-Net-style decoder then upsamples these features back to the input resolution. Skip connections carry high-resolution feature maps from each encoder stage directly to the matching decoder stage, restoring spatial detail lost during downsampling. The output is a per-pixel map that assigns each time-frequency cell to a signal class. The ResNet-U-Net combination therefore both identifies signal characteristics and localizes them precisely within the spectrogram.
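The data flow of a ResNet encoder feeding a U-Net decoder can be sketched with NumPy if the layers are simplified. In this hypothetical sketch:

- 1x1 convolutions stand in for the 3x3 convolutions a real ResNet would use.
- Average pooling and nearest-neighbour upsampling stand in for strided and transposed convolutions.
- The weights are random rather than trained.
- The three output classes are an assumption.

The point is only to show where the residual shortcuts and the skip connections sit.

```python
import numpy as np


def conv1x1(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """1x1 convolution: per-pixel channel mixing. x: (C_in, H, W), w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """y = ReLU(x + F(x)); the identity shortcut gives gradients a direct path."""
    return relu(x + conv1x1(relu(conv1x1(x, w1)), w2))


def downsample(x):
    """2x2 average pooling (stand-in for a strided encoder stage)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def upsample(x):
    """Nearest-neighbour 2x upsampling (stand-in for a transposed convolution)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def decoder_step(deep, skip, w):
    """U-Net step: upsample, concatenate the encoder's skip features, mix channels."""
    return relu(conv1x1(np.concatenate([upsample(deep), skip], axis=0), w))


def tiny_resnet_unet(spec, n_classes=3, channels=8, seed=0):
    """Untrained two-level forward pass returning (class_logits, per-pixel mask)."""
    rng = np.random.default_rng(seed)

    def rand(*shape):
        return 0.1 * rng.standard_normal(shape)

    x = conv1x1(spec[None, :, :], rand(channels, 1))             # lift 1 -> C channels
    e1 = residual_block(x, rand(channels, channels), rand(channels, channels))
    e2 = residual_block(downsample(e1), rand(channels, channels), rand(channels, channels))
    d1 = decoder_step(e2, e1, rand(channels, 2 * channels))       # skip connection from e1
    logits = conv1x1(d1, rand(n_classes, channels))
    return logits, logits.argmax(axis=0)
```

Calling `tiny_resnet_unet(spec)` on a 32x32 spectrogram returns logits of shape (3, 32, 32) and a 32x32 class mask, one class decision per time-frequency cell. Setting a residual block's weights to zero reduces it to ReLU(x). This shows why stacking many such blocks need not hurt optimization.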

Generating Reality: Synthetic Data and Validation

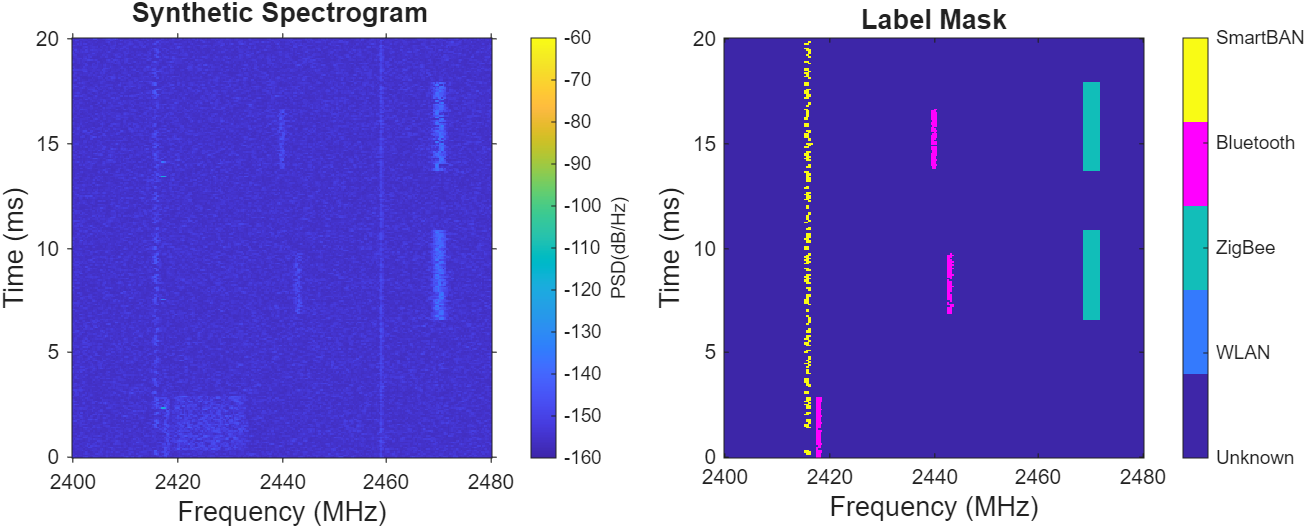

Synthetic spectrograms serve as the primary training data for our deep learning models, because sufficiently diverse and labeled real-world radio frequency (RF) data is hard to acquire. These spectrograms are not pre-recorded signals. They are generated computationally through channel modeling, which mathematically simulates how RF signals propagate from a transmitter to a receiver, including environmental factors and impairments. The generator knows exactly which signal occupies each time-frequency cell, so it can produce large datasets with precise labels for controlled experiments. Such datasets are essential for training and validating the models. Varying the channel-model parameters yields a broad range of simulated scenarios, improving the robustness and generalization of the trained models.
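A labeled synthetic example can be produced by mixing known signals and writing their time-frequency footprint into a mask at the same time. The sketch below is an assumption-laden illustration, not the paper's generator. The following are all hypothetical:

- the 20 MHz sample rate;
- a tone burst standing in for a SmartBAN packet;
- band-limited noise standing in for Wi-Fi;
- the SNR values, class ids, and function names.

The channel model is reduced to a single flat Rayleigh gain per burst.

```python
import numpy as np

FS = 20e6      # hypothetical complex sample rate (Hz)
NFFT = 256     # STFT size; non-overlapping frames keep labels aligned with frames
BACKGROUND, BAN, INTERFERER = 0, 1, 2   # hypothetical class ids


def stft_db(x):
    """Non-overlapping Hann-windowed STFT in dB, shape (NFFT, n_frames), DC centred."""
    n_frames = len(x) // NFFT
    frames = x[: n_frames * NFFT].reshape(n_frames, NFFT) * np.hanning(NFFT)
    spec = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    return 10 * np.log10(np.abs(spec.T) ** 2 + 1e-12)


def band_limited_noise(rng, n, f_lo, f_hi):
    """Unit-power complex noise confined to [f_lo, f_hi] Hz by zeroing FFT bins."""
    spec = np.fft.fft(rng.standard_normal(n) + 1j * rng.standard_normal(n))
    f = np.fft.fftfreq(n, 1 / FS)
    spec[(f < f_lo) | (f > f_hi)] = 0
    out = np.fft.ifft(spec)
    return out / np.sqrt(np.mean(np.abs(out) ** 2))


def random_span(rng, n_frames):
    """Random [start, stop) frame interval covering at least one frame."""
    a, b = sorted(int(v) for v in rng.integers(0, n_frames, size=2))
    return a, b + 1


def make_example(rng, n_frames=64, ban_snr_db=0.0, interferer_snr_db=15.0):
    """Return (spectrogram_db, label_mask), both shaped (NFFT, n_frames)."""
    n = n_frames * NFFT
    t = np.arange(n) / FS
    bin_freqs = np.fft.fftshift(np.fft.fftfreq(NFFT, 1 / FS))   # Hz of each spectrogram row
    x = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2)  # unit-power AWGN
    label = np.full((NFFT, n_frames), BACKGROUND, dtype=np.int8)

    # Strong wideband interferer (a crude Wi-Fi stand-in, 8 MHz wide).
    fc = rng.uniform(-6e6, 6e6)
    a, b = random_span(rng, n_frames)
    x[a * NFFT : b * NFFT] += 10 ** (interferer_snr_db / 20) * band_limited_noise(
        rng, (b - a) * NFFT, fc - 4e6, fc + 4e6)
    label[np.abs(bin_freqs - fc) <= 4e6, a:b] = INTERFERER

    # Weak narrowband burst (a crude SmartBAN stand-in) through a flat Rayleigh gain.
    f_ban = rng.uniform(-9e6, 9e6)
    c, d = random_span(rng, n_frames)
    h = (rng.standard_normal() + 1j * rng.standard_normal()) / np.sqrt(2)
    seg = slice(c * NFFT, d * NFFT)
    x[seg] += h * 10 ** (ban_snr_db / 20) * np.exp(2j * np.pi * f_ban * t[seg])
    label[np.abs(bin_freqs - f_ban) <= 2 * FS / NFFT, c:d] = BAN  # drawn last, wins overlaps

    return stft_db(x), label
```

The mask is derived from the generator's own parameters, so every pixel label is exact. This is the main advantage of synthetic data described above.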

Signal propagation is simulated with fading models that capture the variability of wireless channels. Rayleigh fading models signals arriving over many paths with no dominant component, which is characteristic of heavily scattered environments. Rician fading adds a distinct line-of-sight component to the multipath reflections. Its K-factor, the ratio of line-of-sight power to scattered power, is non-zero, and K = 0 reduces to Rayleigh fading. These models introduce statistical variations in signal amplitude and phase that reproduce the effect of multipath propagation. Making the fading time-varying adds Doppler spread. Large-scale shadowing is usually modeled separately, for example with a log-normal term. Together these produce synthetic data that mirrors the complexity of real RF environments. The parameters of these distributions, including the Rician K-factor and the statistics of the channel impulse response, are crucial for generating realistic and representative datasets.
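The Rician model and its Rayleigh special case can be written down directly. This sketch makes the following assumptions:

- Block fading, with one gain per burst.
- Unit average channel power.
- Illustrative function names and log-normal shadowing parameter.

Time correlation between samples (Doppler) is deliberately not modeled.

```python
import numpy as np


def rician_gains(k_factor: float, n: int, rng: np.random.Generator) -> np.ndarray:
    """n complex channel gains with a Rician envelope and E[|h|^2] = 1.

    h = sqrt(K / (K + 1)) * exp(j*phi) + sqrt(1 / (K + 1)) * CN(0, 1),
    where K is the line-of-sight-to-scattered power ratio (K-factor).
    K = 0 removes the line-of-sight term, i.e. Rayleigh fading.
    """
    phi = rng.uniform(0.0, 2.0 * np.pi)                          # random LOS phase
    los = np.sqrt(k_factor / (k_factor + 1.0)) * np.exp(1j * phi)
    scatter = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) / np.sqrt(2.0)
    return los + np.sqrt(1.0 / (k_factor + 1.0)) * scatter


def apply_block_fading(x: np.ndarray, k_factor: float, shadow_sigma_db: float,
                       rng: np.random.Generator) -> np.ndarray:
    """One fading gain per burst (block fading) times a separate log-normal shadowing term."""
    h = rician_gains(k_factor, 1, rng)[0]
    shadow_amp = 10.0 ** (rng.normal(0.0, shadow_sigma_db) / 20.0)  # dB -> amplitude
    return shadow_amp * h * x
```

With K = 0 the line-of-sight term vanishes and the envelope |h| is Rayleigh distributed. As K grows, the envelope concentrates around 1 and deep fades become rarer.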

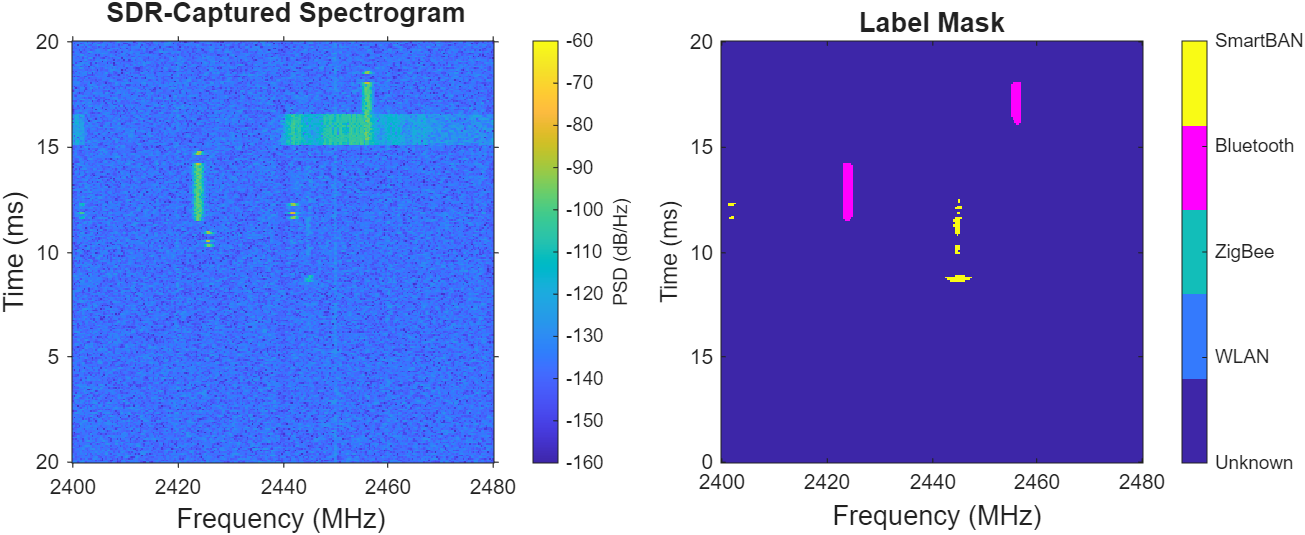

Synthetic data generation is validated and refined against real-world signal captures made with Software-Defined Radios (SDRs), specifically the ADALM-Pluto platform. The captures serve as ground truth for assessing the fidelity of the simulated channel models. Statistical properties, including power spectral density and time-domain characteristics, are compared between the synthetic and captured data. Discrepancies are then used to adjust simulation parameters such as fading characteristics and noise profiles. Iterating this loop strengthens the correlation between the two and narrows the domain gap between synthetic and real-world training data. Augmenting training with real-world captures further improves the generalization and robustness of the models when deployed in actual environments.
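One simple way to quantify the synthetic-versus-captured comparison is to compare power spectral densities. The sketch assumes both recordings are complex baseband IQ arrays at the same sample rate. In practice the captured array would come from the ADALM-Pluto, for example through Analog Devices' pyadi-iio Python package, but any NumPy array will do here. The Welch estimator settings, the mean-removed RMS metric, and the function names are illustrative choices, not the paper's procedure.

```python
import numpy as np


def welch_psd_db(x: np.ndarray, nfft: int = 256) -> np.ndarray:
    """Welch PSD estimate (Hann window, 50% overlap) in dB, DC-centred bins."""
    win = np.hanning(nfft)
    hop = nfft // 2
    n_seg = 1 + (len(x) - nfft) // hop
    segs = np.stack([x[i * hop : i * hop + nfft] * win for i in range(n_seg)])
    psd = np.mean(np.abs(np.fft.fft(segs, axis=1)) ** 2, axis=0) / np.sum(win ** 2)
    return 10.0 * np.log10(np.fft.fftshift(psd) + 1e-20)


def psd_mismatch_db(synthetic: np.ndarray, captured: np.ndarray, nfft: int = 256) -> float:
    """RMS dB difference between PSD shapes after removing each one's mean level.

    Removing the mean ignores the overall gain, which is usually not
    calibrated on a low-cost SDR front end.
    """
    a = welch_psd_db(synthetic, nfft)
    b = welch_psd_db(captured, nfft)
    diff = (a - a.mean()) - (b - b.mean())
    return float(np.sqrt(np.mean(diff ** 2)))
```

A small mismatch means the simulated spectrum has the right shape. A large one points to the parameter to adjust in the next iteration, such as bandwidth, noise floor, or interferer level.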

Attention mechanisms are incorporated into the network so that it can focus selectively on the most relevant portions of the input spectrogram. The network computes a weight for each location from a weighted combination of its features and then rescales the features by those weights, prioritizing spatially distinct signal characteristics. It assigns higher weights to areas containing subtle features, such as weak, low-power transmissions or short transients that noise or dominant signals could otherwise obscure. This lets the network detect and classify those features more reliably. The sharper spatial awareness yields more precise feature extraction and higher detection accuracy, particularly in challenging signal environments.
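One widely used form of spatial attention is the additive attention gate popularized by Attention U-Net. Whether the paper uses this exact variant is not stated here, so treat the following as an assumption-based sketch. The gate projects encoder features and decoder context with 1x1 convolutions and adds them. It then squashes the result to a per-pixel weight in (0, 1) that rescales the encoder features. Biases and resampling are omitted, and all names are hypothetical.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def attention_gate(x, g, w_x, w_g, psi):
    """Additive spatial attention gate in the style of Attention U-Net (1x1 convs, no biases).

    x:   skip features from the encoder, shape (C_x, H, W)
    g:   gating features from a coarser decoder level, resized to (C_g, H, W)
    w_x: (C_int, C_x) and w_g: (C_int, C_g) project both inputs to C_int channels
    psi: (C_int,) collapses the joint features to one score per pixel
    Returns (gated features, attention map with values in (0, 1)).
    """
    joint = np.einsum("ic,chw->ihw", w_x, x) + np.einsum("ic,chw->ihw", w_g, g)
    alpha = sigmoid(np.einsum("i,ihw->hw", psi, np.maximum(joint, 0.0)))
    return x * alpha[None, :, :], alpha


# Toy usage: uniform encoder features, decoder context that highlights one cell.
x = np.ones((1, 4, 5))
g = np.zeros((1, 4, 5))
g[0, 2, 3] = 1.0
gated, alpha = attention_gate(x, g, np.array([[0.0]]), np.array([[1.0]]), np.array([10.0]))
print(np.round(alpha, 3))   # 0.5 everywhere except about 1.0 at row 2, column 3
```

Cells that the gate scores highly pass through almost unchanged, while uninformative cells are attenuated. This is how weak but relevant bursts can stand out against a busy background.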

Towards Reliable Wireless Health: A Vision Realized

The proliferation of wireless devices operating within the ISM band creates a congested radio frequency environment that often hinders reliable data transmission from Body Area Networks (BANs) used in health monitoring. To address this, a spectrogram segmentation framework was developed to classify these extremely low-power signals accurately, distinguishing them from background noise and interference. The framework transforms raw radio signals into spectrograms, which are then segmented and analyzed with deep learning. By precisely identifying and categorizing the relevant signals, the system can help reduce data loss and improve the integrity of wirelessly transmitted health data. This paves the way for more consistent and dependable remote patient monitoring.

The advancement of reliable wireless health monitoring holds significant promise for transforming preventative care and disease management. Continuous monitoring systems can capture physiological data consistently and accurately, from heart rate variability to subtle changes in movement. This lets them detect anomalies indicative of developing conditions much earlier than traditional episodic assessments. Such a proactive approach facilitates timely interventions, potentially slowing disease progression or even preventing acute events. Patients can therefore benefit from better outcomes, lower healthcare costs, and a higher quality of life as healthcare shifts from reactive treatment to proactive wellness management. The ability to discern critical health signals amid wireless interference is a key step towards this future, offering the potential for personalized, data-driven healthcare delivered seamlessly and continuously.

The framework reliably identifies signals from low-power SmartBAN body area networks, consistently achieving over 90% classification accuracy. This performance was validated in two stages. Synthetic data first established baseline metrics, and real-world captures obtained with Software-Defined Radios (SDRs) then confirmed them. The consistency between the two datasets underscores the robustness of the methodology and its ability to work in practical, often noisy, environments. This level of accurate signal identification is crucial for ensuring data integrity and enabling dependable wireless health monitoring systems.
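The article does not specify exactly how the 90% figure is computed. The following is therefore only a sketch of two standard segmentation metrics, with hypothetical function names. It also shows why per-class scores matter on sparse spectrograms: when the weak signal occupies few cells, a model that predicts background everywhere can still post high pixel accuracy.

```python
import numpy as np


def pixel_accuracy(pred: np.ndarray, target: np.ndarray) -> float:
    """Fraction of time-frequency cells whose predicted class matches the label."""
    return float(np.mean(pred == target))


def per_class_iou(pred: np.ndarray, target: np.ndarray, n_classes: int) -> list:
    """Intersection-over-union per class; NaN for a class absent from both maps."""
    ious = []
    for c in range(n_classes):
        inter = np.sum((pred == c) & (target == c))
        union = np.sum((pred == c) | (target == c))
        ious.append(float(inter) / float(union) if union else float("nan"))
    return ious


# A sparse example: 2 of 16 cells hold the weak signal (class 1), the rest is background.
target = np.zeros((4, 4), dtype=int)
target[1, 1:3] = 1
pred = np.zeros_like(target)            # a model that always predicts background

print(pixel_accuracy(pred, target))     # 0.875
print(per_class_iou(pred, target, 2))   # [0.875, 0.0]
```

Reporting intersection-over-union (IoU) for the signal class alongside overall accuracy guards against this failure mode.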

Ongoing development centers on refining the Spectrogram Segmentation framework for deployment on resource-constrained, low-power devices commonly used in Body Area Networks. This optimization will involve techniques such as model quantization, pruning, and knowledge distillation, aiming to reduce computational demands and energy consumption without significantly impacting classification accuracy. Beyond the initial focus on SmartBAN signals, researchers are actively investigating the model’s adaptability to a broader spectrum of wireless health applications, including remote patient monitoring, wearable sensor networks for chronic disease management, and even emergency medical services communication. This expansion seeks to establish a versatile and resilient signal classification solution capable of enhancing the reliability and effectiveness of diverse wireless health systems, ultimately contributing to more proactive and personalized healthcare.
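Of the compression techniques listed, post-training quantization is the simplest to illustrate. The sketch below shows symmetric per-tensor int8 quantization of a random weight matrix. The layer size, the function names, and the choice of per-tensor rather than per-channel scaling are assumptions. A deployed model would also need quantized activations and integer kernels.

```python
import numpy as np


def quantize_int8(w: np.ndarray):
    """Symmetric per-tensor post-training quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * np.float32(scale)


rng = np.random.default_rng(0)
weights = rng.standard_normal((64, 64)).astype(np.float32)   # stand-in for one layer's weights
q, scale = quantize_int8(weights)
err = float(np.max(np.abs(dequantize(q, scale) - weights)))
print(weights.nbytes, q.nbytes)          # 16384 4096
print(f"max abs error {err:.4f} (bound: scale/2 = {scale / 2:.4f})")
```

Storage drops by a factor of four relative to float32, and each weight's rounding error stays within half a quantization step. Whether classification accuracy survives still has to be checked on real spectrograms.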

The development of intelligent and resilient wireless health systems promises a transformative impact on global well-being. By enabling consistently reliable data transmission from Body Area Networks, this work directly addresses a critical limitation in current remote patient monitoring. Such advancements extend beyond simple data collection, fostering proactive healthcare through continuous physiological assessment and early anomaly detection. This capability not only empowers individuals to take greater control of their health but also allows medical professionals to intervene swiftly, potentially preventing acute episodes and improving long-term outcomes for millions facing chronic conditions or requiring post-operative care. Ultimately, a more robust and dependable infrastructure for wireless health monitoring represents a significant step towards a future where healthcare is more accessible, preventative, and personalized.

The pursuit of signal classification, as this work demonstrates, rewards stripping away unnecessary complexity. The framework navigates the overcrowded ISM band not through hand-crafted signal-processing pipelines but through a focused application of deep learning principles. This echoes a sentiment often attributed to Carl Friedrich Gauss: “If others would think as hard as I do, they would not have so many questions.” Discerning weak SmartBAN signals amid interference does not depend on elaborate, manually tuned processing. It stems from a fundamental understanding of the underlying data, mirroring Gauss’s emphasis on clarity and rigorous thought. The model’s robust performance comes from distilling meaningful information from the spectrogram representation, a testament to the power of elegant solutions.

Beyond the Signal

This work addresses signal classification. It is a necessary, but not sufficient, step. Abstractions age, principles don’t. The true challenge lies not in identifying a signal, but in interpreting its meaning within a chaotic environment. Current deep learning approaches excel at pattern recognition, yet lack contextual understanding.

Future research must move beyond simple classification. It needs to explore methods for robust signal extraction from extremely low signal-to-noise ratios. Every complexity needs an alibi. The ISM band will only become more crowded. Mitigation strategies focused on interference cancellation are less scalable than strategies that embrace, and intelligently filter, the noise itself.

Ultimately, the goal isn’t just to detect health signals. It is to infer physiological state. This demands a shift toward incorporating prior knowledge and probabilistic reasoning into the framework. The current paradigm favors data volume over data quality. A more parsimonious approach, focused on fundamental signal characteristics, will prove more resilient, and more useful, in the long run.

Original article: https://arxiv.org/pdf/2601.15836.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-24 13:56