Author: Denis Avetisyan

A new approach leverages the relationships between borrowers and financial products to improve credit default prediction, moving beyond traditional scoring methods.

This review explores the use of heterogeneous graph neural networks and hybrid ensemble learning for enhanced accuracy, fairness, and interpretability in credit risk assessment.

While traditional credit scoring models excel at leveraging tabular data, they often fail to capture the complex relational dependencies inherent in financial networks. This research, ‘Relational Graph Modeling for Credit Default Prediction: Heterogeneous GNNs and Hybrid Ensemble Learning’, explores the use of heterogeneous graph neural networks to model these relationships, integrating borrower attributes with detailed transaction histories. Results demonstrate that combining graph-derived customer embeddings with established gradient-boosted trees yields superior predictive performance, improving both ROC-AUC and PR-AUC, but necessitates careful consideration of fairness and model interpretability. How can we best leverage relational modeling to build more robust and equitable credit risk assessments?

The Interconnectedness of Financial Risk

The stability of financial systems hinges on the accurate anticipation of loan defaults, yet conventional credit risk models often fall short when faced with the intricacies of modern borrower relationships. These traditional approaches typically analyze individual credit histories in isolation, neglecting the crucial influence of interconnectedness – where a borrower’s risk is not solely determined by their own profile, but also by the financial health of their associates, partners, and even co-applicants. This oversight proves particularly problematic in environments with complex lending networks, where distress can propagate rapidly through seemingly unrelated individuals. Consequently, a failure to adequately model these relationships can lead to systemic underestimation of risk, potentially triggering broader financial instability and necessitating more robust, network-aware predictive methodologies.

Conventional credit risk assessments frequently depend on static borrower characteristics – age, income, credit history – yet these snapshots fail to capture the dynamic and interconnected nature of financial behavior. This reliance on limited features overlooks crucial contextual information, such as recent transaction patterns, the borrower’s position within a network of related applicants, and external economic indicators. A purely static approach treats each application in isolation, neglecting the influence of broader financial ecosystems and the potential for risk contagion. Consequently, models built on these foundations often struggle to accurately predict default, particularly in complex lending environments where relationships and external factors significantly impact repayment ability. The omission of this vital contextual data represents a fundamental limitation in achieving robust and reliable credit risk prediction.

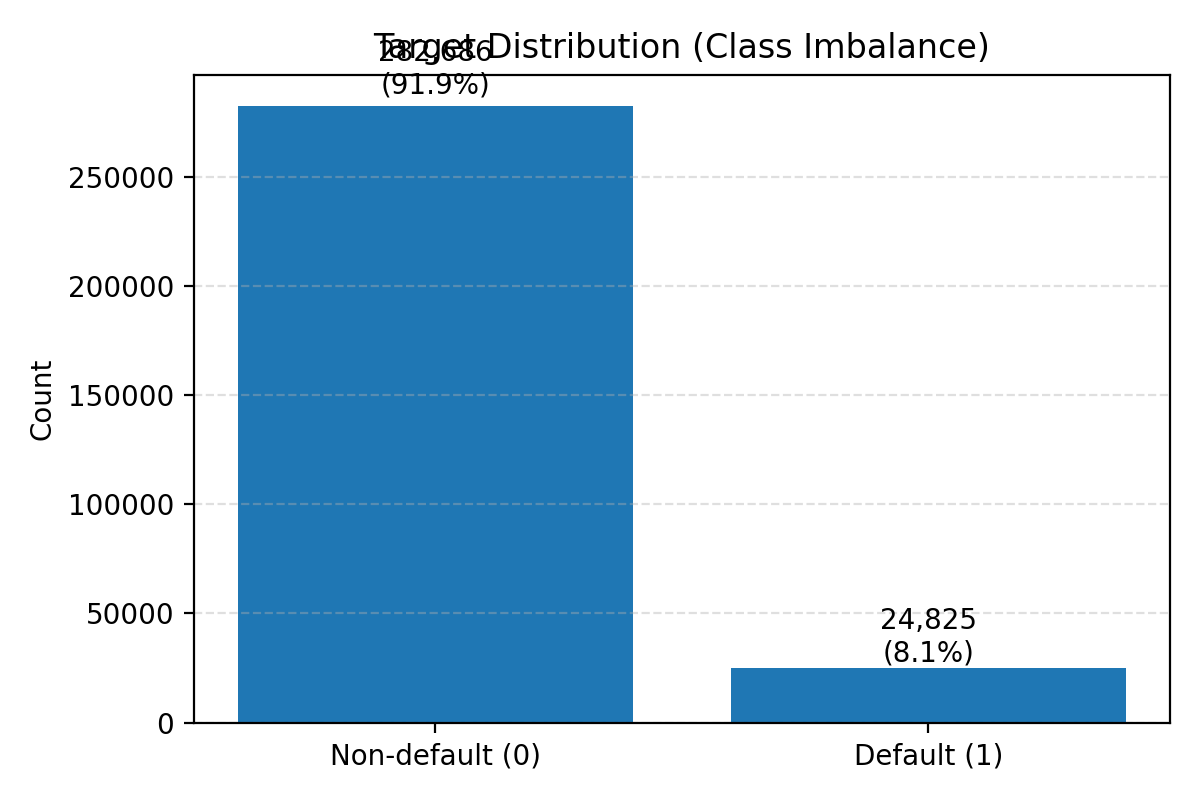

The Home Credit Default Risk dataset has emerged as a significant benchmark in the field of credit risk modeling due to its unprecedented scale and structural complexity. Containing over a million loan applications with hundreds of features, it dwarfs many previously used datasets, demanding substantial computational resources for analysis. Beyond sheer size, the dataset’s intricacy stems from its diverse data types – encompassing application details, credit bureau information, and loan performance – each requiring specialized processing. Critically, the dataset includes related applicant data, such as family relationships, which introduces challenges in modeling interconnectedness and potential group behavior. This multifaceted structure necessitates advanced machine learning techniques capable of handling high dimensionality, heterogeneous data, and complex relationships, making it a robust testbed for evaluating the performance and generalizability of new credit risk prediction models.

Conventional approaches to credit risk assessment frequently overlook the subtle interdependencies within borrower networks and the wealth of information embedded in associated data. These methods typically treat each applicant in isolation, failing to recognize that a borrower’s risk profile is often influenced by their relationships with other applicants – for instance, co-applicants or those sharing common loan characteristics. Furthermore, valuable predictive signals are often concealed within auxiliary data, such as previous loan histories, application details, and behavioral patterns, which are not fully leveraged by traditional models. This inability to discern nuanced patterns hinders accurate risk prediction, potentially leading to both increased financial losses for lenders and restricted access to credit for deserving applicants. Consequently, research is increasingly focused on developing more sophisticated techniques capable of capturing these complex relationships and unlocking the predictive power of previously untapped data sources.

Modeling Relationships with Graph Neural Networks

Borrower relationships extend beyond simple connections and encompass diverse roles – applicants, guarantors, co-signers, and entities like lenders and credit bureaus. A Heterogeneous Graph explicitly models these varying relationship types as distinct edge types connecting nodes representing each entity. This contrasts with homogeneous graphs which treat all connections equally. By representing the borrower network as a heterogeneous graph, Graph Neural Networks (GNNs) can leverage this structural information to differentiate between the significance of various connections, enabling more nuanced and accurate risk assessment and fraud detection compared to traditional methods that rely on aggregated or simplified network representations. The nodes represent individual actors or entities, while the edges define the specific relationship between them, providing a richer contextual understanding for downstream modeling tasks.

The Relation-Aware Attentive Heterogeneous GNN architecture addresses the complexities of modeling borrower relationships represented as a heterogeneous graph by incorporating relation-specific attention mechanisms. These mechanisms allow the model to dynamically weigh the importance of different relationships – such as co-borrower, guarantor, or shared-address connections – when aggregating information for each node. Instead of treating all relationships equally, the attention weights are calculated based on the features of the connected nodes and the specific relation type. This process enables the GNN to prioritize salient connections, capturing nuanced interactions and improving the accuracy of learned node embeddings. The attention weights are computed using a feedforward neural network parameterized by the relation type, effectively learning a relation-specific transformation of node features prior to aggregation.

Contrastive learning, employed as a self-supervised pre-training technique, enhances the quality and generalization capability of Graph Neural Network (GNN) embeddings. This approach involves constructing positive and negative pairs of nodes within the heterogeneous graph; positive pairs represent semantically similar nodes based on graph structure and attributes, while negative pairs denote dissimilar nodes. The GNN is then trained to maximize the similarity of embeddings for positive pairs and minimize similarity for negative pairs, utilizing a loss function such as InfoNCE. By learning robust representations from unlabeled data through this contrastive objective, the GNN requires less labeled data for downstream tasks and demonstrates improved performance on unseen nodes and graph structures. This pre-training process effectively initializes the GNN’s weights, facilitating faster convergence and better generalization when fine-tuned on specific credit risk prediction tasks.

To effectively model borrower relationships within our heterogeneous graph, we implemented extensions to the GraphSAGE algorithm. These modifications enable GraphSAGE to operate on a graph containing multiple node and edge types, accommodating the diverse relationships present in our dataset. Specifically, we adapted the aggregation step to incorporate relation-specific transformations; different weight matrices are applied during message passing based on the type of edge connecting nodes. This allows the model to learn distinct representations for interactions representing different relationship types (e.g., co-application, shared address). Furthermore, we employed a multi-layer perceptron (MLP) to transform the aggregated features for each node, generating node embeddings optimized for downstream tasks. This approach maintains the scalability of GraphSAGE while enhancing its capacity to learn from complex, heterogeneous graph structures.

Synergistic Prediction: Combining GNNs and Gradient Boosting

The Hybrid Ensemble model combines the strengths of Graph Neural Networks (GNNs) and Gradient Boosted Decision Trees to enhance predictive capabilities. GNNs generate node embeddings that capture relational information within the input data, effectively representing network structures. These embeddings are then incorporated as features into a Gradient Boosted Decision Tree model, specifically utilizing LightGBM for its computational efficiency and scalability. This integration allows the model to leverage both the relational insights from the GNN and the non-linear feature interaction capabilities of the tree-based component, resulting in improved performance compared to models relying solely on tabular data.

The Hybrid Ensemble model leverages the complementary strengths of Graph Neural Networks (GNNs) and tree-based gradient boosting algorithms. GNNs are specifically designed to process data with inherent relational structures, effectively capturing dependencies between entities that traditional machine learning models may overlook. Conversely, gradient boosted decision trees, such as those implemented in LightGBM, demonstrate a strong capability in identifying and modeling complex non-linear interactions between individual features. By combining these approaches, the model benefits from both relational awareness and the ability to capture nuanced feature relationships, leading to improved predictive performance, particularly on datasets where both factors are significant.

The gradient boosting component of the Hybrid Ensemble utilizes LightGBM, a gradient boosting framework designed for efficiency and scalability. LightGBM employs a leaf-wise tree growth strategy, as opposed to the level-wise growth used in many other implementations, resulting in faster training speeds and reduced memory consumption. This implementation also incorporates techniques such as Gradient-based One-Side Sampling (GOSS) and Exclusive Feature Bundling (EFB) to further accelerate training, particularly on high-dimensional datasets. These optimizations allow for the effective handling of complex datasets and facilitate the rapid prototyping and deployment of the hybrid model.

Rigorous performance evaluation of the hybrid model was conducted using the Home Credit Default Risk dataset, yielding a Receiver Operating Characteristic Area Under the Curve (ROC-AUC) of 0.7816 and a Precision-Recall Area Under the Curve (PR-AUC) of 0.2807. These results demonstrate a measurable improvement over strong tabular baselines, specifically a LightGBM model which achieved a PR-AUC of 0.2540 on the same dataset. The use of both ROC-AUC and PR-AUC provides a comprehensive assessment of the model’s ability to discriminate between classes and accurately identify positive cases, respectively.

Responsible AI: Ensuring Fairness and Interpretability

A rigorous fairness audit forms a critical component in the development of this hybrid model, proactively identifying and addressing potential biases embedded within its predictive capabilities. This detailed assessment meticulously examines performance variations across distinct demographic groups, going beyond simple accuracy metrics to reveal disparities in outcomes. By quantifying these differences, researchers can pinpoint specific features or algorithmic components contributing to unfair predictions. Subsequent mitigation strategies, ranging from data re-balancing to algorithmic adjustments, are then implemented and re-evaluated to ensure equitable performance across all represented populations, fostering trust and responsible deployment in sensitive financial applications.

A critical component of responsible AI development involves a rigorous assessment of performance across diverse demographic groups. This audit meticulously examines whether the hybrid model exhibits disparities in accuracy, precision, or recall for different populations, identifying potential biases that could lead to inequitable financial outcomes. By quantifying these differences, researchers can pinpoint areas where the model systematically underperforms for specific groups, prompting targeted interventions and refinements to ensure fairness. Such analysis isn’t simply about achieving statistical parity; it’s about proactively mitigating the risk of perpetuating or amplifying existing societal inequalities through automated decision-making systems, fostering trust and promoting equitable access to financial services.

Explainability analysis serves as a critical component in validating the hybrid model’s reasoning, moving beyond simply assessing what it predicts to understanding why those predictions are made. Through techniques like feature importance ranking and sensitivity analysis, researchers dissect the model’s internal logic, identifying the key variables driving each decision. This process reveals potential biases or unexpected dependencies, fostering greater confidence in the model’s reliability and trustworthiness. Ultimately, this transparency isn’t merely an academic exercise; it’s essential for regulatory compliance in financial applications and, more importantly, for building user trust by demonstrating that the model operates on sound, interpretable principles – ensuring accountability and responsible deployment.

The successful integration of artificial intelligence into financial applications hinges not merely on predictive power, but on demonstrable responsibility. Rigorous fairness and explainability practices are therefore paramount, serving as essential safeguards against unintended consequences and fostering user trust. Without these considerations, models risk perpetuating existing societal biases, leading to discriminatory outcomes in areas like loan applications or credit scoring. Furthermore, a lack of transparency in algorithmic decision-making can erode public confidence and hinder the widespread adoption of these powerful tools. Consequently, prioritizing responsible AI isn’t simply an ethical imperative, but a practical necessity for building sustainable and equitable financial systems that benefit all stakeholders.

The pursuit of robust credit default prediction, as detailed in this research, echoes a fundamental principle of system design: structure dictates behavior. The modeling approach, leveraging heterogeneous graph neural networks and ensemble learning, isn’t merely about achieving higher accuracy; it’s about representing the complex relationships inherent in financial networks. As Robert Tarjan aptly stated, “Complexity is not necessarily a bad thing, but it must be managed.” This sentiment aligns directly with the need for careful attention to fairness and interpretability-managing the inherent complexity of these models to ensure responsible and ethical deployment. The research emphasizes that a truly effective system doesn’t just predict default; it understands the underlying connections driving risk, mirroring a holistic approach to system architecture.

What Lies Ahead?

The pursuit of improved credit risk assessment, as demonstrated by relational graph modeling, inevitably reveals the limitations inherent in seeking purely predictive power. While the fusion of graph neural networks with ensemble learning offers demonstrable gains, it does not, of itself, solve the fundamental problem: a model is only as trustworthy as the system it represents. The increasing complexity of these systems-heterogeneous graphs mirroring the tangled web of financial relationships-demands a corresponding rigor in interpretability and fairness evaluations. Simplification, in the form of feature selection or algorithmic shortcuts, always carries a cost, potentially obscuring biases or creating new vulnerabilities.

Future work must therefore shift beyond mere performance metrics. The field requires a more nuanced understanding of how these models arrive at their conclusions, not just that they do. A focus on causal inference, rather than purely correlational relationships, could offer a pathway towards more robust and reliable predictions. Furthermore, the integration of explainable AI (XAI) techniques is not merely a matter of compliance, but a necessity for building trust and ensuring responsible deployment in a domain where consequences are substantial.

Ultimately, the challenge lies not in building ever-more-intricate predictive engines, but in crafting systems that align with a broader understanding of economic stability and social equity. The elegance of a solution is not measured by its accuracy alone, but by its ability to reveal, rather than conceal, the underlying structure of the problem.

Original article: https://arxiv.org/pdf/2601.14633.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- Gold Rate Forecast

- RIVER Coin’s 1,200% Surge: A Tale of Hype and Hope 🚀💸

- Beyond Agent Alignment: Governing AI’s Collective Behavior

- Indiana Jones Franchise Future Revealed As Kathleen Kennedy Speaks Out

- Mark Ruffalo Finally Confirms Whether The Hulk Is In Avengers: Doomsday

- XDC PREDICTION. XDC cryptocurrency

- Stephen King Is Dominating Streaming, And It Won’t Be The Last Time In 2026

- Arc Raiders A First Foothold Quest Guide

- How To Use All New Amiibos In Animal Crossing: New Horizons 3.0

- How To Use Resetti’s Reset Service In Animal Crossing: New Horizons

2026-01-22 10:26