Author: Denis Avetisyan

A new framework integrates neural networks into the OPM Flow reservoir simulator, promising faster and more accurate modeling of complex near-well behavior.

This work demonstrates a data-driven hybrid approach for improved near-well modeling using machine learning and automatic differentiation within OPM Flow.

Accurate representation of wellbore dynamics remains a persistent challenge in reservoir simulation due to the computational expense of fine-scale modeling or the limitations of simplified analytical approaches. This is addressed in ‘A Machine-Learned Near-Well Model in OPM Flow’ through the novel integration of neural networks within the high-performance reservoir simulator OPM Flow, enabling a data-driven hybrid modeling framework. The presented method learns a refined well index directly from ensemble simulations, offering high-fidelity results at significantly reduced computational cost compared to traditional techniques. Could this approach unlock new possibilities for efficient and accurate modeling of complex subsurface flow phenomena and accelerate the development of robust reservoir management strategies?

The Inevitable Refinement: Modeling Near-Wellbore Complexity

Despite decades of refinement, conventional reservoir simulation techniques frequently encounter difficulties when modeling the intricate physics occurring immediately around wellbores. These challenges stem from the highly distorted flow patterns and complex interactions between fluids and the porous medium in this region, which are often oversimplified by the coarse grids used in full-field models. Consequently, predictions regarding well deliverability, breakthrough times, and ultimate recovery can deviate significantly from actual field performance. The inherent limitations in capturing phenomena like localized pressure drops, shear effects, and multi-phase flow behavior near the well necessitate more nuanced approaches to accurately represent this critical zone and minimize prediction errors, particularly as reservoir development progresses and wells mature.

The Peaceman well model, a cornerstone of reservoir simulation, offers an elegant analytical approximation for flow in the near-wellbore region: it relates the pressure of the grid block containing the well to the bottom-hole pressure through a well index computed from an equivalent well-block radius. However, its underlying assumptions limit its capacity to represent realistic reservoir conditions. Originally derived for steady-state, single-phase radial flow in a homogeneous medium, the model struggles with the complexities introduced by multi-phase flow (the simultaneous movement of oil, water, and gas) and by vertical heterogeneity, where permeability varies significantly with depth. These limitations necessitate modifications and often lead to inaccuracies when the model is applied to reservoirs with complex geological features or undergoing enhanced oil recovery processes. While it serves as a valuable starting point, the Peaceman model frequently requires refinement or replacement with more sophisticated methods to capture the interplay of forces governing fluid behavior near production or injection wells.
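
For reference, the textbook Peaceman relation for a vertical well in a Cartesian cell can be sketched as follows. This is a minimal illustration with several assumptions: consistent SI units, a fully penetrating vertical well, and the standard anisotropic equivalent-radius formula. It is not taken from the paper or from OPM Flow's source code, and the function names are hypothetical.

```python
import math


def peaceman_equivalent_radius(kx: float, ky: float, dx: float, dy: float) -> float:
    """Peaceman's equivalent well-block radius r0 for an anisotropic Cartesian cell."""
    ratio = ky / kx
    numerator = math.sqrt(math.sqrt(ratio) * dx**2 + math.sqrt(1.0 / ratio) * dy**2)
    denominator = ratio**0.25 + (1.0 / ratio) ** 0.25
    return 0.28 * numerator / denominator


def peaceman_well_index(kx: float, ky: float, dx: float, dy: float,
                        dz: float, rw: float, skin: float = 0.0) -> float:
    """Geometric well index WI = 2*pi*sqrt(kx*ky)*dz / (ln(r0/rw) + skin).

    Multiplying WI by the phase mobility and by (p_block - p_bhp) gives the
    steady-state connection rate that the model assumes.
    """
    r0 = peaceman_equivalent_radius(kx, ky, dx, dy)
    return 2.0 * math.pi * math.sqrt(kx * ky) * dz / (math.log(r0 / rw) + skin)


# Hypothetical isotropic example: 100 m x 100 m x 10 m cell, k = 1e-13 m^2, rw = 0.1 m.
r0 = peaceman_equivalent_radius(1e-13, 1e-13, 100.0, 100.0)
wi = peaceman_well_index(1e-13, 1e-13, 100.0, 100.0, 10.0, 0.1)
print(f"r0 = {r0:.2f} m, WI = {wi:.3e} m^3")
```

For an isotropic square cell the formula reduces to the familiar r0 ≈ 0.2 Δx. As a result, the well index depends only on cell geometry, permeability, skin, and well radius, and it cannot by itself represent multiphase or transient near-well effects.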

Accurately forecasting reservoir performance during dynamic processes demands modeling techniques that extend beyond traditional steady-state assumptions. The transient nature of fluid flow – particularly during operations like CO2 injection for enhanced oil recovery or geological sequestration – introduces complexities that conventional methods often fail to capture. These processes involve time-dependent pressure and saturation distributions, requiring simulations capable of resolving rapid changes near wellbores and within the heterogeneous reservoir rock. Advanced approaches, including time-stepping numerical schemes and detailed representations of multi-phase flow and capillary pressure effects, are essential for predicting breakthrough times, sweep efficiency, and overall storage capacity with sufficient reliability for informed decision-making and effective reservoir management.

A fundamental rethinking of near-wellbore flow modeling is becoming essential as conventional techniques fall short in predicting reservoir behavior. Existing approaches frequently simplify the complex interplay of fluid dynamics, rock properties, and wellbore geometry, hindering accurate forecasts, particularly during dynamic processes. This shift demands moving beyond purely numerical simulations – which can be computationally expensive and prone to grid orientation effects – towards hybrid methods that integrate analytical solutions with advanced numerical techniques. Such a paradigm prioritizes capturing the critical physics occurring within the immediate vicinity of the well, enabling more reliable predictions of production rates, breakthrough times, and the effectiveness of enhanced oil recovery or carbon sequestration initiatives. Ultimately, this evolution seeks to deliver reservoir models that are not only computationally efficient but also physically representative, leading to better-informed decisions and optimized resource management.

Learning the Subsurface: Machine-Learned Models for Near-Well Dynamics

Machine-learned near-well models advance conventional near-well modeling techniques by using Artificial Neural Networks (ANNs) to estimate how pressure and flow rate are related in the near-wellbore region; in this work, that relationship is captured through a learned well index. Traditional methods rely either on analytical solutions, which rest on simplifying assumptions, or on fine-scale numerical simulations, which can be computationally expensive and time-consuming, particularly for complex reservoir geometries or fluid properties. Machine-learned models exploit the ANN’s capacity for non-linear regression to learn how reservoir parameters, such as permeability, porosity, and fluid saturations, map to the resulting pressure and flow responses. This allows rapid prediction of well performance under varying conditions, emulating the behavior of detailed numerical simulations at a much lower computational cost.
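
The forward pass of a small fully connected network takes only a few lines of plain Python, which makes the regression idea concrete. This is an illustrative sketch only. The layer sizes, the tanh activation, and the hand-picked weights are assumptions rather than the architecture used in the paper. In practice the weights would come from training, not from hand entry.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float], Optional[Callable[[float], float]]]


def dense(x: Sequence[float], weights: List[List[float]], bias: List[float],
          activation: Optional[Callable[[float], float]] = None) -> List[float]:
    """One fully connected layer y = activation(W x + b); W holds one row per output."""
    out = []
    for row, b in zip(weights, bias):
        z = sum(w * xi for w, xi in zip(row, x)) + b
        out.append(activation(z) if activation is not None else z)
    return out


def mlp_predict(features: Sequence[float], layers: List[Layer]) -> List[float]:
    """Apply each (weights, bias, activation) layer in turn to one feature vector."""
    h = list(features)
    for weights, bias, activation in layers:
        h = dense(h, weights, bias, activation)
    return h


# Hypothetical 2-3-1 network: two scaled inputs (e.g. log-permeability and cell size),
# one tanh hidden layer, and a linear output standing in for a predicted well index.
layers: List[Layer] = [
    ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0], math.tanh),
    ([[1.0, 1.0, 1.0]], [0.5], None),
]
print(mlp_predict([0.2, -0.1], layers))
```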

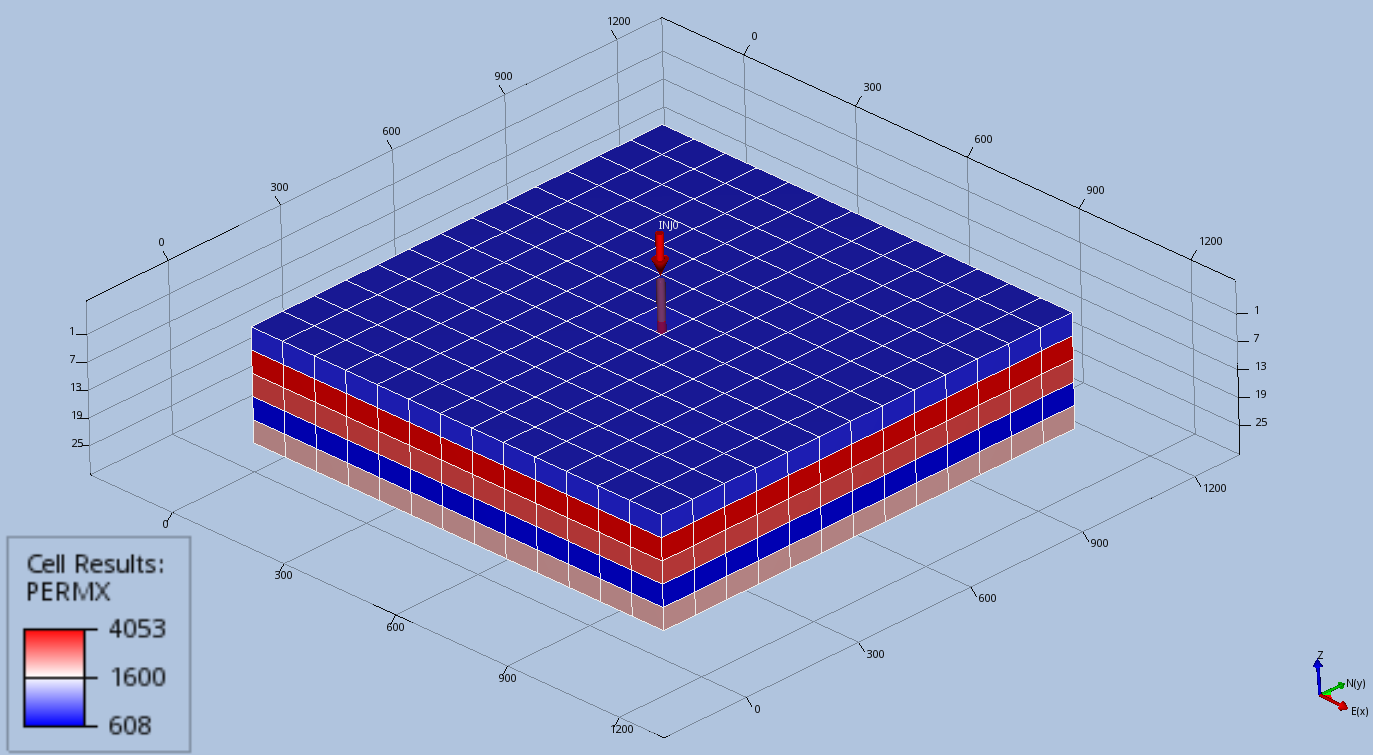

Machine-learned near-well models rely on ensemble simulations to create their training datasets. An ensemble consists of numerous reservoir flow simulations, each run with slightly different input parameters that represent the geological and operational uncertainty inherent in reservoir characterization. The varied parameters typically include permeability, porosity, and fluid properties. The resulting dataset spans a broad range of possible reservoir responses, enabling the neural network to learn complex relationships between input parameters and the resulting pressure and flow-rate behavior. This breadth of training data is crucial for the model’s ability to generalize to unseen reservoir conditions.
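
The source does not say how the ensemble parameters were sampled. One common choice is Latin hypercube sampling, sketched below in plain Python on that assumption. Each parameter range is split into equal strata, and exactly one value is drawn per stratum. The strata are then shuffled independently for each parameter. The parameter names and ranges are hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple


def latin_hypercube(n_samples: int, bounds: Sequence[Tuple[float, float]],
                    seed: Optional[int] = None) -> List[List[float]]:
    """Return n_samples points; each row holds one value per (low, high) bound."""
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        width = (high - low) / n_samples
        # One uniformly random point inside each of the n_samples strata.
        column = [low + (i + rng.random()) * width for i in range(n_samples)]
        rng.shuffle(column)  # decouple strata across parameters
        columns.append(column)
    return [list(row) for row in zip(*columns)]


# Hypothetical ranges: log10 permeability in m^2, and porosity.
bounds = [(-15.0, -12.0), (0.10, 0.30)]
ensemble = latin_hypercube(8, bounds, seed=42)
for log_k, phi in ensemble:
    # Each member would parameterize one simulation run of the ensemble.
    print(f"k = {10**log_k:.2e} m^2, porosity = {phi:.3f}")
```

Plain random sampling can leave gaps in a parameter range. This scheme guarantees that every part of each range is represented, which helps a small ensemble cover the conditions the network must later generalize to.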

Data upscaling is a critical preprocessing step in the creation of machine-learned near-well models, addressing the computational demands of reservoir simulation while preserving predictive accuracy. The process reduces the granularity of the reservoir representation by coarsening the fine-scale grid used in detailed simulations to a lower-resolution grid suitable for training the neural network. Upscaling techniques, ranging from simple averaging to flow-based upscaling, aggregate fine-scale properties such as permeability and porosity into representative values for each coarse grid block. Working with far fewer grid cells significantly decreases the computational cost of generating training data through ensemble simulations, so a comprehensive dataset can be built without compromising the model’s ability to predict near-well pressure and flow rates across a range of reservoir conditions.
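
The simplest, averaging-based end of upscaling can be illustrated with the classic bounds for a layered coarse block. This sketch is not the flow-based procedure the text also mentions, and not necessarily what the authors used. The layer values are hypothetical.

```python
import math
from typing import Sequence, Tuple


def layered_effective_permeability(perms: Sequence[float],
                                   thicknesses: Sequence[float]) -> Tuple[float, float]:
    """Effective permeability of a stack of layers merged into one coarse cell.

    Flow parallel to the layers sees a thickness-weighted arithmetic mean;
    flow across the layers (in series) sees a thickness-weighted harmonic mean.
    """
    total = sum(thicknesses)
    k_parallel = sum(k * h for k, h in zip(perms, thicknesses)) / total
    k_series = total / sum(h / k for k, h in zip(perms, thicknesses))
    return k_parallel, k_series


def geometric_mean(perms: Sequence[float]) -> float:
    """Estimate often used for randomly (rather than layer-wise) heterogeneous media."""
    return math.exp(sum(math.log(k) for k in perms) / len(perms))


# Two equally thick hypothetical layers, 100 mD and 1 mD.
kh, kv = layered_effective_permeability([100.0, 1.0], [1.0, 1.0])
print(kh, kv, geometric_mean([100.0, 1.0]))  # about 50.5, 1.98, and 10.0
```

The large gap between the two layered averages shows how much information a coarse cell loses when a strongly layered near-well region is collapsed into a single value. A coarse-scale near-well model has to compensate for that loss.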

Neural networks trained on data generated from ensemble simulations provide rapid predictions of near-well flow behavior because they approximate complex, non-linear relationships with a fixed and inexpensive sequence of arithmetic operations. This bypasses the computational expense of rerunning full physics-based simulations for each prediction request. The trained network learns the mapping between reservoir parameters, well controls, and the resulting pressure and flow-rate responses, so predictions take a fraction of the time required by conventional methods. Accuracy depends on the breadth of the training data. Because that data is drawn from numerous ensemble realizations, the network can generalize to unseen reservoir conditions and well configurations, provided they lie within the range the ensemble covers.
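
The training side can likewise be sketched without any framework. The example below fits a one-hidden-layer tanh network by full-batch gradient descent on the mean squared error, with backpropagation written out by hand. The toy target sin(2x) merely stands in for ensemble-derived training pairs. All sizes and the learning rate are illustrative assumptions. A real workflow would use Keras, as the paper does, rather than this loop.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def train_mlp(xs: Sequence[float], ys: Sequence[float], hidden: int = 8,
              lr: float = 0.05, epochs: int = 1000,
              seed: int = 0) -> Tuple[Callable[[float], float], List[float]]:
    """Fit y ~ f(x) with one tanh hidden layer; return the predictor and loss history."""
    rng = random.Random(seed)
    w1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [0.0] * hidden  # zero output weights: the first prediction is exactly b2 = 0
    b2 = 0.0
    n = len(xs)
    history = []
    for _ in range(epochs):
        gw1, gb1, gw2, gb2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
        loss = 0.0
        for x, y in zip(xs, ys):
            h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
            err = sum(w2[j] * h[j] for j in range(hidden)) + b2 - y
            loss += err * err / n
            g = 2.0 * err / n  # d(loss)/d(prediction) for this sample
            gb2 += g
            for j in range(hidden):
                gw2[j] += g * h[j]
                gz = g * w2[j] * (1.0 - h[j] * h[j])  # chain rule through tanh
                gw1[j] += gz * x
                gb1[j] += gz
        history.append(loss)  # loss before this epoch's parameter update
        for j in range(hidden):
            w1[j] -= lr * gw1[j]
            b1[j] -= lr * gb1[j]
            w2[j] -= lr * gw2[j]
        b2 -= lr * gb2

    def predict(x: float) -> float:
        return sum(w2[j] * math.tanh(w1[j] * x + b1[j]) for j in range(hidden)) + b2

    return predict, history


xs = [i / 10.0 for i in range(-10, 11)]
ys = [math.sin(2.0 * x) for x in xs]
predict, history = train_mlp(xs, ys)
print(f"initial MSE {history[0]:.3f}, final MSE {history[-1]:.4f}")
```

Once the network is trained, evaluating `predict` costs only a handful of arithmetic operations, which is where the speed-up over rerunning a simulation comes from. The fitted function is only trustworthy for inputs inside the range spanned by the training data.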

OPM Flow-NN: Bridging the Gap Between Physics and Prediction

The OPM Flow-NN framework enables neural networks trained in Keras to be incorporated directly into the OPM Flow reservoir simulator. The existing OPM Flow infrastructure calls and evaluates the Keras model as part of the simulation workflow. The framework passes the relevant reservoir properties to the network as inputs and feeds the network’s output back into the physics-based simulation. In this work that output is the refined well index; more generally, it can be another reservoir property, such as permeability or relative permeability. Replacing a computationally expensive fine-scale treatment of the near-well region with a fast network evaluation accelerates the simulation.
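
A predicted well index typically enters the simulator through the standard inflow relation used by Peaceman-type well models, q = WI · λ · (p_cell - p_bhp), where λ is the phase mobility. In the sketch below, `predict_wi` is a hypothetical stand-in for the Keras network. OPM Flow's actual well equations, multiphase bookkeeping, and sign conventions are not reproduced here.

```python
from typing import Callable, List, Sequence, Tuple

# (network input features, phase mobility kr/mu in 1/(Pa*s), cell pressure in Pa)
Connection = Tuple[Sequence[float], float, float]


def connection_rates(connections: Sequence[Connection], p_bhp: float,
                     predict_wi: Callable[[Sequence[float]], float]) -> List[float]:
    """Volumetric rate per well connection, positive for flow from cell into well."""
    rates = []
    for features, mobility, p_cell in connections:
        wi = predict_wi(features)  # learned replacement for the analytical well index
        rates.append(wi * mobility * (p_cell - p_bhp))
    return rates


def constant_wi(features: Sequence[float]) -> float:
    """Hypothetical stand-in for a trained network: always returns the same WI."""
    return 2.0e-12  # m^3


# One connection with water-like mobility and a 1 MPa drawdown.
rates = connection_rates([([0.0, 0.0], 1.0e3, 2.1e7)], p_bhp=2.0e7, predict_wi=constant_wi)
print(rates)
```

In this sketch only the well index depends on the network, and the inflow relation itself is left unchanged.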

The OPM Flow-NN framework uses Automatic Differentiation (AD) to compute derivatives of the neural network output with respect to the simulator’s variables and input parameters. This matters because OPM Flow solves its nonlinear flow equations with Newton’s method, which requires the Jacobian of every term in those equations, including the network’s contribution. The same derivatives also support optimization and sensitivity-analysis workflows. Unlike finite-difference methods, AD computes derivatives that are exact up to floating-point rounding. This removes the need for perturbation-based approximations and the extra model evaluations they require. AD thereby enables gradient-based optimization of reservoir parameters for history matching production data. It also enables sensitivity analysis that quantifies how input parameters affect simulation results.
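
Forward-mode AD, one standard way to obtain exact derivatives, can be sketched with dual numbers. Every value carries its derivative, and each arithmetic operation applies the chain rule. The tiny one-neuron network and its weights below are hypothetical. The sketch only shows why the derivative of a network output with respect to an input such as pressure comes out exact rather than approximated. It is not OPM Flow's AD implementation.

```python
import math
from dataclasses import dataclass
from typing import Union

Number = Union[float, int]


@dataclass(frozen=True)
class Dual:
    """A value together with its derivative with respect to one seeded input."""
    val: float
    der: float = 0.0

    @staticmethod
    def lift(x: Union["Dual", Number]) -> "Dual":
        return x if isinstance(x, Dual) else Dual(float(x))  # constants: zero derivative

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __mul__(self, other):
        o = Dual.lift(other)
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)  # product rule

    __rmul__ = __mul__


def tanh(x: Dual) -> Dual:
    t = math.tanh(x.val)
    return Dual(t, (1.0 - t * t) * x.der)  # d tanh(u) = (1 - tanh(u)^2) du


def tiny_net(p: Dual) -> Dual:
    """Hypothetical one-neuron network with fixed weights w1, b1, w2, b2."""
    w1, b1, w2, b2 = 0.5, 0.1, 2.0, -0.3
    return w2 * tanh(w1 * p + b1) + b2


out = tiny_net(Dual(0.3, 1.0))  # seed dp/dp = 1
print(out.val, out.der)
```

A single pass through the network yields both the output and its exact derivative, and that derivative is what a Newton solver needs for its Jacobian.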

This work integrated artificial neural networks (ANNs) into the OPM Flow reservoir simulator for near-well modeling. Comparative analysis showed that the ANN-based model achieves accuracy comparable to, and in some cases exceeding, that of traditional approaches such as the multiphase Peaceman model. The integrated framework was tested on a 3D scenario at coarse grid resolutions, where it retained its predictive power while reducing computational cost. These results support the use of ANNs as an alternative or complement to conventional near-well models in reservoir simulation.

The OPM Flow-NN framework allows reservoir engineers to utilize substantially coarser grid resolutions in their simulations without significant loss of accuracy. This is achieved by combining the established physics-based modeling capabilities of OPM Flow with the function approximation strengths of neural networks. Traditional reservoir simulations often require fine grids to resolve complex flow phenomena, leading to computationally expensive models. By training a neural network to emulate the behavior of the reservoir at a fine scale, and then integrating this network into OPM Flow, the framework effectively bypasses the need for a fine grid during the simulation run, reducing computational costs and enabling faster turnaround times while maintaining, or even improving, prediction accuracy.

Performance evaluations of the OPM Flow-NN framework demonstrate significant error reduction in 3D reservoir modeling scenarios. Specifically, testing on the coarsest grid configuration yielded up to a 32.4% decrease in error when compared to results obtained using the multiphase Peaceman model. This improvement indicates the framework’s ability to maintain accuracy while utilizing substantially reduced grid resolutions, thereby improving computational efficiency. The error reduction was quantified through comparison of predicted and analytical solutions for standard reservoir flow problems.
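
The source does not define the error metric behind the 32.4% figure. Assuming a relative L2 error against a reference solution, which is a common choice, the percentage reduction would be computed as below. The numbers in the usage lines are made up for illustration and are not results from the paper.

```python
import math
from typing import Sequence


def relative_l2_error(predicted: Sequence[float], reference: Sequence[float]) -> float:
    """||predicted - reference||_2 / ||reference||_2."""
    diff = math.sqrt(sum((p - r) ** 2 for p, r in zip(predicted, reference)))
    return diff / math.sqrt(sum(r * r for r in reference))


def error_reduction(baseline_error: float, new_error: float) -> float:
    """Fractional reduction of new_error relative to baseline_error."""
    return (baseline_error - new_error) / baseline_error


# Hypothetical bottom-hole pressures (bar): reference, Peaceman-type, and learned model.
reference = [200.0, 190.0, 185.0]
baseline = [210.0, 198.0, 191.0]
learned = [206.0, 195.0, 189.0]
e_base = relative_l2_error(baseline, reference)
e_new = relative_l2_error(learned, reference)
print(f"error reduction: {100 * error_reduction(e_base, e_new):.1f}%")
```

Under this reading, a 32.4% reduction means the learned model's error is about 0.676 times the Peaceman model's error on the same case.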

Beyond Prediction: Implications for Resource Management and Carbon Sequestration

Machine-learned models are increasingly valuable for optimizing oil and gas production because they provide a detailed understanding of fluid flow immediately surrounding wellbores, a region traditionally difficult to simulate accurately. These models move beyond conventional approaches by learning complex relationships between operational parameters and reservoir behavior, enabling proactive adjustments to production strategies. This capability can translate into improved recovery rates, reduced operational costs, and more efficient reservoir management overall. With models that capture near-wellbore dynamics more precisely, including effects such as multiphase flow and wellbore friction, operators can limit unwanted water or gas breakthrough and maximize the economic viability of each well. The technology ultimately supports data-driven decisions that improve both short-term production and long-term reservoir performance.

The accurate prediction of fluid flow within subsurface reservoirs is often hampered by vertical heterogeneity – the presence of varying rock types and properties in layers. This framework addresses this challenge by explicitly incorporating the effects of these vertical variations, resulting in more realistic simulations of how fluids move through complex geological formations. By accounting for differences in permeability and porosity across layers, the model captures nuanced flow patterns that traditional, homogenized approaches often miss. This capability is particularly crucial in reservoirs with significant stratification, such as fractured carbonates or thinly bedded sandstones, where vertical connectivity strongly influences overall production and recovery rates. Consequently, operators gain a more reliable understanding of reservoir behavior, enabling informed decisions regarding well placement, injection strategies, and overall field development plans.
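
The idealized case that simple well models assume shows why layering matters for a well. That case has steady single-phase flow, no crossflow between layers, the same drawdown in every layer, and the same near-well geometry term ln(r0/rw). Each layer's inflow is then proportional to its permeability-thickness product k·h. The sketch below covers that idealization only, with hypothetical layer values. Real layered reservoirs depart from it through crossflow and multiphase effects.

```python
from typing import List, Sequence


def layer_flow_fractions(perms: Sequence[float], thicknesses: Sequence[float]) -> List[float]:
    """Fraction of total well rate from each layer, proportional to k*h (idealized)."""
    kh = [k * h for k, h in zip(perms, thicknesses)]
    total = sum(kh)
    return [value / total for value in kh]


# Hypothetical three-layer column: a thin high-permeability streak between tighter layers.
fractions = layer_flow_fractions([10.0, 500.0, 20.0], [5.0, 1.0, 4.0])
print([round(f, 3) for f in fractions])
```

The streak is only a tenth of the total thickness, yet it dominates the inflow. Coarse cells that average such a streak away can therefore misrepresent near-well behavior.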

Evaluations on a three-dimensional reservoir model show a substantial improvement in predictive accuracy with the new framework. On the coarsest computational grid, it reduces the error by up to 32.4% compared with the multiphase Peaceman model. Achieving this lower error at reduced computational cost underscores the framework’s potential for simulations that are both efficient and accurate. This could streamline workflows and improve the reliability of predictions in complex geological settings.

The developed framework offers a crucial advancement for carbon capture and storage (CCS) technologies, particularly regarding the safe and effective implementation of CO2 injection for long-term sequestration. Accurate modeling of subsurface fluid flow is paramount to preventing leakage from storage reservoirs – a key concern in CCS viability – and this technology directly addresses that need. By providing more precise predictions of CO2 plume migration and distribution within complex geological formations, the framework enables optimized injection strategies, minimizing the risk of pressure build-up and potential fracturing of caprock formations. This enhanced predictability is vital for site selection, monitoring, and ultimately, ensuring the permanence of stored carbon, thereby bolstering the potential of CCS as a significant climate change mitigation strategy.

Continued development of this predictive framework centers on incorporating increasingly intricate physical processes, such as more complex multi-phase behavior and geochemical reactions, to simulate subsurface environments more realistically. A key advancement lies in integrating real-time data streams, including pressure, flow-rate, and geochemical sensors, to enable dynamic reservoir optimization. This capability would move beyond static predictions to a continuously updated, responsive model, allowing proactive adjustments to production or injection strategies. Ultimately, such an adaptive framework promises to enhance reservoir management, maximizing resource recovery and ensuring the long-term efficiency of projects like carbon sequestration by responding to changing conditions as they occur.

The pursuit of accurate reservoir simulation, as detailed in this work, inherently acknowledges the transient nature of any predictive model. Like all systems, these simulations are subject to the relentless march of time and the accumulation of discrepancies between prediction and reality. Igor Tamm observed, “The most important thing is to always move forward.” This sentiment resonates with the core idea of hybrid modeling presented here: continuous refinement of the system through data integration and machine learning. The framework does not seek a perfect, immutable solution. Instead it offers an adaptive approach (a versioning of the model, if you will) that ages gracefully with new data and improved understanding of near-well complexities. Each iteration, guided by data-driven insights, extends the model’s lifespan and predictive power, accepting that the arrow of time always points toward refactoring.

What Lies Ahead?

The integration of machine learning into reservoir simulation, as demonstrated by this work, merely shifts the locus of eventual decay. Uptime is extended, certainly, and the illusion of predictive power strengthened, but the fundamental entropy remains. The model’s accuracy, while improved in the near-well region, is still tethered to the quality and distribution of the training data, a static snapshot of a dynamic system. Future iterations will inevitably confront the limitations of these frozen moments.

A critical avenue for exploration resides not in refining the neural network architecture itself, but in acknowledging its inherent temporality. The system doesn’t simply become inaccurate; it unwinds from its initial calibration. Developing methods to quantify and incorporate model drift, to treat the network as a decaying asset rather than a fixed prediction engine, is paramount. Stability is an illusion cached by time, and latency is the tax every request must pay; these realities demand acknowledgement.

Ultimately, the challenge isn’t building a perfect model, but crafting systems that gracefully accommodate imperfection. The pursuit of increasingly complex algorithms will yield diminishing returns; a more fruitful path lies in embracing the transient nature of prediction and building resilience into the framework itself. The next generation of reservoir modeling will likely focus on adaptive systems-those capable of self-correction and recalibration-rather than static, albeit sophisticated, approximations.

Original article: https://arxiv.org/pdf/2601.11193.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-21 03:37