Author: Denis Avetisyan

A new approach leverages statistical guarantees to provide pilots with real-time risk assessments and preemptive warnings during flight testing.

This review details a conformal prediction framework for runtime safety monitoring in flight tests, offering statistically calibrated alerts for human-in-the-loop systems.

Despite increasing reliance on complex systems, ensuring runtime safety with uncertain parameters remains a critical challenge, particularly in high-risk applications like flight testing. The paper ‘Conformal Safety Monitoring for Flight Testing: A Case Study in Data-Driven Safety Learning’ introduces a data-driven approach that uses conformal prediction to provide statistically calibrated, preemptive risk assessments for pilots. By combining predictive modeling with rigorous statistical guarantees, the method reliably identifies unsafe scenarios and outperforms baseline approaches in proactively classifying risk. Could this framework be extended to enhance safety monitoring across other complex, human-in-the-loop systems facing dynamic uncertainties?

The Inevitable Uncertainty of Flight

The uncompromising demand for safety in aircraft operation necessitates meticulous verification, especially when executing complex maneuvers such as the Rudder Doublet. This maneuver – involving rapid rudder deflection followed by a return to neutral – intentionally pushes an aircraft to its dynamic limits, revealing potential instabilities and control challenges. Consequently, it serves as a crucial benchmark for evaluating the robustness of flight control systems and the aircraft’s overall handling qualities. Rigorous testing during and after the Rudder Doublet is therefore paramount, ensuring the aircraft remains within safe operational boundaries and can reliably recover from potentially hazardous deviations. The precision required to maintain stability throughout this maneuver highlights the critical interplay between aerodynamic forces, control surface effectiveness, and pilot input, all of which must be thoroughly understood and accounted for in the design and certification process.

Conventional aircraft safety verification methods often fall short when confronted with the complex realities of flight. These approaches typically rely on pre-defined scenarios and meticulously controlled simulations, but fail to adequately account for the inherent uncertainties present in aerodynamic forces, atmospheric conditions, and the unpredictable nature of operational environments. Factors like turbulence, gusts, and even subtle variations in aircraft manufacturing can introduce dynamic behaviors not fully captured in standard testing. This limitation creates a disconnect between the controlled laboratory setting and the chaotic world of actual flight, potentially leaving critical safety margins underestimated and increasing the risk of encountering unforeseen and potentially hazardous conditions during maneuvers like the Rudder Doublet. Consequently, a paradigm shift is needed – one that embraces robust methods capable of handling the dynamic uncertainties inherent in aerospace systems.

Aircraft safety increasingly depends on a proactive approach that moves beyond reactive measures to anticipate and mitigate potential hazards before they manifest. This necessitates continuous, real-time assessment of flight dynamics, leveraging advanced sensors and computational models to predict deviations from safe operating envelopes. Such systems don’t simply respond to emergencies; they forecast them, enabling preemptive interventions – like subtle control surface adjustments or automated flight path corrections – that steer the aircraft away from dangerous situations. The goal is to create a ‘safety net’ woven from predictive analytics and automated responses, effectively shifting the paradigm from damage control to hazard prevention, and ultimately ensuring a significantly higher margin of safety in increasingly complex flight scenarios.

Modeling the Trajectory of Risk

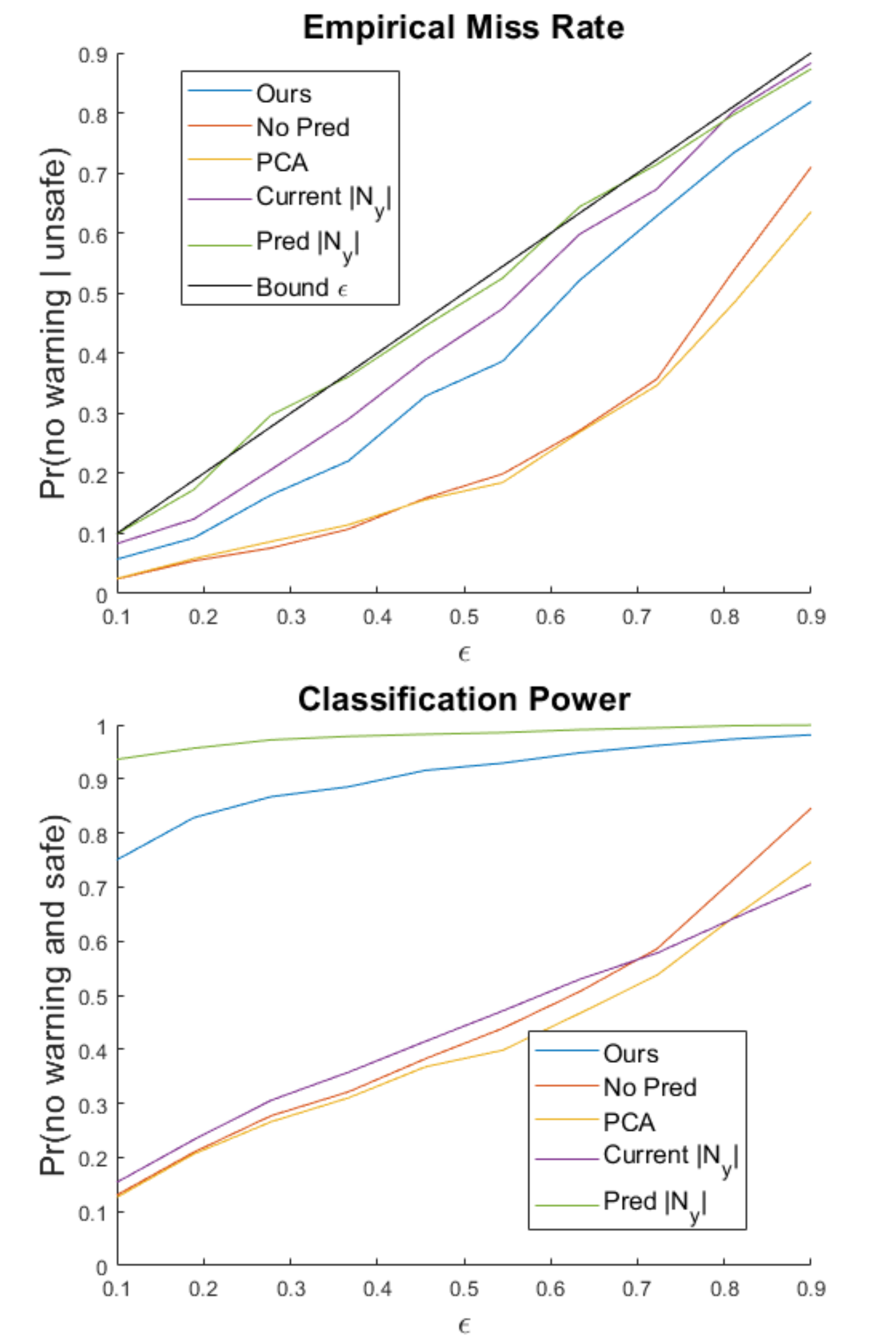

The predictive system utilizes a Linear Model to forecast future aircraft states based on current observations. To manage computational complexity and prevent overfitting given the high dimensionality of the aircraft’s state space, Principal Component Analysis (PCA) is employed for dimensionality reduction. PCA identifies the principal components – the directions of greatest variance in the data – allowing the Linear Model to be trained and executed on a significantly reduced set of features while retaining the most critical information for state prediction. This approach enables real-time forecasting of aircraft behavior by projecting the current state onto the reduced PCA space, applying the Linear Model, and then reconstructing the predicted state.
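The project, predict, reconstruct pipeline described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the paper's implementation: the function names, the synthetic 12-dimensional states, the choice of 3 components, and the affine one-step predictor are all hypothetical.

```python
import numpy as np

def fit_pca(states, n_components):
    """Return (mean, components); components has shape (n_components, n_features)."""
    mean = states.mean(axis=0)
    # SVD of the centered data: rows of vt are the principal directions.
    _, _, vt = np.linalg.svd(states - mean, full_matrices=False)
    return mean, vt[:n_components]

def fit_linear_predictor(z_now, z_next):
    """Least-squares fit of z_next ~ [z_now, 1] @ W (linear map plus bias)."""
    design = np.hstack([z_now, np.ones((len(z_now), 1))])
    weights, *_ = np.linalg.lstsq(design, z_next, rcond=None)
    return weights

def predict_next_state(state, mean, components, weights):
    z = (state - mean) @ components.T       # project onto the reduced space
    z_next = np.append(z, 1.0) @ weights    # one linear step in the reduced space
    return z_next @ components + mean       # reconstruct the full state

# Synthetic data (an assumption): 12-dim states that lie in a 3-dim subspace,
# with next state = 0.9 * current state.
rng = np.random.default_rng(0)
latent = rng.normal(size=(500, 3))
mixing = rng.normal(size=(3, 12))
states = latent @ mixing
next_states = 0.9 * states

mean, components = fit_pca(states, n_components=3)
weights = fit_linear_predictor((states - mean) @ components.T,
                               (next_states - mean) @ components.T)
pred = predict_next_state(states[0], mean, components, weights)
```

Because the synthetic states lie exactly in a 3-dimensional subspace, the reduced model recovers the next state up to numerical error; real flight data would leave a reconstruction residual that depends on how many components are kept.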

The safety classification of predicted aircraft states utilizes a Nearest Neighbor Classifier, a supervised learning algorithm that assigns a safety level based on the proximity of the predicted state to known safe and unsafe states within a training dataset. This classification is performed by calculating the distance – typically Euclidean distance – between the predicted state vector and the vectors representing previously observed flight conditions. The algorithm then assigns the predicted state the same classification as its $k$ nearest neighbors in the feature space, where $k$ is a user-defined parameter controlling the sensitivity of the classifier. The training dataset consists of labeled flight data representing both nominal and hazardous scenarios, enabling the classifier to learn the boundaries between safe and unsafe operating regions.
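A $k$-nearest-neighbor vote of this kind can be sketched as follows. The toy two-dimensional states, the label encoding (1 for unsafe, 0 for safe), and the function name `knn_classify` are assumptions made for illustration; the paper's features and its choice of $k$ are not given here.

```python
import numpy as np

def knn_classify(query, train_states, train_labels, k=3):
    """Majority vote over the k nearest training states (Euclidean distance).

    Labels are assumed to be 1 for unsafe and 0 for safe.
    """
    dists = np.linalg.norm(train_states - query, axis=1)
    nearest = np.argsort(dists)[:k]
    # Unsafe only if strictly more than half of the neighbors are unsafe.
    return int(2 * train_labels[nearest].sum() > k)

# Toy labeled data (hypothetical): a safe cluster near the origin
# and an unsafe cluster near (2, 2).
train_states = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
                         [2.0, 2.0], [2.1, 1.9], [1.9, 2.2]])
train_labels = np.array([0, 0, 0, 1, 1, 1])

label_near_safe = knn_classify(np.array([0.1, 0.1]), train_states, train_labels)
label_near_unsafe = knn_classify(np.array([2.0, 2.1]), train_states, train_labels)
```

A smaller $k$ makes the vote more sensitive to individual training points, while a larger $k$ smooths the boundary between the safe and unsafe regions.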

Simulated flight rollouts are integral to the iterative refinement and calibration of the predictive safety system. These rollouts generate extensive datasets of aircraft states under varied conditions, allowing for quantitative assessment of the prediction model’s accuracy and the safety classification algorithm’s performance. Specifically, discrepancies between predicted and actual aircraft trajectories during rollouts are used to adjust the parameters of both the Linear Model – informed by PCA – and the Nearest Neighbor Classifier. This process involves systematically varying rollout parameters, such as initial conditions and environmental disturbances, to comprehensively map the system’s response and identify areas for improvement in predictive capability and safety threshold determination. Data from these simulations also facilitates the calculation of key performance indicators, including false positive and false negative rates, which are used to optimize the system for reliable operation.

Quantifying the Inevitable: A Statistical Boundary

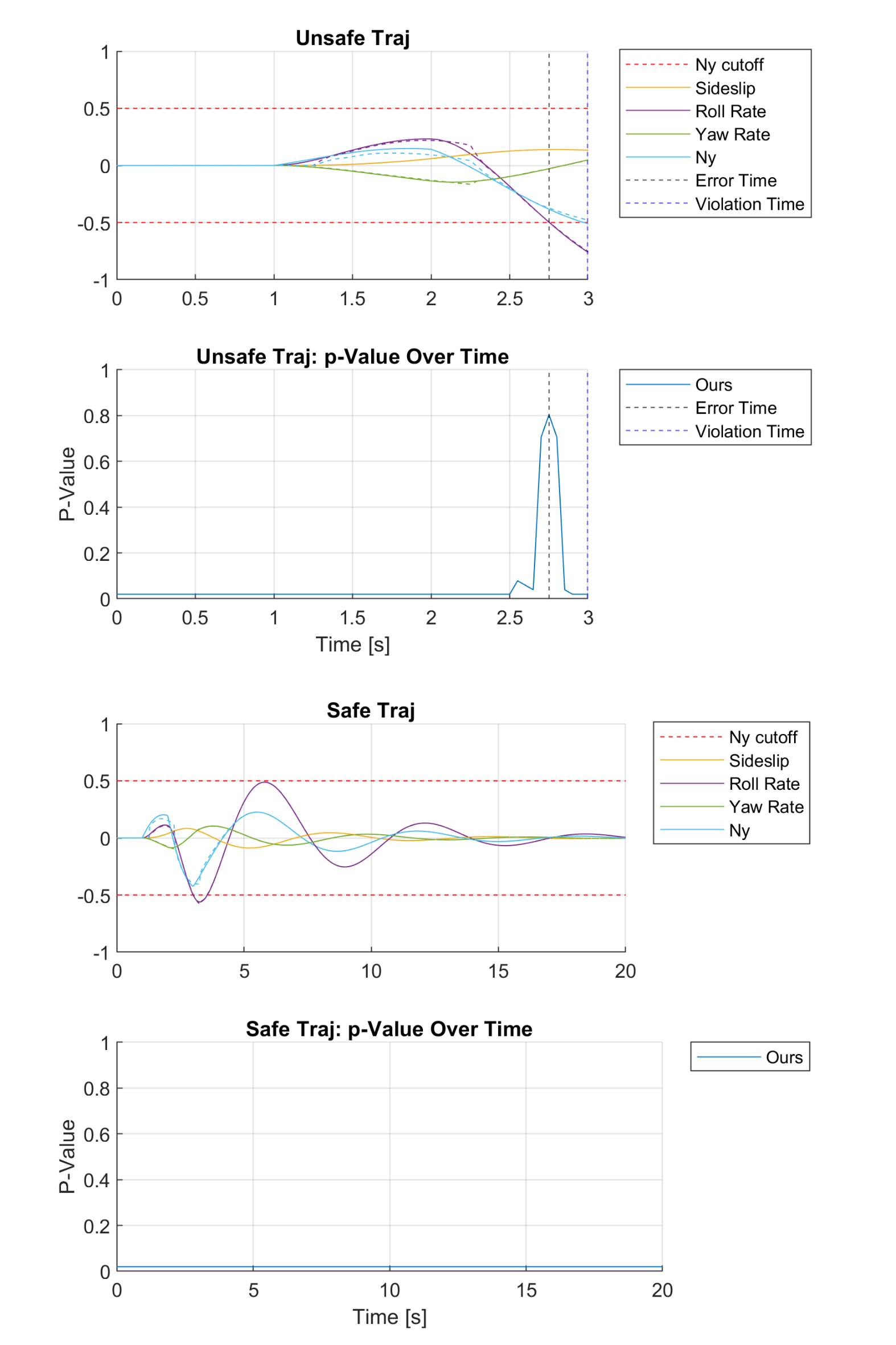

Conformal Prediction is used to produce a $p$-value, a quantitative metric for assessing the risk of a safety violation. This $p$-value is a scalar between 0 and 1 that scores how plausible it is that a given prediction will result in an undesirable outcome. The methodology calculates this value by evaluating the compatibility of a new data point with a calibration set, effectively measuring how ‘unusual’ the new input is relative to previously observed data. A higher $p$-value indicates a higher risk, while a value closer to 0 suggests greater confidence in the prediction’s safety. This allows for a principled approach to risk quantification, providing a statistically grounded measure of uncertainty associated with each prediction.
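The compatibility check against a calibration set can be illustrated with the standard conformal $p$-value formula: the fraction of calibration scores at least as large as the new score, with a +1 correction in numerator and denominator. This is a generic sketch. The scores below are made up, and which class the calibration set represents (and therefore how the value maps to risk) is left open as an assumption rather than taken from the paper.

```python
import numpy as np

def conformal_p_value(test_score, calibration_scores):
    """Standard conformal p-value: (1 + #{cal scores >= test score}) / (n + 1)."""
    calibration_scores = np.asarray(calibration_scores, dtype=float)
    n_at_least = int(np.sum(calibration_scores >= test_score))
    return (1 + n_at_least) / (len(calibration_scores) + 1)

# Hypothetical calibration scores (e.g. distances produced by a classifier).
calibration_scores = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9])

# Six calibration scores are >= 0.35, so the p-value is (1 + 6) / 10.
p_typical = conformal_p_value(0.35, calibration_scores)

# No calibration score is >= 1.5, so the p-value is (1 + 0) / 10.
p_unusual = conformal_p_value(1.5, calibration_scores)
```

The +1 terms treat the new point as one more member of the calibration set, so the value can never be exactly 0, and the guarantee holds for finite calibration sets when the data are exchangeable.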

Conformal Calibration enhances the reliability of $p$-value calculations within a Nearest Neighbor Classifier framework by providing statistical guarantees on its error rate. This process involves adjusting the prediction set construction to ensure that, over a test dataset, the empirical frequency of missed safety violations does not exceed a user-defined error rate, denoted as $\epsilon$. Specifically, the calibration procedure modifies the neighborhood size used in the Nearest Neighbor algorithm, effectively controlling the coverage probability of the prediction sets. This ensures that the $p$-value used to assess the risk of a safety violation is statistically valid and reflects the true risk associated with a given prediction.

Statistical calibration is essential for ensuring the reliability of risk assessments generated by conformal prediction. This process aligns predicted risk levels – represented by the $p$-value – with their corresponding empirical probabilities of error. Specifically, we demonstrate calibration through the miss rate, which quantifies the proportion of instances incorrectly classified as safe. Our results show that the empirically observed miss rate consistently remains below the user-defined theoretical upper bound, denoted as $\epsilon$. This guarantees that the system’s risk predictions are statistically valid and provide a conservative estimate of potential safety violations.
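Checking the empirical miss rate against $\epsilon$ is a simple computation once ground-truth labels and alert decisions are available. The sketch below assumes boolean arrays `is_unsafe` and `alerted` and an illustrative $\epsilon = 0.1$; the data are invented for the example and are not results from the paper.

```python
import numpy as np

def miss_rate(is_unsafe, alerted):
    """Fraction of truly unsafe cases for which no alert was raised."""
    is_unsafe = np.asarray(is_unsafe, dtype=bool)
    alerted = np.asarray(alerted, dtype=bool)
    n_unsafe = int(is_unsafe.sum())
    if n_unsafe == 0:
        return 0.0  # no unsafe cases, so nothing could be missed
    return float((is_unsafe & ~alerted).sum() / n_unsafe)

epsilon = 0.1  # hypothetical user-defined bound on the miss rate

# Invented evaluation data: 20 unsafe cases (one missed), 5 safe cases
# (one false alarm).
is_unsafe = np.array([True] * 20 + [False] * 5)
alerted = np.array([True] * 19 + [False] + [True] + [False] * 4)

observed = miss_rate(is_unsafe, alerted)
within_bound = observed <= epsilon
```

Note that the false alarm on a safe case does not affect the miss rate; it would count against a separate false-positive rate.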

Mapping the Implicit Boundaries of Flight

The system generates a $p$-value – a probabilistic measure of predicted pilot deviation from safe operational boundaries – which directly informs data-driven safety alerts. This isn’t merely a warning system; it’s a predictive tool translating complex flight data into actionable intelligence for both pilots and autonomous flight control systems. A higher $p$-value indicates an increased probability of a safety compromise, triggering an immediate alert allowing for corrective action. By quantifying risk in this manner, the system moves beyond reactive safety measures, offering a proactive layer of defense against potential incidents and fostering a more reliable flight experience. The alerts are designed to be concise and informative, providing pilots or automated systems with the specific information needed to address the identified risk effectively and efficiently.

The system demonstrably reduces the likelihood of undetected critical safety violations – known as the Miss Rate – through precise risk quantification. This is achieved by proactively identifying potential hazards and issuing alerts 0.25 seconds before a projected breach of safety parameters. This short, yet crucial, lead time allows for pilot intervention or autonomous system correction, effectively mitigating risk before it escalates. The system doesn’t simply flag anomalies; it assesses the probability of a genuine safety issue, minimizing false positives and ensuring that alerts are genuinely actionable. By focusing on probabilistic forecasting rather than reactive detection, the technology represents a significant advancement in preventative flight safety measures, offering a higher margin of safety than traditional systems.
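The lead time of an alert can be measured directly from a logged rollout. The following sketch assumes time-ordered samples, a per-sample alert flag, and a known violation time; the function name and the sample values are hypothetical and chosen only to reproduce a 0.25-second lead.

```python
def alert_lead_time(times, alert_flags, violation_time):
    """Seconds between the first alert and the violation.

    Assumes `times` is sorted in increasing order. Returns None if no alert
    is raised at or before the violation.
    """
    for t, flagged in zip(times, alert_flags):
        if flagged and t <= violation_time:
            return violation_time - t
    return None

# Hypothetical rollout sampled every 0.25 s, with a violation at t = 1.0 s.
times = [0.00, 0.25, 0.50, 0.75, 1.00]
alert_flags = [False, False, False, True, True]

lead = alert_lead_time(times, alert_flags, violation_time=1.00)
no_alert = alert_lead_time(times, [False] * 5, violation_time=1.00)
```

Here the first alert fires at 0.75 s, giving a lead of 0.25 s, while a rollout with no alert before the violation returns `None` and would count as a miss.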

The system’s analytical capabilities reveal more than just immediate flight risks; it systematically maps the often-unspoken boundaries of safe aircraft operation. By continuously analyzing flight data, the approach identifies implicit constraints – limitations not explicitly defined in manuals or regulations but consistently observed in successful flights. This extends safety analysis beyond reactive alerts to a proactive understanding of the aircraft’s true operating envelope, highlighting subtle interdependencies between control inputs and environmental factors. Consequently, the insights gained facilitate a more nuanced assessment of risk, informing improved pilot training, refined autopilot algorithms, and ultimately, the development of aircraft designed to operate closer to – yet safely within – their inherent performance limits.

The pursuit of absolute certainty in complex systems is a phantom. This work, detailing conformal safety monitoring for flight testing, acknowledges this inherent unpredictability. Rather than striving for flawless prediction, it embraces statistical calibration, offering pilots risk assessments grounded in observed data. As Vinton Cerf observed, “There are no best practices – only survivors.” This sentiment perfectly encapsulates the approach detailed within; the system doesn’t prevent failure, it prepares for it, offering a measured response to the inevitable chaos of flight. The conformal prediction framework, providing guarantees even with uncertain dynamics, represents an architecture designed not to postpone chaos indefinitely, but to navigate its arrival with resilience.

What’s Next?

The pursuit of ‘safety’ via statistical guarantee is, predictably, a refinement of the problem, not its resolution. This work, by formalizing risk assessment during flight testing, does not eliminate uncertainty – it merely relocates the boundaries of acceptable failure. The system, as presented, operates under the assumption that historical data sufficiently captures the relevant state space. This is, of course, an optimistic proposition. The true dynamics of complex systems are rarely, if ever, fully represented in training sets; unforeseen conditions will always emerge.

Future iterations will inevitably grapple with the limitations of predictive models themselves. Conformal prediction offers calibration, but calibration is not omniscience. A guarantee is merely a contract with probability. The next frontier isn’t necessarily improving prediction accuracy, but developing methods to gracefully degrade performance – to acknowledge that stability is merely an illusion that caches well.

The integration of human-in-the-loop systems demands further attention. The alerts generated by such a system are not neutral signals, but interventions in a complex socio-technical system. Understanding how pilots interpret and react to probabilistic risk assessments – and the potential for automation bias or alert fatigue – is crucial. Chaos isn’t failure – it’s nature’s syntax. The challenge lies in designing systems that can not only predict disruption, but adapt to it.

Original article: https://arxiv.org/pdf/2511.20811.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-12-01 01:53